Cache Memory Access Patterns in the GPU Architecture

Figures and tables

![Figure 3.1: Block diagram of the AMD 7970 architecture [14]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/29.612.112.533.75.191/figure-block-diagram-amd-architecture.webp)

![Figure 3.2: Block diagram of the Compute Unit of the AMD 7970 architecture [14]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/30.612.200.451.79.272/figure-block-diagram-compute-unit-amd-architecture.webp)

![Table 3.3: AMD Southern Islands emulated GPU configuration on Multi2Sim [10, 14]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/31.612.156.491.107.436/table-amd-southern-islands-emulated-gpu-conguration-multi.webp)

![Figure 3.3: Block diagram of the NVIDIA Kepler architecture [1]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/32.612.117.535.72.222/figure-block-diagram-nvidia-kepler-architecture.webp)

Related documents

To master these moments, use the IDEA cycle: identify the mobile moments and context; design the mobile interaction; engineer your platforms, processes, and people for

To verify if train paths of a timetable, developed on a macroscopic or mesoscopic railway infrastructure, can actually be arranged without conflicts within the block occupancy

This is based on primary data collected through a case study carried out in East Java, comprising 14 interviews and an FGD with stakeholders related to sugar-

En esta fase se debe analizar y decidir cuáles serán los medios utilizados para informar a los probables candidatos sobre En esta fase se debe analizar y decidir cuáles serán los

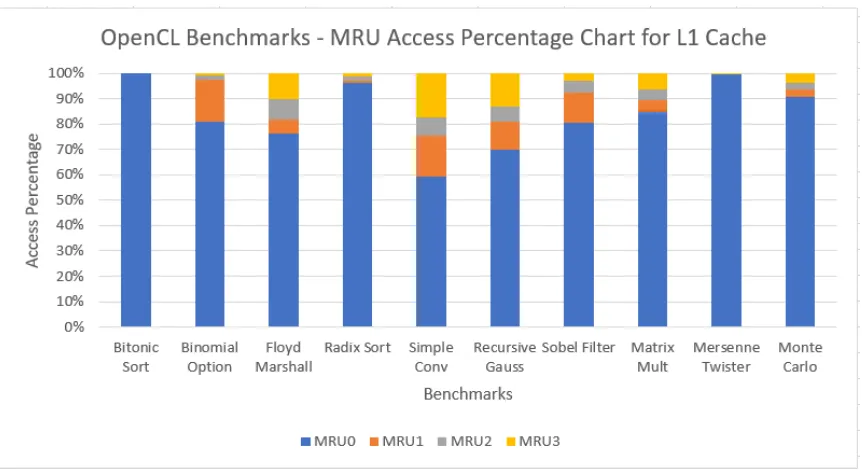

If the classifier indicates that the cache line is L2-Private, then the line is sent to the requesting core’s L2 cache (L2 replica location) and the L1 mode provided by the

South Asian Clinical Toxicology Research Collaboration, Faculty of Medicine, University of Peradeniya, Peradeniya, Sri Lanka.. Department of Medicine, Faculty of

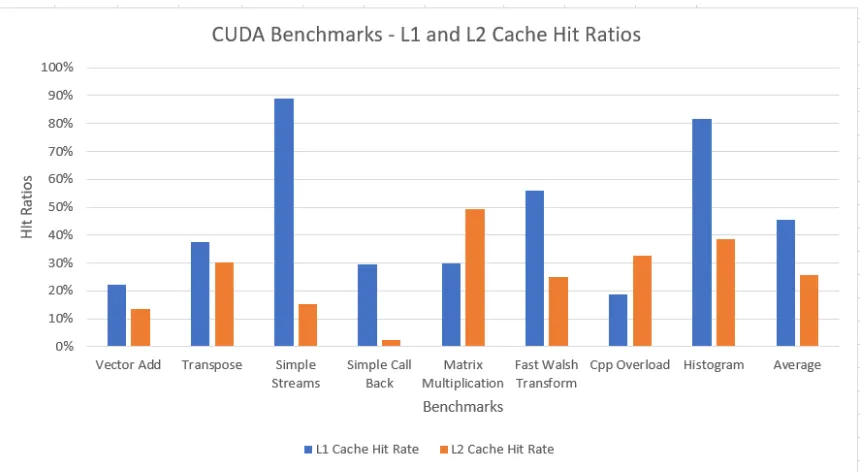

Once the data set reaches the size of the L2 cache and the program starts to miss the cache and go to main memory, average access times get much worse. • When all the data fits in

En este trabajo hablamos de patronímico como el recurso denominativo que incorpora total o parcialmente, directa o indirectamente (siglas o acrónimos), el nombre

![Figure 3.4: Kepler GPU Memory Architecture [1]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/33.612.222.424.76.234/figure-kepler-gpu-memory-architecture.webp)

![Figure 3.5: NVIDIA Kepler Streaming Multiprocessor (SM) architecture [1]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/34.612.203.443.74.325/figure-nvidia-kepler-steaming-processor-sm-architecture.webp)

![Table 3.4: NVIDIA Kepler emulated GPU configuration on Multi2Sim [1, 14]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/35.612.160.489.107.373/table-nvidia-kepler-emulated-gpu-conguration-multi-sim.webp)

![Figure 4.1: Cache Replacement Policies [15]](https://thumb-us.123doks.com/thumbv2/123dok_us/32513.2575/42.612.131.523.71.383/figure-cache-replacement-policies.webp)