Feature Selection for Fluency Ranking

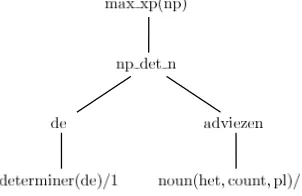

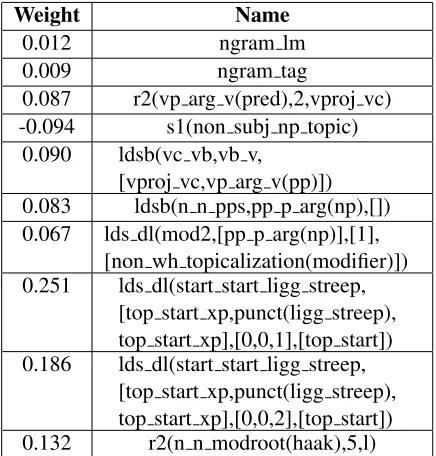

Related documents

In this paper, we introduce a parallel version of the well-known AdaBoost algorithm to speed up and size up feature selection for binary classification tasks using large

Chapter 3 proposes an unsupervised feature selection method for high-dimensional data (called AUFS). To overcome traditional unsupervised feature selection methods, we proposed

One can observe that the proposed method achieved higher validation accuracy (0.81) than all other compared feature selection approaches, while using the lowest number of features

Finally, we compared the performance of HMC-ReliefF with a feature ranking algorithm based on binary relevance – a method typically used for solving the task of feature ranking

From the experimental results, it can be seen that, when compared with fuzzy entropy based feature selection and the raw data sets, the proposed method can select feature subset

Twitter, Tweet Ranking, Weighted Borda-Count, Content-based Features, Reputation, Baldwin method, Greedy Feature

In what follows, various existing feature selection methods will be described to be compared (Compared Feature Selection Methods section), our proposed improved method

Filter feature selection methods present different ranking algorithms; therefore, we propose an EMFFS method that combines the output of IG, gain ratio, chi-squared and ReliefF to