Document No. 2008 Jōzai 0142

March 2009

Survey Report on Information Security in Europe

Study on EU Information Security Situation

Survey on the Current Status of Research and Development (R&D) of Cryptographic Technology in the European Commission


Summary of the R&D Support

Target of the R&D Support

Details and Results of the R&D Support

Future Plan of the R&D Support

Relationship between the European Commission, JIWG and JHAS

Final Report

February 10th, 2009

Customer: IT Security Center IPA/ISEC JAPAN

Contact Persons: Akira Yamada, Wolfgang Schneider

Status of Document

This document is the Final Report of the “Survey on the Current Status of Research and Development (R&D) of Cryptographic Technology in the European Commission”.

Editor:

Dr. Dirk Scheuermann, dirk.scheuermann@sit.fraunhofer.de

Further contributors:

Thomas Schroeder, thomas.schroeder@sit.fraunhofer.de

Bartol Filipovic, bartol.filipovic@sit.fraunhofer.de

Ulrich Waldmann, ulrich.waldmann@sit.fraunhofer.de

Management Summary

This report presents the results of the “Survey on the Current Status of Research and Development (R&D) of Cryptographic Technology in the European Commission” performed by SIT on behalf of IPA. The report gives an overview of the current status of the R&D support, its targets, details, results and future plans, and of the relationship between the European Commission, JIWG and JHAS.

Regarding general R&D support in Europe, the EU Framework Programmes FP6 and FP7 clearly play a major role. However, it is difficult to determine explicitly the budget available for cryptography research. EU projects dealing with different aspects of cryptography fit into many different calls and their corresponding objectives, but no budget is earmarked for this specific topic. Furthermore, many EU projects cover quite different key topics, with cryptography being only one of the topics addressed within the project.

The most important research targets and results in Europe are clearly dominated by the Network of Excellence (NoE) ECRYPT. New research targets and implications for future R&D support are indicated by the recently started follow-up project ECRYPT II. Despite its great academic significance, ECRYPT accounts for only a very small percentage of the total research budget of the participating partners, owing to its nature as a NoE with many participating partners.

Additional EU projects dealing specifically with the mathematical aspects of cryptography are very rare. However, there are a number of projects covering other very important, non-mathematical aspects, such as the secure and efficient implementation of existing cryptography. The example of the ICE project, headed by a national research institute in Turkey, shows that there is also a strong interest in funding the promotion of cryptography experts who were previously not well known in the European research community.

Fundamental research tasks regarding standardized secure crypto devices are distributed among different institutions as a result of the general organizational framework. An analysis of the work performed within ECRYPT shows that many research targets are defined by specific mathematical problems related to certain cryptographic schemes and their security requirements. Many open problems exist in the area of provable security, in particular regarding the hardness of the underlying mathematical problems.

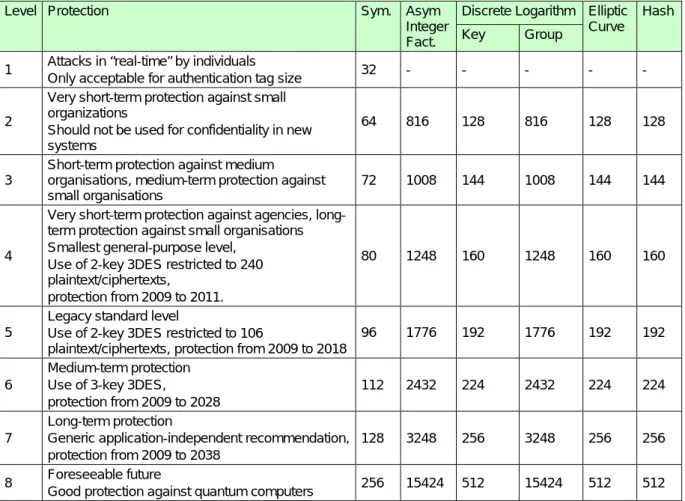

Regarding the recommended lengths of asymmetric keys that are equivalent to given lengths of symmetric keys, the R&D results of different projects have differed over the years. Surprisingly, the recommended asymmetric key lengths corresponding to a given symmetric key length have not grown continuously. Within ECRYPT, these different recommendations have been synthesized into one unified recommendation table. K.U. Leuven observes increasing interest in these recommendations and therefore plans a new recommendation table as a result of ECRYPT II.

A broad and interesting research field is provided by quantum cryptography and by the development of cryptographic methods that remain secure once quantum computers become available. Current R&D results show that even more aspects have to be considered in this area when designing secure solutions: fundamental algorithms that appear to provide a secure solution at first sight still show some weaknesses against certain attack strategies exploiting physical effects.

Little information has been published about the activities of the working groups JIWG and JHAS and their relation to the EU. Their work clearly concentrates on the CC evaluation of smart cards and smart card terminals. They are therefore likely to influence the smart card industry and to set new research targets regarding the use of smart cards for cryptography.

Table of Contents

Management Summary

Table of Contents

List of Tables

Abbreviations and Acronyms

1 Introduction

2 Terms and Definitions

2.1 Block Cipher

2.2 Boolean Satisfiability

2.3 Forking Lemma

2.4 Homomorphic Encryption

2.5 Index Calculus

2.6 Indistinguishability of Encryptions

2.7 Lattice Reduction

2.8 Obfuscation

2.9 Quantum Cryptography

2.10 Representation Theory

2.11 Standard Model

2.12 Stream Cipher

2.13 Torus Based Cryptography

2.14 Universal Composability

3 Summary of General R&D Support

3.1 Organizational Framework

3.2 Budget Scales

4 Targets of Cryptographic Research

4.1 Fundamental Research Targets of ECRYPT

4.2 Mathematical Problems

4.3 Digital Signature Schemes

4.4 Public Key Encryption Schemes

4.5 Open Problems in Provable Security

4.6 Additional Requirements

4.7 FP7 Projects with Non-algorithmic Research Targets

4.8 National Projects

5 Results of R&D Activities and Plans for Future Activities

5.1 Fundamental Results of ECRYPT

5.2 eSTREAM

5.3 Recommended Key Sizes

5.4 Application Scenarios

5.5 Hardness of the Main Computational Problems Used in Cryptography

5.6 Results on Quantum Computing and Quantum Cryptography

5.7 Future Key Topics Identified by K.U. Leuven

6 Relationships to JIWG and JHAS

6.2 JHAS

List of Tables

Abbreviations and Acronyms

AZTEC Asymmetric Techniques Virtual Lab

BSI Bundesamt für Sicherheit in der Informationstechnik, Federal Office for Information Security, GER

CACE Computer Aided Cryptography Engineering

CC Common Criteria

CDH Computational Diffie-Hellman

CESG Communications Electronics Security Group, UK

CORDIS Community Research and Development Information Service, EU

DCSSI Direction Centrale de la Sécurité des Systèmes d'Information, Central Directorate for Information Systems Security, FRA

DDH Decisional Diffie-Hellman

ECM Elliptic Curve Method

ERA European Research Area

ERC European Research Council

EU European Union

FPEG Fraud Prevention Expert Group

FP6 6th Framework Program, EU

FP7 7th Framework Program, EU

GNFS General Number Field Sieve

IAP Interuniversity Attraction Pole

ICT Information and Communication Technologies

ISCI International Security Certification Initiative

JHAS JIL Hardware Related Attack Subgroup

JIL Joint Interpretation Library

JIWG Joint Interpretation Working Group

LLL Lenstra, Lenstra and Lovász

MAYA Multi-party and Asymmetric Algorithms Virtual Lab

NIS Network and Information Security, EU

NTRU N-th degree truncated polynomial ring

OAEP Optimal Asymmetric Encryption Padding

PSSWG Product and System Security Working Group

QKD Quantum Key Distribution

SNFS Special Number Field Sieve

STVL Symmetric Techniques Virtual Lab

TPM Trusted Platform Module

TWIRL The Weizmann Institute Relation Locator

UC Universal Composability

VAMPIRE Virtual Applications and Implementations Research Lab

WAVILA Watermarking Virtual Lab

1 Introduction

This report presents a survey of the current status of R&D activities regarding cryptographic technology in the EU region. As far as possible, the survey examines current research targets, results already achieved, and R&D activities planned for the future.

The current status of research and development (R&D) of cryptographic technology in the EU region, and the further initiatives it requires, will be analyzed according to the following aspects:

General organizational framework in Europe

General R&D targets and R&D results of specific EU projects

R&D targets and R&D results related to specific, well-known mathematical problems.

This report focuses largely on the work of the Network of Excellence ECRYPT and the follow-up project ECRYPT II. This also includes the consideration of the eSTREAM project initiated within ECRYPT. Further projects will be considered for additional, non-mathematical aspects that are also strongly relevant for a secure and efficient use of cryptography.

The rest of the report is organized as follows: Chapter 2 states some general terms and definitions in the area of cryptography that are relevant for the later activity descriptions. Chapter 3 gives some information about the general situation of R&D support in Europe. The analysis of the current targets of cryptographic research is then given in Chapter 4, with one important focus on the open problems of provable security. An overview of R&D results is presented in Chapter 5, with an emphasis on recent insights into the hardness of computational problems. Finally, Chapter 6 describes some relations between the EU and the JIWG and JHAS working groups, as far as information about these groups is publicly available.

2 Terms and Definitions

2.1 Block Cipher

A block cipher algorithm is an encryption algorithm with the following properties: the string to be encrypted is partitioned into blocks of a fixed size, and a fixed encryption key is applied to each block. A block cipher algorithm is uniquely defined by a calculation procedure for one single block, which is then repeated for each block to be encrypted.
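As an illustration of this definition, the following Python sketch partitions a message into fixed-size blocks and applies the same keyed per-block procedure to each block (this processing pattern is known as ECB mode). The per-block function `toy_encrypt_block` is a hypothetical, deliberately insecure stand-in for a real cipher such as AES; the 8-byte block size and the PKCS#7-style padding are likewise assumptions made for illustration only.

```python
BLOCK_SIZE = 8  # bytes; hypothetical toy size (AES, for comparison, uses 16-byte blocks)

def toy_encrypt_block(block: bytes, key: bytes) -> bytes:
    """Toy, insecure per-block procedure: XOR with the key, then rotate left by one byte."""
    x = bytes(b ^ k for b, k in zip(block, key))
    return x[1:] + x[:1]

def toy_decrypt_block(block: bytes, key: bytes) -> bytes:
    """Inverse of toy_encrypt_block: rotate right by one byte, then XOR with the key."""
    x = block[-1:] + block[:-1]
    return bytes(b ^ k for b, k in zip(x, key))

def pad(data: bytes) -> bytes:
    """PKCS#7-style padding so that the length is a multiple of BLOCK_SIZE."""
    n = BLOCK_SIZE - len(data) % BLOCK_SIZE
    return data + bytes([n]) * n

def unpad(data: bytes) -> bytes:
    return data[:-data[-1]]

def encrypt(data: bytes, key: bytes) -> bytes:
    assert len(key) == BLOCK_SIZE, "toy cipher expects a key of exactly one block"
    padded = pad(data)
    # The same procedure and the same key are applied to every block independently.
    return b"".join(toy_encrypt_block(padded[i:i + BLOCK_SIZE], key)
                    for i in range(0, len(padded), BLOCK_SIZE))

def decrypt(data: bytes, key: bytes) -> bytes:
    return unpad(b"".join(toy_decrypt_block(data[i:i + BLOCK_SIZE], key)
                          for i in range(0, len(data), BLOCK_SIZE)))

key = b"K3yK3yK3"
ct = encrypt(b"SAMEBLK!SAMEBLK!tail", key)
print(ct[0:8] == ct[8:16])   # True: identical plaintext blocks give identical cipher text blocks
print(decrypt(ct, key))      # b'SAMEBLK!SAMEBLK!tail'
```

Because every block is processed independently with the same key, identical plaintext blocks yield identical cipher text blocks; practical deployments therefore usually chain blocks or add a nonce (e.g., CBC or CTR mode).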

2.2 Boolean Satisfiability

The Boolean satisfiability problem (SAT) is a decision problem whose instance is a Boolean expression written using only AND, OR, NOT, variables, and parentheses. The question is: given the expression, is there some assignment of TRUE and FALSE values to the variables that makes the entire expression true?
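The question can be answered naively by trying all assignments, as in the Python sketch below. Representing the expression as a Python function of an assignment is our own choice for illustration. The exhaustive search takes 2^n steps for n variables; since SAT is NP-complete, no polynomial-time algorithm for it is known.

```python
from itertools import product
from typing import Callable, Dict, Optional, Sequence

Assignment = Dict[str, bool]

def brute_force_sat(variables: Sequence[str],
                    formula: Callable[[Assignment], bool]) -> Optional[Assignment]:
    """Return a satisfying assignment, or None if the formula is unsatisfiable.

    Tries all 2^n assignments, so the running time grows exponentially with n.
    """
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if formula(assignment):
            return assignment
    return None

# (x OR y) AND (NOT x OR z) AND (NOT z)
example = lambda a: (a["x"] or a["y"]) and (not a["x"] or a["z"]) and (not a["z"])
print(brute_force_sat(["x", "y", "z"], example))              # {'x': False, 'y': True, 'z': False}

# x AND (NOT x) has no satisfying assignment
print(brute_force_sat(["x"], lambda a: a["x"] and not a["x"]))  # None
```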

2.3 Forking Lemma

The forking lemma states that if an adversary (typically a probabilistic Turing machine), on inputs drawn from some distribution, produces an output that has some property with non-negligible probability, then with non-negligible probability, if the adversary is re-run on new inputs but with the same random tape, its second output will also have the property. The idea is to think of an algorithm as running twice in related executions, where the process forks at a certain point, when some but not all of the input has been examined.

2.4 Homomorphic Encryption

Homomorphic encryption is a form of encryption in which certain algebraic operations on the plaintext correspond to certain algebraic operations on the cipher text. Homomorphic encryption schemes are malleable by design and are thus unsuited on their own for secure data transmission, since anyone can transform a cipher text into an encryption of a related plaintext. On the other hand, the homomorphic property of a cryptosystem can be desirable in certain applications, such as secure voting systems and private information retrieval schemes, or whenever algebraic relations between plaintexts have to be verified without reconstructing them.
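Unpadded ("textbook") RSA is a simple, well-known example of a multiplicatively homomorphic scheme: since (a^e)(b^e) = (ab)^e mod n, multiplying two cipher texts yields an encryption of the product of the plaintexts. The choice of RSA is ours (the definition does not name a scheme), and the tiny parameters are purely illustrative. The example also shows the malleability mentioned in the definition: the product is computed without any key.

```python
# Toy textbook-RSA parameters (far too small for real use).
p, q = 61, 53
n = p * q                  # 3233
phi = (p - 1) * (q - 1)    # 3120
e = 17
d = pow(e, -1, phi)        # modular inverse (Python 3.8+), here 2753

def encrypt(m: int) -> int:
    return pow(m, e, n)

def decrypt(c: int) -> int:
    return pow(c, d, n)

a, b = 7, 11
c_product = (encrypt(a) * encrypt(b)) % n   # operate on cipher texts only
print(decrypt(c_product))                   # 77, i.e. a * b, obtained without decrypting a or b
```

Schemes used in voting or private information retrieval are often additively rather than multiplicatively homomorphic; the sketch only demonstrates the general property.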

2.5 Index Calculus

Index calculus is an algorithm for computing discrete logarithms. It requires a factor base as input. The algorithm is performed in three stages. The first two stages find the discrete logarithms of a factor base of small primes. The third stage finds the discrete logarithm of the desired number h in terms of the discrete logarithms of the factor base.
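The three stages can be written out as follows, assuming a prime p, a generator g of the multiplicative group modulo p, and a factor base B = {q_1, ..., q_t} of small primes (the notation is ours):

```latex
\begin{align*}
&\text{Stage 1 (relations): find exponents } r_j \text{ such that } g^{r_j} \bmod p \text{ factors over } B:\\
&\qquad g^{r_j} \equiv \prod_{i=1}^{t} q_i^{e_{ij}} \pmod{p}
  \;\Longrightarrow\; r_j \equiv \sum_{i=1}^{t} e_{ij}\,\log_g q_i \pmod{p-1}.\\
&\text{Stage 2 (linear algebra): solve this system for } \log_g q_1, \dots, \log_g q_t \pmod{p-1}.\\
&\text{Stage 3 (individual logarithm): find } s \text{ such that } h\,g^{s} \bmod p \text{ factors over } B:\\
&\qquad h\,g^{s} \equiv \prod_{i=1}^{t} q_i^{f_i} \pmod{p}
  \;\Longrightarrow\; \log_g h \equiv \sum_{i=1}^{t} f_i\,\log_g q_i - s \pmod{p-1}.
\end{align*}
```

Stage 1 has to collect somewhat more than t relations so that the linear system in Stage 2 determines the logarithms of the factor base.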

2.6 Indistinguishability of Encryptions

Indistinguishability of encryptions is a security property of encryption schemes. If a cryptosystem possesses this property, then an adversary who is given the cipher text of one of two messages of its own choice cannot tell which of the two messages was encrypted with a probability significantly better than guessing (1/2).

2.7 Lattice Reduction

Given an integer lattice basis as input, the goal of lattice basis reduction is to find a basis of the same lattice with short, nearly orthogonal vectors. All known algorithms that find a shortest lattice vector exactly run in time at least exponential in the dimension of the lattice, and the underlying shortest vector problem is NP-hard (under randomized reductions). Nevertheless, approximation algorithms such as the LLL (Lenstra, Lenstra and Lovász) algorithm find a reasonably short basis in polynomial time, with a guaranteed worst-case approximation factor. LLL is widely used in the cryptanalysis of public key cryptosystems.
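LLL generalizes a much older two-dimensional algorithm usually attributed to Lagrange and Gauss. The Python sketch below shows only this two-dimensional case, not LLL itself: it repeatedly subtracts the closest integer multiple of the shorter vector from the longer one and swaps the two while the result keeps getting shorter. The input vectors are assumed to be linearly independent.

```python
from fractions import Fraction
from typing import Tuple

Vec = Tuple[int, int]

def dot(a: Vec, b: Vec) -> int:
    return a[0] * b[0] + a[1] * b[1]

def gauss_reduce(u: Vec, v: Vec) -> Tuple[Vec, Vec]:
    """Lagrange-Gauss reduction of a 2-dimensional integer lattice basis (u, v).

    Assumes u and v are linearly independent. Returns a basis of the same lattice
    whose first vector is a shortest nonzero lattice vector.
    """
    if dot(u, u) > dot(v, v):
        u, v = v, u                   # keep u as the shorter vector
    while True:
        # Subtract the integer multiple of u closest to the projection of v onto u.
        m = round(Fraction(dot(u, v), dot(u, u)))
        v = (v[0] - m * u[0], v[1] - m * u[1])
        if dot(v, v) >= dot(u, u):
            return u, v
        u, v = v, u                   # v became shorter: swap and repeat

u, v = gauss_reduce((5, 8), (8, 13))
print(u, v)   # two vectors of length 1: this basis (determinant 1) spans all of Z^2
```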

2.8 Obfuscation

Obfuscation refers to encoding the input data before they are passed to a hash function or another encryption scheme. This technique helps to make brute-force attacks infeasible, as it becomes difficult to determine the correct clear text: the data are not made invisible directly, but rather indistinguishable from the other characters used for the encoding.

2.9 Quantum Cryptography

From a mathematical point of view, quantum cryptography works in the same way as ordinary symmetric cryptography. However, the common secret key is distributed in a special way: it is a random number established by quantum mechanical means (quantum key distribution, QKD), so that eavesdropping on the key exchange disturbs the transmitted quantum states and can be detected.
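The best-known QKD protocol is BB84 (Bennett and Brassard, 1984); the definition does not name a protocol, so this choice is ours. The following is a purely classical toy simulation under simplifying assumptions: an ideal noiseless channel, measurement in the wrong basis modelled as a fair coin flip, and no error correction or privacy amplification. It shows why eavesdropping is detectable: with an intercept-resend attacker, roughly 25% of the sifted key bits disagree.

```python
import secrets
from typing import List, Tuple

def random_bits(n: int) -> List[int]:
    return [secrets.randbelow(2) for _ in range(n)]

def measure(bit: int, prep_basis: int, meas_basis: int) -> int:
    """Idealized measurement: the prepared bit if the bases match, otherwise a fair coin flip."""
    return bit if prep_basis == meas_basis else secrets.randbelow(2)

def bb84(n: int, eavesdrop: bool = False) -> Tuple[List[int], List[int]]:
    """Toy BB84 run with n photons; returns Alice's and Bob's sifted keys."""
    alice_bits, alice_bases, bob_bases = random_bits(n), random_bits(n), random_bits(n)
    bob_results = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        sent_bit, sent_basis = bit, a_basis
        if eavesdrop:
            # Intercept-resend attack: Eve measures in a random basis and resends what she saw.
            e_basis = secrets.randbelow(2)
            sent_bit, sent_basis = measure(bit, a_basis, e_basis), e_basis
        bob_results.append(measure(sent_bit, sent_basis, b_basis))
    # Sifting: the bases (not the bits) are compared publicly; only matching positions are kept.
    keep = [i for i in range(n) if alice_bases[i] == bob_bases[i]]
    return [alice_bits[i] for i in keep], [bob_results[i] for i in keep]

def error_rate(key_a: List[int], key_b: List[int]) -> float:
    return sum(x != y for x, y in zip(key_a, key_b)) / max(len(key_a), 1)

ka, kb = bb84(2000)
print(error_rate(ka, kb))   # 0.0 without an eavesdropper
ka, kb = bb84(2000, eavesdrop=True)
print(error_rate(ka, kb))   # typically close to 0.25
```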

2.10 Representation Theory

Representation theory studies abstract algebraic structures by representing their elements as linear transformations of vector spaces.

2.11 Standard Model

The standard model (in cryptography) is the model of computation in which the adversary is limited only by the amount of time and computational power available to him; in particular, no idealized primitives such as random oracles are assumed. Other names used are bare model and plain model.

2.12 Stream Cipher

A stream cipher algorithm is an encryption algorithm in which the encryption is performed by bitwise combination (usually XOR) of the string to be encrypted with a pseudorandom bit stream (the key stream).
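The XOR combination can be sketched in a few lines of Python. The keystream generator here (SHA-256 over key, nonce and a counter) is a hypothetical stand-in for a real stream cipher, not a standardized design. Because XOR is its own inverse, the same function encrypts and decrypts.

```python
import hashlib

def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Hypothetical keystream generator: concatenated SHA-256(key || nonce || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def xor_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR the data bitwise with the keystream (same operation both ways)."""
    ks = keystream(key, nonce, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))

key, nonce = b"k" * 32, b"nonce-01"
ct = xor_cipher(key, nonce, b"attack at dawn")
print(xor_cipher(key, nonce, ct))   # b'attack at dawn'
```

A (key, nonce) pair must never be reused: for two messages encrypted with the same keystream, the XOR of the cipher texts equals the XOR of the plaintexts.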

2.13 Torus Based Cryptography

Torus based cryptography applies algebraic tori to construct a group for use in ciphers based on the discrete logarithm problem. This idea was first introduced in the form of a public key algorithm named CEILIDH. It improves on conventional cryptosystems by representing some elements of large finite fields compactly and therefore transmitting fewer bits.

2.14 Universal Composability

Universal composability (UC) is a means for defining the security of cryptographic protocols. The name stems from the fact that instances of protocols that are UC secure remain secure even if arbitrarily composed with other instances of the same or other protocols.

For further terms related to PKI cryptography see the terms and definitions listed in part I of the study.

3 Summary of General R&D Support

Within this chapter, we give a short overview of the general situation of R&D support in the EU related to cryptography. For this purpose, we look at the general framework conditions as well as at the more specific budget situation.

3.1 Organizational Framework

The institutions of the EU are primarily the European Commission, the Council of the European Union (not to be confused with the European Council and the Council of Europe), and the European Parliament. In short, the Commission is the executive; the Council and the Parliament form the legislature. As far as we are concerned here, the Commission controls most of the research activities defined by the EU.

Sequences of calls are issued periodically within the existing framework programs (FP6, FP7). Normally, the EU does not issue calls specifically for research in the area of cryptography. Nevertheless, calls for the general topics information and communication technology (ICT calls) or security (SEC calls) recur regularly, and research projects dealing with cryptography fit very well into them. However, EU projects with cryptography as their directly visible major topic (like ECRYPT) are very rare. Most of the funded projects deal with the development and design of ICT or security applications where the use of cryptography is one important aspect to be considered. Furthermore, cryptography also fits into many EU projects within FP7 calls, or objectives within those calls, that are not explicitly related to security.

Standardisation of cryptographic devices and procedures throughout the EU is also of prime concern. It has been entrusted to CEN (Comité Européen de Normalisation), CENELEC (Comité Européen de Normalisation Electrotechnique) and ETSI (European Telecommunications Standards Institute). Together, these three institutions form the ICTSB (Information and Communications Technology Standards Board).

3.2 Budget Scales

The total budget of 1.35 billion EUR foreseen for security research within FP7 allows a large amount of research related to cryptography, but no part of it is earmarked specifically for this topic.

Although the projects ECRYPT I and ECRYPT II clearly appear as the most important research projects dealing with mathematical aspects of cryptography, they account for only about 3-5% of the research budget of the participating partners. This is due to the following facts:

the budget of such NoEs is generally low (primarily covering laboratory equipment rather than funded working time) and has to be divided among many partners, and

there are few EU projects directly dealing with cryptography anyway (as already mentioned above).

4 Targets of Cryptographic Research

This chapter gives a survey of European initiatives regarding cryptographic research, especially with respect to FP6 and FP7 funding. To analyze the current research targets, many different aspects have to be considered. First of all, the general objectives of existing research projects have to be analyzed. Furthermore, it is also important to look at specific mathematical problems and research issues related to certain cryptographic schemes. As for the PKI transition plan, the ECRYPT project provides the most important sources for identifying targets of cryptographic research. Further EU projects cover some additional, non-algorithmic and non-mathematical research targets that also have to be considered for an efficient and secure use of cryptography.

4.1 Fundamental Research Targets of ECRYPT

In the following, the specific research targets of ECRYPT will be summarized according to their publicly available deliverables. ECRYPT also gives a good view of the future development of cryptography in Europe.

Until recently, cryptographic solutions for ICT applications were mostly developed in an ad hoc manner, i.e., intuitively rather than rigorously. Schemes were designed and subsequently broken; modifications were made to prevent those specific attacks, and so forth. This is clearly an unsatisfactory methodology, since an adversary will devise his attacks according to the scheme specified; in particular, he will try to take actions other than those envisioned by the designer. Therefore, a more rigorous design of schemes is an important research target.

Although the advantages of PKI cryptography (covered in part I of our study) over symmetric cryptography are often emphasized, ECRYPT has also identified a strong need for further research in symmetric cryptography [EC DSTVL9].

Provable Security

One important target of ECRYPT is the aspect of provable security. The idea of provable security is to formally define security notions for cryptographic algorithms, thus providing mathematical justification for their security. This can be done by showing that breaking the scheme could be used to solve some problem believed to be computationally intractable, such as factoring large integers. The conclusion would be that, if the problem is indeed intractable, then the scheme must be secure under the chosen definition.

ICT users demand security guarantees regarding services and applications used; the most basic requirements being privacy and authenticity of data. With many electronic transactions being carried out over public networks such as the Internet, a highly adversarial setting has to be considered. Thus it makes little sense to make assumptions regarding specific adversarial strategies. Instead, the idea of provable security is to relate the security of a scheme to the difficulty of a computational problem, thus giving rise to an atomic primitive in the design of cryptosystems.

The first step is to come up with a precisely specified security definition for a protocol. Such definitions come in two parts: an adversarial goal and an adversarial model. The adversary’s goal captures what it means to break the protocol, while the model describes what powers the adversary has at its disposal when trying to achieve its goal. The aim is to provide security results in a quantitative manner. If possible, one seeks exact (tight) reductions. This approach is advantageous because, in practice, the tightness of a reduction translates directly into protocol efficiency: the tighter the reduction, the smaller the parameters needed for a given security level. The intractability of the mathematical problem used in a proof of security is the computational assumption on which the proof relies.
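The quantitative relationship can be sketched as follows (generic notation of ours, not a formula taken from ECRYPT): a reduction turns any adversary that breaks the scheme in time t with advantage ε into an algorithm that solves the underlying problem in time t' with advantage ε'.

```latex
\varepsilon' \;\ge\; \frac{\varepsilon}{L}, \qquad t' \approx t + t_{\mathrm{red}},
\qquad\text{hence}\qquad
\mathrm{Adv}^{\mathrm{scheme}}(t) \;\le\; L \cdot \mathrm{Adv}^{\mathrm{problem}}(t + t_{\mathrm{red}}).
```

Here L ≥ 1 is the tightness loss of the reduction and t_red its running-time overhead. If the problem is assumed to offer an advantage of at most 2^{-k}, the proof only guarantees L · 2^{-k} for the scheme. With a hypothetical loss of L = 2^{30}, parameters giving about k + 30 bits of security for the problem are needed to keep the scheme at k bits, whereas an exact reduction (L close to 1) allows smaller and thus more efficient parameters.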

A proof is given by a reduction showing that any adversary who breaks the scheme can be used to break the atomic primitive, similar to the reductions to Boolean satisfiability known from complexity theory. Such a reduction allows the conclusion that the only way to break the scheme is to break the atomic primitive. Thus, as long as the atomic primitive is sound, the scheme is secure. Following these ideas, techniques have been developed to provide proofs of security for practical cryptosystems. Standards bodies now regard such a proof of security as a necessary attribute for any cryptographic scheme to be adopted into their standards.

Block Ciphers

The understanding of how to build block ciphers can already be judged to be much better than that of how to build hash functions. This is due to the publication of DES as well as the long-standing AES effort. One interesting aspect in the design of the AES was the use of a strong mathematical framework to establish some assurance against classical cryptanalytic attacks.

However, this very same framework might also offer opportunities to the attacker. Therefore, ECRYPT sees a great challenge in continuing work on the cryptanalysis of the AES, including the use of new techniques from other branches of mathematics in an attempt to exploit the rich structure of the cipher. A first attempt in this direction has been algebraic cryptanalysis. While this appears to have limited application in practice, there may well be more advances in the future. On the constructive side, much important work is expected on providing an increasing level of provable security against large classes of attacks on block ciphers. This effort will be coupled with increased analysis of block ciphers and attempts to extend such analysis by appealing to mathematical techniques such as Gröbner bases, stochastic analysis, coding theory, finite fields, and representation theory, among many others.

While 3DES and the AES currently provide a trusted base for many future deployments, there will always be new application demands and hence the need for new designs. One important application area includes those environments in which the physical resources are constrained in some fundamental way. Some immediate application areas are RFID tags, sensor networks, and long-life deployments such as satellites, where designs with a very low footprint and/or very low power consumption might be required. It is very likely that new block cipher designs will emerge, attempting to exploit hard mathematical problems in their construction and in turn result in provable security.

Stream Ciphers

In ECRYPT’s view, improving the knowledge of stream cipher design will likely lead to future work. Much as for block ciphers, there will be new applications and deployments that stretch the performance of even newly-available algorithms. Elements of provable security have already appeared in stream cipher design, and it is likely that this will be extended in the future. When deploying symmetric primitives, one significant question is whether there is a "good" way to deploy such primitives so as to provide system resiliency in the case of future cryptanalytic advances. While there are obvious ways to build in some level of cryptographic redundancy, the exploration of efficient solutions to this problem is likely to be an important area for future symmetric cryptographic research. In order to pursue these research targets more closely, the eSTREAM project was initiated by ECRYPT (see 5.2 for further details).
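One straightforward way to build in such redundancy (our example, not a technique prescribed by ECRYPT) is to XOR the keystreams of two different generators keyed independently: as long as at least one of the two keystreams remains unpredictable, so does their XOR. The two generators below, built from SHA-256 and BLAKE2b in Python's `hashlib`, are hypothetical stand-ins for two real stream ciphers. The price of the redundancy is roughly double the computation, which illustrates why efficient solutions are an open research question.

```python
import hashlib
from typing import Callable

def counter_keystream(hash_fn: Callable, key: bytes, nonce: bytes, length: int) -> bytes:
    """Hypothetical keystream: hash_fn(key || nonce || counter) blocks, truncated to length."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hash_fn(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def cascade_encrypt(key1: bytes, key2: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR the data with two independently keyed keystreams; decryption is the same call."""
    ks1 = counter_keystream(hashlib.sha256, key1, nonce, len(data))
    ks2 = counter_keystream(hashlib.blake2b, key2, nonce, len(data))
    return bytes(d ^ a ^ b for d, a, b in zip(data, ks1, ks2))

k1, k2, nonce = b"1" * 32, b"2" * 32, b"nonce-01"
ct = cascade_encrypt(k1, k2, nonce, b"redundant secrecy")
print(cascade_encrypt(k1, k2, nonce, ct))   # b'redundant secrecy'
```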

Implementation Attacks and Cost

Implementation attacks are obviously a major concern for real-world security. These include reverse engineering, side-channel attacks, and active attacks such as fault injection. This holds in particular for embedded systems (including, for instance, e-passports, smart cards, cell phones, or software download in cars), which will occupy an increasing portion of the security applications market. It should be stressed at this point that even though Europe’s industry does not play a central role in the PC market, it is a major force in many embedded application domains such as automation, car parts, smart cards or household appliances. A common factor in many embedded applications is that attackers often have ready access to the security device. This situation gives rise to several principal research questions. More formal models of such attacks have only very recently started to appear, and much work remains to be done, for instance towards establishing bounds on side-channel resistance. There is also a clear need for a common and open methodology for evaluating security against this type of attacks.

It seems likely that perfect security against implementation attacks will be impossible to achieve. The situation is similar for other attacks such as brute-force or analytical attacks, but there the associated costs are often relatively well understood. For implementation attacks, by contrast, the cost issue is very poorly understood, in particular for reverse engineering and fault injection attacks. In order to obtain reliable statements about the security of embedded systems against implementation attacks, the “costs” (defined broadly) involved should be formalized and quantified. Without looking at the costs involved, statements about physical security are often not very meaningful. One interesting approach is constant-time implementations, which avoid timing attacks.
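A typical case is comparing a secret value (e.g., a MAC tag) with attacker-supplied input. An early-exit comparison returns as soon as a byte differs, so its running time reveals how many leading bytes were correct; a constant-time comparison always processes every byte. The Python sketch below only illustrates the pattern: the Python interpreter itself gives no timing guarantees, which is why the standard library provides `hmac.compare_digest` for this purpose, and hardened implementations are usually written in C or assembly.

```python
import hmac

def naive_equal(a: bytes, b: bytes) -> bool:
    """Early-exit comparison: time depends on the position of the first differing byte (leaky)."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True

def constant_time_equal(a: bytes, b: bytes) -> bool:
    """Visits every byte and accumulates differences without data-dependent branches."""
    if len(a) != len(b):        # lengths are usually public (e.g., fixed-size MAC tags)
        return False
    diff = 0
    for x, y in zip(a, b):
        diff |= x ^ y
    return diff == 0

tag = bytes.fromhex("8f1c2a9b")
print(constant_time_equal(tag, bytes.fromhex("8f1c2a9b")))  # True
print(hmac.compare_digest(tag, bytes.fromhex("8f1c2a9c")))  # False
```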

Active attacks (especially fault injection and reverse engineering) will become increasingly important issues. The feasibility of such attacks often cannot be assessed reliably by cryptographers alone, but has to be investigated by physicists and semiconductor engineers. Solid statements must be obtained regarding the robustness of systems against such attacks. For this purpose, interdisciplinary research involving both cryptographers and the people building the hardware appears as a great research challenge.

Light-weight Cryptography

As described earlier, security is needed in an ever-increasing range of everyday devices, including for instance smart bar code labels, ID cards, or low-cost car parts. There will be more and more applications that require robust security solutions at almost “no” cost. Most of cryptology deals with “heavy” protocols that can easily be implemented on today’s powerful desktop computers. However, the market for microprocessors is mainly dominated by 8-bit or even 4-bit architectures whose computing power is 1,000 to 10,000 times lower than that of current desktop PCs. In such restricted environments, heavy cryptographic tools cannot be used. For RFID tags and many other mobile systems, it is particularly important to design low-power implementations. Hence, the study of light-weight cryptography is very interesting for increasing Europe’s competitiveness in the area of embedded systems and beyond. Providing symmetric algorithms, asymmetric algorithms and protocols at extremely low cost will be a major challenge. Even though ad hoc solutions are slowly appearing, especially in the context of RFID, there is still a long way to go towards a general understanding of issues such as lower bounds (e.g., with respect to area or power consumption) for secure symmetric or asymmetric ciphers.

Low-power Cryptography

A wide range of devices need low-power implementations of cryptography, ranging from embedded RFID tags, sensor networks and mobile phones to laptop computers running on batteries. There are two fundamental goals that need to be balanced: first, achieving the lowest possible power consumption; second, minimizing the human effort required for cryptographic design and implementation, thus allowing a broader range of applications. One challenging research area is the possible reuse of existing chips for low-power cryptographic applications. Such research saves production time both by avoiding hardware redesign and by providing tools for chip-specific optimization. Important tools include languages that account for chip architectures and compilers that automatically add some side-channel resistance to cryptographic code. Even lower power can be achieved, at the expense of chip modification, by co-designing instruction-set extensions and cryptographic algorithms.

Special-purpose Cryptanalytical Hardware based on Conventional Computers

Special-purpose computers appear as soon as a certain budget for cryptanalytical attacks is available, since running the best known attack algorithms on dedicated hardware provides the best cost-performance ratio. This holds in particular for attacks by government agencies, e.g., in the context of espionage. In order to thwart attempts like Echelon (a world-wide eavesdropping network run by intelligence agencies of Australia, Canada, New Zealand and the United Kingdom, and coordinated by the USA), reliable security estimates for the parameter sets of commercial symmetric and asymmetric methods are important research targets. Furthermore, the field of special-purpose hardware should be investigated systematically.

Special-purpose Cryptanalytical Hardware based on Novel Technologies

As long as only conventional computers are available, ciphers with large security margins such as AES-128, ECC-192 or RSA-2048 seem secure against attacks for many decades to come in the absence of cryptanalytic breakthroughs. However, another threat is given by attacks based on non-conventional computers which exploit physical phenomena.

Quantum computers are only one instance of such a technology. Therefore, research on further technologies is seen as a great challenge. Less ambitious technologies such as optical computers or analogue electronic computers have to be viewed much more critically, as they might pose a more realistic threat to existing ciphers. Again, attacks by foreign government organizations motivated by industrial espionage or national security are real-world scenarios to be considered.

Data Authentication

There is a strong demand from the content industries for reliable technologies and protocols for protecting the rights to the works they own. Besides that, there is also an increasing interest in manipulation detection and media data authentication, driven by national or European signature regulations. One of the (undesired) effects of the universal availability of effective signal processing tools is the possibility to tamper with digital multimedia data, or to manipulate media in order to embed secret information and thus hide communication without leaving any trace. This puts the credibility of digital multimedia data in doubt.

The possible establishment of a covert communication channel by means of steganographic tools is another key issue for current research activities. Although the purpose of such a channel may be legitimate (privacy, anonymity), it may also be used for malicious purposes, e.g. terrorist activities. For this reason, and given the use of hidden communication in recent terrorist activities, reliable and accurate detection of covert communication could prove vital in the future as society creates defence mechanisms against criminal and terrorist activities. Fast and reliable identification of stego media objects, estimation of the secret message size and decoding of the message are among the highest-ranking requirements formulated by law enforcement, and are therefore important research objectives.

Secure Media

Many enabling technologies have been identified as important subjects for research activities. They have been brought together under a new term, “Secure Media”, which in particular includes the following items:

Data Hiding

(Robust) Watermarking

Steganography/steganalysis

Watermarking-based authentication (intrusive content identification)

Perceptual hashing or fingerprinting (for non-intrusive content identification)

Joint signal processing and encryption (e.g., zero knowledge, watermarking or other signal processing tools operating in the encrypted domain)

Multimedia forensics

Wireless Sensor Networks

In contrast to conventional sensor networks, wireless sensor networks are subject to numerous security threats. Sensor nodes are often operated in hostile environments where they can easily be captured and manipulated. Furthermore, attackers can easily eavesdrop on the wireless communication between sensor nodes and manipulate messages.

Sophisticated security protocols, in combination with well-established cryptographic primitives, could ensure the integrity of communication in a wireless sensor network. However, certain cryptographic primitives, especially public key primitives, are extremely computation-intensive and therefore impose a considerable burden on the limited energy resources of sensor nodes. For this reason, it is widely believed that public key cryptography is not feasible for sensor nodes. Nevertheless, the availability of public key schemes could significantly improve the overall security of sensor networks.

The first goal is to develop and implement cryptographic primitives, especially elliptic curve schemes, that are efficient enough for deployment in wireless sensor networks. Both the execution time and the energy consumption of elliptic curve schemes can be significantly reduced by combining efficient field arithmetic with efficient curve/group arithmetic. An example of a family of fields that allows an efficient implementation of the field arithmetic is given by the generalized-Mersenne prime fields recommended in the NIST standard [FIPS186-2]. In addition, a family of curves that facilitates scalar multiplication by means of an efficiently computable endomorphism was proposed by Gallant, Lambert and Vanstone in 2001.
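To illustrate why generalized-Mersenne primes make the field arithmetic cheap, the following Python sketch (illustrative only, not constant-time and not taken from the project) reduces a product of two field elements modulo the P-192 prime p = 2^192 - 2^64 - 1 from FIPS 186-2. Because 2^192 ≡ 2^64 + 1 (mod p), the upper 64-bit words of a 384-bit product can be folded back into the lower 192 bits with a few additions instead of a long division. The helper names are hypothetical.

```python
import random

# NIST generalized-Mersenne prime used for the P-192 curve.
P192 = 2**192 - 2**64 - 1
MASK64 = 2**64 - 1


def _words3(a2: int, a1: int, a0: int) -> int:
    """Assemble three 64-bit words (most significant first) into one integer."""
    return (a2 << 128) | (a1 << 64) | a0


def reduce_p192(c: int) -> int:
    """Reduce 0 <= c < P192**2 modulo P192 without division.

    Uses 2^192 ≡ 2^64 + 1 (mod p) to fold the high words back down.
    """
    # Split c into six 64-bit words c0 (least significant) ... c5.
    c0, c1, c2, c3, c4, c5 = ((c >> (64 * i)) & MASK64 for i in range(6))
    t = (_words3(c2, c1, c0)       # low 192 bits as they are
         + _words3(0, c3, c3)      # c3 * 2^192 ≡ c3 * (2^64 + 1)
         + _words3(c4, c4, 0)      # c4 * 2^256 ≡ c4 * (2^128 + 2^64)
         + _words3(c5, c5, c5))    # c5 * 2^320 ≡ c5 * (2^128 + 2^64 + 1)
    # Each term is below 2^192, so t < 4 * 2^192: a few subtractions finish the job.
    while t >= P192:
        t -= P192
    return t


# Usage: compare against Python's built-in modular reduction.
a, b = random.randrange(P192), random.randrange(P192)
assert reduce_p192(a * b) == (a * b) % P192
```

On a sensor node the same idea would be written with fixed-size word arrays and explicit carry handling; Python's arbitrary-precision integers hide those details here.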

Besides public-key schemes, symmetric primitives such as block/stream ciphers and hash functions are needed to secure wireless sensor networks. Fortunately, most radio modules compliant with the standard [IEEE802.15.4] are equipped with AES hardware that can be accessed via software. It is therefore desirable to use the AES hardware for the implementation of hash functions. A few proposals for AES-based hash functions already exist, but their efficiency and security have not yet been widely analyzed. The second goal of this proposal is to analyze existing AES-based hash functions and/or develop new ones.

The third goal is the implementation and analysis of security protocols for wireless sensor networks. New protocols using symmetric key cryptography that target the specific needs of wireless sensor networks should be developed, and their limitations should be explored. In addition, new protocols should be developed that combine public-key and secret-key primitives while reducing the number of public-key operations to a minimum. Another important criterion is the minimization of the communication overhead during key establishment and/or authentication.

Distributing Trust: Voting and Auction Schemes and Privacy Preserving Data Mining

A further important research target is the area of larger protocols and secure multi-party computation. Three exemplary applications are selected to show the relevance of the technologies in this document. It should be noted that significant advances in the following areas require all the technologies we discuss to be used together in novel ways, namely public and private key techniques, implementation research, protocol design and provable security.

Electronic Voting: Currently deployed electronic voting technologies are not cryptographically secure. Voters are liable to coercion, ballots can be rigged or altered and the systems are not suitable for external auditing. There are cryptographic electronic voting proposals which solve many of these problems, but they currently suffer from poor scalability. For example, it is possible to mount a cryptographically secure election with two candidates and (say) one million voters. However, as the number of candidates increases, the number of voters that can be supported decreases exponentially. Another problem relates to what one could call human factors. For example, how can a voter trust that his vote is being counted? As voters do not understand the underlying protocols, there is a need for open solutions in which both the source code and the election procedures can be verified by anyone without compromising the privacy of the voting process. In addition, how does a political party know that the vote is not being rigged by another party?

Auctions: Auctions, and other forms of electronic trading, are not necessarily secure at present either. For example, one needs to ensure that the auctioneer is not unduly altering the outcome; in some situations one also needs to ensure the anonymity of buyers/sellers and even keep the amount of the highest bid secret. Coalitions of parties can try to alter the working of the market, via cartels etc. Economists have pointed out the importance of creating true free-market conditions so as to maximise utility, and such conditions need to be implemented in online trading and auctions to obtain a more efficient electronic marketplace. Of particular relevance here is the work on modelling adversaries who act in a rational rather than a totally malicious manner.

Privacy Preserving Data Mining: With vast amounts of data now being made available online, it becomes important to be able to extract information from this data. This has traditionally been the subject of data mining technologies. However, much data is confidential (for example medical records, or a company’s customer information), yet more valuable information can be extracted by pooling data. This conflict, between the extra power of pooling information and the desire to maintain confidentiality or privacy, is going to grow as the years progress. Cryptographers are starting to tackle this problem via data mining techniques that maintain the privacy of the underlying data. Indeed, one can think of an electronic election in this manner: the pooled data to be mined is the set of votes, yet each voter wants their specific vote to be kept secret. General privacy preserving data mining techniques promise much if further research can be directed to this problem.
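A standard building block for such privacy-preserving computations is secure summation via additive secret sharing. The Python sketch below is a simplified illustration, assuming honest-but-curious parties, private pairwise channels and a hypothetical modulus of 2^64 that is larger than any true total; the function names are illustrative. Each party splits its private value into random shares, so that no single share reveals anything, yet the published partial sums add up to the correct total.

```python
import secrets

MODULUS = 2**64  # assumption: every true total is smaller than this


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n_parties additive shares that are uniformly random mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_inputs: list[int]) -> int:
    n = len(private_inputs)
    # Row i holds the shares of party i's input; share j is sent to party j.
    dealt = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received; each partial sum on its own looks random.
    partial_sums = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Publishing the partial sums reveals the total but, absent collusion,
    # nothing more about individual inputs.
    return sum(partial_sums) % MODULUS


print(secure_sum([120, 45, 310]))  # prints 475
```

For instance, three hospitals with private case counts 120, 45 and 310 learn only their total of 475. An electronic election can be viewed the same way, with each input being a 0/1 vote.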

The Trusted Platform Module (TPM) is a fairly new device that can be used for cryptographic operations and is the subject of new research activities. Trusted Computing provides cryptographic functionalities on which a trustworthy system can be built, where trustworthiness is defined according to the underlying security policies. These functionalities can be used to (i) remotely verify the integrity of a computing platform (attestation and secure booting), (ii) bind secret keys to a specific platform configuration (sealing), (iii) generate secure random numbers (in hardware), and (iv) securely store cryptographic keys.

In this context there are various research issues to be explored:

Security model for the components used on a Trusted Computing Platform, such as a TPM: for future developments it is important to establish an abstract model of these components and their interfaces, in order to analyse the security of the cryptographic as well as system-related mechanisms that rely on the functionalities of these components.

Efficient multiparty computation using tiny trusted components that have only a limited amount of storage and provide only a few cryptographic functionalities such as those mentioned above: depending on the underlying trust model and the security requirements, many interesting applications like auctions and voting may require complex cryptographic protocols or computations that are still inefficient. It is interesting to examine how, and to what extent, trusted computing can improve the existing solutions.

Property-based attestation: the attestation functionality allows one to verify the configuration of an IT system. This, however, raises privacy problems, since one may not be willing to disclose details about the internals of an IT system. Property-based attestation would only require an IT system to prove that its configuration has a certain property, i.e., that it conforms to a certain (security) policy, instead of revealing the configuration itself. This also allows correctness to be proven when the configuration changes but still obeys the same policy.

Maintenance and migration: using Trusted Platform Modules also requires methods and mechanisms for transferring complete images (of applications and the operating system) from one computing platform to another. Here, efficient and secure mechanisms need to be designed to move a complete software image between platforms with different TPMs and different security policies.

Integration of Cryptology with Biometry

Biometrics is currently growing rapidly as an alternative to previously used knowledge-based verification or authentication methods. Combining cryptology and biometry improves the security and convenience of a system. However, traditional cryptology cannot be applied directly to biometrics, since biometric data cannot be reproduced exactly. The purpose of the research is to meet the challenge of developing new cryptology for noisy data. Techniques such as perceptual hashing and the derivation of keys from biometric data using additional helper data (reference data and/or metadata) are promising. The combination of biometrics and cryptology with steganography and digital watermarking also offers new opportunities for secure and user-friendly identification protocols that offer better privacy.
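The helper-data idea can be illustrated with the fuzzy commitment construction of Juels and Wattenberg, which is one possible technique and not necessarily the one pursued by the research mentioned here. In the Python sketch below, the biometric template is assumed to be a list of bits, and a simple 5-fold repetition code (a hypothetical choice; practical systems use stronger codes such as BCH codes) absorbs small differences between readings. Only the helper data and a hash of the key are stored; all function names are illustrative.

```python
import hashlib
import secrets

REP = 5  # hypothetical repetition factor: majority vote corrects up to 2 flips per block


def encode(key_bits):
    """Repetition code: repeat every key bit REP times."""
    return [b for b in key_bits for _ in range(REP)]


def decode(code_bits):
    """Majority vote inside each block of REP bits."""
    return [int(sum(code_bits[i:i + REP]) > REP // 2)
            for i in range(0, len(code_bits), REP)]


def key_hash(key_bits):
    return hashlib.sha256(bytes(key_bits)).hexdigest()


def enroll(template):
    """Bind a fresh random key to a bit-list template; store only helper data and a hash."""
    assert len(template) % REP == 0
    key = [secrets.randbelow(2) for _ in range(len(template) // REP)]
    helper = [c ^ t for c, t in zip(encode(key), template)]  # codeword XOR template
    return helper, key_hash(key), key  # key is returned only for the demo


def reproduce(helper, reading):
    """XOR a new reading onto the helper data and decode away the noise."""
    return decode([h ^ r for h, r in zip(helper, reading)])


def verify(helper, stored_hash, reading):
    return key_hash(reproduce(helper, reading)) == stored_hash


# Usage: a 40-bit template carries an 8-bit key; the new reading has two flipped bits.
template = [secrets.randbelow(2) for _ in range(40)]
helper, stored, key = enroll(template)
reading = list(template)
reading[3] ^= 1   # one error in block 0
reading[12] ^= 1  # one error in block 2
assert verify(helper, stored, reading)
```

A fresh reading that differs from the enrolled template in at most two bits per 5-bit block still yields the enrolled key, while a very different reading does not. Repetition codes also leak information about the template through the helper data, which is one reason this remains an active research topic.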

ECRYPT II

The recently started follow-up project ECRYPT II continues the basic research targets of ECRYPT I and follows new ones based on the previous results. The ECRYPT II research roadmap is motivated by the changing environment and threat models in which cryptology is deployed, by the gradual erosion of the computational difficulty of the mathematical problems on which cryptology is based, and by the requirements of new applications and cryptographic implementations.

As for ECRYPT I, the activities of the ECRYPT II Network of Excellence are organized into three virtual laboratories:

Symmetric techniques virtual lab (STVL)

Multi-party and asymmetric algorithms virtual lab (MAYA)

Secure and efficient implementations virtual lab (VAMPIRE)

The objectives of STVL remain the same as for STVL in ECRYPT I.

The main technical objective of the MAYA lab is to allow better collaboration among European institutions on the design and analysis of asymmetric cryptographic primitives and protocols. It basically continues the activities of the AZTEC lab of ECRYPT I.

The VAMPIRE lab also follows the same objectives as in ECRYPT I.

4.2 Mathematical Problems

Discrete Logarithms and Diffie-Hellman Problems

Developing improved index-calculus based algorithms for the discrete logarithm problem in finite fields remains an active target of research.

To build confidence in the decision Diffie-Hellman (DDH) assumption, one would like to prove that the computational Diffie-Hellman (CDH) assumption implies the DDH assumption. To do this, one would have to show that CDH could be solved using a DDH oracle. This remains an open problem.
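For reference, the two problems and the known and open reductions between them can be stated as follows for a cyclic group of prime order q generated by g (standard textbook definitions, added here for illustration):

```latex
\begin{aligned}
\textsf{CDH:}\quad & \text{given } (g,\ g^{a},\ g^{b}) \text{ with } a, b \in \mathbb{Z}_q, \text{ compute } g^{ab};\\
\textsf{DDH:}\quad & \text{distinguish } (g,\ g^{a},\ g^{b},\ g^{ab}) \text{ from } (g,\ g^{a},\ g^{b},\ g^{c}),\ c \in \mathbb{Z}_q \text{ uniform};\\
\text{known:}\quad & \text{CDH oracle} \;\Rightarrow\; \text{DDH solver (compute } g^{ab} \text{ and compare)};\\
\text{open:}\quad & \text{DDH oracle} \;\Rightarrow\; \text{CDH solver?}
\end{aligned}
```

In groups equipped with a bilinear pairing, DDH is easy while CDH is still believed to be hard, so such an implication cannot be expected to hold in every group.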

Elliptic curve groups have certain advantages over their finite field counterparts. For example, the index-calculus methods mentioned above do not apply to the elliptic curve discrete logarithm (ECDL) problem. As in the finite field case, there has been recent interest in the elliptic curve Diffie-Hellman (ECDH) problem and the elliptic curve decision Diffie-Hellman (ECDDH) problem.

RSA Related Problems

The idea is that RSA encryption is a trapdoor one-way function, i.e., it is hard to invert unless one knows the trapdoor. The system is based on a property described by Euler’s theorem. It is well known that factoring the modulus provides a method of decryption.

What was not established, and has never been determined, is whether or not computing roots modulo n is as hard as factoring. Factoring is a problem that has been studied much more intensively than computing roots, so it would be much more satisfactory to show that the security of RSA is guaranteed by the infeasibility of factoring the modulus.
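The following Python sketch with toy primes (far too small for real use, and with raw, unpadded RSA) illustrates the link stated above: whoever knows the factorization of n can recompute the trapdoor exponent d and decrypt. It relies on pow(x, -1, m) for modular inverses, which requires Python 3.8 or later; the function names are hypothetical.

```python
from math import gcd


def make_toy_rsa(p: int, q: int, e: int = 65537):
    """Toy RSA key generation from two known primes (no padding, no security)."""
    n, phi = p * q, (p - 1) * (q - 1)
    assert gcd(e, phi) == 1
    d = pow(e, -1, phi)  # the trapdoor; Euler's theorem gives m^(e*d) ≡ m (mod n)
    return n, e, d


def decrypt_via_factoring(c: int, n: int, e: int, p: int) -> int:
    """An attacker who has found one prime factor p of n rebuilds d and decrypts."""
    q = n // p
    d = pow(e, -1, (p - 1) * (q - 1))
    return pow(c, d, n)


n, e, d = make_toy_rsa(1009, 1013)
c = pow(42, e, n)                                  # encryption: c = m^e mod n
assert pow(c, d, n) == 42                          # legitimate decryption
assert decrypt_via_factoring(c, n, e, 1009) == 42  # decryption after factoring n
```

The open question in the text is the converse: whether every method of computing e-th roots modulo n can be turned into a factoring algorithm.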

Tremendous advances have been made in factoring using Fermat’s method as a starting point. One such algorithm is the Quadratic Sieve. The most powerful factoring algorithm known today is the General Number Field Sieve (GNFS). The principal difference between the two methods is the technique used to generate the relations. The GNFS uses two factor bases: one contains small primes, as in the Quadratic Sieve; the second contains prime ideals of norm below a given bound from the ring of integers of a suitably chosen number field.
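Fermat’s method writes an odd number n as a difference of squares, n = a^2 - b^2 = (a - b)(a + b). The Python sketch below is a direct, unoptimized version; it is fast only when the two factors are close to each other, which is why the sieve algorithms generalize the idea to finding congruences of squares x^2 ≡ y^2 (mod n) rather than exact equalities.

```python
from math import isqrt


def fermat_factor(n: int) -> tuple[int, int]:
    """Return (a - b, a + b) with n = a^2 - b^2, for odd n > 0."""
    assert n % 2 == 1
    a = isqrt(n)
    if a * a < n:
        a += 1                 # start at ceil(sqrt(n))
    while True:
        b2 = a * a - n         # is a^2 - n a perfect square?
        b = isqrt(b2)
        if b * b == b2:
            return a - b, a + b
        a += 1                 # for prime n this ends with (1, n)


print(fermat_factor(5959))  # prints (59, 101)
```

For n = 5959 the search starts at a = 78 and succeeds at a = 80, where 80^2 - 5959 = 441 = 21^2.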

4.3 Digital Signature Schemes

A digital signature scheme is often thought of as the digital analogue of the handwritten signature, at least as closely as possible. The idea is that a user has an identity and a public key that is attached to it in a trustworthy way. The user also has a private key, related to the public key, which he keeps secret. A signature on a message can be computed using the private key and verified using the public key. Moreover, no adversary should be able to produce the user’s signature on any message, even if the user can be convinced to sign other messages of the adversary’s choice.

Digital signatures have been recognised as legally binding in many countries. As with handwritten signatures, digital signatures have two principal purposes. First, they guarantee that a digital document came from the person it appears to have come from and that the document remained intact in transmission, even if it passed through adversarial hands on the way. Second, they allow the recipient of a digitally signed document to prove to a third party that the document came from the signer, i.e., digital signatures provide non-repudiation.

A complete definition of security for a scheme has two parts. Once we have decided what an adversary’s goal could be, we have to decide what powers the adversary may have at its disposal when trying to achieve that goal. As a rule, the weaker the adversarial goal and the more powerful the adversary considered in a definition, the stronger the definition. Combining an adversarial goal with an adversarial model yields a security definition; the two extreme definitions for signature schemes are the following:

A signature scheme is secure if it is not possible for any polynomial-time adversary to totally break the scheme with non-negligible probability using a passive attack.

A signature scheme is secure if it is not possible for any polynomial-time adversary to existentially forge a signature with non-negligible probability using an adaptive chosen-message attack.

Now, these two different definitions give rise to further research activities regarding conditions for secure signature schemes and how to satisfy them.

4.4 Public Key Encryption Schemes

Adversarial Goals

Intuitively, the point of using a public key encryption scheme is to prevent unauthorized extraction of information from communications. Consider, to begin with, an encryption scheme that is simply a trapdoor one-way function such as raw RSA. Using a trapdoor one-way function in this way does not rule out the possibility that a message is easy to compute from its encryption when it has a special form. For example, 0 and 1 encrypt to themselves under any raw RSA encryption transformation. Another problem with a deterministic encryption transformation such as RSA is that any message encrypts to a unique cipher text. If an eavesdropper suspects that a message comes from a small set, all he needs to do is encrypt all these messages and compare the resulting encryptions with the cipher text to decide what the message is. This problem can be overcome by concatenating the message with a random padding string before encryption.
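The two weaknesses of raw RSA described above can be reproduced with toy parameters. The Python sketch below (the modulus, the candidate messages and their integer encodings are hypothetical) shows that 0 and 1 are fixed points and that an eavesdropper can identify a message from a small candidate set simply by re-encrypting every candidate.

```python
# Toy raw-RSA public key (illustrative values; real moduli have 2048+ bits).
N = 1009 * 1013
E = 65537


def raw_rsa_encrypt(m: int) -> int:
    """Textbook RSA without padding: deterministic, c = m^E mod N."""
    return pow(m, E, N)


def guess_message(c: int, candidates: dict[str, int]):
    """Re-encrypt every candidate and compare; works because encryption is deterministic."""
    for label, m in candidates.items():
        if raw_rsa_encrypt(m) == c:
            return label
    return None


# Fixed points: 0 and 1 are unchanged by encryption under any raw RSA key.
assert raw_rsa_encrypt(0) == 0 and raw_rsa_encrypt(1) == 1

# Hypothetical small message space, e.g. a trading instruction.
orders = {"buy": 2, "sell": 3, "hold": 4}
intercepted = raw_rsa_encrypt(orders["sell"])
print(guess_message(intercepted, orders))  # prints sell
```

Since raw RSA is a permutation of the integers modulo N, distinct candidates always have distinct cipher texts, so the guess is never ambiguous. Random padding breaks this attack because the same message then has many possible encryptions.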

In addition, an encryption transformation being a trapdoor one-way function does not preclude the possibility that an eavesdropper can obtain some information about a message from its cipher text, even if it cannot recover the whole message. In the case of RSA, an eavesdropper can easily learn one bit of information about a message from its cipher text (namely the Jacobi symbol of the message modulo n), because the encryption exponent is always odd. This shows why the inability to recover plaintexts from cipher texts is not, on its own, a satisfactory security property for encryption schemes. Stronger, generally accepted notions are needed.
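The leaked bit is the Jacobi symbol: since the Jacobi symbol is multiplicative in its upper argument, (c/n) = (m^e/n) = (m/n)^e = (m/n) for odd e, and anyone can compute it from c and n without knowing the factorization. The Python sketch below uses the standard reciprocity-based algorithm for the Jacobi symbol with a toy modulus (an illustrative choice).

```python
# Toy RSA modulus and odd public exponent (illustrative values).
N = 1009 * 1013
E = 65537


def jacobi(a: int, n: int) -> int:
    """Jacobi symbol (a/n) for odd n > 0, via quadratic reciprocity."""
    assert n > 0 and n % 2 == 1
    a %= n
    result = 1
    while a != 0:
        while a % 2 == 0:          # pull out factors of 2
            a //= 2
            if n % 8 in (3, 5):    # (2/n) = -1 exactly when n ≡ 3 or 5 (mod 8)
                result = -result
        a, n = n, a                # reciprocity: flip sign if both are ≡ 3 (mod 4)
        if a % 4 == 3 and n % 4 == 3:
            result = -result
        a %= n
    return result if n == 1 else 0


# The eavesdropper computes (c/N) from public data only and learns (m/N).
m = 2
c = pow(m, E, N)
assert jacobi(c, N) == jacobi(m, N)
```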

Shannon defined what he called perfect secrecy. Suppose that the possible messages to be sent are finite in number; they could be possible chunks of English text of a given length, for example. Before any message is sent, an adversary computes a priori probabilities for the sending of each message. A message is then encrypted using one of the encryption functions E_1, ..., E_l to produce the cipher text. The adversary intercepts the cipher text and calculates the a posteriori probabilities for the various messages. If, for all possible cipher texts, the a priori and a posteriori probabilities calculated by an adversary with unlimited time and computational resources are equal, then we have perfect secrecy. In this case the adversary learns nothing about the message from the cipher text that it could not have found without the cipher text.
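Written as a formula, perfect secrecy is the first condition below. The remaining lines work through the one-time pad, a classic example not discussed in the text, assuming a uniformly random t-bit key chosen independently of the message:

```latex
\begin{aligned}
&\Pr[M = m \mid C = c] = \Pr[M = m]
  \quad\text{for all messages } m \text{ and all cipher texts } c \text{ with } \Pr[C = c] > 0,\\[4pt]
&\text{one-time pad, } c = m \oplus k,\ k \in \{0,1\}^t \text{ uniform and independent of } m:\\
&\Pr[C = c \mid M = m] = \Pr[K = m \oplus c] = 2^{-t}
  \;\Longrightarrow\;
  \Pr[M = m \mid C = c]
  = \frac{2^{-t}\,\Pr[M = m]}{\sum_{m'} 2^{-t}\,\Pr[M = m']}
  = \Pr[M = m].
\end{aligned}
```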

In reality, an adversary’s time and computational resources are bounded, so all adversaries considered are modeled as probabilistic polynomial-time algorithms. The revised definition of semantic security allows for the possibility that the adversary already has some information about the message; of course, this prior information must be the same whether or not the adversary sees the cipher text. Polynomial security has since been renamed with the more informative term indistinguishability of encryptions. Distinguishing encryptions has become the accepted adversarial goal used in the definition of security for public key encryption schemes today. While semantic security captures the intuition of what security should be (the cipher text should give nothing away about the plaintext), indistinguishability of encryptions is technically more useful when it comes to proving the security of encryption schemes. The major result is the equivalence of semantic security and indistinguishability of encryptions.

Adversarial Models

It remains to consider the powers of an attacking adversary. With public key encryption schemes, any adversary is able to encrypt any plaintext to see the corresponding cipher text. An attack where this is possible is known as a chosen-plaintext attack; this is the weakest possible attack scenario for a public key encryption scheme. It is also possible that an attacking adversary may, in the absence of the legitimate user, gain access to the decryption mechanism. In this case the adversary is able to submit cipher texts and see the corresponding plaintexts, i.e., it has access to a decryption oracle.

This attack is known today as a non-adaptive chosen-cipher text attack. It is non-adaptive in the sense that the adversary is unable to adapt the cipher texts sent to the decryption oracle once it has seen the challenge cipher text. In the strongest attack scenario, the attacker is able to query the decryption oracle after having seen the challenge cipher text; of course, the attacker is not permitted to submit the challenge cipher text itself to the decryption oracle. This is an adaptive chosen-cipher text attack. As mentioned above, indistinguishability of encryptions and semantic security are equivalent notions. This was originally proved in a setting where the adversary does not have access to a decryption oracle, so the original result does not cover adversaries that are able to mount chosen-cipher text attacks.

Cryptographic Hash Functions

Cryptographic hash functions are similar to the hash functions used in many areas of computer science. In all cases a large domain is hashed onto a smaller range. Hash functions are frequently used in integrity checking for a digital signature scheme or a public key encryption scheme. In the case of signature schemes, one is able to sign an arbitrary length message by computing its hash value and signing this instead of the message itself. Since the domain is larger than the range, a hash function is necessarily many-to-one, implying the existence of collisions.
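The pigeonhole argument says collisions must exist; the birthday paradox says how cheaply they can be found, after roughly 2^(b/2) evaluations for a b-bit output. The Python sketch below makes this tangible by truncating SHA-256 to a hypothetical 24-bit output, for which a collision typically appears after a few thousand messages; the truncation is purely for illustration and says nothing about the security of full SHA-256.

```python
import hashlib
from itertools import count


def truncated_hash(data: bytes, nbytes: int = 3) -> bytes:
    """SHA-256 truncated to nbytes (24 bits by default) so collisions are cheap to find."""
    return hashlib.sha256(data).digest()[:nbytes]


def find_collision(nbytes: int = 3) -> tuple[bytes, bytes]:
    """Hash distinct messages until two share a digest (birthday search)."""
    seen: dict[bytes, bytes] = {}
    for i in count():
        msg = str(i).encode()
        digest = truncated_hash(msg, nbytes)
        if digest in seen:
            return seen[digest], msg
        seen[digest] = msg


m1, m2 = find_collision()
print(m1, m2, truncated_hash(m1).hex())
```

By the pigeonhole principle the loop must stop after at most 2^24 + 1 messages, but in practice it stops far earlier, around 2^12.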

The Random Oracle Paradigm

In the past, a common design paradigm was to take some number-theoretic primitive and combine it with a hash function to build a cryptosystem; powerful hash functions such as SHA-1 and MD5 were readily available. The properties of these hash functions are modeled by the random oracle model (ROM), in which such primitives can be used to design provably secure cryptosystems. The idea is to assume that all parties, good and bad alike, have access to a random oracle. A random oracle is an oracle that, when queried, replies with a random response, subject to the condition that on a repeated query it gives the same response. Such an oracle indeed has the properties that we require from a hash function.
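In security proofs a random oracle is usually simulated by lazy sampling: answers are drawn at random on first use and recorded in a table, which enforces the consistency requirement for repeated queries. The minimal Python sketch below (class and method names are illustrative) shows this bookkeeping. Because the simulator owns the table, it can also choose ("program") the answers, which is what the forking argument relies on.

```python
import secrets


class RandomOracle:
    """Lazily sampled random oracle with fixed-length outputs."""

    def __init__(self, out_bytes: int = 32):
        self.out_bytes = out_bytes
        self.table: dict[bytes, bytes] = {}  # every answer given so far

    def query(self, x: bytes) -> bytes:
        # New query: draw a fresh uniformly random answer and remember it.
        if x not in self.table:
            self.table[x] = secrets.token_bytes(self.out_bytes)
        # Repeated query: return the recorded answer, as the definition requires.
        return self.table[x]


H = RandomOracle()
assert H.query(b"message") == H.query(b"message")
```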

The Forking Lemma

The adversary considered is one that is able to mount an adaptive chosen-message attack against the scheme. The reduction uses the adversary to recover the discrete logarithm of the public key that it attacks. This raises the problem of responding to the adversary’s signing queries without knowledge of the private signing key. It turns out that these queries can be simulated without knowledge of the key if the hash function is modeled as a random oracle. The adversary is assumed to be a probabilistic polynomial-time algorithm that runs on a random input. The reduction works by running the adversary many times with the same input, but each time making it interact with a different simulation of the random oracle. This method is called the oracle replay attack.

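In the oracle replay attack, the adversary is run until a successful forgery is produced. The simulator then runs the adversary again in the same way (with the same random input) until it makes a certain query, namely the one used in the forgery. At this point, it chooses a new random response to that query. This causes a fork in the execution of the adversary: if the adversary succeeds again, the two forgeries correspond to different oracle responses for the same query, which allows the discrete logarithm to be computed. This describes the intuition behind a forking lemma argument.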
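For Schnorr-type signatures, the final step of the forking argument is simple algebra: two valid forgeries with the same commitment r = g^k but different oracle answers e1 ≠ e2 satisfy s1 - s2 ≡ x(e1 - e2) (mod q), so the private key x can be solved for. The Python sketch below shows only this extraction step, using a toy group (p = 2039, q = 1019, g = 4, chosen for illustration) and hard-coding two challenge values in place of a re-run adversary; the function names are hypothetical.

```python
import secrets

# Toy Schnorr group (illustrative only): p = 2q + 1, g = 4 generates the order-q subgroup.
P, Q, G = 2039, 1019, 4


def keygen():
    x = secrets.randbelow(Q - 1) + 1   # private key in [1, q-1]
    return x, pow(G, x, P)             # public key y = g^x


def respond(x: int, k: int, e: int) -> int:
    """Schnorr response s = k + x*e mod q for commitment r = g^k and challenge e = H(m, r)."""
    return (k + x * e) % Q


def verify(y: int, r: int, e: int, s: int) -> bool:
    return pow(G, s, P) == (r * pow(y, e, P)) % P


def extract_key(e1: int, s1: int, e2: int, s2: int) -> int:
    """Two valid forgeries sharing r but with e1 != e2 reveal x = (s1 - s2)/(e1 - e2) mod q."""
    return ((s1 - s2) * pow((e1 - e2) % Q, -1, Q)) % Q


# The fork: same commitment r, two different answers to the same oracle query.
x, y = keygen()
k = secrets.randbelow(Q)
r = pow(G, k, P)
e1, e2 = 17, 404
s1, s2 = respond(x, k, e1), respond(x, k, e2)
assert verify(y, r, e1, s1) and verify(y, r, e2, s2)
assert extract_key(e1, s1, e2, s2) == x
```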

4.5 Open Problems in Provable Security

Finding ways to assure provable security in cryptographic schemes is still an important target for security research in the EU. In the following, a set of open problems is listed which are actively being researched by AZTEC of ECRYPT.

Oracle Model

In the light of several results, the use of the random oracle model (ROM) is not entirely satisfactory. Separation results show that there exist ROM-secure encryption or signature schemes that become insecure under any instantiation of the random oracle with a concrete hash function. One open question is whether all such results must rely on contrived examples. If this turned out to be the case, and one could formalize a suitable notion of contrivance, one could determine when a proof in the ROM applies in the real world.

A general open problem is to construct signature and encryption schemes which are secure in the standard model (without random oracles) but which are also efficient. A major open problem is to construct such an encryption scheme which is based on a computational problem such as the RSA problem or the Diffie-Hellman problem. A similar problem is to construct a signature scheme based on either the RSA problem or the standard discrete logarithm problem (as opposed to the computational Diffie-Hellman problem).

The ROM, the generic group model and the ideal cipher model all work by assuming that a given component of the system behaves in an ideal way: a hash function in the ROM, a group in the generic group model and a symmetric cipher in the ideal cipher model. It is natural to ask about the relationship between these models. For example, can one interpret the generic group model and the ideal cipher model as specific instances of the ROM? Alternatively, is there a separation between these models?

Composability of Schemes and Protocols

In hybrid encryption, the idea is to use an asymmetric key encapsulation mechanism (KEM) to encrypt a key for a symmetric data encapsulation mechanism (DEM). Thus one wishes to build schemes whose security is guaranteed by the security of their simple components. It would be interesting to investigate whether such a design philosophy can be extended to other primitives. Similarly, higher-level protocols are made up of smaller sub-protocols, for example homomorphic encryption and commitment schemes. In this context, it is important to understand how components that are provably secure may interact badly in higher-level protocols to create weaknesses.

Formal Security Proofs

Many existing schemes do not yet have any formal security proofs, in particular those based on the problem of hidden number fields (HNFs) or hidden field equations (HFEs). The main impediment to such proofs is the difficulty of formally defining hard problems on which to base cryptosystems. Finding formal security proofs is a great challenge for security research, as they could lead to methods for the formal verification of security properties.

Two-Party and Group Key Agreement

Key agreement is one of the most important asymmetric cryptographic primitives. The idea was to take a more modular approach to the design and analysis of protocols, which culminated in the notion of universal composability. This is the strongest notion, since it models the security of a protocol when composed with arbitrary other protocols.

Group key agreement schemes allow a set of users to agree on a common (secret) value from which they can easily derive a session key. These protocols are arguably extremely useful in many group-oriented scenarios, such as video-conferencing or secure replicated databases, to name a few, as they allow efficient implementations of secure multicast channels. A protocol is considered secure if an adversary with complete control over the communication network remains unable to distinguish the real key from a random one. In addition, it should offer protection against malicious intruders or parties who arbitrarily deviate from the protocol.

Password Based Key Agreement

In one model that is used at present, an adversary is given a series of oracles that model the various attacks that it may have at its disposal (on-line password guessing, passive eavesdropping etc.); its challenge is to distinguish a session key established using the protocol from a random string.

From a constructive perspective, there are several efficient schemes in the random oracle model, based on various computational assumptions (including the Diffie-Hellman problem, the RSA problem and integer factoring). In the standard model, schemes require decisional problems, and their computational overhead is greater than that of their ROM counterparts.

In a three-party password-based key agreement, the users trying to establish a session key do not need to share a password directly among themselves, but only with a trusted server. This scenario is of great practical interest, as users are only required to memorize a single password. To date, only a few schemes are known for this scenario, and none of them is provably secure. It should be possible to construct such protocols from any standard two-party password-based key agreement.

Group schemes for password-based key agreement are designed to provide a pool of players communicating over a public network, and sharing just a human-memorable password, with a session key (e.g., the key is used for multicast data integrity and confidentiality). The fundamental security goal to achieve in this scenario is security against dictionary attacks.

Side Channel Protection and Provable Security

There are two potential avenues for research in this area: the first is based on extending the models used in provable security to include issues such as the adversary learning extra information from physical side channels; the second, as yet unexplored, is based on the concept of obfuscation. Regarding the second avenue, it is known that a general obfuscator cannot exist; however, obfuscators may exist for particular algorithms, for example a given encryption or decryption algorithm.

Provable Security in the Presence of Quantum Adversaries

A major problem with existing practical schemes for public key encryption and digital signatures is that they depend on problems that could become tractable through future developments in quantum information processing. A major open problem arising from this is to build new signature and encryption schemes that do not rely on number-theoretic problems, many of which appear to be easy on a quantum computer. Even in the absence of quantum computers, schemes based on non-number-theoretic constructions would protect against any significant advance in classical algorithms or implementation techniques for these number-theoretic problems.

Further Issues

Almost all security results are based on complexity-theoretic considerations within the standard Turing machine/von Neumann model of computing. How to account for possible attacks on systems using novel forms of computing therefore remains an open problem.

Another open question is how to produce a provably secure public key encryption scheme whose security is based on an NP-complete problem.

4.6 Additional Requirements

In addition to pure security requirements (e.g., provable security), there may be additional requirements caused by constraints on the available resources. This is especially relevant when smart devices are used for cryptographic operations. Finding ways to meet these additional requirements is also a research target pursued by ECRYPT.

Reduction in Size

The energy used to transmit a single bit using wireless technology may be several orders of magnitude greater than that required for computation. Considering such issues, it is of great practical importance to construct signature schemes whose signatures are as short as possible. Similar questions arise in encryption. Some encryption schemes use a large amount of redundancy in the cipher text to attain provable security. Essentially, this is done to build in a notion of plaintext awareness, so that almost all cipher texts are invalid in some way. This clearly results in a large expansion from plaintext to cipher text. An interesting and important question is how to design secure encryption schemes with little or no redundancy.

Reduction in Time

All known schemes, whether secure in the standard model or in the ROM, have cubic decryption time or cubic encryption time, and sometimes both (complexity being measured in terms of the size of the security parameter). For example, RSA with a short public exponent requires quadratic time to encrypt, but cubic time to decrypt. Schemes based on ElGamal usually require cubic time for both encryption and decryption.
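The quadratic/cubic distinction follows from counting modular multiplications. With schoolbook arithmetic, one multiplication of k-bit numbers costs O(k²). A short exponent such as 65537 needs a constant number of multiplications, giving O(k²) overall, whereas a full-length k-bit exponent needs about 1.5·k multiplications, giving O(k³). The sketch below assumes textbook left-to-right square-and-multiply; real implementations use CRT, windowing or Montgomery arithmetic, which change the constants but not these exponents.

```python
def modexp_with_count(base: int, exp: int, mod: int):
    """Left-to-right square-and-multiply.

    Returns (base**exp % mod, number of modular multiplications performed).
    """
    if exp == 0:
        return 1 % mod, 0
    result = base % mod
    mults = 0
    for bit in bin(exp)[3:]:          # skip the '0b' prefix and the leading 1 bit
        result = (result * result) % mod  # one squaring per remaining bit
        mults += 1
        if bit == "1":
            result = (result * base) % mod  # one extra multiplication per 1 bit
            mults += 1
    return result, mults


n = 2**127 - 1                        # toy modulus, only for counting operations
_, short_e = modexp_with_count(5, 65537, n)
_, long_d = modexp_with_count(5, 2**1023 + 2**511 + 1, n)
print(short_e, long_d)                # constant count vs. roughly the exponent length
```

For e = 65537 (binary 1 followed by fifteen 0s and a final 1) the count is 16 squarings plus 1 multiplication, i.e. 17, independent of the modulus size, while a 1024-bit private exponent needs over a thousand.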

An open problem is to find a provably secure public key encryption scheme whose encryption and decryption times are both quadratic functions of the size of the security parameter. Similarly, the advent of new, small, low-powered computing devices such as RFID tags creates a need for secure, low-cost signing algorithms. In such a setting, a high cost for verification can be accepted, but a low cost for signing is crucial.

4.7 FP7 Projects with Non-algorithmic Research Targets

ECRYPT and ECRYPT II concentrate strongly on the properties of cryptographic algorithms. Besides these well-known NoEs, many new projects were initiated under FP7 in 2008 that go beyond this subject and also cover several non-algorithmic aspects as well as non-classical cryptographic methods such as quantum cryptography. The following gives an overview of these projects and their major research targets.

Computer Aided Cryptography Engineering (CACE)

The CACE¹ project deals with the secure and efficient design of cryptographic hardware or software solutions based on existing algorithms. It is a three-year collaborative project led by the Austrian company Technikon.

Development of hardware devices and software products is facilitated by a design flow and a set of tools (e.g., compilers and debuggers) which automate tasks normally performed by experienced, highly skilled developers. However, in both the hardware and the software case the tools are generic: they seldom provide specific support for a particular domain. The goal of this project is to design, develop and deploy a toolbox that supports the specific domain of cryptographic software engineering. Developing cryptographic software is ordinarily a huge challenge: security and trust are mission-critical, and modern applications processing sensitive data typically require the deployment of sophisticated cryptographic techniques.

The proposed toolbox will allow non-experts to develop high-level cryptographic applications and business models by means of cryptography-aware high-level programming languages and compilers. Describing applications in this way will allow automatic analysis and transformation of cryptographic software in order to detect security-critical implementation failures (e.g., vulnerabilities to software- and hardware-based side-channel attacks) when realizing low-level cryptographic primitives and protocols. The end result will be better-quality, more robust software at much lower cost. This provides a clear economic benefit to European industry in the short term and better positions it to deal with future roadblocks to ICT development in the longer term.
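A classic software side-channel flaw of the kind such automatic analysis aims to detect is a secret comparison that exits at the first mismatching byte, so that its running time reveals how much of a guess (e.g. a MAC tag) is correct. The sketch below only illustrates this class of flaw; it is not output of, or an API from, the CACE toolbox, and the function names are hypothetical. Note that Python gives no hard timing guarantees, so the code shows the pattern rather than a verified constant-time implementation.

```python
import hmac


def leaky_equal(a: bytes, b: bytes) -> bool:
    """Vulnerable: returns at the first differing byte, so timing leaks the match length."""
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True


def ct_equal_manual(a: bytes, b: bytes) -> bool:
    """Accumulates all byte differences before deciding, with no early exit on content."""
    if len(a) != len(b):
        return False
    diff = 0
    for x, y in zip(a, b):
        diff |= x ^ y
    return diff == 0


def ct_equal_stdlib(a: bytes, b: bytes) -> bool:
    """Standard-library comparison designed to avoid content-based short-circuiting."""
    return hmac.compare_digest(a, b)


tag = bytes.fromhex("a3f1c2d4")
print(leaky_equal(tag, tag), ct_equal_manual(tag, b"\x00" * 4), ct_equal_stdlib(tag, tag))
```

All three functions return the same results; they differ only in whether the execution path depends on the secret contents, which is exactly the property that is invisible to functional testing and therefore benefits from tool support.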

Cryptography on Non-Trusted Machines (CNTM)

Besides the security properties of cryptographic algorithms, trust in the devices used to perform cryptographic operations is also an important aspect. The project CNTM², an ERC Starting Grant headed by the University of Rome, is about the design of cryptographic schemes that are secure even if implemented on insecure devices. The motivation for this problem comes from the observation that most real-life attacks on cryptographic devices do not break their mathematical foundations, but exploit vulnerabilities of their implementations. This concerns both cryptographic software executed on PCs (which can be attacked by viruses) and hardware implementations (which can be subject to side-channel attacks). Traditionally, fixing this problem was left to