Rise of High-Capacity Data Center Interconnect in Hyper-Scale Service Provider Systems

Background

The growth of cloud-based services in consumer and business applications during the past five years has been spectacular, and projections indicate that this trajectory will continue over the next five years. By 2019 mobile network traffic is forecast to increase tenfold. Half of this increase will be in video services, and an additional 10 percent will be in social networking applications. At the same time, business cloud computing services are expected to grow at an average annual rate of 40 percent. By 2019 there will be 60 percent more data centers in the world’s metropolitan areas than there are today, and data center interconnect volumes will increase by more than 400 percent.

During this initial cycle of adoption, innovation in cloud provider infrastructure has generally focused on systems running within their data center sites. For example, a top priority has been to dramatically improve the efficiency of computing platforms. In 2014 we saw significant crossover in server NICs from 1 GbE to 10 GbE. Virtual machine solutions and distributed application architectures such as Hadoop have been at the forefront of these developments. In parallel, the networks inside data centers have dramatically expanded their capacity and agility. As server NIC capacity has increased, cost-effective top-of-rack switches have gained 40 Gb/s and, in some cases, 100 Gb/s connections. Pundits expect 25 Gb/s server NICs to become available in 2016, further increasing server output. Vendors will continue to deliver architectures that drive latency down and scalability up, and providers will increase their adoption of software that automates resource allocation and service deployment on top of high-performance infrastructures. As the popularity of cloud-based services has surged, a new category of requirements has emerged, focused on the growing volumes of traffic flowing between cloud providers’ data centers, particularly in the metro.

Report Highlights

By 2019 there will be 60 percent more data centers in the world’s metropolitan areas than there are today, and data center interconnect volumes will increase by more than 400 percent.

DCI capacity between cloud provider sites ranges from tens of Tb/s at medium-scale sites to 250 Tb/s or more at hyper-scale provider sites.

A new category of DCI solution is emerging to meet cloud providers’ requirements.

Small form factor, high-capacity, stackable platforms compatible with cloud providers’ elastic resource provisioning models are at the forefront of these developments.

High-capacity point-to-point and shared network deployments will each be used, depending on the use case and the operator.

Since the initial development of cloud-based services, the industry has recognized the need for data center interconnect (DCI). In the initial phases, providers deployed DCI solutions connecting end customer data centers to service providers’ data centers for offerings such as Infrastructure as a Service, hybrid cloud, Platform as a Service, and Software as a Service solutions. DCI has also been used to connect ecosystem partners with service providers’ data centers, for example in content distribution and compound application services.

New requirements for DCI have grown out of operators’ need to deploy very high-capacity, high-speed, efficient transport between their own data center sites. Customers frequently report that their DC-DC traffic is growing faster than their DC-to-user traffic. The behavior of and demand for cloud-based applications have forced operators to deploy highly interconnected internal data center sites, and their need for DCI networks to support those applications has evolved alongside.

The following table describes the mix of data center interconnect solutions for cloud-based services that have evolved:

| Source Data Center | Destination Data Center | Deployment Type |
|---|---|---|
| Enterprise | Cloud SP | Metro or Campus¹ |
| Cloud SP | Same Cloud SP | Metro or Campus |
| Cloud SP | Different Cloud SP | Metro or Campus |
| Enterprise | Cloud SP | Long Haul |
| Cloud SP | Same Cloud SP | Long Haul |
| Cloud SP | Different Cloud SP | Long Haul |

Table 1. Mix of DCI Deployment Types

Cloud providers often lease wavelengths from traditional service providers to satisfy their DCI needs. However, more and more cloud providers are deploying their own optical transport equipment over dark fiber, because this provides superior economics in the face of a dramatic rise in DC-DC traffic. From a transport point of view, these deployments can be implemented using dedicated point-to-point optical links or capacity on a shared optical network (metro or long haul). The choice of implementation depends on the required capacity, agility, available infrastructure, and overall cost.

Understanding that this mix of options has emerged for connecting cloud providers’ data centers in the market overall, let us turn our attention to the developments in hyper-scale providers’ services that are generating the need for a new class of DCI solution.

¹ Campus in this analysis refers to large business campuses in which one or a number of firms have data centers that interconnect with each other. We view them as similar to metro area deployments, with obvious variations in length and precise construction of the links interconnecting the sites.

Two scenarios dominate the emerging requirements. Each illustrates the reasons underpinning the need for new solution designs.

In the first scenario, an operator’s options for expanding data center capacity in a geographic area are constrained by the availability of power and/or space, and the only or most effective way to expand capacity is to build one or more new data centers in the same campus or metropolitan area. For example, in the Bay Area one large Internet content provider has deployed a campus whose buildings are located a few kilometers apart so that each is connected to a separate substation, mitigating the risk of power overload. Although the need for efficiency within each data center site remains, that efficiency must now also be achieved in a platform distributed across a set of metro data center sites, which may be anywhere from a few hundred meters to, more typically, a few kilometers apart. The result is demand for a high-capacity, efficient, and scalable DCI infrastructure that integrates the sites smoothly.

A process called transaction magnification shows clearly why high-capacity DCI matters for operators deploying this kind of infrastructure, and Facebook’s applications² illustrate how it works. Facebook analyzes its processing workloads regularly. One recent measurement showed that a single 1 KB HTTP request spawned more than 35 additional database lookups, more than 300 related back-end RPCs, and a more than 900x increase in machine-to-machine bandwidth within its data centers compared with the traffic traversing the user-facing parts of its network. When such applications are distributed across multiple data centers in a given geographic area, the sites clearly need efficient, high-capacity interconnection. This model of distributed computing across multiple data center buildings will only continue to grow.
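To see how magnification turns into a DCI requirement, the following minimal sketch scales user-facing traffic by the 900x machine-to-machine factor from the Facebook measurement and estimates how much of the resulting internal traffic crosses between buildings. The cross-building share is our own simplifying assumption, not part of the measurement: it supposes each machine-to-machine flow is equally likely to terminate in any of N buildings, so (N-1)/N of it leaves the originating building. The function names are hypothetical.

```python
# Illustrative model of transaction magnification spilling onto DCI links.
# ASSUMPTION: machine-to-machine peers are spread uniformly across buildings.

MAGNIFICATION = 900  # machine-to-machine vs. user-facing bandwidth (Facebook figure, a lower bound)


def internal_traffic_gbps(user_facing_gbps: float, magnification: float = MAGNIFICATION) -> float:
    """Machine-to-machine traffic generated by a given user-facing load."""
    return user_facing_gbps * magnification


def cross_building_fraction(num_buildings: int) -> float:
    """Share of flows whose peer sits in another building under uniform placement."""
    if num_buildings < 1:
        raise ValueError("need at least one building")
    return (num_buildings - 1) / num_buildings


def dci_traffic_gbps(user_facing_gbps: float, num_buildings: int,
                     magnification: float = MAGNIFICATION) -> float:
    """Machine-to-machine traffic that must cross DCI links between buildings."""
    return internal_traffic_gbps(user_facing_gbps, magnification) * cross_building_fraction(num_buildings)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(f"{n} building(s): {dci_traffic_gbps(1.0, n):.1f} Gb/s of DCI traffic per 1 Gb/s user-facing")
```

Under these assumptions, 1 Gb/s of user-facing traffic spread across four buildings produces 675 Gb/s of inter-building traffic, and the share approaches the full 900x as buildings are added. Real schedulers try to keep chatty workloads together, so the uniform-placement figure is illustrative only.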

In the second scenario, an operator places large-scale data centers in distant, rural locations to improve economics (power, cooling, and space) or to meet regulatory requirements and/or performance and proximity requirements for users. For example, multiple data centers have now been deployed in Iowa, drawn by large tracts of available land and economic incentives. Large data centers are being deployed near the Arctic Circle in Luleå, Sweden, both for the available land and for the cost effectiveness of air cooling in that region. Coverage areas for these data centers are generally very large, such as the Eastern or Western U.S., Western Europe, South Asia, China, or Latin America. Operators deploying data centers in this manner include web-scale application providers such as Google and Facebook; large-scale cloud IT providers such as Amazon Web Services and Microsoft Azure; and regional providers of cloud-based IT such as Pacnet, Internap, Equinix, Numergy, and COLT.

Google’s internal B4 WAN³ provides a dramatic example of the use of high-capacity, dedicated DCI between widely distributed internal sites. The B4 WAN carries traffic only between Google’s data center sites, is optimized for that purpose, and is separate from its customer-facing access network infrastructure.

² Najam Ahmad, Facebook, Executive Forum Keynote, March 21, 2013.

To produce the B4 design, Google analyzed the communication patterns of its applications over an extended period. Across search, email, chat, video upload/download, and other applications, Google identified needs for consistent content across its data center sites, for high-quality experiences across its application portfolio, and for handling its own versions of the magnification effect efficiently across locations. Together these needs led to the design of a data center interconnect network of very large scale with specific engineering requirements. Traffic between its data center sites is now growing faster than its user-facing traffic, though both are large, and the volumes carried between its data center sites exceed the volumes flowing between its data centers and its users. The efficiency of the B4 DCI network is a fundamental enabler of Google’s ability to innovate and deliver its application portfolio globally; the Google Instant search enhancement is a recent example of that relationship.

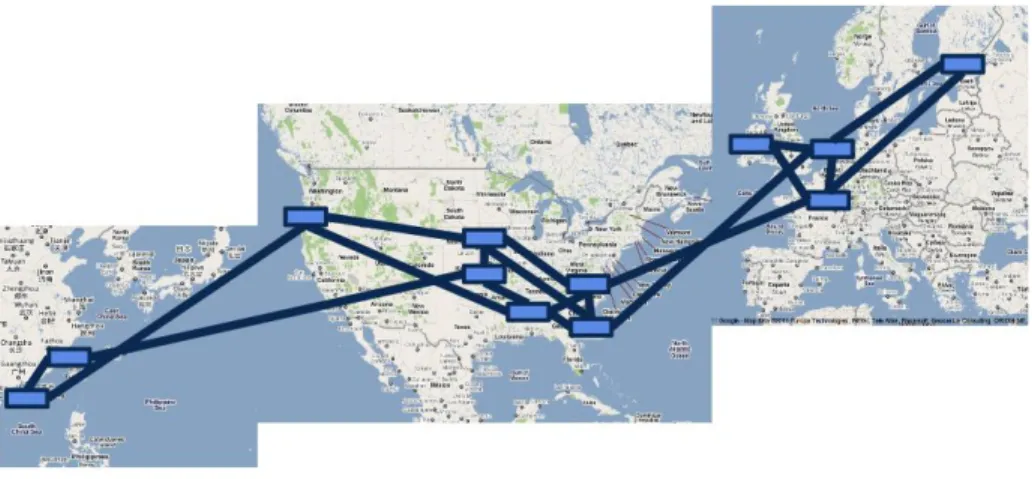

A view of the B4 topology as reported by Google on deploying B4 at the ACM Sigcomm 2013 conference in Hong Kong is shown in the following figure:

Figure 1. Google’s B4 DCI WAN as of 2011

Of note is that both types of high-capacity DCI solution (those addressing metro/campus connectivity and those addressing long-haul connectivity) are deployed in the B4 WAN. This underscores that hyper-scale providers often have to optimize along both dimensions (metro and long haul) concurrently to meet their economic, scalability, and application delivery goals.

The two cases we have highlighted (Facebook’s magnification effect and Google’s B4 WAN) are just two of many that illustrate the need for higher capacities and greater efficiency in DCI solutions for large-scale cloud provider infrastructures.

How Big Are the DCI Networks?

Given that cloud operators have special needs to address within their DCI networks, an important question emerges: how large are the capacities required for the new DCI solutions? To examine this, we looked at a range of data center sizes (and use cases) to create a profile of cloud provider DCI bandwidth requirements, summarized in Table 2. We divided cloud provider data centers into hyper-scale, large-scale, and medium-scale classes and focused on parameters with a direct impact on DCI traffic volumes.

| Data Center + DCI Parameters | Hyper-Scale DC | Large-Scale DC | Medium-Scale DC | Notes |
|---|---|---|---|---|
| # Servers inside one building | 200,000 | 100,000 | 50,000 | Hyper-scale can be ~2-3x this number at the extreme |
| % Servers with 10 GbE NICs | 100 | 75 | 50 | |
| % NIC utilization | 35 | 35 | 30 | Can be higher at peak |
| Total server-facing bandwidth used in this DC | 700 Tb/s | 270 Tb/s | 83 Tb/s | Intra-DC, inter-DC, + user-facing |
| % Server traffic used in communication external to DC | 60 | 60 | 60 | Includes DC-DC in SP + user-facing |
| Bandwidth used for DC external | 420 Tb/s | 162 Tb/s | 50 Tb/s | User-facing + DCI |
| % DC external bandwidth used for DCI | 60 | 60 | 60 | |
| Total DCI bandwidth required | 252 Tb/s | 97 Tb/s | 30 Tb/s | |
| # 100G DCI ports required for this single DC building | 2,520 | 1,000 | 300 | |

Table 2. Profile of Cloud Provider DCI Bandwidth Requirements
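The arithmetic behind Table 2 can be reproduced with a short calculation. This sketch is our reconstruction, not the report’s stated method: it counts 10 Gb/s per 10 GbE NIC and, for servers not on 10 GbE, assumes 1 Gb/s per NIC (an assumption that matches the table’s large- and medium-scale totals to within rounding). It then applies NIC utilization, the 60 percent external share, and the 60 percent DCI share, and divides by 100 Gb/s per port. Exact fractions are used to avoid floating-point rounding; all names are illustrative.

```python
# Reconstruction of the Table 2 arithmetic (a sketch, not the report's own model).
# ASSUMPTION: servers without 10 GbE NICs are counted at 1 Gb/s.
import math
from dataclasses import dataclass
from fractions import Fraction

GBPS_PER_PORT = 100  # one 100G DCI port


@dataclass(frozen=True)
class DataCenterProfile:
    name: str
    servers: int
    pct_10gbe: int          # % of servers with 10 GbE NICs
    pct_utilization: int    # average NIC utilization, %
    pct_external: int = 60  # % of server traffic leaving the building
    pct_dci: int = 60       # % of external traffic carried over DCI


def server_bandwidth_gbps(dc: DataCenterProfile) -> Fraction:
    """Utilized server-facing bandwidth (intra-DC, inter-DC, and user-facing)."""
    n_10g = Fraction(dc.servers * dc.pct_10gbe, 100)
    n_1g = dc.servers - n_10g  # assumed to run 1 GbE
    nic_capacity = n_10g * 10 + n_1g * 1
    return nic_capacity * Fraction(dc.pct_utilization, 100)


def dci_bandwidth_gbps(dc: DataCenterProfile) -> Fraction:
    """Share of server traffic that leaves the building and rides on DCI."""
    external = server_bandwidth_gbps(dc) * Fraction(dc.pct_external, 100)
    return external * Fraction(dc.pct_dci, 100)


def ports_100g(dc: DataCenterProfile) -> int:
    """100G ports needed, rounded up to whole ports."""
    return math.ceil(dci_bandwidth_gbps(dc) / GBPS_PER_PORT)


PROFILES = [
    DataCenterProfile("Hyper-scale", 200_000, 100, 35),
    DataCenterProfile("Large-scale", 100_000, 75, 35),
    DataCenterProfile("Medium-scale", 50_000, 50, 30),
]

if __name__ == "__main__":
    for dc in PROFILES:
        total_tbps = float(server_bandwidth_gbps(dc) / 1000)
        dci_tbps = float(dci_bandwidth_gbps(dc) / 1000)
        print(f"{dc.name}: {total_tbps:.2f} Tb/s server-facing, "
              f"{dci_tbps:.2f} Tb/s DCI, {ports_100g(dc)} x 100G ports")
```

Running it reproduces the hyper-scale column exactly (700 Tb/s, 252 Tb/s, 2,520 ports). For the large-scale column it gives 271.25 Tb/s, 97.65 Tb/s, and 977 ports, and for the medium-scale column 82.5 Tb/s, 29.7 Tb/s, and 297 ports, which Table 2 rounds to 270/97/1,000 and 83/30/300.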

Hyper-scale data centers are the largest installations for providers such as Google and Facebook, as well as the largest cloud IT providers such as Amazon Web Services and Microsoft Azure. The amount of DCI required varies per site based on clustering of data centers in a given area, the services supported in a particular data center, etc. At the extreme high end of the range, hyper-scale data centers can house 500,000 or more servers. We used 200,000 as a normative number for the class. Totals for other sites can be increased straightforwardly if their requirements are greater.

Other variables differ between the classes, contributing to the amount of DCI capacity they require. These include the percentage of servers using 10 Gb Ethernet NICs, whose penetration will grow significantly over the next three years, and the utilization of the servers and NICs at a given data center site, which affects the traffic carried in different portions of each data center’s network.

The DCI capacity required in a hyper-scale cloud provider data center is on the order of 2,500 100G ports per site. If 25 such data centers worldwide were outfitted with solutions at this capacity in a single year, that alone would generate demand for roughly 63,000 100G ports (25 × 2,520) in that year for hyper-scale data center sites. From this we can begin to see a profile of the growing demand for high-capacity DCI solutions in cloud operators’ networks. By 2019 we anticipate this demand being measured in hundreds of thousands of high-capacity ports annually. We project a market for DCI transport solutions in the range of $4 billion by 2019.
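The per-site figure scales directly into annual demand. This back-of-envelope sketch assumes, as the discussion of Table 2 suggests, that DCI ports grow linearly with server count when all other ratios are held fixed (exact for the hyper-scale class, where every server has a 10 GbE NIC). The function names are hypothetical, and the 500,000-server case is the extreme size mentioned above, not a forecast.

```python
# Back-of-envelope annual 100G port demand for hyper-scale DCI.
# ASSUMPTION: ports scale linearly with server count, all other Table 2 ratios fixed.

PORTS_PER_SITE_AT_200K = 2_520  # Table 2, hyper-scale column
NORMATIVE_SERVERS = 200_000     # normative hyper-scale building size used in Table 2


def ports_per_site(servers: int) -> int:
    """100G DCI ports for one hyper-scale building, scaled from the Table 2 figure."""
    return PORTS_PER_SITE_AT_200K * servers // NORMATIVE_SERVERS


def annual_port_demand(sites: int, servers_per_site: int = NORMATIVE_SERVERS) -> int:
    """Total ports if `sites` buildings of this size are outfitted in one year."""
    return sites * ports_per_site(servers_per_site)


if __name__ == "__main__":
    print(annual_port_demand(25))           # 25 normative sites
    print(annual_port_demand(25, 500_000))  # 25 sites at the 500,000-server extreme
```

Twenty-five normative sites give 63,000 ports; if the same 25 sites were built at the 500,000-server extreme, the figure rises to 157,500, which shows how quickly annual demand moves into six figures.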

Needed: Designs That Work in the Cloud

In parallel with the capacities required for operators’ DCI solutions, it is important to look at other dimensions of their environments that affect the kinds of solutions they will adopt. Four of these stand out as most important:

Form factor compactness/density

Low power consumption

Operational efficiencies

Programmability and automation in service management

One principle highly valued in cloud provider data centers is making resources available in compact units that can be allocated to small or modest-sized workloads but can also be pooled into larger bundles that collectively support a bigger task. This approach is used heavily in managing compute and storage resources, and it can be applied again to well-defined networking tasks such as server aggregation or deploying large amounts of capacity on a DCI path. It works well from a space management perspective and supports deploying capacity in flexible increments, depending on the application.

Closely related to form factor are the operational efficiencies that make a solution attractive to the operator. The first is efficiency in power consumption. Cloud providers are highly conscious of minimizing power consumption while maximizing the throughput and value of their services. A stackable DCI platform whose design is closer to a server than to a telco central office platform is likely to draw less power per unit of function (say, 100G of transport) than its telco counterpart. It is reasonable to expect that a stackable solution would use at least one-third less power per rack than the corresponding functionality in a telco design. The appeal of this is evident when a hyper-scale cloud provider needs 5 to 10 racks’ worth of capacity.
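The per-rack claim can be turned into a rough worked estimate. Write $N$ for the number of racks of telco-style equipment a deployment would otherwise occupy and $P_{\text{rack}}$ for the power drawn by one such rack (a placeholder symbol; the report gives no wattage), and read the one-third figure as a lower bound on the saving for the same capacity:

$$
P_{\text{saved}} \ge \tfrac{1}{3}\, N\, P_{\text{rack}}, \qquad
N = 5:\; P_{\text{saved}} \ge \tfrac{5}{3}\, P_{\text{rack}} \approx 1.7\, P_{\text{rack}}, \qquad
N = 10:\; P_{\text{saved}} \ge \tfrac{10}{3}\, P_{\text{rack}} \approx 3.3\, P_{\text{rack}}
$$

Under that reading, across 5 to 10 racks a stackable design would free at least the power budget of roughly 1.7 to 3.3 telco racks.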

A second area of efficiencies is simplicity in installation and operation by the data center staff. Given the size of the installations cloud operators run, a premium is placed on simplicity in managing physical deployment and repair as well as in troubleshooting. Having units that install quickly, that can be expanded easily, and that are similar in form and deployment to other platforms with which technicians are familiar, such as servers, are all a plus. Providers generally prefer a rack and stack approach in which a single person can easily install the element, and the unit can then self-configure using embedded software and APIs, leveraging auto-provisioning software of the type already in use for servers and storage.

A final requirement to consider in terms of readiness for the cloud is whether a solution is programmable and ready to integrate into the service automation platforms operators are implementing. Operators use these platforms to turn up services quickly, experiment efficiently with new service offerings, and operate effortlessly at very large scale. If cloud-enabling DCI solutions can interact dynamically with the bulk provisioning, path management, bandwidth management, traffic analysis, and other service automation applications operators want to use, operators will be all the more inclined to deploy them for their DCI requirements.
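As an illustration of what such integration might look like, here is a hypothetical reconciliation step an automation platform could run against a DCI path built from stackable units: given a measured demand, it computes how many units the path should have (with headroom) and how many to add or release. The `DciPath` type, the 20 percent headroom, and the 1,000 Gb/s per-unit capacity are all hypothetical values chosen for the example, not figures from this report or any product.

```python
# HYPOTHETICAL sketch: elastic sizing of a DCI path built from stackable units.
# None of these names or numbers come from a real product or API.
from dataclasses import dataclass


@dataclass
class DciPath:
    a_end: str
    z_end: str
    unit_capacity_gbps: int  # hypothetical transport capacity of one stackable unit
    units: int = 0

    @property
    def capacity_gbps(self) -> int:
        return self.units * self.unit_capacity_gbps


def units_needed(demand_gbps: int, unit_capacity_gbps: int, headroom_pct: int = 20) -> int:
    """Whole units required to carry demand plus headroom (integer ceiling division)."""
    required = demand_gbps * (100 + headroom_pct)
    per_unit = unit_capacity_gbps * 100
    return -(-required // per_unit)


def reconcile(path: DciPath, demand_gbps: int, headroom_pct: int = 20) -> int:
    """Resize the path to match demand; return units added (+) or released (-)."""
    target = units_needed(demand_gbps, path.unit_capacity_gbps, headroom_pct)
    delta = target - path.units
    path.units = target
    return delta


if __name__ == "__main__":
    path = DciPath("dc-a", "dc-b", unit_capacity_gbps=1_000)
    print(reconcile(path, 4_000), path.capacity_gbps)  # demand grows: add units
    print(reconcile(path, 2_500), path.capacity_gbps)  # demand falls: release units
```

In a real deployment the add/release decision would feed the operator’s own provisioning and path-management systems; the point is only that capacity packaged in small, uniform units can be grown and shrunk by software in the same way as compute and storage.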

Fast Developing: New DCI Form Factors for Cloud Connectivity

As demand for their services continues to surge, cloud providers are generating new demands of their own to support the capacity, performance, and economics their offerings require. Because they are steadily deploying new data center capacity, and those data centers need to operate as a well-orchestrated service delivery fabric for the cloud, providers require a fresh generation of data center interconnection network designs to realize their goals.

New DCI solutions need to embrace the fast-moving rack-and-stack operational model that cloud providers already use, delivering the simplicity, economy, and elasticity found in the rest of their infrastructure. If they do, providers can bring new capacity online quickly to interconnect multiple data center buildings and support a rapidly growing distributed computing model in which traffic moves across multiple buildings even in response to a single user request. Design innovations are emerging that provide the convenient rack-and-stack form factors cloud operators prefer, and these form factors will enable the efficiencies crucial to delivering cloud services at scale. Combining this packaging with programmability for service automation will allow high-capacity solutions to participate fully in the intelligent network model that operators want.

Delivering these innovations successfully will allow suppliers and operators alike to continue realizing the remarkable promise of the cloud.

Copyright © 2014 ACG Research. The copyright in this publication or the material on this website (including without limitation the text, computer code, artwork, photographs, images, music, audio material, video material and audio-visual material on this website) is owned by ACG Research. All Rights Reserved.