The Rhythm of Lexical Stress in Prose

Doug Beeferman

School of Computer Science, Carnegie Mellon University, Pittsburgh, PA 15213, USA

dougb+@cs.cmu.edu

Abstract

"Prose rhythm" is a widely observed but scarcely quantified phenomenon. We describe an information-theoretic model for measuring the regularity of lexical stress in English texts, and use it in combination with trigram language models to demonstrate a relationship between the probability of word sequences in English and the amount of rhythm present in them. We find that the stream of lexical stress in text from the Wall Street Journal has an entropy rate of less than 0.75 bits per syllable for common sentences. We observe that the average number of syllables per word is greater for rarer word sequences, and to normalize for this effect we run control experiments to show that the choice of word order contributes significantly to stress regularity, and increasingly with lexical probability.

1 Introduction

Rhythm inheres in creative output, asserting itself as the meter in music, the iambs and trochees of poetry, and the uniformity in distances between objects in art and architecture. More subtly there is widely believed to be rhythm in English prose, reflecting the arrangement of words, whether deliberate or subconscious, to enhance the perceived acoustic signal or reduce the burden of remembrance for the reader or author.

In this paper we describe an information-theoretic model based on lexical stress that substantiates this common perception and relates stress regularity in written speech (which we shall equate with the intuitive notion of "rhythm") to the probability of the text itself. By computing the stress entropy rate for both a set of Wall Street Journal sentences and a version of the corpus with randomized intra-sentential word order, we also find that word order contributes significantly to rhythm, particularly within highly probable sentences. We regard this as a first step in quantifying the extent to which metrical properties influence syntactic choice in writing.

1.1 Basics

In speech production, syllables are emitted as pulses of sound synchronized with movements of the musculature in the rib cage. Degrees of stress arise from variations in the amount of energy expended by the speaker to contract these muscles, and from other factors such as intonation. Perceptually, stress is more abstractly defined, and it is often associated with "peaks of prominence" in some representation of the acoustic input signal (Ochsner, 1989).

Stress as a lexical property, the primary concern of this paper, is a function that maps a word to a sequence of discrete levels of physical stress, approximating the relative emphasis given each syllable when the word is pronounced. Phonologists distinguish between three levels of lexical stress in English: primary, secondary, and what we shall call weak for lack of a better substitute for unstressed. For the purposes of this paper we shall regard stresses as symbols fused serially in time by the writer or speaker, with words acting as building blocks of predefined stress sequences that may be arranged arbitrarily but never broken apart.

The culminative property of stress states that every content word has exactly one primary-stressed syllable, and that whatever syllables remain are subordinate to it. Monosyllabic function words such as "the" and "of" usually receive weak stress, while content words get one strong stress and possibly many secondary and weak stresses.

It has been widely observed that strong and weak stresses tend to alternate at "rhythmically ideal disyllabic distances" (Kager, 1989a). "Ideal" here is a complex function involving production, perception, and many unknowns. Our concern is not to pinpoint this ideal, nor to answer precisely why it is sought by speakers and writers, but to gauge to what extent it is sought.

We seek to investigate, for example, whether the avoidance of primary stress clash, the placement of

nal corpus we find such sentences as "The fol-low-ing is-sues re-cent-ly were filed with the Se-cur-i-ties and Ex-change Com-mis-sion". The phrase "recently were filed" can be syntactically permuted as "were filed recently", but this clashes "filed" with the first syllable of "recently". The chosen sentence avoids consecutive primary stresses. Kager postulates with a decidedly information-theoretic undertone that the resulting binary alternation is "simply the maximal degree of rhythmic organization compatible with the requirement that adjacent stresses are to be avoided" (Kager, 1989a).

Certainly we are not proposing that a hard decision based only on metrical properties of the output is made to resolve syntactic choice ambiguity, in the case above or in general. Clearly semantic emphasis has its say in the decision. But it is our belief that rhythm makes a nontrivial contribution, and that the tools of statistics and information theory will help us to estimate it formally. Words are the building blocks. How much do their selection (diction) and their arrangement (syntax) act to enhance rhythm?

1.2 Past models and quantifications

Lexical stress is a well-studied subject at the intra-word level. Rules governing how to map a word's orthographic or phonetic transcription to a sequence of stress values have been searched for and studied from rule-based, statistical, and connectionist perspectives.

Word-external stress regularity has been denied this level of attention. Patterns in phrases and compound words have been studied by Halle (Halle and Vergnaud, 1987) and others, who observe and reformulate such phenomena as the emphasis of the penultimate constituent in a compound noun (National Center for Supercomputing Applications, for example). Treatment of lexical stress across word boundaries is scarce in the literature, however. Though prose rhythm inquiry is more than a hundred years old (Ochsner, 1989), it has largely been dismissed by the linguistic community as irrelevant to formal models, as a mere curiosity for literary analysis. This is partly because formal methods of inquiry have failed to present a compelling case for the existence of regularity (Harding, 1976).

Past attempts to quantify prose rhythm may be classified as perception-oriented or signal-oriented. In both cases the studies have typically focused on regularities in the distance between peaks of prominence, or interstress intervals, either perceived by a human subject or measured in the signal. The former class of experiments relies on the subjective segmentation of utterances by a necessarily limited number of participants: subjects tapping out the rhythms they perceive in a waveform on a recording device, for example (Kager, 1989b). To say nothing of the psychoacoustic biases this methodology introduces, it relies on too little data for anything but a sterile set of means and variances.

Signal analysis, too, has not yet been applied to very large speech corpora for the purpose of investigating prose rhythm, though the technology now exists to lend efficiency to such studies. The experiments have been of smaller scope and geared toward detecting isochrony, regularity in absolute time. Jassem et al. (Jassem, Hill, and Witten, 1984) use statistical techniques such as regression to analyze the duration of what they term rhythm units. Jassem postulates that speech is composed of extra-syllable narrow rhythm units with roughly fixed duration independent of the number of syllable constituents, surrounded by variable-length anacruses. Abercrombie (Abercrombie, 1967) views speech as composed of metrical feet of variable length that begin with and are conceptually highlighted by a single stressed syllable.

Many experiments lead to the common conclusion that English is stress-timed, that there is some regularity in the absolute duration between strong stress events. In contrast to postulated syllable-timed languages like French in which we find exactly the inverse effect, speakers of English tend to expand and to contract syllable streams so that the duration between bounding primary stresses matches the other intervals in the utterance. It is unpleasant for production and perception alike, however, when too many weak-stressed syllables are forced into such an interval, or when this amount of "padding" varies wildly from one interval to the next. Prose rhythm analysts so far have not considered the syllable stream independent from syllabic, phonemic, or interstress duration. In particular they haven't measured the regularity of the purely lexical stream. They have instead continually re-answered questions concerning isochrony.

Given that speech can be divided into interstress units of roughly equal duration, we believe the more interesting question is whether a speaker or writer modifies his diction and syntax to fit a regular number of syllables into each unit. This question can only be answered by a lexical approach, an approach that pleasingly lends itself to efficient experimentation with very large amounts of data.

2 Stress entropy rate

We regard every syllable as having either strong or weak stress, and we employ a purely lexical, context-independent mapping, a pronunciation dictionary,¹ to tell us which syllables in a word receive which level of stress. We base our experiments on a binary-valued symbol set $\Sigma_1 = \{W, S\}$ and on a ternary-valued symbol set $\Sigma_2 = \{W, S, P\}$, where 'W' indicates weak stress, 'S' indicates strong stress, and 'P' indicates a pause. Abstractly the dictionary maps words to sequences of symbols from {primary, secondary, unstressed}, which we interpret by downsampling to our binary system: primary stress is strong, non-stress is weak, and secondary stress ('2') we allow to be either weak or strong depending on the experiment we are conducting.

¹ We use the 116,000-entry CMU Pronouncing Dictionary version 0.4 for all experiments in this paper.

Figure 2: A 5-gram model viewed as a first-order Markov chain

We represent a sentence as the concatenation of the stress sequences of its constituent words, with 'P' symbols (for the $\Sigma_2$ experiments) breaking the stream where natural pauses occur.
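The mapping from words to a stress stream can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used for the experiments: the toy lexicon, the digit convention (1 = primary, 2 = secondary, 0 = unstressed), the pause token list, and the names `TOY_DICT`, `PAUSE_TOKENS`, and `stress_stream` are all hypothetical. The pattern for "separates" follows Figure 1; the others are illustrative guesses.

```python
# Toy lexicon: word -> per-syllable stress levels.
# Assumed convention: 1 = primary, 2 = secondary, 0 = unstressed.
# Entries are illustrative only, not copied from a real dictionary.
TOY_DICT = {
    "the": [0],
    "following": [1, 0, 0],
    "issues": [1, 0],
    "recently": [1, 0, 0],
    "were": [0],
    "filed": [1],
    "separates": [1, 0, 2],
}

# Hypothetical set of tokens treated as pauses in the ternary experiments.
PAUSE_TOKENS = {",", ";", ":", "--", "...", ".", "?", "!"}


def stress_stream(tokens, lexicon, secondary="W", keep_pauses=False):
    """Concatenate per-word stress patterns into one symbol sequence.

    `secondary` chooses whether secondary stress maps to "W" or "S".
    Returns None if any word is missing from the lexicon, mirroring the
    decision to discard sentences that the dictionary does not cover.
    """
    mapping = {1: "S", 0: "W", 2: secondary}
    out = []
    for tok in tokens:
        if tok in PAUSE_TOKENS:
            if keep_pauses:
                out.append("P")
            continue
        pattern = lexicon.get(tok.lower())
        if pattern is None:
            return None
        out.extend(mapping[level] for level in pattern)
    return out


tokens = "The following issues recently were filed .".split()
binary = stress_stream(tokens, TOY_DICT)
ternary = stress_stream(tokens, TOY_DICT, keep_pauses=True)
```

Words are never split: each contributes its whole pattern, so only their order and selection shape the resulting stream.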

Traditional approaches to lexical language modeling provide insight on our analogous problem, in which the input is a stream of syllables rather than words and the values are drawn from a vocabulary $\Sigma$ of stress levels. We wish to create a model that yields approximate values for probabilities of the form $p(s_k \mid s_0, s_1, \ldots, s_{k-1})$, where $s_i \in \Sigma$ is the stress symbol at syllable $i$ in the text. A model with separate parameters for each history is prohibitively large, as the number of possible histories grows exponentially with the length of the input; and for the same reason it is impossible to train on limited data. Consequently we partition the history space into equivalence classes, and the stochastic n-gram approach that has served lexical language modeling so well treats two histories as equivalent if they end in the same $n - 1$ symbols.

As Figure 2 demonstrates, an n-gram model is simply a stationary Markov chain of order $k = n - 1$, or equivalently a first-order Markov chain whose states are labeled with tuples from $\Sigma^k$.

To gauge the regularity and compressibility of the training data we can calculate the entropy rate of the stochastic process as approximated by our model, an upper bound on the expected number of bits needed to encode each symbol in the best possible encoding. Techniques for computing the entropy rate of a stationary Markov chain are well known in information theory (Cover and Thomas, 1991). If $\{X_i\}$ is a Markov chain with stationary distribution $\mu$ and transition matrix $P$, then its entropy rate is $H(X) = -\sum_{i,j} \mu_i P_{ij} \log P_{ij}$.
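As a small worked instance of this formula, the sketch below computes the entropy rate of a chain given directly as a transition matrix. It is an illustration only: the function names are hypothetical, and the stationary distribution is approximated by repeated multiplication from a uniform start, which is assumed to converge for the small chains shown here (it need not for every periodic chain).

```python
import math


def stationary_distribution(P, iterations=1000):
    """Approximate mu with mu P = mu by repeated multiplication."""
    size = len(P)
    mu = [1.0 / size] * size
    for _ in range(iterations):
        mu = [sum(mu[i] * P[i][j] for i in range(size)) for j in range(size)]
    return mu


def markov_entropy_rate(P):
    """H(X) = -sum_{i,j} mu_i P_ij log2 P_ij, in bits per symbol."""
    mu = stationary_distribution(P)
    return -sum(
        mu[i] * p * math.log2(p)
        for i, row in enumerate(P)
        for p in row
        if p > 0  # terms with P_ij = 0 contribute nothing
    )


# Strict alternation between two states is perfectly predictable.
alternating = markov_entropy_rate([[0.0, 1.0], [1.0, 0.0]])
# A fair coin at every step carries one full bit per symbol.
coin = markov_entropy_rate([[0.5, 0.5], [0.5, 0.5]])
```

The two extremes bracket the binary case: strict alternation gives zero bits per symbol and independent fair choices give one bit.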

The probabilities in $P$ can be trained by accumulating, for each $(s_1, s_2, \ldots, s_k) \in \Sigma^k$, the k-gram count $C(s_1, s_2, \ldots, s_k)$ in the training data, and normalizing by the (k - 1)-gram count $C(s_1, s_2, \ldots, s_{k-1})$. The stationary distribution $\mu$ satisfies $\mu P = \mu$, or equivalently $\mu_k = \sum_j \mu_j P_{j,k}$ (Parzen, 1962). In general finding $\mu$ for a large state space requires an eigenvector computation, but in the special case of an n-gram model it can be shown that the value in $\mu$ corresponding to the state $(s_1, s_2, \ldots, s_k)$ is simply the k-gram frequency $C(s_1, s_2, \ldots, s_k)/N$, where $N$ is the number of symbols in the data.² We therefore can compute the entropy rate of a stress sequence in time linear in both the amount of data and the size of the state space. This efficiency will enable us to experiment with values of n as large as seven; for larger values the amount of training data, not time, is the limiting factor.
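The counting procedure can be sketched in a few lines. This is a hedged illustration rather than the original implementation: `stress_entropy_rate` is a hypothetical name, history counts are obtained by summing the n-gram counts that share a history (an assumption that keeps each conditional distribution normalized near sentence ends), and the n-gram relative frequency plays the role of the stationary weight times the transition probability.

```python
import math
from collections import Counter


def stress_entropy_rate(sentences, n):
    """Estimate bits per symbol from n-gram counts kept within sentences."""
    ngram_counts = Counter()
    for symbols in sentences:
        # Only windows that fit inside one sentence are counted.
        for i in range(len(symbols) - n + 1):
            ngram_counts[tuple(symbols[i:i + n])] += 1

    # Count of each (n-1)-symbol history, summed over the same windows so
    # that the conditional probabilities for each history sum to one.
    history_counts = Counter()
    for gram, count in ngram_counts.items():
        history_counts[gram[:-1]] += count

    total = sum(ngram_counts.values())
    if total == 0:
        raise ValueError("no n-grams of the requested order in the data")

    rate = 0.0
    for gram, count in ngram_counts.items():
        p_joint = count / total                     # history together with next symbol
        p_next = count / history_counts[gram[:-1]]  # transition probability
        rate -= p_joint * math.log2(p_next)
    return rate


alternating = [list("SW" * 50)]
paired = [list("SSWW" * 25)]
```

On the strictly alternating stream a bigram model already yields zero bits per symbol. On the "SSWW" stream a bigram model sees nearly one bit per symbol while a trigram model sees none, a toy version of how longer histories expose dependencies that shorter ones miss.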

3 Methodology

The training procedure entails simply counting the number of occurrences of each n-gram for the training data and computing the stress entropy rate by the method described. As we treat each sentence as an independent event, no cross-sentence n-grams are kept: only those that fit between sentence boundaries are counted.

3.1 The meaning of stress entropy rate

We regard these experiments as computing the entropy rate of a Markov chain, estimated from training data, that approximately models the emission of symbols from a random source. The entropy rate bounds how compressible the training sequence is, and not precisely how predictable unseen sequences from the same source would be. To measure the efficacy of these models in prediction it would be necessary to divide the corpus, train a model on one subset, and measure the entropy rate of the other with respect to the trained model. Compression can take place off-line, after the entire training set is read, while prediction cannot "cheat" in this manner.

But we claim t h a t our results predict how effective prediction would be, for the small state space in our Markov model and the huge a m o u n t of training d a t a translate to very good state coverage. In language modeling, unseen words and unseen n-grams are a serious problem, and are typically c o m b a t t e d with smoothing techniques such as the backoff model and the discounting formula offered by G o o d and Tur- ing. In our case, unseen "words" never occur, for

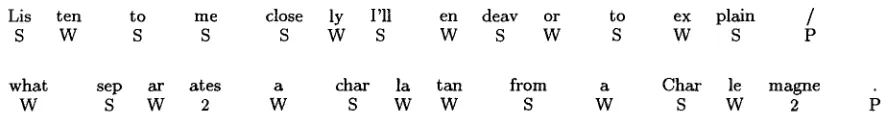

[image:3.612.113.259.75.224.2]Lis ten to me close ly I'll en deav or to ex plain /

S W S S S W S W S W S W S P

what sep ar ates a char la tan from a Char le magne

W S W 2 W S W W S W S W 2 P

Figure 1: A song lyric exemplifies a highly regular stress s t r e a m (from the musical

Pippin

by StephenSchwartz.)

the tiniest of realistic training sets will cover the bi- n a r y or t e r n a r y vocabulary. Coverage of the n - g r a m set is complete for our prose training texts for n as high as eight; nor do singleton states (counts t h a t occur only once), which are the bases of Turing's es- t i m a t e of the frequency of untrained states in new data, occur until n = 7.

3.2 Lexicalizing stress

Lexical stress is the "backbone of speech rhythm" and the primary tool for its analysis (Baum, 1952). While the precise acoustical prominences of syllables within an utterance are subject to certain word-external hierarchical constraints observed by Halle (Halle and Vergnaud, 1987) and others, lexical stress is a local property. The stress patterns of individual words within a phrase or sentence are generally context independent.

One source of error in our method is the ambiguity for words with multiple phonetic transcriptions that differ in stress assignment. Highly accurate techniques for part-of-speech labeling could be used for stress pattern disambiguation when the ambiguity is purely lexical, but often the choice, in both production and perception, is dialectal. It would be straightforward to divide among all alternatives the count for each n-gram that includes a word with multiple stress patterns, but in the absence of reliable frequency information to weight each pattern we chose simply to use the pronunciation listed first in the dictionary, which is judged by the lexicographer to be the most popular. Very little accuracy is lost in making this assumption. Of the 115,966 words in the dictionary, 4635 have more than one pronunciation; of these, 1269 have more than one distinct stress pattern; of these, 525 have different primary stress placements. This smallest class has a few common words (such as "refuse" used as a noun and as a verb), but most either occur infrequently in text (obscure proper nouns, for example), or have a primary pronunciation that is overwhelmingly more common than the rest.

4 Experiments

The efficiency of the n-gram training procedure allowed us to exploit a wealth of data, over 60 million syllables, from 38 million words of Wall Street Journal text. We discarded sentences not completely covered by the pronunciation dictionary, leaving 36.1 million words and 60.7 million syllables for experimentation.

Our first experiments used the binary $\Sigma_1$ alphabet. The maximum entropy rate possible for this process is one bit per syllable, and given the unigram distribution of stress values in the data (55.2% are primary), an upper bound of slightly over 0.99 bits can be computed. Examining the 4-gram frequencies for the entire corpus (Figure 3a) sharpens this substantially, yielding an entropy rate estimate of 0.846 bits per syllable. Most frequent among the 4-grams are the patterns WSWS and SWSW, consistent with the principle of binary alternation mentioned in section 1.
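The unigram bound quoted above is the entropy of a two-outcome distribution with probabilities 0.552 and 0.448. The short check below reproduces it; the helper name `binary_entropy` is ours, introduced only for illustration.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a two-outcome distribution (p, 1 - p)."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


# 55.2% of syllables carry primary stress in the corpus.
unigram_bound = binary_entropy(0.552)
print(f"{unigram_bound:.3f} bits per syllable")
```

The value comes out just above 0.99 bits, so knowing only the overall proportion of strong syllables saves almost nothing; nearly all of the measured regularity comes from context.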

The 4-gram estimate matches quite closely with the estimate of 0.852 bits that can be derived from the distribution of word stress patterns excerpted in Figure 3b. But both measures overestimate the entropy rate by ignoring longer-range dependencies that become evident when we use larger values of n. For n = 6 we obtain a rate of 0.795 bits per syllable over the entire corpus.

Since we had several thousand times more data than is needed to make reliable estimates of stress entropy rate for values of n less than 7, it was practical to subdivide the corpus according to some criterion, and calculate the stress entropy rate for each subset as well as for the whole. We chose to divide at the sentence level and to partition the 1.59 million sentences in the data based on a likelihood measure suitable for testing the hypothesis from section 1.

A lexical trigram backoff-smoothed language model was trained on separate data to estimate the language perplexity of each sentence in the corpus. Sentence perplexity $PP(S)$ is the inverse of sentence probability normalized for length, $PP(S) = (1/P(S))^{1/|S|}$, where $P(S)$ is the probability of the sentence according to the language model and $|S|$ is its word count. This measure gauges the average "surprise" after revealing each word in the sentence as judged by the trigram model. The question of whether more probable word sequences are also more rhythmic can be approximated by asking whether sentences with lower perplexity have lower stress entropy rate.
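The perplexity definition above is usually evaluated in log space to avoid underflow on long sentences. The following is a minimal sketch assuming the language model supplies one base-2 log probability per word; the function name and input format are assumptions, not part of the original setup.

```python
def sentence_perplexity(word_log2_probs):
    """PP(S) = (1 / P(S)) ** (1 / |S|), computed from per-word log2 probabilities."""
    if not word_log2_probs:
        raise ValueError("empty sentence")
    total_log2 = sum(word_log2_probs)  # log2 P(S)
    return 2.0 ** (-total_log2 / len(word_log2_probs))


# Four words, each assigned probability 1/8 by a hypothetical model.
example = sentence_perplexity([-3.0, -3.0, -3.0, -3.0])
```

When every word has probability 1/8 the perplexity is 8, matching the reading of perplexity as the effective number of equally likely choices per word.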

(a)
WWWW: 0.78%   WSWW: 6.91%   SWWW: 2.96%   SSWW: 3.94%
WWWS: 2.94%   WSWS: 11.00%  SWWS: 7.80%   SSWS: 8.59%
WWSW: 6.97%   WSSW: 6.16%   SWSW: 11.21%  SSSW: 6.25%
WWSS: 3.71%   WSSS: 6.06%   SWSS: 8.48%   SSSS: 6.27%

(b)
S: 45.87%
SW: 18.94%
W: 9.54%
(pattern illegible): .74% (leading digit illegible)
WS: 5.14%
WSW: 4.54%

Figure 3: (a) The corpus frequencies of all binary stress 4-grams (based on 60.7 million syllables), with secondary stress mapped to "weak" (W). (b) The corpus frequencies of the top six lexical stress patterns.

[Plot: Wall Street Journal training symbols (syllables), by perplexity bin; x-axis: language perplexity]

Figure 4: The amount of training data, in syllables, in each perplexity bin. The bin at perplexity level pp contains all sentences in the corpus with perplexity no less than pp and no greater than pp + 10. The smallest count (at bin 990) is 50662.

Each sentence in the corpus was assigned to one of one hundred bins according to its perplexity: sentences with perplexity between 0 and 10 were assigned to the first bin; between 10 and 20, the second; and so on. Sentences with perplexity greater than 1000, which numbered roughly 106 thousand out of 1.59 million, were discarded from all experiments, as 10-unit bins at that level captured too little data for statistical significance. A histogram showing the amount of training data (in syllables) per perplexity bin is given in Figure 4.
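The binning rule can be written down directly. The sketch below is illustrative: the name `perplexity_bin` is hypothetical, and the treatment of exact bin edges (half-open intervals, with a perplexity of exactly 1000 discarded) is an assumption, since the text does not say how boundary values were handled.

```python
def perplexity_bin(perplexity, width=10.0, max_perplexity=1000.0):
    """Return the bin index (0 to 99 by default), or None if discarded.

    Assumption: bins are half-open, [index * width, (index + 1) * width).
    """
    if perplexity < 0 or perplexity >= max_perplexity:
        return None
    return int(perplexity // width)


bins = [perplexity_bin(pp) for pp in (5.0, 10.0, 57.3, 999.9, 1500.0)]
```

With the defaults this gives the one hundred 10-unit bins described in the text; calling it with `width=50.0` gives twenty coarser bins.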

It is crucial to detect and understand potential sources of bias in the methodology so far. It is clear that the perplexity bins are well trained, but not yet that they are comparable with each other. Figure 5 shows the average number of syllables per word in sentences that appear in each bin. That this function is roughly increasing agrees with our intuition that sequences with longer words are rarer. But it biases our perplexity bins at the extremes. Early bins, with sequences that have a small syllable rate per word (1.57 in the 0 bin, for example), are predisposed to a lower stress entropy rate since primary stresses, which occur roughly once per word, are more frequent. Later bins are also likely to be prejudiced in that direction, for the inverse reason: the increasing frequency of multisyllabic words makes it more and more common to transit to a weak-stressed syllable following a primary stress, sharpening the probability distribution and decreasing entropy.

Figure 5: The average number of syllables per word for each perplexity bin.

This is verified when we run the stress entropy rate computation for each bin. The results for n-gram models of orders 3 through 7, for the case in which secondary lexical stress is mapped to the "weak" level, are shown in Figure 6.

All of the rates calculated are substantially less than a bit, but this only reflects the stress regularity inherent in the vocabulary and in word selection, and says nothing about word arrangement. The atomic elements in the text stream, the words, contribute regularity independently. To determine how much is contributed by the way they are glued together, we need to remove the bias of word choice. For this reason we settled on a model size, n = 6, and performed a variety of experiments with both the original corpus and with a control set that contained exactly the same bins with exactly the same sentences, but mixed up. Each sentence in the

[Plot: Wall Street Journal binary stress entropy rates, by perplexity bin, for 3-gram through 7-gram models; x-axis: language perplexity]

Figure 6: n-gram stress entropy rates for $\Sigma_1$, weak secondary stress

corpus were shuffled the same way and sentences differing by only one word were shuffled similarly. This allowed us to keep steady the effects of multiple copies of the same sentence in the same perplexity bin. More importantly, these tests hold everything constant (diction, syllable count, syllable rate per word) except for syntax, the arrangement of the chosen words within the sentence. Comparing the unrandomized results with this control experiment allows us, therefore, to factor out everything but word order. In particular, subtracting the stress entropy rates of the original sentences from the rates of the randomized sentences gives us a figure, relative entropy, that estimates how many bits we save by knowing the proper word order given the word choice. The results for these tests for weak and strong secondary stress are shown in Figures 7 and 8, including the difference curves between the randomized-word and original entropy rates.
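One way to build such a control set is to seed the permutation from the sentence itself, so that repeated sentences always receive the same shuffle. The sketch below is an assumption-laden illustration, not the procedure actually used: `shuffled_copy` is a hypothetical name, the hash-based seeding is our choice, and it does not attempt to shuffle near-identical sentences similarly.

```python
import hashlib
import random


def shuffled_copy(words):
    """Permute a sentence using a seed derived from its own text.

    Identical sentences always receive the identical permutation; the
    input list is left unchanged.
    """
    digest = hashlib.sha256(" ".join(words).encode("utf-8")).hexdigest()
    rng = random.Random(int(digest, 16))
    permuted = list(words)
    rng.shuffle(permuted)
    return permuted


sentence = "the following issues recently were filed".split()
control = shuffled_copy(sentence)
```

Because the words themselves are untouched, the control sentence has the same diction and syllable count as the original; only the order of the whole-word stress patterns changes. The per-bin difference curve is then the entropy rate of the control set minus that of the original set.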

The consistently positive difference function demonstrates that there is some extra stress regularity to be had with proper word order, about a hundredth of a bit on average. The difference is small indeed, but its consistency over hundreds of well-trained data points puts the observation on statistically solid ground.

The negative slopes of the difference curves suggest a more interesting conclusion: as sentence perplexity increases, the gap in stress entropy rate between syntactic sentences and randomly permuted sentences narrows. Restated inversely, using entropy rates for randomly permuted sentences as a baseline, sentences with higher sequence probability are relatively more rhythmical in the sense of our definition from section 1.

To supplement the $\Sigma_1$ binary vocabulary tests we ran the same experiments with $\Sigma_2 = \{0, 1, P\}$, introducing a pause symbol to examine how stress behaves near phrase boundaries. Commas, dashes, semicolons, colons, ellipses, and all sentence-terminating punctuation in the text, which were removed in the $\Sigma_1$ tests, were mapped to a single pause symbol for $\Sigma_2$. Pauses in the text arise not only from semantic constraints but also from physiological limitations. These include the "breath groups" of syllables that influence both vocalized and written production (Ochsner, 1989). The results for these experiments are shown in Figures 9 and 10. Expectedly, adding the symbol increases the confusion and hence the entropy, but the rates remain less than a bit. The maximum possible rate for a ternary sequence is $\log_2 3 \approx 1.58$.

The experiments in this section were repeated with a larger perplexity interval that partitioned the corpus into 20 bins, each covering 50 units of perplexity. The resulting curves mirrored the finer-grain curves presented here.

5 Conclusions and future work

We have quantified lexical stress regularity, measured it in a large sample of written English prose, and shown there to be a significant contribution from word order that increases with lexical probability, that is, as sentence perplexity decreases.

This contribution was measured by comparing the entropy rate of lexical stress in natural sentences with randomly permuted versions of the same. Randomizing the word order in this way yields a fairly crude baseline, as it produces asyntactic sequences in which, for example, single-syllable function words can unnaturally clash. To correct for this we modified the randomization algorithm to permute only open-class words and to fix in place determiners, particles, pronouns, and other closed-class words. We found the entropy rates to be consistently midway between the fully randomized and unrandomized values. But even this constrained randomization is weaker than what we'd like. Ideally we should factor out semantics as well as word choice, comparing each sentence in the corpus with its grammatical variations. While this is a difficult experiment to do automatically, we're hoping to approximate it using a natural language generation system based on link grammar under development by the author.
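The constrained randomization described above, in which closed-class words stay in place and only open-class words move, can be sketched as follows. The closed-class word list and the name `permute_open_class` are hypothetical; a real experiment would presumably rely on part-of-speech information rather than a fixed list.

```python
import random

# Toy closed-class list, for illustration only.
CLOSED_CLASS = {"the", "a", "an", "of", "to", "in", "and", "with", "were", "it"}


def permute_open_class(words, rng):
    """Shuffle open-class words among their own positions only."""
    open_positions = [i for i, w in enumerate(words) if w.lower() not in CLOSED_CLASS]
    open_words = [words[i] for i in open_positions]
    rng.shuffle(open_words)
    result = list(words)
    for position, word in zip(open_positions, open_words):
        result[position] = word
    return result


words = "The issues were filed with the commission".split()
constrained = permute_open_class(words, random.Random(0))
```

Function words keep their slots, so the skeleton of the sentence survives while content words trade places, giving a baseline between the fully randomized and the original order.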

Also, we're currently testing other data sources such as the Switchboard corpus of telephone speech (Godfrey, Holliman, and McDaniel, 1992) to measure the effects of rhythm in more spontaneous and grammatically relaxed texts.

6 Acknowledgments

Comments from John Lafferty, Georg Niklfeld, and Frank Dellaert contributed greatly to this paper. The work was supported in part by an ARPA AASERT award, number DAAH04-95-1-0475.

[Plots: (left) Wall Street Journal binary stress entropy rates, by perplexity bin, secondary stress mapped to weak, for original and randomized Wall Street Journal sentences; (right) Wall Street Journal binary stress entropy rate differences, by perplexity bin, WSJ randomized minus nonrandomized; x-axis: language perplexity]

Figure 7: 6-gram stress entropy rates and difference curve for $\Sigma_1$, weak secondary stress

[Plots: (left) Wall Street Journal binary stress entropy rates, by perplexity bin, secondary stress mapped to strong, for original and randomized Wall Street Journal sentences; (right) Wall Street Journal binary stress entropy rate differences, by perplexity bin, WSJ randomized minus nonrandomized; x-axis: language perplexity]

Figure 8: 6-gram entropy rates and difference curve for $\Sigma_1$, strong secondary stress

[Plots: (left) Wall Street Journal ternary stress entropy rates, by perplexity bin, for original and randomized Wall Street Journal sentences; (right) Wall Street Journal ternary stress entropy rate differences, by perplexity bin, WSJ randomized minus nonrandomized; x-axis: language perplexity]

Figure 9: 6-gram entropy rates and difference curve for $\Sigma_2$, weak secondary stress

[Plots: (left) Wall Street Journal ternary stress entropy rates, by perplexity bin, secondary stress mapped to strong, for original and randomized Wall Street Journal sentences; (right) Wall Street Journal ternary stress entropy rate differences, by perplexity bin, WSJ randomized minus nonrandomized; x-axis: language perplexity]

Figure 10: 6-gram entropy rates and difference curve for $\Sigma_2$, strong secondary stress

References

Abercrombie, D. 1967. Elements of general phonetics. Edinburgh University Press.

Baum, P. F. 1952. The Other Harmony of Prose. Duke University Press.

Cover, T. M. and J. A. Thomas. 1991. Elements of information theory. John Wiley & Sons, Inc.

Godfrey, J., E. Holliman, and J. McDaniel. 1992. Switchboard: Telephone speech corpus for research development. In Proc. ICASSP-92, pages I-517-520.

Halle, M. and J. Vergnaud. 1987. An essay on stress. The MIT Press.

Harding, D. W. 1976. Words into rhythm: English speech rhythm in verse and prose. Cambridge University Press.

Jassem, W., D. R. Hill, and I. H. Witten. 1984. Isochrony in English speech: its statistical validity and linguistic relevance. In D. Gibbon and H. Richter, editors, Intonation, rhythm, and accent: Studies in Discourse Phonology. Walter de Gruyter, pages 203-225.

Kager, R. 1989a. A metrical theory of stress and destressing in English and Dutch. Foris Publications.

Kager, R. 1989b. The rhythm of English prose. Foris Publications.

Ochsner, R. S. 1989. Rhythm and writing. The Whitson Publishing Company.

Parzen, E. 1962. Stochastic processes. Holden-Day.
