Improved Neural Network-based Multi-label Classification with Better Initialization Leveraging Label Co-occurrence

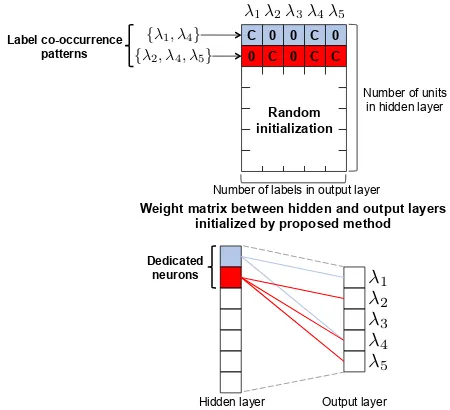
