Fine tune BERT with Sparse Self Attention Mechanism

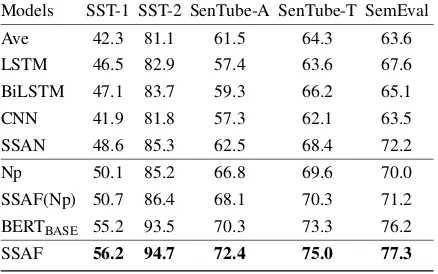
