Dual Adversarial Neural Transfer for Low Resource Named Entity Recognition

Related documents

Our approach extends the work of Fang and Cohn (2016), who present a model based on distant supervision in the form of cross-lingual projection and use projected tags generated
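Cross-lingual projection, as mentioned in the snippet above, commonly transfers entity tags from a tagged source-language sentence to its translation through word alignments. The sketch below is a generic illustration of alignment-based projection, not Fang and Cohn's exact procedure. It assumes alignments are given as hypothetical `(source_index, target_index)` pairs from some external aligner. When several tagged source tokens align to the same target token, the last one wins, and BIO consistency is not repaired.

```python
from typing import List, Tuple


def project_tags(
    src_tags: List[str],
    alignments: List[Tuple[int, int]],
    tgt_len: int,
    default: str = "O",
) -> List[str]:
    """Copy each tagged source token's label onto every target token aligned to it.

    Assumption: `alignments` holds (source_index, target_index) pairs produced by
    an external word aligner. Unaligned target tokens keep the `default` tag.
    """
    tgt_tags = [default] * tgt_len
    for s, t in alignments:
        # Only entity tags are projected; "O" never overwrites anything.
        if src_tags[s] != default:
            tgt_tags[t] = src_tags[s]
    return tgt_tags


# Usage: "Obama visited Paris" -> French "Obama a visité Paris"
# ("visited" aligns to both "a" and "visité").
print(project_tags(["B-PER", "O", "B-LOC"], [(0, 0), (1, 1), (1, 2), (2, 3)], 4))
```

The projected target tags can then serve as noisy, distantly supervised training labels for a target-language tagger.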

A Multi-task Learning Approach to Adapting Bilingual Word Embeddings for Cross-lingual Named Entity Recognition. Proceedings of the 8th International Joint Conference on Natural

ages deep neural networks to learn a close feature mapping between the source and target domains, and (ii) parameter transfer (Murthy et al., 2017), which performs parameter

Figure 4 shows the model architecture when we skip all intermediate LSTM layers and only word embeddings are used to produce the input for the next flat NER layer. Figure 5

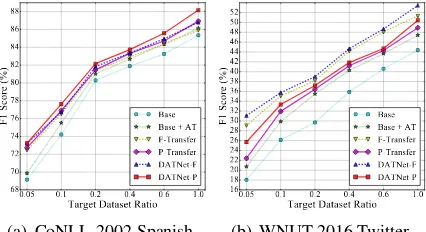

Finally, we look at the relative performance of the different direct transfer methods and a target language specific supervised system trained with native and cross-lingual

We described a deep learning approach for Named Entity Recognition on Twitter data, which extends a basic neural network for sequence tagging by using sentence level features

In this paper, we have shown that our neural network model, which uses bidirectional LSTMs, character-level embeddings, pretrained word embeddings, and a CRF on top of the
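The snippet above describes a CRF layer stacked on a bidirectional LSTM. At inference time, a linear-chain CRF output layer is usually decoded with the Viterbi algorithm. The sketch below assumes the BiLSTM has already produced per-token emission scores and that a learned tag-transition matrix is available. Both are passed in as plain nested lists with hypothetical names. The sketch covers decoding only, not CRF training, and it assumes a non-empty sentence.

```python
from typing import List, Sequence, Tuple


def viterbi_decode(
    emissions: Sequence[Sequence[float]],
    transitions: Sequence[Sequence[float]],
) -> Tuple[List[int], float]:
    """Return the highest-scoring tag sequence and its score.

    emissions[t][j]:   score of tag j at token t (e.g. BiLSTM outputs; assumed given).
    transitions[i][j]: score of moving from tag i to tag j (assumed learned).
    """
    n_tags = len(transitions)
    score = list(emissions[0])  # best score of any path ending in each tag at t=0
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score: List[float] = []
        bp: List[int] = []
        for j in range(n_tags):
            # Best previous tag i for reaching tag j at position t.
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            bp.append(best_i)
        score = new_score
        backpointers.append(bp)
    # Trace back from the best final tag.
    best_last = max(range(n_tags), key=lambda j: score[j])
    path = [best_last]
    for bp in reversed(backpointers):
        path.append(bp[path[-1]])
    path.reverse()
    return path, score[best_last]


# Tags: 0 = O, 1 = B-PER, 2 = I-PER. A large negative O -> I-PER transition
# discourages the invalid sequence that greedy per-token argmax would pick (O, I-PER).
transitions = [[0.0, 0.0, -100.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
emissions = [[1.0, 0.5, 0.0], [0.0, 0.0, 1.0]]
print(viterbi_decode(emissions, transitions))  # ([1, 2], 2.5)
```

In this toy example, the transition scores change the decision: greedy decoding would tag the first token O, but Viterbi selects B-PER followed by I-PER.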

The first four types of features will be used in the CRFs model, while the word embeddings will be used in the Bi-RNN model; (iii) the base DNER models: two DNER models, based on