ISSN: 2455-8826

Asian Journal of Innovative Research in Science, Engineering and Technology (AJIRSET)

Available online at: www.engineeringjournal.info

RESEARCH ARTICLE

Sixth Sense Technology-Hand Gesture Based Virtual Keypad

Athulya Roy, Farsana TK, Jismy Devasia, Shamya A

*EIE Dept, Vimal Jyothi Engineering College, Chemperi. *Corresponding Author: Email: shamyasanthosh@vjec.ac.in

Abstract

Sixth Sense Technology integrates digital information into the physical world and its objects, making the entire world our computer. It is a wearable gestural interface that augments the physical world around us with digital information and lets us use natural hand gestures to interact with that information. It can turn any surface into a touch screen for computing, controlled by simple hand gestures. A hand gesture recognition system can serve as an interface between human and computer. This work presents a technique for a human computer interface through hand gesture recognition that is able to recognize gestures from the American Sign Language hand alphabet. The objective is to develop an algorithm that recognizes hand gestures with reasonable accuracy. The gray scale image of a hand gesture is segmented using a thresholding algorithm, which treats segmentation as a classification problem: the gray levels of the image are divided into two classes, hand and background, and the optimal threshold value separating them is determined. A morphological filtering method, consisting of dilation, erosion, opening and closing operations, is used to remove background and object noise from the segmented image. The Prewitt edge detection technique is then used to find the boundary of the hand in the image. The system thus recognizes gestures from images of the hand.

Keywords: HCI, MATLAB

Introduction

With current advances in technology, we see rapidly increasing availability of, and demand for, intuitive Human Computer Interaction (HCI). Devices are no longer controlled only by mouse and keyboard; we now use gesture-controlled devices in public areas and at home. Distant hand gestures in particular remove the restriction of operating a device directly, so we can interact freely with machines while moving around. In this work, we are interested in HCI that does not force users to touch a specific device or to wear special sensors, but that allows for unrestricted use. While there is a great variety of HCI techniques for interacting with distant virtual objects, e.g. to select menu entries, there is still a lack of intuitive and unrestricted text input. Although text can be entered with a virtual keyboard on a display or by speech recognition, there are situations where neither is suitable: the first requires interaction with a display, and the second requires users to speak, which is not always possible depending on the surroundings, especially for physically challenged people. With this work, we extend the available text input modalities by introducing an intuitive hand gesture recognition system.

The platform used for programming is MATLAB; initially, we used version R2012a.

We have evolved over millions of years to sense the world around us. When we encounter something, someone or some place, we use our five natural senses (sight, hearing, smell, taste and touch) to perceive information about it; that information helps us make decisions and choose the right actions to take. But arguably the most useful information for making the right decision is not naturally perceivable with our five senses, namely the data, information and knowledge that mankind has accumulated about everything and which is increasingly available online.

Fig. 1: Gestures with text character

Although the miniaturization of computing devices allows us to carry computers in our pockets, keeping us continually connected to the digital world, there is no link between our digital devices and our interactions with the physical world. Information is confined traditionally on paper or digitally on a screen. Sixth Sense bridges this gap, bringing intangible, digital information out into the tangible world, and allowing us to interact with this information via natural hand gestures. Sixth Sense frees information from its confines by seamlessly integrating it with reality, thus making the entire world your computer.

Sixth Sense Technology is the newest term to have proclaimed its presence in the technical arena. The technology takes its name from the idea of a sixth sense that supplements the five natural ones. Ordinary computers may soon be able to sense what is happening in their surroundings, and that is what Sixth Sense Technology promises. Sixth Sense is a wearable gesture based device that augments the physical world with digital information and lets people use natural hand gestures to interact with that information.

Right now, we use our devices (computers, mobile phones, tablets, etc.) to go onto the internet and get the information that we want. With Sixth Sense we will use a device no bigger than current cell phones, and probably eventually as small as a button on our shirts, to bring the internet to us in order to interact with our world.

Sixth Sense will allow us to interact with our world like never before. We can get information on anything we want from anywhere within a few moments. We will be able to interact on a whole new level not only with things but also with people. One great part of the device is its ability to scan objects or even people and project information about what you are looking at.

External devices can also be controlled using hand gestures, which is an advance over the existing system. The existing system can only gather information and manipulate it according to our requirements. This modification will expand the scope of Sixth Sense Technology: with it we can control devices such as fans and TVs. The system needs a transmitter, a receiver, a microcontroller and related components to transmit data from the mobile computing device and to receive the signals that control the different devices.

Literature Review

Maes's MIT group, which includes seven graduate students, was thinking about how a person could be more integrated into the world around them and access information without having to do something like take out a phone. They initially produced a wristband that would read a Radio Frequency Identification tag to know, for example, which book a user is holding in a store. They also had a ring that used infrared to communicate by beacon with supermarket smart shelves to give information about products. As we grab a package of macaroni, the ring would glow red or green to tell us whether the product was organic or free of peanut traces, or met whatever criteria we program into the system.

They wanted to make information more useful to people in real time, with minimal effort and in a way that doesn't require any behaviour changes. The wristband was getting close, but the user still had to take out a cell phone to look at the information. That's when they struck on the idea of accessing information from the internet and projecting it. So someone wearing the wristband could pick up a paperback in the bookstore and immediately call up reviews about the book, projecting them onto a surface in the store, or do a keyword search through the book by accessing digitized pages on Amazon or Google Books.

They started with a larger projector that was mounted on a helmet. But that proved cumbersome: if someone was projecting data onto a wall and then turned to speak to a friend, the data would be projected onto the friend's face.

Block Diagram

The block diagram shows that images of hand gestures are captured and processed, and the output is projected on the wall.

Working

The camera is used to recognize and track the user's hand gestures and physical objects using computer vision based techniques, while the projector is used to project visual information on walls or on any physical object around us. Other hardware includes a mirror and coloured caps worn on the fingers. The software processes the video stream captured by the camera and tracks the location of the fingertips to recognize the gestures, using computer vision techniques. Basically, the device is a mini projector that can project onto any surface; it carries the information stored in it and also collects information from the web. It obeys our hand gestures and shows us what we want to see and know. It combines the technology of a computer with that of a cell phone. It works when a person hangs it around the neck and starts projecting through the micro-projector attached to it. Our fingers work like the keyboard as well as the mouse.

The idea is that Sixth Sense tries to determine not only what someone is interacting with, but also how he or she is interacting with it. The software searches the internet for information that is potentially relevant to that situation, and then the projector takes over. Applications include browsing, a virtual keyboard, Facebook, YouTube and Twitter.

Hand Gesture Based Virtual Keypad

A hand gesture recognition system can serve as an interface between human and computer. This work presents a technique for a human computer interface through hand gesture recognition that is able to recognize 25 static gestures from the American Sign Language hand alphabet. The objective is to develop an algorithm that recognizes hand gestures with reasonable accuracy. The gray scale image of a hand gesture is segmented using a thresholding algorithm, which treats segmentation as a classification problem: the gray levels of the image are divided into two classes, hand and background. The optimal threshold value is determined by computing the ratio of the between-class variance to the total variance and choosing the threshold that maximizes it. A morphological filtering method, consisting of dilation, erosion, opening and closing operations, is used to remove background and object noise from the segmented image.
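The threshold selection described here, two classes and a ratio of between-class variance to total variance, is consistent with Otsu's method. The following is a minimal sketch of that idea in plain Python rather than the MATLAB used in this work; the function name `otsu_threshold` and the toy pixel list are illustrative assumptions, not the authors' code. Because the total variance does not depend on the threshold, maximizing the ratio is the same as maximizing the between-class variance.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Assumes integer gray levels in 0..levels-1. Pixels <= t form one
    class and pixels > t form the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class so far
    sum0 = 0  # sum of gray levels in the lower class so far
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue          # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break             # upper class empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2 / (total * total)
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Toy example: a dark cluster and a bright cluster of gray levels.
pixels = [10] * 50 + [200] * 50
t = otsu_threshold(pixels)
mask = [1 if p > t else 0 for p in pixels]  # 1 marks the brighter class
```

Whether the hand ends up as the brighter or the darker class depends on the background; with the white background described later in the paper, the hand would be the darker class.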

Objective

Our main aim is to simulate actual keypad operation with a virtual keypad driven by hand gestures, and to develop an algorithm that recognizes hand gestures with reasonable accuracy.

In our work, we combine a vision based hand gesture tracking system with hand gesture recognition to provide a text input modality that does not require additional devices. To the best of our knowledge, there is no prior vision based hand gesture recognition of whole symbols based on concatenated individual character models.

Methodology

Several general purpose algorithms have been developed for image capturing, so image capturing needs to be approached from a wide variety of perspectives. As we have already seen, light illumination acts as noise while the object is being captured. In general, noise should be eliminated through processing. The time required to process each image or frame should also be as low as possible, and the motion of the object must be detected properly, because without proper motion detection we cannot detect and capture the object. In this paper we confine ourselves to capturing the object. Different capturing methods exist that utilize different characteristics, e.g. shape, texture or colour. These methods perform differently depending on the application and are often compared only subjectively.

We chose MATLAB after deciding on the important tasks in our work. MATLAB is a high-level technical language and interactive environment for algorithm development, data analysis and numeric computation. Using MATLAB, we can solve technical computing problems faster than with traditional programming languages such as C, C++ and FORTRAN. MATLAB can be used in a wide range of applications, including signal and image processing and communications, and add-on toolboxes extend the MATLAB environment to solve particular classes of problems in these application areas.

MATLAB provides a number of features for documenting and sharing our work. We can integrate our MATLAB code with other languages and applications, and distribute our MATLAB algorithms and applications. Various video acquisition and analysis functions are pre-defined in MATLAB, which makes the development of our work much easier.

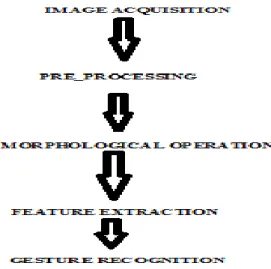

Process Flow

Image Acquisition

The first stage of any vision system is image acquisition. After the image has been obtained, various processing methods can be applied to it to perform the many different vision tasks required today. The input images are captured by a smart phone. The system is demonstrated on a conventional PC/laptop with an Intel Pentium dual processor and 4 GB of RAM. Each image has a spatial resolution of 620 x 480 pixels and a gray scale resolution of 32 bit. The system can process hand gestures at an acceptable speed. Given the variety of available image processing techniques and recognition algorithms, we designed our preliminary process around detecting the hand in the image. The hand detection preprocessing workflow is shown in Fig.

The system starts by capturing an image of the signer's hand with a smart phone, held at a certain angle against a white background. The next step converts the RGB image to gray scale and then to a binary image in which every pixel is either black (0) or white (1). The edge of each object is then computed against the white background. Because the object differs greatly in contrast from the background, it can then be segmented.
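The conversion step can be sketched as follows, in plain Python rather than the MATLAB used in this work. The function names, the tiny test image and the fixed threshold of 200 are illustrative assumptions; the luminance weights are the standard ones also used by MATLAB's `rgb2gray`.

```python
def rgb_to_gray(image):
    """Convert rows of (r, g, b) tuples (0-255) to rows of gray levels (0-255)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]


def binarize(gray, threshold):
    """Map gray levels above the threshold to 1 (white), all others to 0 (black)."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


# Toy 2 x 2 image: white background pixels and two darker "hand" pixels.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(200, 150, 120), (255, 255, 255)],
]
gray = rgb_to_gray(image)
binary = binarize(gray, 200)  # background -> 1, darker hand pixels -> 0
```

With a white background the hand comes out as 0; inverting the comparison would make the hand the foreground instead, which is often more convenient for the later morphological steps.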

Preprocessing

Changes in contrast can be detected by operators that calculate the gradient of an image. Preprocessing is an essential task in a hand gesture recognition system. We have taken a total of 19 signs, with 72 images per sign. Preprocessing is applied to the hand images before features can be extracted from them. Preprocessing consists of:

Segmentation

Image pre-processing is necessary for getting good results. In this algorithm, we take the RGB image as the input image. Image segmentation is typically performed to locate the hand object and its boundaries in the image. It assigns a label to every pixel such that pixels sharing certain visual characteristics have the same label. Good segmentation requires an adequate gray level threshold for extracting the hand from the background, i.e. no part of the hand should be labelled as background, and no part of the background should be labelled as hand.

Morphological Operation

Segmentation converts the gray scale image into a binary image, so that the image contains only two objects: the hand and the background. After this conversion we have to make sure that there is no noise in the image, so we use a morphological filtering technique.

Morphological techniques consist of four operations: dilation, erosion, opening and closing. They can be used to find boundaries and for edge detection, and in many pre- and post-processing steps. All four rely on two basic operations, erosion and dilation, which shrink and expand image features respectively.
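To make the four operations concrete, here is a minimal sketch on binary images stored as lists of 0/1 rows, written in plain Python rather than the MATLAB used in this work. The square structuring element, the zero padding at the border and all function names are assumptions chosen for illustration.

```python
def _window(img, r, c, k):
    """Values in the (2k+1) x (2k+1) window centred on (r, c); outside the image counts as 0."""
    h, w = len(img), len(img[0])
    return [img[i][j] if 0 <= i < h and 0 <= j < w else 0
            for i in range(r - k, r + k + 1)
            for j in range(c - k, c + k + 1)]


def erode(img, k=1):
    """A pixel stays 1 only if every pixel in its window is 1 (shrinks features)."""
    return [[min(_window(img, r, c, k)) for c in range(len(img[0]))]
            for r in range(len(img))]


def dilate(img, k=1):
    """A pixel becomes 1 if any pixel in its window is 1 (expands features)."""
    return [[max(_window(img, r, c, k)) for c in range(len(img[0]))]
            for r in range(len(img))]


def opening(img, k=1):
    """Erosion followed by dilation: removes small isolated foreground specks."""
    return dilate(erode(img, k), k)


def closing(img, k=1):
    """Dilation followed by erosion: fills small holes inside the foreground."""
    return erode(dilate(img, k), k)
```

In this sketch, opening removes specks of background noise that were wrongly labelled as hand, while closing fills small holes inside the hand region. It assumes the hand is the foreground (1), so a mask with a white (1) background would need to be inverted first.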

Feature Extraction

When a gesture is made in the air, the user performs it in front of a video camera. We exploit this by splitting the video into frames and projecting the hand gesture onto a 2D plane in front of the user, which has the advantage of reducing the feature space. The beginning and end of a gesture are easy to detect because the system provides distinct time slots and notifies the user of them, and the user moves the hand accordingly. We found that both hand shapes and hand movements occur while gesturing, as is the case in traditional hand gestures. Character and symbol recognition from hand gestures are both based on the centroid, which is an established technique in hand gesture recognition.

Feature extraction is very important because it provides the input to a classifier. Our prime feature is the local contour sequence (L.C.S.). In feature extraction we first have to find the edges of the segmented and morphologically filtered image. The Prewitt edge detector is used to find the edges, which gives us the boundary of the hand in the image.
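The Prewitt step can be sketched as follows, in plain Python rather than the MATLAB used in this work. The function name, the treatment of border pixels (left as 0) and the threshold on the gradient magnitude are illustrative assumptions; the two 3 x 3 kernels are the standard Prewitt kernels.

```python
def prewitt_edges(gray, threshold):
    """Return a 0/1 edge map using the Prewitt gradient magnitude.

    Horizontal kernel: [-1 0 1] in each of three rows.
    Vertical kernel:   [-1 -1 -1], [0 0 0], [1 1 1].
    Border pixels are left as 0 because their 3 x 3 window is incomplete.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            # Difference between the right and left columns of the window.
            gx = sum(gray[r + i][c + 1] - gray[r + i][c - 1] for i in (-1, 0, 1))
            # Difference between the bottom and top rows of the window.
            gy = sum(gray[r + 1][c + j] - gray[r - 1][c + j] for j in (-1, 0, 1))
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[r][c] = 1
    return edges
```

Applied to a binary hand mask, the pixels marked 1 trace the hand boundary, from which a contour based feature such as the local contour sequence could then be computed.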

Gesture Recognition

It is hard to settle on a specific, useful definition of gestures because of their wide variety of applications; any one statement can only specify a particular domain of gestures. Many researchers have tried to define gestures, but their actual meaning is still arbitrary.

Bobick and Wilson have defined gestures as the motion of the body that is intended to communicate with other agents. For a successful communication, a sender and a receiver must have the same set of information for a particular gesture.

In the context of this project, a gesture is defined as an expressive movement of body parts that carries a particular message, to be communicated precisely between a sender and a receiver. Gestures fall into two distinct categories: dynamic and static.

A dynamic gesture changes over a period of time, whereas a static gesture is observed at a single instant. A waving hand meaning goodbye is an example of a dynamic gesture, and the stop sign is an example of a static gesture. To understand a full message, it is necessary to interpret all the static and dynamic gestures over a period of time. This complex process is called gesture recognition.

Gesture recognition is the process of recognizing and interpreting a continuous stream of sequential gestures from the given set of input data.

For each character, a separate character model is trained on data from multiple people, with the extracted features described above as observations. The hand gesture recognition system is able to recognize static gestures from the American Sign Language hand alphabet. The aim is an algorithm that recognizes hand gestures with reasonable accuracy.

System Overview

The implementation of the proposed algorithm is done using MATLAB. The basic block diagram for the proposed algorithm is shown below.

The basic block diagram consists of four blocks: Data Acquisition, Pre-processing, Feature Extraction and Tracking. The functions of these blocks are as follows.

Data Acquisition: The video frames are obtained using the Image Processing Toolbox. The frames are acquired with the default camera device present in or attached to the system.

Pre-processing: First the colour image is converted to gray scale, because a single-channel gray image is easier and faster to process than a three-channel colour image. A median filter is then applied to remove noise from the images or frames obtained from the video, using the 'medfilt2' command of the Image Processing Toolbox.

Feature Extraction: Selecting the right feature plays a critical role in tracking, and feature selection is closely related to the object representation. Features commonly used for tracking are colour, edges, optical flow and texture. The proposed algorithm tracks the object by its colour, specifically red, so it follows the red object or objects in the video.

Tracking: Real-time objects are tracked on the basis of region properties such as bounding box, area and centroid. Here the bounding box property is used: as the object moves to different locations in the video, the bounding box moves with it, the region properties take new values, and the object is thereby tracked.
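The colour based feature extraction and the region properties used for tracking can be sketched as follows, in plain Python rather than the MATLAB used in this work. The function names and the values of `min_red` and `margin` are illustrative assumptions, and for brevity the sketch treats all red pixels in a frame as a single region instead of labelling connected components.

```python
def red_mask(frame, min_red=150, margin=50):
    """Mark pixels that are strongly red: r is high and exceeds g and b by a margin."""
    return [[1 if r >= min_red and r - g >= margin and r - b >= margin else 0
             for (r, g, b) in row]
            for row in frame]


def region_props(mask):
    """Area, bounding box and centroid of the foreground pixels, or None if there are none."""
    points = [(r, c) for r, row in enumerate(mask)
              for c, v in enumerate(row) if v]
    if not points:
        return None
    rows = [p[0] for p in points]
    cols = [p[1] for p in points]
    return {
        "area": len(points),
        # (top, left, bottom, right), inclusive pixel coordinates
        "bbox": (min(rows), min(cols), max(rows), max(cols)),
        # mean (row, column) position of the foreground pixels
        "centroid": (sum(rows) / len(points), sum(cols) / len(points)),
    }


def track(frames):
    """Region properties of the red object in each frame of a sequence."""
    return [region_props(red_mask(f)) for f in frames]
```

Calling `track` on consecutive frames yields one set of region properties per frame; as the red object moves, the `bbox` and `centroid` values move with it, which is the tracking behaviour described above.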

Vision based analysis is based on the way human beings perceive information about their surroundings, yet it is probably the most difficult to implement in a satisfactory way. Several different approaches have been tested so far.

One is to build a three-dimensional model of the human hand. The model is matched to images of the hand taken by one or more cameras, and parameters corresponding to palm orientation and joint angles are estimated. These parameters are then used to perform gesture classification.

The second is to capture the image using a camera and then extract some features, which are used as input to a classification algorithm.

In this project we have used the second method for modeling the system. The gesture images are taken from a standard hand gesture database. Segmentation and morphological filtering techniques are applied to the images in the preprocessing phase. The extracted feature is then fed to the subsequent stages, which classify the hand gesture images.

Conclusion

We simulated actual keyboard operation with a virtual keypad driven by hand gestures and developed an algorithm for recognizing those gestures. The proposed system could change the way people use computer systems: because it relies only on hand gestures, it can eliminate the mouse, and the hand gesture recognition system takes the place of the keyboard. This points to a new era of Human Computer Interaction (HCI) in which no physical contact with the device is required. Search engines such as Google and Yahoo can be accessed through the system.

Sixth Sense Technology integrates digital information into the physical world and its objects, making the entire world your computer. It is a wearable gestural interface that augments the physical world around us with digital information and lets us use natural hand gestures to interact with that information. It can turn any surface into a touch-screen for computing, controlled by simple hand gestures.

Clearly, this has the potential of becoming the ultimate "transparent" user interface for accessing information about everything around us. Now, it may change the way we interact with the real world and truly give everyone complete awareness of the environment around us.
