Rochester Institute of Technology

RIT Scholar Works

Theses

Thesis/Dissertation Collections

10-1-1993

A DSP based general self-tuning observer

controller

John F. Seward

Follow this and additional works at:

http://scholarworks.rit.edu/theses

This Thesis is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion

in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact

ritscholarworks@rit.edu.

Recommended Citation

A DSP BASED GENERAL SELF-TUNING OBSERVER

CONTROLLER

by

John F. Seward, Jr.

A Thesis Submitted

In

Partial Fulfillment

of the

Requirements for the Degree of

MASTER OF SCIENCE

In

Electrical Engineering

Approved by:

(4) Signatures Illegible

DEPARTMENT OF ELECTRICAL ENGINEERING

COLLEGE OF ENGINEERING

Abstract

This

treatise

presents animplementation

of aGeneral

Self-Tuning

Observer

Controller

(GSTOC)

for

adaptivevelocity

controlusing

aTMS320C25 Digital

Signal Processor

(DSP).

The GSTOC

is

a structurebased

on modern controlstate-space

theories,

such asKalman filters

usedassteady

state ortime-varying

optimal stateestimators,

full

statefeedback

using

pole placement orTABLE OF CONTENTS

Chapter

page1.

INTRODUCTION

7

2.

ADAPTIVE CONTROL AND THE SELF-TUNING

REGULATOR

.103.

GSTOC DESIGN

17

3.1

Parameter Identification

17

3.2

Kalman Filter

(Optimum

Observer)

24

3.3

Control Law

34

4.

PLANT

DESCRIPTION

41

4.1

Plant

41

4.2

Mathematical Model

43

5.

DSP IMPLEMENTATION

46

5.1

Parameter Identification

46

5.2

Kalman

Filtering

46

5.3

Control Law

47

5.4

Scaling

47

5.5

Division

48

6.

HARDWARE DESCRIPTION

50

7.

DSP CODE

51

8.

RESULTS

54

9.

CONCLUSIONS

62

10.

REFERENCES

64

11.

SOFTWARE LISTINGS

66

ESTSTATE.LST

78

POLEP.LST

82

LIST OF

TABLES

LIST OF FIGURES

1

.Model

reference adaptive control system11

2.

Self-tuning

regulator12

3.

Implicit

self-tuning

regulator13

4.

Explicit

self-tuning

regulator14

5.

General

self-tuning

observer controller15

6.

LJE DC Motor

module42

7.

System Configuration

50

8.

Open

loop

response56

9.

Closed

loop

response57

10.

Closed

loop

responselOmSec

sampling rate,

closedloop

pole at0.7

. .58

11.

Closed

loop

responselOmSec

sampling

rate,

closedloop

pole at0.5

. .59

1.

INTRODUCTION

In

modern controltheory,

adaptive controlis

animportant

area.Adaptive

control

has

been

aroundfor

atleast

four

decades.

In

the

1950s,

adaptivecontrolsystems were used

to

optimizethe

performanceofinternal

combustionengines andasautopilots

for high

performance aircraft.State

spaceandstability theories

and majordevelopments

in

systemidentification

and parameterestimation,

which contributedto

adaptivecontrol,

evolvedin

the

1960s.

The 1970s

brought

around a muchbetter

understanding

ofthe

underlying

principlesofthe

design

andoperation of adaptivecontrolsystems.Different

estimationschemes were combined with variousdesign

methodsto

implement

adaptive control.The

minicomputer alsohad

become

a prominenttool

for

adaptivecontrolapplications.Soon

afterthe

basic

theory

ofself-tuning,

researchedby

Astrom

andWittenmark

(1973),

minicomputerapplications were

implemented

into industry.

Some

ofthese

applicationsincluded:

ore-crushing (Borisson

andSyding,

1976),

ship-steering (Kallstrom

et

al,

1977),

paper-making (Cegrell

andHedqvist,

1975),

cement-blending

(Keviczky

etal,

1978),

andmany

others.The

Digital

Signal

Processor

(DSP)

wasintroduced

during

the

1980s. The DSP

has

broaden the

opportunitiesto

incorporate

adigital

controlsolutionoverananalog

onein

arapidly growing

market,

suchasin

robotics,

disk

and servocontrolandautomotive engine control.

With

50

nSecinstruction

cycletimes

andspecial

instructions

suchas,

DMOV (data

move)to

implement

the

delay

LT (load

multiplicand),

DMO

V,

MPY

(multiply)

andAPAC (add

resultto

accumulator)

that

executein

oneinstruction

cycle,

high

performance,

sophisticated

digital

controlalgorithms,

such asthe

onesfrom

modern controltheory

canbe

implemented

on aDSP

very

easily.A DSP

canimplement

the

state

controller, the

parameter estimator andthe

stateestimator,

ofanadaptive controller

in

realtime.

This

paper presents animplementation

of an adaptive controllerusing

moderncontrol

theories,

such as state estimation and statefeedback. The

adaptive controller willbe

aDSP based

regulatorto

controlthe velocity

ofaDC

servomotor.

The

servo motor willbe

subjectedto

load disturbances.

The

Texas

Instruments

TMS320C25

was chosento implement the

adaptive controllerbecause

ofits

fast

instruction

cycletime,

extended-precisionarithmetic andadaptive

filter

support.The

TMS320C25 is

capable ofexecuting ten

millioninstructions

per second(10 MIPS). This

throughput is

attainedby

meansofsingle-cycle multiply/accumulate

instructions

withadata

option(MAC/MACD),

eightauxiliary

registerswithadedicated

arithmeticunit,

instructions

set supportfor

adaptivefiltering

and extended-precisionarithmetic,

bit

reversaladdressing,

andfast

I/O

for data intensive

signalprocessing.

The

following

chapterwilldiscuss

adaptivecontrol and afew

ofthe

maintypes

ofadaptive regulatorsand

their

differences. Chapter 3

willdevelop

the

equationsfor

parameterestimation,

using the

Recursive

Least Squares

filter)

and statefeedback

using

Ackerman's formula

that

willbe

usedin

developing

the

General

Self-Tuning

Observer Controller

are alsodeveloped

in

Chapter

3.

The

plantdescription

ofthe

LJE

motortrainer

is

given andthe

state space mathematical model of

the

plantis

developed

in

Chapter

4.

DSP

implementation

ofthe

equationsfrom Chapter

3

are presentedin

Chapter

5,

along

withscaling

anddivision. A

description

ofthe

AIB

interface

board

is

given

in

Chapter 6.

Chapter

7

gives abrief description

ofthe

Software

Development System

usedto

develop

the

GSTOC. Organization

ofthe

algorithms written

for

the

GSTOC

and asummary

ofthe

executionload

onthe

DSP

is

givenis

also givenChapter 7. The

results ofthe

GSTOC

is

presentedin

2.

ADAPTIVE CONTROL

AND

THE

SELF-TUNING

REGULATOR

Over

the

years, there

have been

many

attemptsto

define

adaptive control.There

seemsto

be

ageneral consensusamong

researchers andpracticing

control engineers

that

adaptive control couldbe defined

asthe

control ofuncertain

systems,

with a controller structurethat

includes

a subsystemfor

the

on-line estimation of unknown parameters values or system structure anda subsystem

for

the

generation of suitable controlinputs

based

onthe

estimated values or system structure.

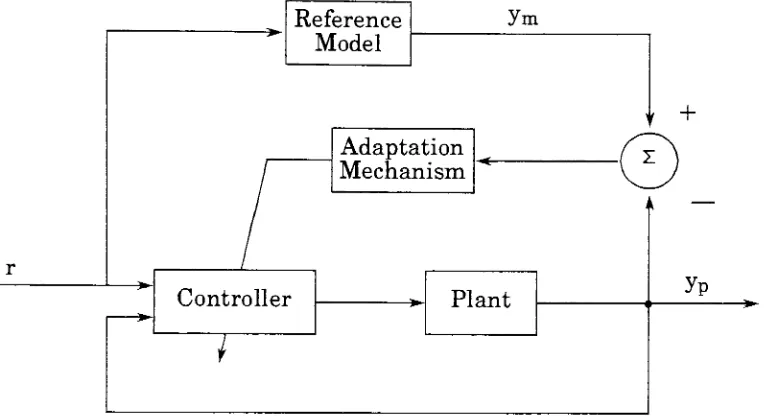

Two

principal approachesto

adaptive control areModel

Reference Adaptive

Control

(MRAC)

shownin

Fig. #1

andSelf-Tuning

Regulator

(STR)

shownin

Fig.

#2

[1].

The basic

principle ofMRAC

is

that the

performance ofthe

systemis

specifiedby

a model andthe

parameters ofthe

regulatorare adjustedbased

on

the

errorbetween

the

reference model andthe

system.MRAC

systems arerelatively easy to

implement

systemswithafairly

high

speed ofadaptation,

asthere

is

no needfor identification

the

plants parameters.As

shownin

Fig.

#1,

the

MRAC

system consistsof aninner

loop,

whichprovidesordinary

feedback

and an outer

loop,

whichadjuststhe

parametersofthe

inner

loop.

In

1973,

a majorbreakthrough in

the

field

of adaptive control wasintroduced

by

Astrom

andWittenmark,

the

idea

of a controllerthat

couldtune

itself

andactually

coinedthe

phrase'self

-tuning regulator'.The

originalself-tuning

regulator

(STR)

wasbased

on stochastic minimum-variance principles andleast

squareestimation.In

self-tuning,the

basic

procedureis

to

select adesign

Reference

Model

ym

Adaptation

Mechanism

Controller

Plant

t

+

0

A

yp

Figure

#1:

Model

reference adaptive control system(MRAC).

recursively

estimatedvalues ofthese

parameters.The idea

ofusing these

estimatesevenif

they

are notequalto the true

values(i.e.,

the

uncertainties are notconsidered)is

calledthe

certainty

equivalenceprinciple.STRs

are animportant

classofadaptive controllers.They

areeasy to

implement

and areapplicable

to

complexprocesses,

with a widevariety

ofcharacteristicsinvolving

unknownparameters,

the

presenceoftime-delay,

time varying

process

dynamics

andstochasticdisturbances.

The

closedloop

systems [image:12.553.94.478.125.335.2]r(t)

Regulator

Design

Process

Parameters

Regulator

Recursive

Parameter

Estimator

u(t)

Plant

[image:13.553.93.474.92.323.2]yP(t)

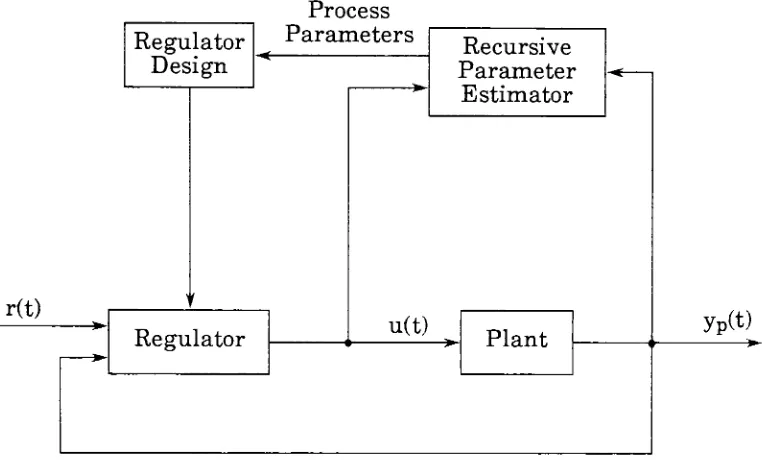

Figure #2:

Self-tuning

regulator.Two

approachesto self-tuning

arethe

implicit

ordirect

STR

algorithm andthe

explicit or

indirect

STR

algorithm.In

the

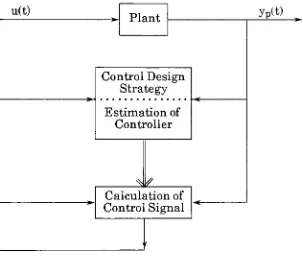

implicit STR

algorithm,

shownin

Fig.

#3,

the

regulatorparameters are updateddirectly.

Implicit

STRs

aredeveloped

onthe

basis

ofpredictivecontroltheory

and requireknowledge

ofthe

systemtime

delay.

In

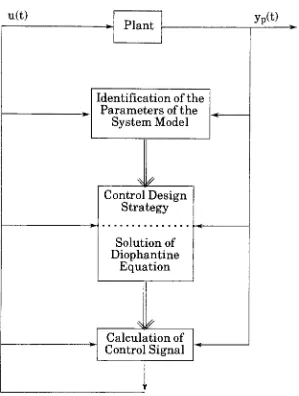

the

explicitSTR

algorithm,

shownin Fig.

#4,

the

regulator parameteridentification

is followed

by

a separatecontrollaw

calculation.The

systemtime

delay

canbe

estimatedas part ofthe

processdynamics. Explicit STRs

u(t)

Plant

yP(t)Control Design

Strategy

Estimation

ofController

^

Calculation

ofControl Signal

i '

Figure

#3:

Implicit

self-tuning

regulator. [image:14.553.161.464.102.358.2]u(t)

Plant

yP(t)Identification

ofthe

Parameters

ofthe

System Model

%.f

Control Design

Strategy

Solution

ofDiophantine

Equation

*

f

Calculation

ofControl Signal

[image:15.553.140.439.83.479.2]''

Figure #4:

Explicit

self-tuning

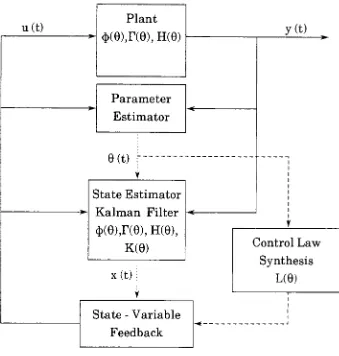

regulator.The

generalself-tuning

observer controller(GSTOC),

shownin

Fig.

#5,

is

aform

ofexplicitself-tuning

regulator whichis

based

on modern controlu(t)

Plant

4>(9),r(0),H(e)

Parameter

Estimator

9(t)

State Estimator

Kalman Filter

4>(9),r(0),

H(6),

K(0)

x(t)

State

-Variable

Feedback

y(t)

Control Law

Synthesis

L(0)

Figure

#5:

General

self-tuning

observer controller.polesare placed

based

onthe

modelparameters obtainedfrom

the

identification

scheme.The basic

GSTOC

algorithmis:

Identify

the

model parametersandformulate

a state space model ofthe

plant

{$(0),

T(0),

H(0)}.

[image:16.553.135.474.77.433.2]Design

a controllaw,

obtaining

L(0).

The GSTOC is

avery

general algorithm asit

does

notspecify

which methodsto

use

to

identify

the

plant model or choosethe

closedloop

estimatororcontrol gains.If

the

GSTOC

is

writtencorrectly

a subroutine callto

the

different

partsof

the algorithm,

different

estimationtechniques

such asRecursive Least

Squares,

Recursive Maximum Likelihood

orStochastic

Approximation

Method

could

be

substitutedto

getthe

best

identification

methodfor

the

job.

Same

reasoning

goesfor

the

estimator or control portions ofthe

algorithm.The

three

portions ofthe

GSTOC

algorithmshownin

Fig.

#5,

parameterestimation,

state estimation and state variablefeedback,

aredeveloped in

the

3.

GSTOC

DESIGN

3.1

Parameter

Identification

Identification

is

the

experimentaldetermination

ofthe

dynamic

behavior

ofprocesses and

their

signals.[3]

For

adaptive control systems on-linein

realtime

identification is

ofprimary

interest

and animportant

part ofthe

STR.

The

on-line methods give estimatesrecursively

as measurements are obtainedand

is

the only

alternativeif

the

identification

is going

to

be

usedin

anadaptive controller or

if

the

processis

time-varying.[4] There

aremany

methods

for

analyzing

data

from

the

experiments.Some

such methods areRecursive

Least

Squares

(RLS),

Extended Recursive Least

Squares

(ERLS),

Recursive

Maximum Likelihood

(RML)

andStochastic

Approximation

Method

(STA).

The

recursive parameteridentification

technique

usedin the

GSTOC

developed in

this

paper willbe

Recursive Least

Squares. Given

the

linear

model

Z(k)

=H(k)0(k)

+

V(k)

(3.1.1)

z(k)

z(k-l)

z(k-N-l)

and

z(k)

=hT(k)0

+

v(k).The

estimation modelfor

Z(k)

is

Z(k)

=H(k)0(k)

(3.1.2)

where

0 is

the

best

estimate of0. The

principleofleast

squares statesthat the

unknownparameters of

0

shouldbe

selectedin

such away that the

loss

function,

J(0(k))

=Z(k)TZ(k)

(3.1.3)

is

minimal.By

writing

(3.1.3)

asJ(0(k))

=[Z(k)

-Z(k)]'[Z(k)

-Z(k)]

(3.1.4)

[Z(k)

-H(k)9(k)]T[Z(k)

-H(k)0(k)]

(3.1.5)

=

ZT(k)Z(k)

-2ZT(k)H(k)0(k)

+

0T(k)HT(k)H(k)0(k)

(3.1.6)

and

by

recalling that

d/dm(bTm)

=b

andd/dm(mTAm)

=2Am

where m andb

dJ(9(k))/de(k)=

+

2HT(k)H(k)9(k)

(3.1.7)

by

setting

dJ(9(k))/d9(k)

=0,

the

estimate of9 is

givenby

9(k)=

[HT(k)H(k)l1HT(k)Z(k)

(3.1.8)

which

is

the

batch formula for

least

squares estimation.To

develop

the

recursiveleast

squaresequations,

in

(3.1.1)

&

(3.1.8)

it

wasassumed

that

Z(k)

containedN

elements withN

>dim 9

= n.If

anothersample

became

available att(k +

1),

it

wouldbe

very costly to

compute9

using

(3.1.8). The

number offlops

to

compute9

is

onthe

orderofn3.The

recursiveapproach

is

to

compute9 for

n =ni

andthen

usethat

estimateof9

to

obtain9

for

n =n2

wheren2

> ni.One

additional measurement z(k+

1)

att(k

+

1)

is

z(k

+

l)

=hT(k

+

l)9(k)

+

v(k+

l)

(3.1.9)

Combining

the

additional measurementwith(3.1.1)

andfrom

(3.1.8)

9(k

+

l)

=[H^k+DHCk + lttWk

+

lJZtk+l)

(3.1.10)

0(k

+

1)

=[HT(k

+ l)H(k + DlHWk + l)z(k

+

1)

+

HT(k

+

l)Z(k

+

1)]

(3.1.11)

then

and

Z(k+1)

=z(k

+

l)

Z(k)

H(k

+

1)

=hT(k

+

l)

H(k)

V(k

+

1)

=v(k

+

l)

* v-1*- -*/

V(k)

9(k)

=P(k)HT(k)Z(k)

(3.1.12)

HT(k)Z(k)

=P-!(k)9(k)

(3.1.13)

P-1(k

+

l)=P1(k)

+

h(k-r-l)hT(k

+

l)

(3.1.14)

9(k+l)

=P(k

+

l)[h(k

+

l)z(k

+

l)+P1(k)9(k)]

(3.1.15)

:

P(k

+

l){h(k

+

l)z(k

+

1)

+

[Px(k

+

l)-h(k

+

l)hT(k

+

l)]9(k)}

(3.1.16)

=

9(k)

+

P(k

+

l)h[z(k

+

l)-hT(k+l)9(k)]

=

9(k)

+

K(k

+

l)[z(k

+

l)-hT(k

+

l)9(k)]

(3.1.18)

where

K(k

+

1)

=P(k

+

l)h(k

+

l)

(3.1.19)

Equations

(3.1.14),(3.1.18)

and(3.1.19)

are calledthe

information

form

ofthe

recursive

least

squares estimator.9(0)

andP_1(0)

canbe

initialized

to

0

andI/a

(0<a<

<1)

respectively.By

expressing

(3.1.18)

as9(k

+

1)

=[I-K(k

+

l)hT(k

+

l)]9(k)

+

K(k

+ l)z(k

+

1)

(3.

1

.20)

it

canbe

seenthat

the

recursiveleast

squaresestimatoris

atime-varying

digital filter

that

is

excitedby

randominputs. There is

acomputationcostin

inverting

the

n xnmatrixP

of(3.1.14).

By

applying the

matrixinversion

lemma

whichis

B1 = A1

+

CTJ^C

(where

all matrixinversions

are assumedto

exist,

A

andB

are nxn,

C

is

mxnand

D is

m xm)then

to

(3.1.14)

resultsin

P(k

+

1)

=P(k)-P(k)h(k+l)[hT(k-r-l)P(k)h(k

+

l)+I]1hT(k

+

l)P(k)

(3.1.21)

and

consequently,

by

substituting

(3.1.12)

into

(3.1.19)

for P(k

+

1)

andsimplifying

K(k

+

1)

=P(k)h(k+l)[hT(k+

l)P(k)h(k

+

l)

+I]"1(3.1.22)

and

simplifying

(3.1.21)

P(k

+

l)

=P(k)

K(k

+

l)hT(k

+

l)P(k)

(3.1.23)

P(k+1)

=[I-K(k

+

l)hT(k

+

l)]P(k)

(3.1.24)

Equations (3.

1.18),

(3.

1.22)

and(3.1.24)

arethe

covarianceform

ofthe

recursiveleast

squares estimator.9(0)

andP(0)

canbe initialized

to

the

same values asin

the informational

form.

It

canbe

seenthat

if

z(k)is

scaler, then

the

covariance

form

ofRLS

requires no matrixinversion,

andonly

onedivision.

In

the

development

ofthe

RLS

formulas

allthe

measurements were weightedby

the

same amount.In

the

caseoftime-varying

parameters,

aforgetting

orthan

pastones.By

introducing

aweighting

matrixW

whichis

diagonal

into

(3.1.3)

J(9(k))

=Z(k)TWZ(k)

(3.1.25)

Then

(3.1.8),

(3.1.14)

and(3.1.19)

couldbe

written as9(k)

=[HT(k)WH(k)]1HT(k)WZ(k)

(3.1.26)

P1(k

+

l)

=P1(k)

+

h(k

+

l)w(k

+

l)hT(k

+

l)

(3.1.27)

K(k

+

1)

=P(k

+

l)h(k

+

l)w(k

+

l)

(3.1.28)

and

(3.1.22)

asK(k

+

1)

=P(k)h(k

+

l)[hT(k

+

l)P(k)h(k

+

l)

+w(k +

l)f1(3.1.29)

the

weighted recursiveleast

squareestimator(covariance

form)

equationsare9(k

+

l)

=9(k)

+

K(k

+

l)[z(k

+

l)-h(k

+

l)9(k)]

(3.1.30)

K(k

+

1)

=P(k)hT(k + l)[h(k

+

l)P(k)hT(k

+

l)

+

w(k-r-l)]-1(3.1.31)

P(k)

=[I

-K(k)h(k)]P(k-l)/w(k

+

3.2

Discrete

Kalman

Filter

(Optimum

Observer)

A

dynamic

system whose statevariablesarethe

estimates ofthe

state variableofanothersystem

is

called anobserver ofthe

latter

system.The

stateestimator

that

is

optimum with respectto the

process noise and observationnoise

is

called aKalman

filter.

The Kalman

filter

wasfirst introduced

by

R. E.

Kalman

in 1960

for discrete

systems.In

the

development

ofthe

GSTOC

arecursive

discrete Kalman filter

withtime varying

gainswillbe implemented.

The

plant will nowinclude

processandmeasurementnoise.x(k

+

l)

=<Sx(k)

-r-Tu(k)

+

w(k)

(3.2.1)

z(k) =

Hx(k)

+

v(k)

(3.2.2)

where

w(k)

andv(k) arethe

process andmeasurementnoise and are assumedto

be

uncorrelatedandhave

gaussiandistributions.

Where

E{w(i)wT(j)}

=Q(i)8

(3.2.3)

E{v(i)vT(j)}

=R(i)8..

(3.2.4)

E{w(i)vT(j)}

=S

=0

(for

alli

andj)

(3.2.5)

and

the

covariance matrixQ(i)

is

positive semidefinite andR(i)

is

positivedefinite

sothat the

inverse

R_1(i)

exists.Disturbance

w(k)may

be

disturbance forces

acting

onthe

system,

errorsin

modeling,

system errorsdue

to

actuators or errorsin

the

translation

ofthe

known

input

u(k)into

physicalsignals.The

disturbance

v(k)is

often usedto

model errors

in

the

measurements madeby

the sensing

instruments,

unavoidable

disturbances

that

actdirectly

onthe

sensors and errorsin

the

state

x(0)

is

unknownbut

the

meanE{x(0)}

=mx(0)

and covariancePx(0)

areknown

andx(0)

is

uncorrelated withw(i)and v(i).To derive

the

recursiveequations

for

the

discrete Kalman

filter,

the

propagation ofthe

means andcovariancesof

the

system(3.2.1)

andthe

effects ofthe

measurements(3.2.2)

need

to

be investigated.

Taking

the

expected value of(3.2.1)

E{x(k

+

1)}

=E{Ox(k)

+

Tu(k)

+

^Fw(k)}

(3.2.6)

and

using

the

linearity

ofthe

expectation operator resultsin

E{x(k+

1)}

=E{$x(k)}

+

E{Tu(k)}

+

E

pFw(k)}

(3.2.7)

and

E{x(k

+

1)}

=$E{x(k)}

+

TE{u(k)}

+WE

{w(k)}

(3.2.8)

because

of<I>,

W,

T

aredeterministic.

Also

because

u(k)is

aknown input

andE{w(k)}

=0

from

being

gaussian,

equation(3.2.8)

canbe

reducedto

E{x(k

+

l)}

=<DE{x(k)}+ru(k)

(3.2.9)

The

state covariancePx(k),

whichis defined

asE{(x(k)

-mx(k))(x(k)

-mx(k))T}

propagates as:

Px(k

+

1)

=E{[x(k

+

l)-mx(k

+ l)][x(k+l)-mx(k

+

l)]T}

(3.2.10)

= <DPx(k)0T

+

^Q(k)T+

OE{[x(k)

-mx(k)]wT(k)WT}

+

WE{w(k)[x(k)

- mx(k)]T}<DT(3.2.12)

Because

mx(k)

is

notrandom andw(k)is

zeromean,

E{mx(k)wT(k)}

=mx(k)

andE{wT(k)}

=0 along

withE{w(k)mxT(k)}

=0.

Since

the

state vectorx(k)

depends

at

the most,

on randominput

w(k-l)

then

E{x(k)wT(k)}

=E{x(k)}E{wT(k)}

=0

and

E{w(k)xT(k)}

=0 then

(3.2.12)

reducesdown

to

Px(k

+

1)

= $Px(k)$T+

iJ/Q(OTT(3.2.13)

The first

term

of(3.2.13)

is

due

to the uncertainty

in

the

plant andthe

secondterm

is

due

to

the

process noise w(n).To determine

the

means and covariance ofthe

outputtake

the

expected valueof(3.2.2)

mz(k) =

E{z(k)}

=HE{x(k)}

=Hmx(k)

(3.2.14)

with

Ev(k)

=0

from

being

gaussian.The

cross covariancebetween

the

state andthe

outputis

Pxz(k)

=E{[x(k)

- mx(k)][z(k)-mztk)]7}

(3.2.15)

=

E{[x(k)

- mx(k)][H(x(k)-mx(k)

+

v(k)]T}

(3.2.16)

=Px(k)HT

(3.2.17)

Pz(k)

=E{[z(k)

-mz(k)][z(k)

-mz(k)]T}

(3.2.18)

=

E{[H(x(k)

-mx(k)

+

v(k)][H(x(k)

-mx(k) +

v(k)]1)

(3.2.19)

= HPx(k)HT

+

R

(3.2.20)

Defining

the

a priori estimationerrorcovariance attime

k

asx(k|k-l) = x(k)

-x(k|k-l)

(3.2.21)

and

the

associated a priori error covariance asP(k|k-1).

The

estimateattime

k

including

the

output measurementz(k),

be denoted

by

x(k|k).The

aposterioriestimationerror

is

then

x(k|k) = x(k)

-x(k|k)

(3.2.22)

and

the

associated aposteriori error covariance asP(k|k).

The

Kalman

filter has

two

steps ateachtime

k. The

time

update whichis

x(k-l|k-l)

updatedto

x(k|k-l)andthe

measurementupdatein

whichthe

measurementz(k)updatesx(k).

The

time

updatesof(3.2.9) & (3.2.13)

arex(k|k-l) =

<Dx(k-l|k-l) +

Tu(k-l)

(3.2.23)

P(k|k-1)

= <DP(k-l|k-l)^T+

*FQ(k-OTT(3.2.24)

The

measurementupdateofthe

Kalman filter

willinvolve

computing the

x(k|k)

=Fz(k)

+

g

(3.2.25)

The

matrixF

andthe

vectorg

needto

be determined

for

the

optimallinear

estimate of

x(k)

givenz(k).By

minimizing

the

mean-squarederror ofJ

=E{x(k|k)xT(k|k)}

(3.2.26)

=

E{tr[(x(k)

-x(k|k))T(x(k)

-x(k|k))]}

(3.2.27)

=

E{tr[(x(k)

-x(k|k))(x(k)

-x(k|k))T]}

(3.2.28)

=

E{tr[(x(k)

-Fz(k)

-g)(x(k)

-Fz(k)

-g)T]}

(3.2.29)

=

E{tr[(x(k)-x(k|k-l)-(Fz(k)

+g-x(k|k-D)

(x(k)

-x(k|k-l)

-(Fz(k)

+

g

-x(k|k-l))T]}

(3.2.30)

by

substituting

mz(k) =Hx(k|k-1)

andPzx(k)

=E{(z(k)

-mz(k))(z(k)

-mz(k))T}

into

(3.2.30)

J

=tr[P(k|k-l)

+

F(Pz(k)

+

mz(k)mzT(k))FT+

(g

-x(k|k-l))(g-x(k|k-l))T

+

2Fmz(k)(g-x(k|k-l))T-2FPzx(k)]

(3.2.31)

by

using

d/dF tr(FXTT)

=2FX

andd/dF

tr(DFX)

=DTXT(for any

matrixF,D

and

X).

J

=2F(Pz(k)

+

mz(k)mzT(k))-2Pzx(k)+

2(g-x(k|k-l)mzT(k))

+

(g-x(k|k-l))(g-x(k|k-l))T

Thus

to

minimizeJ it

is

requiredthat

5J/8g

=2(g

-x(k|k-l)) +

2Fmz(k)

=0

(3.2.33)

and

8J/8F

=2F(Pz(k)

+ mz(k)mzT(k))

-2Pxz(k)

+

2(g

-x(k|k-l)mzT(k))

=0

(3.2.34)

solving

for

g

from

(3.2.33)

g

=x(k|k-l)-FmzT(k)

(3.2.35)

substituting

g

from

(3.2.35)

into

(3.3.34)

resultsin

FPz(k)

Pxz(k)

=0

(3.2.36)

and

solving

for F

F

=Pxz(k)Pz-1(k)

(3.2.37)

substituting

(3.2.35)

&

(3.2.37)

into

(3.2.25)

givesthe

optimallinear

mean-squared estimate

for

x(k)x(k)

=x(k|k-l) +

Pxz(k)Pz-1(k)(z(k)

-mz(k))

(3.2.38)

replacing

Pxz(k),

Pz_1(k)

andmz(k)

withpreviously

computed valuesfrom

(3.2.17),

(3.2.20)

and(3.2.14)

andthe

appropriate substitutionsfor

P(k|k-1)

andx(k|k-l)

into

(3.2.38)

resultin

x(k|k) = x(k|k-l)

+

P(k|k-l)HT(HP(k|k-l)HT+

R)1(z(k)

Equation

(3.2.39)

is

the

optimallinear

estimate a posteriori estimate ofx(k)given

the

a priori estimatex(k|k-l)

andthe

new measurement z(k).In

computing the

a posteriori error covarianceP(k|k)

it is known

that the

linear

mean-squared estimate

is

unbiasedE{x(k)}

=mx(k)orE{x(k|k)}

=0

andusing

(3.2.38)

E{x(k|k)}

=E{x(k)

-x(k|k)}

(3.2.40)

-

E{(x(k)

-x(k|k-l))

-Pxz(k)Pz"1(k)(z(k)

-mz(k))}

(3.2.41)

=

E{x(k)

-x(k|k-l)}

(3.2.42)

=

E{x(k|k-1)}

(3.2.43)

Therefore,

x(k|k)provides anunbiasedestimateofx(k)aslong

asx(k|k-l)is

unbiasedand

from

(3.2.1)

and(3.2.9)

E{x(k|k-1)}

=E{x(k)

-x(k|k-l)}

(3.2.44)

=

E{x(k)

-Ox(k|k)

-Tu(k-l)

(3.2.45)

=

$E{x(k-l)

-x(k|k)}

(3.2.46)

=

0>E{x(k|k)}

(3.2.47)

shows

that

x(k|k-l)providesanunbiased estimateif

x(k-l|k-l)does. If

the

x(0) =

mx(0)

(3.2.48)

which

is

known,

then the

initial

estimateis

unbiased.Using

(3.2.38)

to

find

P(k|k)

P(k|k)

=E{x(k|k)xT(k|k)}

=E{(x(k)

-x(k|k))(x(k)

xfklk))1}

(3.2.49)

=

E{[(x(k)

-x(k|k-l))-

Pxz(k)Pz1(k)(z(k)

-mz(k))]

[(x(k)

-x(k|k-l))

-Pxz(k)Pz1(k)(z(k)

-mz(k))]T}

(3.2.50)

=

P(k|k-1)

-Pxz(k)Pz1(k)Pzx(k)

-Pxz(k)Pz'1(k)Pzx(k)

+

Pxz(k)Pz1(k)Pz(k)Pz"1(k)Pzx(k)

(3.2.51)

or

P(k|k)=

P(k|k-1)

-PxzCWPz-HkJP^k)

(3.2.52)

Using

(3.2.17)

& (3.2.20) into

(3.2.52)

withthe

appropriatesubstitutionby

P(k|k-1)

P(k|k)

=P(k|k-1)

+

R^HPtelk-l)

(3.2.53)

Equation

(3.2.53) is

known

asthe

matrixRiccati

equationfor

P(k|k)

orby

using the

matrixinversion

lemma

P(k|k)= [P(k|k-l)-1

+

(HTR-1H)-1l-1(3.2.54)

K(k)

= P(k|k-l)HT[HP(k|k-l)HT+

RJ-^PCklkJlTR-1(3.2.55)

then

(3.2.39)

couldbe

written asx(k|k)

=x(k|k-l) +

P(k|k)HTR"1[z(k)

-Hx(k|k-1)]

(3.2.56)

x(k|k) =

x(k|k-l) +

K(k)[z(k)

-Hx(k|k-1)]

(3.2.57)

K(k)

is

the

Kalman

gain matrix andit

showthe

influence

ofthe

newmeasurement

z(k)

in

modifying the

aprioriestimate x(k|k-l).The

quantity

[z(k)

Hx(k|k-1)]

is

calledthe

residual andis

an apriorioutputestimationerror,

sinceHx(k|k-1)

providesanestimate ofthe

output.Substituting

K(k)

into

(3.2.53)

givesP(k|k)=

[I-K(k)H]P(k|k-l)

(3.2.58)

The

recursive equationsfor

the Kalman filter

are:x(k|k-l) =

<x(k-l|k-l)

+

Tu(k-l)

(3.2.23)

x(k|k) = x(k|k-l)

+

K(k)[z(k)

-Hx(k|k-1)]

(3.2.57)

K(k)

= P(k|k-l)HT[HP(k|k-l)HT+

R]"1(3.2.55)

P(k-l|k-l)

=[I

3.3

Control Law

In

control systemdesign

it is

desirable

to specify the

closed-loop

dynamic

performanceof

the

system under control oroptimally

controlthe

statevariables and or

the

control variable.The design

ofthe

controller resultsin

the

assignment ofthe

poles ofthe closed-loop transfer

function,

the

methodis

known

as pole-placement.Pole-placement

using

state variablefeedback

is

whereall

the

internal

states are availablefor feedback

eitherin their

measured

quantity

ortheir

estimated quantities.Pole-placement

design

canspeed

up the

response of a sluggish system.The

procedurein

finding

the

gainmatrix

L

oftheGSTOC

willuseAckerman's

formula. Ackerman's

formula

is

based

on atransformation

from

ageneral system matrix$

to that

of a controlcanonical

form. Given

the

discrete

single-input single-output state spacemodel

x(k

+

l)

=<J>x(k)

+

Tu(k)

(3.3.1)

and

choosing the

controlinput

u(k)to

be

alinear

combination ofthe

statesu(k) = -Lx(k)

(3.3.2)

where

L

=[h 12 13

-..InJ

(3-3.3)

x(k

+

l)

=(4>-rL)x(k)

(3.3.4)

Given

the

characteristicequationP(z)

= zn+

Pn-iz""1+

pn-2Zn"2+

....+P1Z+ pn

(3.3.5)

If

the

system of(3.3.1)

is in

the

control canonicalform

shownin

(3.3.6)

x(k

+

l)

=0

10

0

0

1

0

0

-an -ai -Q2 -an-i

x(k)

+

0

0

u(k)

(3.3.6)

with

the

characteristic equationa(z) = |zl-

A|

= zn -l-an.iz11-1+

an.2zn-2+

+

aiz+a0

(3.3.7)

TL

=0

0

h

h

l3

....In

0

0

0

....0

0

0

0

....0

h

I2

13

....In

(3.3.8)

Then

the

closedloop

system matrixis

0

0

1

0

0

1

0

0

-(an

+

li)

-(ai+l2) -(02+ 13)

....-(an-i

+

ln)

(3.3.9)

and

the

characteristic equation of|zl-d>

+

TL|

aszn +

(Qn-i

+

ljz11-1+

(an-2

+

ln-l>n-2+

...+

(ai

+

l2)z+

(an

+

U)

(3.3.10)

then

by

equating

coefficientsin

(3.3.5)

&

(3.3.10)

resultsin

the

gainsli+1

=In

generalthe

system underinterest is

notin

control canonicalform. Given

that the

systemis

reachable,

atransformation

matrixT

needsto

found

to

convert

the

systemto the

form

of(3.3.6).

By

defining

anarbitrary

transformation

ofthe

state vectorx(k)

to

w(k)by

Tx(k)

=w(k)

(3.3.12)

the

new set of state equations arex(k

+

1)

=<>x(k)

+

Tu(k)

(3.3.13)

w(k+

1)

=T$x(k)

+

Tu(k)

(3.3.14)

w(k

+

l)

=TtfPMk)

+

TTu(k)

(3.3.15)

For

the

correct choiceofT,

T<J>T_1canbe

putinto

control canonicalform. Define

Tr^T"1

as

4>c

andTT

asTc.

By defining

a matrix calledthe controllability

matrixwc

=cr

$r

...$n2r

4>nlr]

(3.3.16)

and

wcc

=[rc

<j>crc

...(>cn-2rc

<$>cnlrc](3.3.17)

Wcc

=[TT

(Tr^T-^TT

...(TtfT^Tr

(T$T1)n-1Tr]

(3.3.18)

T

= WCCWC1(3.3.20)

P($)

=$?+

pi^11"1+

p2$n-2+

+pnI

(3.3.21)

where<J)

is

the

system matrixofthe transformed

system(3.3.6). Then

by

using

the

Caley-Hamilton

theorem

P($)

= in+

(pi-ai)^-1+

(p2-a2)rin-2+

+(Pn-an)I

(3.3.22)

The

last

row of<kis

zero exceptin

position n-k whichis

then

t

=[0

0

0

0

1]P($)

(3.3.23)

but

^=(T$T-X)

then

L

=Z.T

=[

00

0

0

UPOtyT-^T

(3.3.24)

=

[0

0

0

0

1]TP($)

(3.3.25)

butT

=WccWc-1and[0

0

0

0

1]WCC

=[

0

0

0

0

1],

then

L

=LWCC

Wc"1 =[

0

0

0

0

UW^P^)

(3.3.26)

which

is

Ackerman's formula.

Given

the

desired

matrixpolynomialac(A)

=An

+

an-iAnl+

...

4-anl.

Then

l

=to o o

...i] [

r

<j>r...<r2r^ri-1

The

next questionthat

needsto

be

answeredis

where shouldthe

closed-loop

poles

be

placed.Some

guidelinethat

governthe

choice ofthe closed-loop

polesare:[5]

Select

abandwidth high

enoughto

achievethe

desired

speed of response.In

practical

systems, there

arelimits

onthe

controlinput. If

the

controlsignalgenerated

by

the

linear feedback law

u(k) = -Lx(k)is

larger

than

possibleor

permissible, there may

be

potentialdamaging

effectsto the

plantordrive

the

plantinto

a non-linear region and(3.3.1)

will notaccurately

model

the

plant.Keep

the

bandwidth low

enoughto

avoidexciting

unmodeledhigh-frequency

effects andundesired responseto

noise.Place

the

poles atapproximately

uniformdistances

from

the

originfor

efficient useof

the

controleffort.The broad

guidelines above allowplenty

oflatitude for

the

specialneedsofindividual

applications.As

aresultfrom

optimizationtheory,

closedloop

polesin the

"Butterworth"

configuration

is

often suitable placement.The

"Butterworth"

configuration

places

the

polesradially

at anuniformdistance from

the

originin left half

plane.

The

roots ofthe Butterworth

polynomialsare givenby:

(s/o0)2m = (-Dm+1

(3-3-28

)

The

farther

away

from

the

openloop

poles,

the

closedloop

poles areplaced,

the

gains

to

effect a changein

the

system poles.Bandwidth

is

determined

primarily

by

its

dominant

poles,

i.e.,

the

poles with real parts closestto the

4.

PLANT DESCRIPTION

4.1

Plant

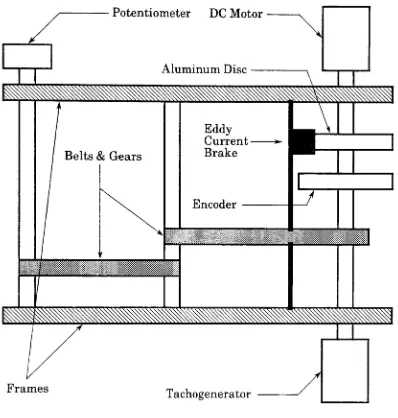

A LJE DC

motormodule,

a generaldiagram

shownin Fig.

#6,

willbe

used asthe

plantin

orderto

develop

the

GSTOC. The DC

motoris

aPhillips

modelMB-12. The

motoris

capable ofbeing

driven

at speeds ofup to

2,500

rpmin

either

direction.

An

analog

voltagein

the

range of+/-5

voltsdetermines

the

speed and

direction

of rotation.A

secondDC

motordriven

directly by

the

first

provides an

analog

feedback

voltagein

the

range of +/-5

voltsproportionalto

the

speed anddirection

of rotation.An

eddy

currentbrake,

which uses apermanent magnet

to

create a magneticfield

in

which analuminumdisc

rotates,

is

usedto

introduce torque

disturbances

onthe

motor.The

brake has

three

settings:1.

OFF,

2.

Mid

position, 3.

Fully

On. The

motor module alsohas

a continuous rotation

potentiometer,

whichis driven

by

an output shaftgeareddown

by

a ratio of9:1

from

the

motor.The

potentiometergives ananalog

feedback

voltagein

the

rangeof +/-2.5

voltsproportionalto the

position ofthe

Potentiometer

DC Motor

Belts & Gears

Eddy

Current

-Brake

Encoder

misn

J

HUHMliMiaWi yuSiittmSStMiii^k

Frames

[image:43.553.79.477.86.504.2]Tachogenerator

4.2

MATHEMATICAL

MODEL

The

mathematicalmodel of athe

LJE DC

servo motor usedin

this

applicationwill

be developed

in

this

section.The

generalelectrical characteristics of aDC

motor

is:

L

di(t)/dt

+

R

i(t)

=V

-emf

(4.2.1)

where

L

is

the

inductance

ofthe

motor armature(henries)

R

is

the

resistance ofthe

motor armature(ohms)

V

is

the

applied voltage(volts)

i(t)

is

the

currentthrough the

armature(amps)

di(t)/dt

is

the

instantaneous

current(amps/sec)

emf

is

the

back

emf=Ke

d9(t)/dt

where

Ke

is

a constant(Volts/rad/sec)

d9(t)/dt is

rotationalvelocity

(rad/sec)

The

general mechanicalcharacteristics of aDC

motoris:

Jm

d29(t)/dt2+

Bd9(t)/dt

+

K9(t)

=TL

- JLd29(t)/dt2(4.2.2)

where

Jm

is

the

motorinertia

(Kg

m2)9(t)

is

rotational position(rad)

d9(t)/dt is

rotationalvelocity

(rad/sec)

d29(t)/dt2

K

is

a stiffness constant(N

m/rad)B

is

adamping

constant(N

m/rad/sec)

JL

is

the

load inertia

(Kg-m2)

TL

is

the

load

torque

=Kp

i(t)

where

KT

is

the torque

constant(N-m/A)

i(t)

is

the

currentthrough the

armatureCombining

andsimplifying

4.2.1

&

4.2.2

andassuming that the

electricaltime

constant

is

much shorterthan the

mechanicaltime

constantyieldsd29(t)/dt2 =

Kj/JR

*V

-(B/J

+

KT

Ke/JR)

*d9(t)/dt

(4.2.3)

Letting

x(t) = d29(t)/dt2x(t) =

d9(t)/dt

the

scaler modelofthe

DC

motorcanbe

defined

asx(t) =

KT/JR

*u(t) -(B/J

+

Kp

Ke/JR)

* x(t)(4.2.4)

Substituting

the

LJE DC

motorparameter valuesR

=2

ohmsKt

=0.007 N-m/A

Ke

=0.008 V/rad/sec

B

=30.3 E

10'6N-m/rad/sec

Jm

=17.5

E

10"6Kg-m2the resulting

equation ofthe

unloadedDC

motoris:

x(t) =

3.4

u(t)

-3.331

x(t)

(4.2.5)

Using

the

Matlab function

c2d and asampling time

oflOmSec

the

discrete

scaler model of

the

DC

motoris:

x(k

+

1)

=0.96723867

x(k)

+

0.03344

u(k)(4.2.6)

wherex(k)

is

rotationalvelocity

state andu(k)is

the

control voltage.Equation

(4.2.6)

is

ofthe

form (3.3.1).

The loaded

scaler model ofthe

DC

motor wasfound

experimentally

andthe

resulting

equationis:

x(t) =

5.59

u(t) -5.4795

x(t)

(4.2.7)

and

the

discrete

equationis:

5.

DSP

IMPLEMENTATION

5.1

Parameter Identification

In

the

parameteridentification

part ofthe

GSTOC,

which usesthe

RLS

informational

form

with aforgetting

factor,

the

unknown parameters of9 in

(3.1.1)

that

wereestimated,

are$

andT

of(3.3.1). The

values ofH

of(3.1.1)

areknown functions based

onthe

pair of observationsz(k)

andu(k)

obtainedin

the

experiment.

The

recursive equations(3.1.30),

(3.1.31)

and(3.1.32)

were usedin

the

GSTOC

algorithm.Bierman's

U-D

covariancefactorization

method[7]

wasused

in

the

RLS

estimationfor

the

matrixP(k)

in (3.1.32).

The

factorization

offers computationalsavings,

numericalstability

and reductionsin

computer storage requirements.The

factorization

statesthat

matrixP(k)

can

be

factored

asP

=U D

UTwith

U

as an unit uppertriangular

matrixandD

is

adiagonal

matrix.Computing

withtriangular

matricesinvolves

fewer

arithmeticoperations,

which

is

preferredin

a realtime

applications.A

three

variableforgetting

factor

was also usedin the

algorithm.The

three

values were:

none,

if

the

estimation errorwasless

than

acertainvalue,

no update wasdone,

a value of0.999

for

slowly changing

parametersand a valueof

0.95 for

fast

changing

parameters.The

initial

valuesfor 9 in

(3.3.1)

werethe

loaded

experimentally

obtainedvalues of$

andV

of(4.2.8).

5.2

Kalman Filter

The

Kalman

filter

willbe

implemented

withstraightline

coding

ofthe

computations.

The

valuefor

R in

(3.2.55)

wasdetermined from data

obtainedfrom

the

experiments.The

valuefor

Q

in

(3.2.24)

was chosento

givethe

best

results.

The initial

valuesfor

P(k-l|k-l)

in

(3.2.58),

P(k|k-1)

in

(3.2.4)

andK(k)

in

(3.2.55)

wereobtainedusing

the

DLQE

Matlab function

onthe

parametersfrom

(4.2.8)

andthe

chosenvaluesofR

andQ.

5.3

Control Law

The

computing

ofthe

gain matrix wasimplemented

with straightline

coding

of

Ackerman's formula (3.3.27).

The initial

value ofthe

polefor

the

desired

characteristicequation was chosen as not

to

produce alarge

valued gainmatrix.

5.4

Scaling

When

using

fixed-point

arithmeticit

is

usually necessary to

performscaling

onthe

controllerto

be

implemented.[6]

Two

objectivesofscaling

areto

fit

the

data

whichis

computedduring

the

course of stateequationcalculationsinto

the

limited

number range ofthe

DSP,

sothat

overflows are avoided withoutprovoking

excessive signal quantation effects andthe

otheris

to

alterthe

coefficients

in

such away that

they

fit

into

the

coefficientnumberrange.Input /

outputscaling transforms the internal

fractional

representations ofanumber

to

the

external physicalvariables.The

input

to the

motor(output

ofthe

D/A)

is

+/-5

volts.A

-I-1 to

-1in

the DSP

would represent a+

5

voltto

-5volts

input

to the

motor.The

scaling

ofthe

numbers usedthe

GSTOC

algorithm

had

to

be

watchcarefully

so as notto

overflowthe

computed5.5

Division

Since

the

TMS320C25 doesn't have

adivide

instruction,

away

ofdoing

aninverse

operationhad

to

be implemented.

One

way to

accomplishthis

is

to

useapproximation

techniques

using

the

solution ofnon-linearequations.This

methodrequires

that

a solutionfor

the

equationg(x)

=0

(5.5.1)

be

found. One

meansfor

find

a solutionfor

(5.5.1)

is

by

Newton-Raphson

iteration.

Consider

the

Taylor

series expansionfor

g(x):g(x

+

h)

=g(x) +

hg\x)

+

r(x,h)(5.5.2)

wherer(x,h)

is

the

remainder andcanbe

consideredsmall andg'(x)is

the

first

derivative

of g(x).Leaving

offthe

remainderandin terms

ofincremental

valuesofx

the

approximation:g(x[i

+

l])

= g(x[i])+

{x[i

+

l]

x[i]}g'(x[i])(5.5.3)

Solving

for

x[i+

1]

and withg(x)

=0

yieldsthe

approximationx[i

+

1]

= x[i]-g(x[i])/g'(x[i])

(5.5.4)

thus

x[i+

1]

willconvergeto

asolution ofg(x) =0.

To

find

aninverse

ofanumberc(1/c)

onecanwrite:g(x[i]) =

l/x[i]

-c =

0,

(5.5.5)

substituting

in

(5.5.4)

andsimplifying

gives:x[i

+

l]

=x[i]{2-cx[i]}

(5.5.6)

The

initial

guessfor

x[0]

canalsobe

approximated.The

inverse

ofcas:1/c

= l/m*2-e(5.5.7)

A

good seedfor

the

iteration

ofthe

inverse

of c wouldbe

2e.

One

musttake

precautionsusing this

methodin

that

for

certainvaluesofc,

6.

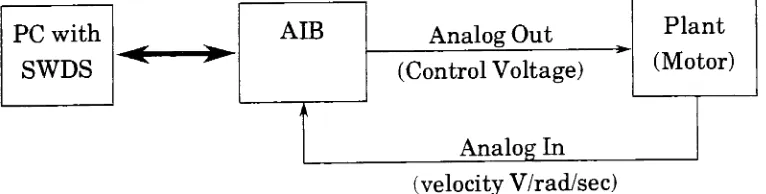

HARDWARE DESCRIPTION

A

TMS320

Analog

Interface Board

(AIB)

provided aninterface

from

the

TMS320C25

to the

plant.The AIB

is

ananalog-to-digital,

digital-to-analog

conversion

board

used as apreliminary

target

system withthe

SWDS

orin

astandalone configuration.

The

AIB

has

the

following

setoffeatures:

A

16

-bit

Analog

to

Digital

Convertor

with a-10Vto

+

10V analog

input

range with a maximum conversion

time

of17uSec.

A 16

-bit Digital

to

Analog

convertor witha-10Vto

+

10V

analog

outputrange with a

3uSec

settling time.

Sampling

rateclock withaprogrammablerange of5MHz to 76Hz

withausable range of

58.8KHz to 76Hz

(limited

by

the

conversionrangeofthe

A/D).

On-board

memory 4K

x16

to 64K

x16 for

standaloneoperation. [image:51.553.95.478.454.551.2]Anti-aliasing

andsmoothing

filters.

Fig. #7

showsablock diagram

ofthe

system configuration usedin the

GSTOC,

PC

withSWDS

AIB

Analog

Out

Plant

(Control

Voltage)

Analog

In

(Motor)

,*

(velocity

V/rad/sec)

7.

DSP CODE

The

development

andtesting

ofthe

GSTOC

algorithm wasdone

onthe

Texas

Instruments Software Development System (SWDS). The SWDS

is

aPC-resident software

development/debugging

tool

withthe

following

features:

Full

speed40

MHz TMS320C25 DSP

48

Kbytes (24 K

16-bit

words) of no wait stateRAM

RAM

configurablebetween

program anddata

spacememory

8

breakpoints

oninstruction

acquisitionWindow-oriented

debugger

screenData

logging

allowing

I/O

operationsto

disk

files

The

completeGSTOC

codeis

listed in Appendix A. The

codefor

the

GSTOC

algorithm

is

organized asfollows:

INITIALIZE

load

constantsfrom

programmemory to

data

memory

clearvariables

initialize

AIB

board

wait

for

interrupt

ISRO

get

input

z(k)compute

ERR

= z(k) - ye(k|k-l)Parameter Estimation

computepredictionerr

PERR

=HT(k)

*THETA(k)

update

diagonal,

off-diagonalelementsofP

andthe

gain valuesU(i,i),U(ij)

andBB*PERR

State Estimation

update

the

covariancematrixcalculate

the

Kalman

gainvaluesupdate

the

state estimateupdate

the

errorcovariancematrixPole-placement

Gain

calculate

the

gain matrixGSTOC

calculate

x(k|k)

calculate

the

control signalv(k)

saturate

the

control signalu(k)

update

the

estimatex(k|k-l)return

to

waitfor interrupt

By

using the timer

onthe

TMS320C25,

the

number of cyclesfor

eachpart ofthe

GSTOC

algorithm wasdetermined,

andthe

results are shownin

Table #1.

From Table

#1 the

total

executiontime

for

the

interrupt

service routineis

77uSec,

therefore the

maximumsampling

frequency

is 12.9 KHz. This

Code

sectionBytes

usedCycles

Initialize

40

Input

15

Parameter

Estimation

170

390

State

Estimation

100

156

Regulator

parameters

37

106

Rounding

routine

21

*GSTOC

103

[image:54.553.148.406.74.373.2]Total

394

770

Table #1:

Code

space and cyclesfor

eachpartof

the

GSTOC

algorithm. *included

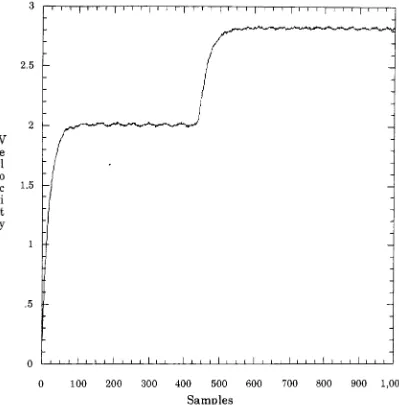

8.

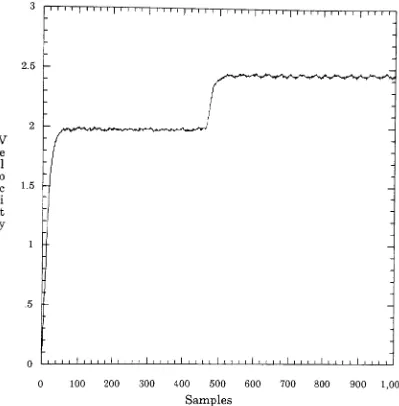

RESULTS

The data for

the

following

graphswere obtainedby

sampling

the

outputusing

the

analog to

digital

converteronthe

AIB. The

value at eachsampling

instant

was stored

into

data

memory

ofthe

SWDS. Then

using the

SWDS,

the

data

was

dumped

to

afloppy

disk. The data

wasthen

convertedfrom

the

hex

format

to

a usableform

that

couldbe

graphed.The

velocity

data

graphs show aresponse

to

astep

referencevelocity

input

andthen

after400

samples,

astep

torque

disturbance

was appliedto the

system.The

following

graphsillustrate

the

effects of closedloop

adaptive control.Fig.

#8

showsthe open-loop

responseto

adecrease in

the

load

torque.

In

the

open-loop

configurationany

changesin

the

load

torque,

causesuncompensatedchanges

in

the

motor speed.In Fig. #8

the

decrease in

torque

atthe

load

increased

the

speedofthe

motorfrom

anominal118

rad/secto

168

rad/sec,

whichwas

to

be

expected.The

speed ofthe

motorincreased

by

42%.

Also,

any

parameter variationssuch as

tolerances

between

motors andwear overtime

willcausechanges

in

the

speedofthe

motor.Fig. #9

showsthe

effectto

adecrease in load

torque

for

aunity

feedback

loop

controller.The

unity

feedback

will reducethe

sensitivity,

ofthe

motor,

to

changesin

the

load

torque.

In Fig.

#9,

the increase

in

motorspeedto the

changein

load

torque

from

the

nominalto 144

rad/sec,

a22% increase. Fig. #10 is

the

adaptivecontroller witha

10

mSecsampling

rate andthe

closedloop

poleis

set at0.7.

The

closedloop

poleat0.7

was chosenas notto

generatehigh

gain valuesin

the

poleplacementfeedback

controller.As

shownin

Fig.

#10,

there

is

very

little

overshootdue

to the

closeapproximation ofthe

closedloop

poleto the

error

in the velocity

and slowconvergence.Fig.

#11 is

with

the

closedloop

pole set at

0.5. In

this

figure

there

is

ajust

noticeableincrease in

the

overshootfrom Fig.

#10,

due

to

moving

the

closedloop

polefarther

away

from

the

openloop

systempole,

which causesthe

feedback

gainsto

increase

thus

driving

the

plant

harder. The increase

gain wasthought to

decrease

the

errorin the

output velocity.

In Figs. #10 &

#11,

the

10

mSecsampling

rate cause errorsin

the

parameter estimation algorithmproducing

slow convergence andlarge

steady

state errorsin

the

output velocity.Fig.

#12

is

the

adaptivecontrollerwith a

1

mSecsampling

rate andthe

closedloop

pole set at0.7. In Fig.

#12 the

overshoot

due

to the

faster

sampling

rateis

due

to the

positionofthe

openloop

pole

being

closerto the

unit circlecausing

a37.5%

overshoot.This high

overshoot shouldn't

be

a problem.This figure

shows nosteady

state errorin

the

output velocity.The

adaptationis

completein approximately

115

samples,

which at

1

mSecis 115

mSec.Fig. #13

is the

responseto the

decrease

in torque

with

the

closedloop

poleset at0.9.

The

overshootis just

noticeably

lower,

but

still

high

at35%.

Fig.

#13

showsthe

samezerosteady

state errorin

the

n I I I I I T

"1

i i i|

i ' i]

i i i prn i|

i i ip

i i i | i i ip

r_1 I I 11 I I I I I L_Lj i i

I

i i iLj

i iL

J I I LJ I L I 1 I I 10

100200

300

400

500

600

700

800

900

1,000

Samples

[image:57.553.75.474.121.526.2]2.5

V

e

1

o

c

i

t

y

1.5

' ' ' '

' ' ' ' '

I

' ''I

' ' 'I

' ' ' i|

i i i >|

i i i i|

i i i i|

i i i i|

i i i i.5

i i i i i i ' ' i I i i i ' I i i i i I i i i i I i i i i I i i i i I i i i i l i i i i I

0 100

200

300400

500

600

700

800

900

1,000

Samples

[image:58.553.75.474.118.524.2]2.5

-2

-V

e

1

o

c

i

t

y

1.5

.5

-1 '

| ' '

|

i i i|

i i i|

i i i|

t i i [n i

|

i i ip

r_i_j i l_i i_ I i i i I i i i I ' i i I i ' i

I

i i iI

i i iI

i_ i i i i i0

100200

300

400500

600

700800

900

1,000 [image:59.553.74.474.105.521.2]Samples

Figure #10:

Closed

Loop

Response

with10

mSecsampling

andclosedloop

0

I

i i iI

i i iI

i i iLj

i iI

i i iI

i i iL_i

i i I ' i i I i i i 1 i0

100

200

300

400

500

600

700

800

900

1,000

Samples

Figure

#11: