Dialogue Act Classification with Context-Aware Self-Attention

Full text

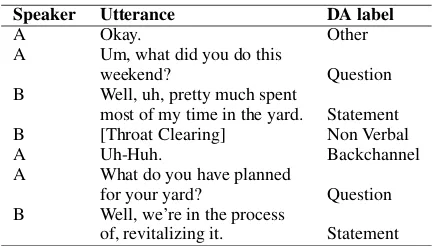

Figure

Related documents

To show that our model can select sentence parts that are related to domain aspects, we visualize the self-attention results on some tweet examples that are correctly classified by

In this paper, we explore the use of AMs to learn the context representation, as a manner to differentiate the current utterance from its context as well as a mechanism to
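Using attention to build a context representation around the current utterance can be sketched as below. This is a minimal sketch under stated assumptions: each utterance is taken to be already encoded as a fixed-size vector (the encoder is not shown), the current utterance serves as the query, and plain scaled dot-product attention is used over the preceding utterances. The function names are hypothetical, and this generic formulation is not necessarily the attention mechanism used in the excerpted paper.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_context(current: Vector, context: List[Vector]) -> Tuple[List[float], Vector]:
    """Hypothetical helper: score each context utterance against the current one.

    Assumes pre-computed utterance vectors. Returns the attention weights and
    the weighted sum of context vectors (the context representation).
    """
    d = len(current)
    # Scaled dot-product score of the current utterance (query) vs. each context vector (key).
    scores = [dot(current, c) / math.sqrt(d) for c in context]
    weights = softmax(scores)
    # Weighted sum of the context vectors (values) gives one summary vector.
    summary = [sum(w * c[i] for w, c in zip(weights, context)) for i in range(d)]
    return weights, summary


if __name__ == "__main__":
    current_utt = [1.0, 0.0]
    previous_utts = [[1.0, 0.0], [0.0, 1.0]]
    weights, summary = attend_context(current_utt, previous_utts)
    print(weights, summary)
```

The context utterance more similar to the current one receives the larger weight, so the summary vector leans toward it; a classifier could then combine this summary with the current utterance's own vector.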

Previous studies separately exploit sentence-level contexts and document-level topics for lexical selection, neglecting their correlations. In this paper, we propose a

Context-aware Natural Language Generation for Spoken Dialogue Systems. In Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers,

textual information which has not previously been explored in existing deep learning models for DA classification; (2) we propose a dual-attention hierarchical recurrent

Two: consider all the turns as a single input text (possibly separated by EOS tokens) and learn the target label from it. However, none of the options is truly context
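The second option, treating all turns as one input with EOS separators, can be sketched as follows. This is a minimal sketch under assumptions not in the source: whitespace tokenization, a hypothetical `<eos>` separator string, and a single target label paired with the flattened sequence. The function names are hypothetical.

```python
from typing import List, Tuple

# Hypothetical separator token; the text only says turns "may be separated by EOS tokens".
EOS = "<eos>"


def flatten_dialogue(turns: List[str], eos: str = EOS) -> List[str]:
    """Concatenate all turns into one token sequence, appending an EOS token after each turn.

    Whitespace tokenization is an assumption made for illustration only.
    """
    tokens: List[str] = []
    for turn in turns:
        tokens.extend(turn.split())
        tokens.append(eos)
    return tokens


def make_example(turns: List[str], label: str) -> Tuple[List[str], str]:
    """Pair the flattened dialogue with the single target label to be learned."""
    return flatten_dialogue(turns), label


if __name__ == "__main__":
    dialogue = ["hi there", "how can I help you", "book a table for two"]
    tokens, label = make_example(dialogue, "request")
    print(tokens, label)
```

The EOS tokens preserve turn boundaries inside the flat sequence, but the model still sees one undifferentiated input, which is why this option does not explicitly separate the current utterance from its context.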

We evaluate our model on the Alex Context natural language generation (NLG) dataset of Dušek and Jurčíček (2016a) and demonstrate that our model outperforms the RNN-based model

In this paper, we have described our approach towards building a language-independent context-aware query translation, replacing the language resources with the rich