in music production

Wilson, AD and Fazenda, BM

Title

Perception & evaluation of audio quality in music production

Authors

Wilson, AD and Fazenda, BM

Type

Article

URL

This version is available at: http://usir.salford.ac.uk/30255/

Published Date

2013

USIR is a digital collection of the research output of the University of Salford. Where copyright

permits, full text material held in the repository is made freely available online and can be read,

downloaded and copied for noncommercial private study or research purposes. Please check the

manuscript for any further copyright restrictions.

PERCEPTION & EVALUATION OF AUDIO QUALITY IN MUSIC PRODUCTION

Alex Wilson and Bruno Fazenda

Acoustics Research Centre

School of Computing, Science and Engineering

University of Salford

Salford, UK

alex.wilson199@gmail.com

ABSTRACT

A dataset of audio clips was prepared and audio quality assessed by subjective testing. Encoded as digital signals, a large amount of feature-extraction was possible. A new objective metric is pro-posed, describing the Gaussian nature of a signal’s amplitude dis-tribution. Correlations between objective measurements of the music signals and the subjective perception of their quality were found. Existing metrics were adjusted to match quality perception. A number of timbral, spatial, rhythmic and amplitude measures, in addition to predictions of emotional response, were found to be re-lated to the perception of quality. The emotional features were found to have most importance, indicating a connection between quality and a unified set of subjective and objective parameters.

1. INTRODUCTION

A single, consistent definition for quality has not yet been offered, however, for certain restricted circumstances, ‘quality’ has an un-derstood meaning when applied to audio. Measurement techniques exist for the assessment of audio quality, such as [1] and [2], how-ever such standards typically apply to the measurement of quality with reference to a golden sample; what is in fact being ascertained is the reduction in perceived quality due to destructive processes, such as the effects of compression codecs, in which the audio being evaluated is a compressed version of the reference and the deteri-oration in quality is measured [3].

Such descriptions would not strictly apply to the evaluation of quality in musical recordings. This study is concerned with the audio quality of ‘produced’ commercial music where there is no fixed reference and quality is evaluated by comparison with all other samples heard. This judgement is based on both subjective and objective considerations.

In systems where objective measurement is possible, there is still disagreement regarding which parameters contribute to quality and the manner of their contribution. The work of Toole indicates that, with loudspeakers, even a measure as trivial as the on-axis amplitude response does not have a simple relationship to quality [4]. Toole suggests evidence of many secondary factors influenc-ing listener preference, even based on geography. For example, [5] describes a specific studio monitor as having a ‘European’ tone, citing a consistent, subjective evaluation leading to this descrip-tion.

With music being described by Gurney as the‘peculiar delight which is at once perfectly distinct and perfectly indescribable’[6], this use of language is typical in audio and does present some prob-lems. Lacking a solid definition for audio quality, each listener can

apply their own criteria, making it highly subjective.

[7] describes a series of subjective parameters (including spa-tial impression and tonality) and suggests applicable measurement methodologies. The concept of the existence of an ideal amount of a given signal parameter to yield maximal subjective quality ratings for a given piece of music is one that will be explored.

If quality is highly subjective the aspects of the subject which are of influence can be investigated. [8] found that an expert group of music professionals was able to distinguish various recording media from one another, based on their subjective audio quality ratings of classical music recordings. While a CD and cassette displayed a distinct difference in quality, formats of higher fidelity than CD were not rated significantly higher than the CD. The ex-pertise of the listener is therefore thought to be a factor in quality-perception, due to ability to detect technical flaws.

In summary, audio quality, as applied to music recordings, is predicted to be based on both subjective and objective mea-sures. This paper will investigate both aspects using independent methodologies, and subsequently attempt to determine what corre-lations exist and how the subjective and objective evaluations can be linked, to lead towards a quality-prediction model.

2. METHODOLOGY

The hypotheses under test are as follows:

1. There are noticeable differences in quality between samples 2. Listener training has an influence on perception of quality 3. Familiarity with a sample is related to how much it is liked 4. Quality is related to one or more objective signal parameters

2.1. Subjective Testing

To obtain subjective measures of the audio signals a listening test was designed in which subjects listened to a series of audio clips and answered simple questions on their experience.

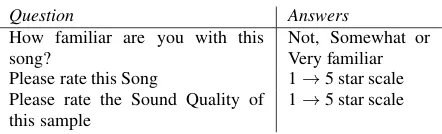

Question Answers

How familiar are you with this song?

Not, Somewhat or Very familiar Please rate this Song 1→5 star scale Please rate the Sound Quality of

this sample

1→5 star scale

2.1.1. Audio Selection

All audio samples were 16-bit, 44.1kHz stereo PCM files. 55 sam-ples were used, each a 20 second segment of the song centered around the second chorus (where possible) with a one second fade-in and fade-out. This forecast the test duration at 20-25 mfade-inutes. Based on the guidelines described in [9], listener fatigue was con-sidered negligible. The audio selection process was influenced by the test hypotheses. As familiarity was investigated, there needed to be a number that were unfamiliar to all and some familiar to all. To achieve this, six songs by unsigned Irish artists were used. The bulk of samples used were from 1972 to 2012, with two 1960s samples, and was predominately pop and rock styles.

2.1.2. Test Delivery

The listening test was delivered using a MATLAB script, which displays text, plays audio and receives user input. Controlled tests took place in the listening room at University Of Salford. The room has been designed for subjective testing and meets the re-quirements of ITU-R BS. 1116-1, with a background noise level of 5.7dBA [10]. While the test ran on a laptop computer subjects were seated at a displaced monitor and keyboard, minimising dis-tractions as well as reducing fan noise from the computer. Audio was delivered using Sennheiser HD800 headphones and the order of playback was randomised for each subject.

Unlike methods described in Section 1, this experiment con-tains no reference for what constitutes highest or lowest quality. In this case, what is being tested is that which the subject does natu-rally, listening to music. In more rigorously-controlled testing with modified stimuli, there is a risk of gathering unnatural responses to unnatural stimuli. In order to test normal listening experiences, each audition was unique and the audio had not been treated in any way, other than loudness equalisation to mimic modern broad-cast standards or programs such as Spotify. Loudness levels were calculated using the model described in [11]. These predictions agreed well within-situmeasurements performed using a Brüel & Kjær Head And Torso Simulator (HATS) and sound level meter. For testing, samples were auditioned at an average listening level of 84dBA, measured using the HATS and sound level meter.

Additional subjects were tested in less controlled circumstances, outside of the listening room, using Sennheiser HD 25-1 II head-phones. A small number of subjects were tested in both controlled and uncontrolled circumstances and displayed a high level of con-sistency, permitting further uncontrolled tests, most of which took place in quiet locations at NUI Maynooth and Trinity College Dublin.

2.2. Objective Measures

In order to characterise the audio signals the features in Table 2 were extracted from each sample. Feature-extraction was aided by the use of the MIRtoolbox [12].

Feature Description

Crest factor Ratio of peak amplitude to rms amplitude (in dB)

Width 1 - (cross-correlation between left and right channels of the stereo signal, at a time offset of zero samples)

Rolloff Frequency at which 85% of spectral energy lies below[13]

Harsh energy Fraction of total spectral energy contained within 2k-5kHz band

LF energy Fraction of total spectral energy contained within 20-80Hz band

Tempo Measured in beats per minute Gauss See Section 2.2.1

Happy Prediction of emotional response[14] Anger Prediction of emotional response[14]

Happy and Anger were chosen from a set of five classes, in-cluding Sadness, Fear and Tenderness. The latter three were re-jected due to weak correlation with quality ratings obtained.

Rolloff describes the extent of the high frequencies and the overall bandwidth, especially when combined with LF energy, rep-resenting the band reproduced by a typical studio subwoofer, al-though this could extend to 100 or 120Hz in some units. Various ranges were compared to quality ratings and the 20-80Hz range was found to be most highly correlated.

Harsh energy was based on author experience and the accounts of mix engineers, where this range was said to imbue a ‘cheap’, ‘harsh’, ‘luxurious’ or ‘smooth’ character. Again, various bands were compared to quality ratings and 2k-5kHz was found to be most highly correlated.

2.2.1. Gauss - A Measure of Audible Distortion

The classic model of the amplitude distribution of digital audio is a modified Gaussian probability mass function (PMF) with zero-mean. Note: ‘histogram’, ‘probability density function’ and ‘PMF’ are often used interchangeably but the unique distinctions are used here. Most commercially-released music prior to the mid-1990s adheres to this model, particularly when there is sufficient dynamic range and the mix consists of many individual elements.

Hard-limiting becomes a feature of the PMF with the onset of the ‘loudness war’ [15], where the extreme amplitude levels as-sume higher probabilities, sometimes exceeding the zero-amplitude probability to become the most probable levels.

A more recent phenomenon has been the presence of wider peaks in the interim values, as seen in Figure 1b, to avoid clipping as described above. This can be caused by a number of issues, such as the mastering of mixes already limited and the limiting of individual elements in the mix, such as the drums. Reports suggest it is not uncommon in modern audio productions to use multiple stages of limiting in the mix process to prepare for the limiting it will receive during the mastering process [16].

[image:3.595.57.278.105.172.2]fea-−1 −0.5 0 0.5 1 0

500 1000 1500

f = Histogram

Normalised Sample Amplitude

Average Occurances per second

−1 −0.5 0 0.5 1

0 20 40 60 80 100 120 140 160 180

f prime

x

dy/dx

r2 = 0.9988

Data Smoothed fitted curve

(a)Tori Amos - ‘Crucify’ - 1991

−1 −0.5 0 0.5 1

0 100 200 300 400 500 600

f = Histogram

Normalised Sample Amplitude

Average Occurances per second

−1 −0.5 0 0.5 1

0 10 20 30 40 50

f prime

x

dy/dx

r2 = 0.4026

Data Smoothed fitted curve

[image:4.595.51.291.88.346.2](b)Amy Winehouse - ‘Rehab’ - 2006

Figure 1:Depiction of Gauss feature

ture. The histogram was evaluated using 201 bins, providing a good trade-off between runtime, accuracy and clarity of visualisa-tion. In order to evaluate the shape of the distribution, particularly the slope and the presence of any localised peaks, the first derivate was determined. For the ideal distribution this had a Gaussian form (see Figure 1a) so ther2

of a Gaussian fit was calculated for each sample. This was used as a feature describing loudness, dynamic range and related audible distortions, referred to as ‘Gauss’.

3. RESULTS

[image:4.595.314.546.96.220.2]The total number of subjects tested was 24; 9 female and 15 male. Expertise was 12 expert, 5 musician and 7 neither. The mean age was 27 years. With 55 audio samples and 24 subjects, 1320 audi-tions were gathered and analysis was performed on this dataset.

Table 3:Results of 3-way ANOVA

int. level Source d.f. Quality Like

F p F p

1 Sample 54 7.78 0.00 7.74 0.00 1 Expertise 2 4.50 0.01 3.95 0.02 1 Familiar 2 17.62 0.00 204.47 0.00 2 S*E 108 0.94 0.65 0.85 0.87 2 S*F 94 1.08 0.29 1.30 0.03 2 E*F 4 3.16 0.01 3.20 0.01 3 S*E*F 106 1.06 0.34 0.95 0.61 The results of a 3-way ANOVA, shown in Table 3, show that each main effect is significant (p < 0.05), for quality and like, in addition to a significant second-level interaction between exper-tise and familiarity for quality and two second-level interactions

3.2 3.4 3.6 3.8 Naive

Musician Expert

1−way ANOVA − Quality, grouped by Expertise

Quality rating

(a) 1-way ANOVA: Mean Quality, grouped by subject group

20 22 24 26 28 Naive

Musician Expert

One−way ANOVA − TimeTaken grouped by Expertise

Time Taken per sample (s)

[image:4.595.331.529.514.592.2](b) 1-way ANOVA: Time Taken, grouped by subject group

Figure 2:Results of subjective test

for like (Sample/Familiar and Expertise/Familiar). To investigate further, one-way ANOVA tests were performed with post-hoc mul-tiple comparison and Bonferroni adjustment applied.

The mean quality ratings for the audio samples ranged from 2.12 to 4.29. The result in Table 3 supports test hypothesis #1, that certain samples are perceived as higher-quality than others.

Mean quality scores were significantly lower for the expert group than the naïve group (F(1,2) = 3.42, p= 0.03, see Fig-ure 2a). This provides support for test hypothesis #2, that a lis-tener’s training has an influence on quality-perception. Compar-ing the expert and musician groups shows that the groups agree on quality. The expert group were more critical of quality than the naïve group indicating that factors such as distortion and dynamic range compression were more easily identified. The mean time taken to evaluate each 20-second sample varied according to ex-pertise, with the naïve group responding significantly quicker than the other two groups (F(1,2) = 12.16, p= 0.00), shown in Fig-ure 2b. As their quality ratings were also higher, this indicates that the naïve group was less aware of what to listen for or simply less engaged in the experiment, further supporting hypothesis #2.

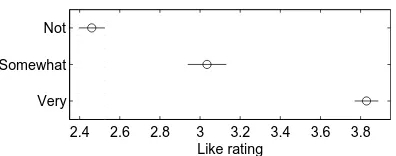

2.4 2.6 2.8 3 3.2 3.4 3.6 3.8 Very

Somewhat Not

1−way ANOVA − Like, grouped by Familiarity

Like rating

Figure 3:1-way ANOVA: Mean Like, grouped by familiarity

[image:4.595.50.290.578.677.2]3.1. Historical Study of Features

The scattered data in Figure 4 is smoothed by local regression us-ing weighted linear least squares and a second-degree polynomial model method, with rejection of outliers.

Figure 4a shows Gauss plotted against release year. It should be noted that the compact disc format was released in late 1983. Songs from earlier time periods are represented by remastered re-leases and their Gauss values should be considered estimates.

[image:5.595.323.532.219.398.2]The values in the years immediately following the release of the CD show a ‘hi-fi’ period, with corresponding high quality rat-ings (see Figure 4c). This begins to subside in 1990, when the amplitude limits of the CD are reached and signals begin to be subjected to hard-limiting. What follows is a period of increasing loudness and distortion, until a practical limit is reached in 1997. Figure 4b shows anger values regularly exceed the expected range at the same time as the loudness war. The data then indicates a re-turn to more Gaussian-like amplitude distributions in recent years.

1965 1970 1975 1980 1985 1990 1995 2000 2005 20100 0.5

1 1.5 2 2.5 3

Years

−log10(1−GAUSS)

GAUSS − Timeline of Ratings

(a)Gauss by Release Year

1965 1970 1975 1980 1985 1990 1995 2000 2005 2010 1

7 10 15 20 25 30 35

Years

Anger

Anger − Timeline of Ratings

(b)Anger by Release Year

1965 1970 1975 1980 1985 1990 1995 2000 2005 2010 1

2 3 4 5

Years

Rating

Timeline of Ratings

Like Data Quality Data Like Smoothed Quality Smoothed

(c)Subjective Ratings by Release Year

Figure 4:History of features

3.2. Correlation Between Features

The correlation between variables is shown in Figure 5. This illus-trates that the emotional predictions are comprised of other fea-tures, for example, anger is correlated with loudness measures (crest factor and gauss), spectral measures (harsh energy) and the other emotional prediction feature, happy. Also evident is the sim-ilarity between crest factor and gauss. Harsh energy and LF energy are related by definition, as both are proportions of the total spec-tral energy. The correlation not immediately obvious is between harsh energy and tempo, with slower tempo samples having less energy in the 2kHz to 5kHz band.

Figure 5:Correlation matrix, showingr2of linear fit between vari-ables. P values are in italics and determine cell-shading.

3.3. Objective Measures Compared to Quality

The expected output of themiremotionfunction is in the range 1 to 7, extending to a likely 0 to 8 [14]. While happy scores ranged from 1.7 to 7.2, anger scores ranged from 2 to 32. Due to this range, the analysis is performed on a logarithmic scale. The Gauss feature values have a range of 0 to 1 but most lie between 0.9000 and 0.9999. To better approximate a linear plot, the data plotted is equal to−log10(1−Gauss), which magnifies this upper range.

Little correlation was found between objective parameters and the like variable. However, individual features were significantly correlated with subjective quality ratings. This is shown in Figure 6, where each point is the mean quality value for each sample over all subjects, and the trend lines shown are best fit lines ascertained using linear regression.r2values range from 0.0831 to 0.3532 and

all correlations were found to be significant, withp <0.05 (apart from the sample subset with above-optimal width, see Section 4.5). By these significant correlations, test hypothesis #4 is supported.

4. DISCUSSION

[image:5.595.75.264.286.704.2]8 10 12 14 16 18 2 2.5 3 3.5 4 4.5 CREST FACTOR dB Quality

r2 = 0.1211

p = 0.0092

1 2 3

2 2.5 3 3.5 4 4.5 GAUSS −log10(1−Gauss) Quality

r2 = 0.1484

p = 0.0290

4000 6000 8000 10000 2 2.5 3 3.5 4 4.5 ROLLOFF Frequency (Hz) Quality

r2 = 0.1350 p = 0.0058

0.15 0.2 0.25 0.3 0.35 2 2.5 3 3.5 4 4.5 HARSH ENERGY

Fraction of Freq.Spec

Quality

r2 = 0.1885 p = 0.0009

0.02 0.04 0.06 0.08 2 2.5 3 3.5 4 4.5 LF ENERGY

Fraction of Freq.Spec

Quality

r2 = 0.0831

p = 0.0328

100 150 200

2 2.5 3 3.5 4 4.5 TEMPO BPM Quality

r2 = 0.1519

p = 0.0033

0.4 0.6 0.8

2 2.5 3 3.5 4 4.5 HAPPY Log10(Happy) Quality

r2 = 0.2108 p = 0.0004

0.5 1 1.5

2 2.5 3 3.5 4 4.5 ANGER Log10(Anger) Quality

r2 = 0.2382 p = 0.0002

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

2 2.5 3 3.5 4 4.5 WIDTH 1−SLR Quality

r2 = 0.3532

p = 0.0045

r2 = 0.0370

[image:6.595.62.276.81.630.2]p = 0.2759

Figure 6: Comparison of perceived quality and objective mea-sures, showing linear regression and associatedr2and p values

4.1. Emotional Response Predictions

Themiremotionfeatures used (happy and anger) were highly cor-related with quality, yielding some of the highestr2values of the features in Figure 6. This suggests that the perception of quality

is linked to the ‘emotion class’ in which the sample belongs and the listener’s emotional reaction to the sample; high quality rat-ing were awarded in instances of high happy coefficient and low quality ratings for high anger coefficient. Moreover, this result in-dicates a connection between quality and a unified set of subjective and objective parameters, as themiremotionfeatures are objective measures designed to predict subjective responses.

It should be noted that the algorithm used for prediction was originally trained by audio entirely from film soundtracks [14]. It is likely that these samples were classical and electronic styles and recorded with ample dynamic range. The samples used here yield a range of values suggesting a weakness in the assumptions of the original methodology - the predictions may not be reliable for commercial music from a wide timespan. For example, ‘Sober’ by Tool (1993) scores 3.9 for anger, compared to ‘Teenage Dream’ by Katy Perry (2010) scoring 10.5. Additionally, ‘Raining Blood’ by Slayer (1986) scores similarly to ‘With Or Without You’ by U2 (1987), rated 4.5 and 4.6 respectively - the similar release time ruling out extreme production differences in the previous example. Supported by Figure 4b, it is suggested that anger shows good correlation with quality due to the features which make up the pre-diction, although in this case it may not be a good prediction of the actual emotional response of the listener, due to differences in pop/rock music to the original training set of film scores.

4.2. Spectral Features

Quality ratings were higher in cases of high rolloff and high LF energy, relating to wider bandwidth. LF energy also relates to pro-duction trends and advancements in technology as the ability to capture and reproduce these low frequencies has improved over time due to a number of factors, including the use of synthesis-ers and developments in loudspeaker technology, such as the use of stronger magnet materials allowing smaller cabinet volumes, which are more easily installed in the home.

That high harsh energy is related to low quality shows the sen-sitivity of the ear at these frequencies, and this measure displays one of the highest correlations.

4.3. Amplitude Features

The relationship between crest factor (as a measure of dynamic range) and quality suggests that listeners can identify reduced dy-namic range as a determinant of reduced quality. Despite a dif-ferent methodology this supports recent studies which refute that hypercompressed audio is preferred or achieves greater sales [17]. The newly derived Gauss metric worked well as a means of classifying the most distorted tracks from those less so, by encod-ing fine structure in the signal’s PMF. With issues relatencod-ing to loud-ness and dynamic range compression receiving much attention in the community this new feature can be used to gain insight into the perceptual effects of loudness-maximisation.

4.4. Rhythmic Features

4.5. Spatial Features

While one linear model was not appropriate for width, an optimal value is found close to 0.17, where quality ratings reach a peak. This precise value was likely influenced by headphone playback, where sensitivity to width is enhanced. Due to this relationship, the width plot in Figure 6 shows two linear fits, with the dataset divided into values above and below 0.17.

The data indicates that there is an increase in perceived qual-ity in going from monaural presentation to an ideal stereo width. However, wider-still samples saw no significant change in quality. For the reference of the reader, the samples used that measured closest to this optimal width are ‘Sledgehammer’ by Peter Gabriel (1986), ‘Superstition’ by Stevie Wonder (1972) and ‘Firestarter’ by Prodigy (1996).

The optimal width was narrower than the mean width (0.25). This may be due to recent attempts in popular music production to produce wider mixes, where this modern width may be presenting as lower-quality due to coincidence with modern dynamic range reduction.

5. CONCLUSIONS AND FURTHER WORK

Correlations between objective measures of digital audio signals and subjective measures of audio quality have been found for the open-ended case of commercial music productions. Dynamics, distortions, tempo, spectral features and emotional predictions have shown correlation with perceived audio quality.

A new objective signal parameter is proposed to unify crest factor, clipping and other features of the audio PMF. This feature works better than crest factor alone for identifying quality, and an analysis of how the feature varies with release year provides an insight into production trends and the evolution of the much-discussed loudness war. Some further work is needed to improve performance, identify a more robust feature or to test the use of the histogram itself as a feature vector.

Due to the correlations between objective measures and quality-perception it is anticipated that quality scores can be predicted by means of the extracted signal parameters. A number of possible implementations are being explored at the time of writing.

With only a relatively small test panel, the results are indica-tive rather than conclusive. Additional subjecindica-tive testing would be required to increase confidence in the findings. While con-cepts have been proven a greater number of audio samples and subjects would be needed for future development. Such a listen-ing test would be well-suited to a mass-participation experiment, conducted on-line. The robustness of feature based quality predic-tions would benefit from this larger dataset, towards the goal of automatic quality-evaluation and subsequent enhancement.

6. REFERENCES

[1] ITU-R BS.1534-1, “Method for the subjective assessment of intermediate quality levels of coding systems,” Tech. Rep., International Telecommunication Union, Geneva, Switzer-land, Jan. 2003.

[2] ITU-T P.800, “Methods for objective and subjective assess-ment of quality,” Tech. Rep., International Telecommunica-tions Union, 1996.

[3] Amandine Pras, Rachel Zimmerman, Daniel Levitin, and Catherine Guastavino, “Subjective evaluation of mp3 com-pression for different musical genres,” inAudio Engineering Society Convention 127, 10 2009.

[4] Floyd E. Toole, “Loudspeaker measurements and their rela-tionship to listener preferences: Part 1,” J. Audio Eng. Soc, vol. 34, no. 4, pp. 227–235, 1986.

[5] Lorenz Rychner, “Featured review: Neu-mann KH 120 A Active Studio Monitor,” http://www.recordingmag.com/productreviews/2012/12/59.html, Accessed feb 1, 2013, 2012.

[6] Edmund Gurney, The Power of Sound, Cambridge Univer-sity Press, 2011, originally 1880.

[7] W. Hoeg, L. Christensen, and R. Walker, “Subjective assess-ment of audio quality - the means and methods within the ebu,”EBU Technical Review, pp. 40–50, Winter 1997. [8] Richard Repp, “Recording quality ratings by music

profes-sionals,” inProc. Intl. Computer Music Conf., New Orleans, USA, Nov. 6-11, 2006, pp. 468–474.

[9] Raimund Schatz, Sebastian Egger, and Kathrin Masuch, “The impact of test duration on user fatigue and reliability of subjective quality ratings,”J. Audio Eng. Soc, vol. 60, no. 1/2, pp. 63–73, 2012.

[10] ITU-R BS.1116-1, “Methods for the subjective assessment of small impairments in audio systems including multichan-nel sound systems,” Tech. Rep., International Telecommuni-cations Union, Vol. 1, pp. 1–11, 1997.

[11] Brian R. Glasberg and Brian C. J. Moore, “A model of loud-ness applicable to time-varying sounds,” J. Audio Eng. Soc, vol. 50, no. 5, pp. 331–342, 2002.

[12] Olivier Lartillot and Petri Toiviainen, “A matlab toolbox for musical feature extraction from audio,” inProc. Digital Au-dio Effects (DAFx-07), Bordeaux, France, Sept. 10-15, 2007, pp. 237–244.

[13] George Tzanetakis and Perry R. Cook, “Musical genre clas-sification of audio signals,” IEEE Transactions on Speech and Audio Processing, vol. 10, no. 5, pp. 293–302, 2002. [14] Tuomas Eerola, Olivier Lartillot, and Petri Toiviainen,

“Pre-diction of multidimensional emotional ratings in music from audio using multivariate regression models,” inInternational Conference on Music Information Retrieval, Kobe, Japan, Oct. 26-30, 2009, pp. 621–626.

[15] Earl Vickers, “The loudness war: Background, speculation, and recommendations,” inAudio Engineering Society Con-vention 129, 11 2010.

[16] David Pensado, “Into The Lair #47 - Creat-ing Loud Tracks with EQ and Compression,” http://www.pensadosplace.tv/2012/09/18/into-the-lair-47-creating-loud-tracks-w-eq-and-compression/, accessed April 4, 2013, September 2012.

[17] N.B.H. Croghan, K.H. Arehart, and J.M. Kates, “Quality and loudness judgments for music subjected to compression limiting.,” J Acoust Soc Am, vol. 132, no. 2, pp. 1177–88, 2012.