CSEIT172486 | Received : 18 July 2017 | Accepted : 29 July 2017 | July-August-2017 [(2)4: 328-334]

International Journal of Scientific Research in Computer Science, Engineering and Information Technology © 2017 IJSRCSEIT | Volume 2 | Issue 4 | ISSN : 2456-3307

328

Overflow : Multiple Site Awareness for Big Data Management

and Scientific Workflows on Clouds

Swaroopa Shastri, Mahesh Sajjanshetty

Department of Computer Studies and Computer Applications, Visvesvaraya Technological University,

Centre for PG Studies, Kalaburagi, Karnataka, India

ABSTRACT

The worldwide organization of cloud server farms is empowering expansive scale logical work processes to enhance execution and convey quick reactions. This phenomenal topographical appropriation of the calculation is multiplied by an expansion in the size of the information taken care of by such applications, conveying new difficulties identified with the effective information administration crosswise over destinations. High quantity, low potentials or price related exchange offs are only a couple worries designed for together cloud suppliers and clients with regards to taking care of information crosswise over server farms. Existing arrangements are constrained to cloud-gave capacity, which offers low execution in light of fixed cost plans. Thusly, work process engines necessity to make up alternates, accomplishing execution at the cost of difficult framework setups, keep expenses, decreased solid quality and reusability. We present Overflow, a unchanging information administration framework for logical work processes running crosswise over topographically disseminated destinations, meaning to receive monetary rewards from this geo-differing qualities. Our answer is condition mindful, as it screens and representations the worldwide cloud framework, contribution extraordinary and unsurprising information taking care of execution for exchange price and period, inside and crosswise over sites. Overflow suggests an arrangement of pluggable administrations, assembled in an information researcher cloud set. They give the applications the likelihood to screen the basic framework, to endeavour smooth information pressure, deduplication and geo-replication, to assess information administration expenses, to set an exchange off amongst cost and period, and enhance the exchange procedure consequently. The outcomes demonstrate that our framework can show exactly the cloud execution and to use this for proficient information scattering, having the capacity to reduce the money related expenses and exchange time by up to three times.

Keywords: Big Data Management, Cloud Server, Higgsboson Disclosure, Google Cloud, Bio-Informatics, VM

I.

INTRODUCTION

The all around appropriated server farms cloud foundations empower the quick advancement of vast measure applications. Cases of such requests running as cloud administrations crosswise over locales run from office synergistic devices worldwide securities exchange examination devices to entertainment services and logical work processes. The majority of these applications are conveyed on numerous destinations to use closeness to clients through substance conveyance systems. Other than serving the nearby customer asks for, these administrations need to keep up a worldwide rationality for mining inquiries,

upkeep or observing operations, that require extensive information developments.

Problem Statement

include an immense number of factual experiments for attesting possible significant district of interests (e.g. connects amongst mind areas and qualities). This handling takes demonstrated to benefit significantly beginning a transference crosswise over destinations. Other than the requirement for extra register assets, applications need to conform to a few cloud suppliers’requirements, which require them to be sent on geologically appropriated site.

Objective of the study

To begin with the administration use deduplication applications call the check deduplication (Data, Destination Site) capacity to confirm in the

@Metadata Registry of the goal site if (comparable) information as of now exist. The verification is done in view of the one of a kind ID or the hash of the information. On the off chance that the information be present, the exchange is supplanted through the report of the information at goal.

This takes the greatest additions, togetherperiod and cash insightful, amongstentirely density strategies. On the other hand if the information exist not officially display at the goal site, their mass can even now conceivably be decreased by relating pressure calculations. Regardless of whether to invest energy and assets to put on such a calculation and the determination of the calculation herself are choices that we permission to clients, who identify the request semantics.

We will likely make exact estimations however in the meantime to stay nonexclusive with our model, paying little respect to the followed measurements or the earth changeability. The administration supports client educated pressure related choices, that is, compression– time or compression–cost pick up estimation.

Scope of the study

The multi-site cloud is comprised of a few topographically circulated server farms. An application that has numerous running occasions in a few organizations over different cloud server farms is alluded to as a multi-site cloud application. Our concentrate is on such applications. In spite of the fact that applications could be conveyed crosswise over

sites having a place with various cloud sellers theyexistavailable of the extent of this work.

II.

METHODS AND MATERIAL

Transforming geo-differences into geo-repetition requires the information or the condition of uses to be dispersed crosswise over locales. Information developments are period and asset expending and it is in efficient for applications to interrupt their principle calculation with a specific end goal to perform such operations.

Applications basically show the information to be motivated and the goal by means of an API work appeal, i.e., Duplicate(Information, End). At that point, the administration plays out the geological reproduction by means of multi-way exchanges, while the application proceeds continuous. Repeating information opens the potential outcomes for various enhancement systems. By utilizing the beforehand presented benefit for evaluating the cost, the georeplication service can improve the process for price or implementationperiod. To this reason, applications are furnished with a discretionary restriction when do the capacity. By differing the estimation on this subject parameter in the section of 0 and 1, applications resolve demonstrate a greaterheaviness for rate (i.e. an estimation of 0) or for period (i.e. an estimation of 1), which thus will decide the measure of assets to usage for repeating the information. This remains finished by questioning the cost estimation benefit for the base in addition most extreme circumstances, the particular price forecasts, and after that utilizing the arrangement guideline as a slider to take in the middle of them.

III.

LITERATURE SURVEY

framework and can be conveyed on both private and open mists. This paper portrays the plan of e-SC, its API and its utilization in three distinctive contextual analyses spirit information representation, medicinal information catch and examination, and invention holdings anticipation [2].In this proposed system we are portraying the WAS trade in worldwide and show the information in sequence order, as we bring in the underlying plan and model discharge of Stork Cloud, and reveal its viability in huge information contacts cater-cornered over topographically removed capacity destinations, server farms, and teaming up foundations[3].Writing study is fundamental visit to investigate the issue area and handle top to bottom learning on related field, which can be necessary discovery to get worry of the current problem. In the region of massive framework improvement, we need to direct different prerequisite assembling so as to know the issue legally. Be that as it may, genuine test starts when we need to settle on tools and developments which could suit best to take care of the proposed issue [4].Writing study helps us to discover the likely most proficient way to address the issue, which would just not tackle the issue, but rather in a productive and least demanding conceivable way [5].

IV.

Existing System

The handiest alternative for dealing with information disseminated over a few data centers is to depend on the current distributed storage administrations. This approach permits to exchange information between subjective endpoints by means of the distributed storage and it is received by a few frameworks with a specific end goal to oversee information developments over wide-zone systems.

Other than capacity, there are few cloud-gave administrations that emphasis on information dealing with. Few of them utilize the land circulation of information to decrease potentials of information exchanges. Amazon's Cloud Front, for example, utilizes a system of edge areas around the globe to store duplicate static substance near

The issue of booking information concentrated work processes in mists accepting that records are recreated in different execution sites.

Then again, end-framework parallelism can be misused to enhance usage of a private way by methods for parallel streams or simultaneous exchange. Be that as it may, one ought to likewise consider framework design since particular nearby imperatives may present bottlenecks. One problem with every one of these methods is that they can't be ported to the clouds, meanwhile they definitely depend on the fundamental system topology, obscure at the client level.

Disadvantage:

These existing works cannot decrease the economic cost and exchange time.

V.

Proposed System

In this framework, we propose Overflow, a completely mechanized single and multi-site programming framework for logical work processes information administration.

We propose an approach that improves the work process information exchanges on mists by methods for versatile exchanging between a few intra-site record exchange conventions utilizing setting data.

We construct a multi-route exchange approach crosswise over middle hubs of different data centers which total transmission capacity for proficient between destinations exchanges.

Our proposed work can be utilized to boost huge scale work processes through a wideprocedure of pluggable administrations that scale and enhance prices, provide bits of information on the earth execution and allowsmoothinformation pressure, deduplication and geo-replication.

The virtual machine chooses the shortest path among all the virtual machine to send the file to the destination of virtual machine.

Advantages:

Our proposed work can decrease the economic costs and exchange time by up to three times. We can also know distance between the Virtual

VI.

RESULTS AND DISCUSSION

System Design :

Architecture

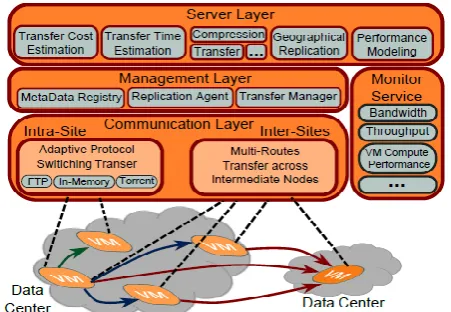

Figure 1: Extendible server based structural design of the overflow system

Meta Data Registry

It contains the positions of records in virtual machine.

It make use of memory and the table contain document identification name (e.g., title, client distribution group etc.) and areas.

Replication Agent

The replication specialist is a helper part to the sharing usefulness, securing adaptation to non-critical failure over the hubs.

The benefit keeps running as a foundation procedure inside each VM.

In-Memory

It targets little documents exchanges or arrangements which have vast extra memory.

Module Description

In my project there are Four Modules 1. Cloud Formation

2. Upload File & File Request 3. Multipath Selection

4. Smart data Compression & Replication

Cloud Formation

Now this section, we frame the cloud. At this point we produce single cloud specialist co-op. The situation screens site subtle elements, pragmatic machine points of interest, information register and transmission period.

We create locales. All site takes interesting identification then associate through cloud service provider. The situation see neighbour site indirect elements.

At that point we create pragmatic machine. At this timeall pragmatic machine takes special identificationat that moment associate by alluring site. This one see neighbour pragmatic machine points of interest.

Upload File & File Request

In this module, each VM can transfer a record into its own particular stockpiling. These subtle elements are put away in information register. If another VM need to get to this record, he sent the

document demand to Source VM.

Multipath Selection

In this module, the source pragmatic machine needs toward lead record addicted to goal pragmatic machine.

Toward diminish price and exchange period, this one need pick most brief way among basis pragmatic machine toward goal pragmatic machine.

So it finds the Multipath utilizing Multipath Selection calculation then locate the most brief way.

Smartdata Compression & Replication

Big information size is too huge. In the event that any source VM send this huge information to goal, its cost and exchange time is expanded.

To handle this issue, we should pack this huge information to little information. So we apply savvy information pressure strategy.

Finally, the source VM reproduces its keen packed information to goal VM.

Figure 2: Screen Shots Shows Cloud Service Provider

ENTER SITE ID

Figure 3: Screen Shots Shows Enter site id

DISPLAY SITE-1(DATA CENTER-1)

Figure 4: Screen Shots Shows Display site-1(Data center-1)

SITE-1 CONNECTED SUCCESSFULLY (DATA CENTER-1)

Figure 5: Screen Shots Shows Site-1 connected successfully (Data center-1)

ENTER VIRTUAL MACHINE ID

Figure 6: Screen Shots Shows Enter Virtual Machine id

DISPLAY VIRTUAL MACHINE-1

Figure 7: Screen Shots Shows Display Virtual Machine-1

Figure 8: Screen Shots Shows Site-1 Connected Virtual Machine-1

ENTERED SITE ID OF VIRTUAL MACHINE-2

Figure 9: Screen Shots Shows Entered site id of Virtual Machine-2

UPLOAD DATA INTO OWN DATABASE VM1

Figure 10: Screen Shots Shows Upload data into own database VM1

FILE REQUEST HAS BEEN SENT FROM VM-2 TO VM-1

Figure 11: Screen Shots Shows File request has been sent from VM-2 to VM-1

SHORTEST PATH CHOOSEN SUCCESSFULLY

Figure 12: Screen Shots Shows Shortest path chosen successfully

CSP SHOWS DETAILS

Figure 13: Screen Shots CSP Shows details

Figure 14: Screen Shots Shows Transfer time details

TRANSFER TIME WITH GRAPH

Figure 15: Screen Shots Shows Transfer time with Graph

VII.

CONCLUSION

The project presents Over-Flow, an information administration system for logical work processes running in huge, physically spread and extremely powerful conditions. Our framework can successfully utilize the rapid systems associating the cloud server farms through advanced convention fine-tuning and blockage shirking, whereasoutstanding non-meddlesome and simple to convey. Over-Flow exists utilized as a part of generation on the Azure Cloud, as an information administration backend for the Microsoft GeneralOperative work process motor.

VIII.

FUTURE ENHANCEMENT

Supported by these outcomes, we idea to additionally investigate the effect of the metadata contact on the general work processperformance. For logical work processes taking care of numerous little documents,

this can turn into ablockage, therefore we ideatowardsexchange the per site @metadata registries through a worldwide, various levelled unique. Besides, amotivatingway to investigate is the neareraddition between Overflow on taking care of surges of information in the cloud and also other information preparing for motors. To this end, an allowance of the semantics of the programming interface is required.

VI REFERENCES

[1]. R. Tudoran, A. Costan, R. R. Rad, G. Brasche, and G. Antoniu,"Adaptive file management for scientific workflows on the azure cloud," in BigData Conference, 2013, pp. 273-281. [2]. H.Hiden, S. Woodman, P.Watson, and J.Cała,

"Developingcloud applications using the e-science central platform." In Proceedings of Royal Society A, 2012.

[3]. B. e. a. Calder, "Windows azure storage: a highly availablecloud storage service with strong consistency," in Proceedings of the Twenty-Third ACM Symposium on Operating Systems Principles,ser. SOSP '11, 2011, pp. 143-157.

[4]. T. Kosar, E. Arslan, B. Ross, and B. Zhang, "Storkcloud:Data transfer scheduling and optimization as a service," in Proceedings of the 4th ACM Science Cloud '13, 2013, pp. 29-36.