Leveraging Syntactic Constructions for Metaphor Identification

Kevin Stowe

University of Colorado, Boulder kevin.stowe@colorado.edu

Martha Palmer

University of Colorado, Boulder martha.palmer@colorado.edu

Abstract

Identification of metaphoric language in text is critical for generating effective semantic representations for natural language understanding. Computational approaches to metaphor identification have largely relied on heuristic-based models or feature-based machine learning, using hand-crafted lexical resources coupled with basic syntactic information. However, recent work has shown the predictive power of syntactic constructions in determining metaphoric source and target domains (Sullivan, 2013). Our work intends to explore syntactic constructions and their relation to metaphoric language. We undertake a corpus-based analysis of predicate-argument constructions and their metaphoric properties, and attempt to effectively represent syntactic constructions as features for metaphor processing, both in identifying source and target domains and in distinguishing metaphoric words from non-metaphoric.

1 Metaphor Background

Metaphor can be understood as the conceptualization of one entity using another. Lakoff and Johnson’s seminal work shows that metaphors are present at the cognitive level and expressed linguistically (Lakoff and Johnson, 1980). A typical conceptual metaphor mapping is ARGUMENT IS WAR, in which ARGUMENT is structured through the domain of WAR:

1. He defended his position through his publications.

2. Her speech attacked his viewpoint.

The term “linguistic metaphor” is used to indicate these types of words and phrases. We will focus on linguistic metaphor, as identifying these utterances as metaphoric is critical for generating correct semantic interpretations. For instance, in the examples above, literal semantic interpretations of ‘defend’ and ‘attack’ will yield nonsensical utterances: a physical position cannot reasonably be defended by a publication, nor can a speech physically attack any kind of entity.

Automatic metaphor processing tends to involve two main tasks: identifying which words are being used metaphorically (here called metaphor identification), and attempting to provide an accurate semantic interpretation for an utterance (here called metaphor interpretation). The first has largely been approached as a supervised machine learning problem, typically using lexical semantic features and their interaction with context to learn the kinds of situations where lexical metaphors appear. The problem of metaphor interpretation is more complex, with approaches including the implementation of full metaphoric interpretation systems (Martin, 1990), (Ovchinnikova et al., 2014), identification of source and target domains (Dodge et al., 2015), developing knowledge bases (Gordon et al., 2015), and providing literal paraphrases to metaphoric phrases (Shutova, 2010), (Shutova, 2013).

In both identification and interpretation systems, syntax tends to play a limited role. Many systems rely only on lexical semantics of target words, or use only minimal context or dependency relations to help disambiguate in context (Gargett and Barnden, 2015), (Rai et al., 2016). Others rely on topic modeling and other document and sentence level features to provide general semantics, and compare the lexical semantics to that, ignoring the more “middle”-level syntactic interactions (Heintz et al., 2013). While these approaches have been effective in many areas, there is evidence that figurative language is significantly influenced by syntactic constructions, and thus if they can be represented more effectively, metaphor processing capabilities can be improved.

We will examine five kinds of predicate-argument constructions in corpus data to assess their metaphoric distributions and usefulness as features for classification. Our contribution is twofold. First, we examine the LCC metaphor corpus, which includes source and target annotations, to determine their use in predicate-argument constructions (Mohler et al., 2016), and employ syntactic representations as features to improve source/target classification. Second, we investigate predicate-argument constructions in the VUAMC corpus of metaphor annotation (Pragglejaz Group, 2007), and employ syntactic features to predict metaphoric vs non-metaphoric words.

2 Metaphor and Constructions

Recent metaphor research has indicated that construction grammar can be employed to determine the source and target domains of linguistic metaphors (Sullivan, 2013). In many cases, certain constructions can determine what syntactic components are allowable as source and target domains. For example, verbs tend to evoke source domains. The target domain is then evoked by one or more of the verb’s arguments (from Sullivan pg 88):

1. the cinema beckoned (intransitive)

2. the criticism stung him (transitive)

3. Meredith flung him an eager glance (ditransitive)

In these instances, the verb is from the source domain and at least one of the objects is from the target. However, arguments can also be neutral and don’t necessarily evoke the target domain. Pronouns like ‘him’ in (2) and (3) don’t evoke any domain. The optionality of domain evocation makes it harder to predict which elements of the construction participate in the metaphor. Despite this limitation, this analysis shows that syntactic structures beyond the lexical level can be indicative of source and target domains. To better understand how these structures determine metaphor, we explored metaphor-annotated corpus data for predicate-argument constructions.

3 Computational Approaches

While metaphor processing has largely been focused on capturing lexical semantics, there have been a variety of approaches that incorporate syntactic information. Many computational approaches focus on specific constructions, perhaps indicating the need to classify different metaphoric constructions through different means. The dataset of (Tsvetkov et al., 2014) provides adjective-noun annotation which has been extensively studied (Rei et al., 2017), (Bulat et al., 2017). A particularly promising approach is that of (Gutierrez et al., 2016), who use compositional distributional semantic models (CDSMs) to represent metaphors as transformations in vector space, specifically for adjective-noun constructions. Another relevant approach is that of (Haagsma and Bjerva, 2016), who use clustering and selectional preference information to detect metaphors in predicate argument constructions, including verbs with objects, subjects, and both. Their highest F1 is 57.8 for verbs with both arguments.

Many systems that rely heavily on lexical resources also include some dependency information. (Rai et al., 2016) and (Gargett and Barnden, 2015) use a variety of syntactic features including lemma, part of speech, and dependency relations. However, both systems are feature-rich and these syntactic elements’ contribution is unclear. (?) use lexical features along with contrasting those features between the target word and its head. (Dodge et al., 2015) employ a variety of constructions in identifying metaphoric source and target domains. They identify a broad range of constructions and use these as templates that metaphoric expressions can fill. Our work expands on this idea by formalizing the constructions into features for statistical metaphor identification.

Perhaps the most syntactically oriented metaphor identification system is that of (Hovy et al., 2013), who use syntactic tree kernels to identify metaphor. They use combinations of syntactic features via tree kernels and semantics via WordNet supersenses and target word embeddings. Our approach expands on this by exploring different syntactic representations and incorporating semantics through word embeddings into the syntactic structures.

4 Corpus Analysis

variety of source-target patterns in each construction’s argument structure, an in-depth analysis of how these constructions and their metaphoric properties are distributed is still needed. We examined the predicate argument constructions they analyze by using hand-annotated metaphor corpora to better understand the distributional patterns that occur. This allows us to make predictions about what kind of constructions and arguments are useful for metaphor identification and interpretation and what might be a computationally feasible way to implement them.

While they examine many kinds of constructions, most of them seem based almost entirely on the lexical semantics of the words involved, and thus can be captured simply by effectively representing the meaning of individual words. Domain and predicative adjective constructions fall into this category: the construction is identified by the type of adjective, which needs to be represented at the lexical level. The more interesting cases are argument structure constructions, which take many forms. Sullivan identifies nine different argument structure constructions that each have their own source and target properties:

1. Intransitive

2. Transitive

3. Intransitive Resultative

4. Transitive Resultative

5. Ditransitive

6. Equation

7. Predicative AP

8. Predicative PP

9. Simile

To identify the use of metaphor in these constructions, we will rely on two resources: the LCC metaphor corpus and the VUAMC corpus. The freely available portion of the LCC corpus contains approximately 7,500 source/target pairs, allowing for a more in-depth look at metaphoric semantics. The VUAMC contains approximately 200,000 words of text with each word tagged as metaphoric or non-metaphoric. This allows for large scale analysis of metaphoricity versus non-metaphoricity at the word level.

4.1 Identifying Constructions

To examine metaphors in these corpora, we need a method for automatically identifying predicate-argument constructions. The VUAMC corpus, as a subsection of the BNC baby, comes with gold-standard dependency parses. For the LCC dataset, we used the dependency parser from Stanford Core NLP tools (Manning et al., 2014). These parses are sufficient to identify intransitive, transitive, and ditransitive constructions. Verb instances that have an indirect object are ditransitive, those that lack an indirect object but have a direct object are transitive, and those that lack either but have a subject are intransitive. Copulas are marked in the dependency parses, so we can easily identify equative constructions. While similes can take many forms, Sullivan’s work focuses on simile constructions that consist of a copular verb and the word ‘like’. This oversimplifies to some degree, as many similes don’t need a copula (‘she fretted like a mother hen’, ‘they flew like bats’), but it allows us to create a subset of equative constructions that represent copular similes.
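The decision rules above can be sketched as a small function. This is only an illustration under stated assumptions: the label strings `nsubj`, `dobj`, and `iobj`, the boolean flags, and the function name `classify_construction` are hypothetical choices for the example, not the label set or code used with the corpora.

```python
from typing import Optional, Set


def classify_construction(dep_labels: Set[str],
                          is_copula: bool = False,
                          has_like: bool = False) -> Optional[str]:
    """Map the dependency labels attached to one verb token to a construction.

    dep_labels: labels of the verb's dependents (assumed label names).
    is_copula:  True if the parse marks the verb as a copula.
    has_like:   True if the copular clause contains the word 'like'.
    """
    if is_copula:
        # Copular similes are treated as a subset of equative constructions.
        return "simile" if has_like else "equation"
    if "iobj" in dep_labels:
        return "ditransitive"   # has an indirect object
    if "dobj" in dep_labels:
        return "transitive"     # direct object, no indirect object
    if "nsubj" in dep_labels:
        return "intransitive"   # subject only
    return None                 # no overt arguments found in the parse
```

Because only overt dependents are inspected, a typically ditransitive verb with a dropped object would be labeled by its surface realization.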

This analysis is necessarily limited, as we cannot automatically capture more complex constructions via dependency parses, and many of these are often metaphorically rich. While we understand this limitation, we believe that we can utilize syntactic features of these basic constructions as a starting point, with a future goal of expanding to more complex examples.

Also note that we only identify the surface realization of these constructions - any dropped arguments or missing elements that aren’t in the dependency parse aren’t considered a part of the construction. Thus we see examples of typically ditransitive verbs (like ‘give’) that occur intransitively and transitively, as they lack overt direct and indirect objects.

5 LCC Analysis

In order to identify constructions in the LCC data, we extracted syntactic relations from the dependency parses, using the basic patterns previously defined to identify predicate argument constructions. This allows us to identify the five different constructions: intransitives, transitives, ditransitives, equatives (copulas), and similes (analyzed as a subset of equative constructions). For each construction found, we can identify the predicate and the predicate’s arguments, and determine for each whether they are identified as metaphoric and whether they belong to the source or target domain.

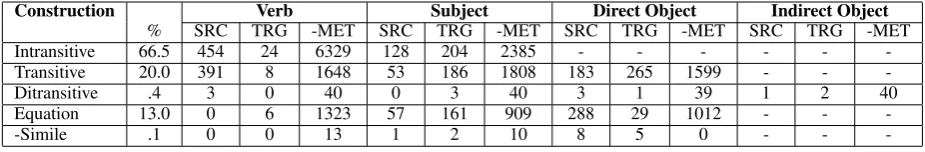

Figure 1: Counts of metaphoric items in the LCC. Each bar represents the total instances of argument in each construction, as well as the percentage of items that belong to source and target domains.

The vast majority of constructions in the LCC are intransitive, transitive, and equative. Ditransitives (.4%) and similes (.1%) are exceedingly rare. This may be because the similes found are only the verbal type: instances of a copula with the word ‘like’. Other similes are likely missed by this automatic approach.

The majority of metaphoric verbs (92%) are source domain items, supporting Sullivan’s claims. Subjects and objects tend to be from the target domain (61% each). Ditransitive verb constructions are relatively rare, with only 43 found, and only 3 of those containing a metaphoric verb.

Figure 1 shows the counts of source and target items in the LCC data, based on construction and argument of the construction. Note that in equative constructions, direct objects are almost always source domain items, showing a parallel between copular arguments and verbs. This is likely due to the predicative nature of the direct objects of copular verbs.

5.1 Source and Target Identification

Given that verbs and their argument structures have varying distributions of source and target domain items, we believe that these syntactic structures can be effectively employed in the classification of source and target domain words. While identifying source and target domains at the sentence level requires lexical and sentential semantics and may not require syntactic information, identifying lexical triggers can be improved by using better syntactic representations. To this end we set up a classification task for identifying source and target elements.

The LCC contains phrase-level annotations for source and target elements. We split each sentence into words, projecting the source and target annotations to the word level. From this, we developed three classification tasks: (1) identifying source words, (2) identifying target words, and (3) identifying any metaphoric word (either source or target). Our classification scheme focuses on verbs and nouns, as these are the elements that compose the syntactic structures in question.
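A minimal sketch of this projection, assuming phrase annotations are given as token-index spans with an exclusive end. The span format, the label strings, and the helper names `project_to_words` and `task_labels` are assumptions made for illustration; the example sentence is taken from Section 1 and is not an LCC record.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end_exclusive, "source" | "target")


def project_to_words(tokens: List[str], spans: List[Span]) -> List[str]:
    """Give every token the label of the phrase span covering it, else 'none'."""
    labels = ["none"] * len(tokens)
    for start, end, label in spans:
        for i in range(start, end):
            labels[i] = label
    return labels


def task_labels(word_labels: List[str], task: str) -> List[bool]:
    """Binary targets for the three tasks: 'source', 'target', 'metaphoric'."""
    if task == "metaphoric":
        # Either source or target counts as metaphoric.
        return [label != "none" for label in word_labels]
    return [label == task for label in word_labels]


tokens = ["Her", "speech", "attacked", "his", "viewpoint"]
spans = [(2, 3, "source"), (4, 5, "target")]  # illustrative annotation
word_labels = project_to_words(tokens, spans)
```

With these inputs, `task_labels(word_labels, "metaphoric")` marks `attacked` and `viewpoint` as positive and the remaining tokens as negative.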

We developed a set of different representations designed to capture construction-like structures, and employ them for source/target classification. This approach follows the intuition of (Hovy et al., 2013): “metaphorical use differs from literal use in certain syntactic patterns”. We implemented this theory by developing various representations of constructional syntax and pairing them with lexical semantic features.

For our lexical semantics component, we experimented with the word embeddings from word2vec (Mikolov et al., 2013), using the pre-trained Google News data, as well as the Glove embeddings (Pennington et al., 2014). We found in validation that the Google News vectors yielded slightly better performance, and so those were used in further experiments.

5.2 Syntactic Representations

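| Construction | % | Verb SRC | Verb TRG | Verb -MET | Subject SRC | Subject TRG | Subject -MET | Direct Object SRC | Direct Object TRG | Direct Object -MET | Indirect Object SRC | Indirect Object TRG | Indirect Object -MET |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intransitive | 66.5 | 454 | 24 | 6329 | 128 | 204 | 2385 | - | - | - | - | - | - |
| Transitive | 20.0 | 391 | 8 | 1648 | 53 | 186 | 1808 | 183 | 265 | 1599 | - | - | - |
| Ditransitive | .4 | 3 | 0 | 40 | 0 | 3 | 40 | 3 | 1 | 39 | 1 | 2 | 40 |
| Equation | 13.0 | 0 | 6 | 1323 | 57 | 161 | 909 | 288 | 29 | 1012 | - | - | - |
| Simile | .1 | 0 | 0 | 13 | 1 | 2 | 10 | 8 | 5 | 0 | - | - | - |

Table 1: % Metaphor by Construction (LCC). For each predicate, the count of source (SRC), target (TRG), and non-metaphoric (-MET) instances are counted, as well as those for all of each construction’s defining arguments.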

from relevant contexts.

5.2.1 Predicate Argument Construction

For a basic integration of syntax, we used the above corpus analysis technique to identify which predicate-argument construction the verb token belongs to. This results in a one-hot vector representing either an intransitive, transitive, ditransitive, equative, or simile construction. This provides basic, purely syntactic knowledge of how many arguments this particular instance of a verb currently has. For nouns, we extend this to include which slot in the construction the noun is filling (subject, direct object, indirect object) in addition to the type of predicate-argument construction.
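One way to picture this feature is the sketch below. The ordering of the construction and slot inventories, the label strings, and the function names are assumptions for illustration only; the text does not specify how the vectors were laid out.

```python
from typing import List, Optional

# Assumed orderings; any fixed order would serve equally well.
CONSTRUCTIONS = ["intransitive", "transitive", "ditransitive", "equation", "simile"]
SLOTS = ["subject", "direct_object", "indirect_object"]


def one_hot(value: str, vocabulary: List[str]) -> List[int]:
    """Return a vector with a 1 at the position of `value`, 0 elsewhere."""
    return [1 if value == item else 0 for item in vocabulary]


def construction_features(construction: str, slot: Optional[str] = None) -> List[int]:
    """Verbs: construction one-hot. Nouns: construction one-hot plus slot one-hot."""
    features = one_hot(construction, CONSTRUCTIONS)
    if slot is not None:
        features += one_hot(slot, SLOTS)
    return features
```

A verb token would call `construction_features("transitive")`, while a noun filling the indirect object of a ditransitive would call `construction_features("ditransitive", "indirect_object")`.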

5.2.2 Head and Dependent Features

Including representations of the head word and dependent words of the word to be classified is a straightforward way to include basic syntactic information. For verbs, this mainly involves the dependents, although many verbs also have head words. We include a concatenation of the average embedding over the word’s dependents and the embedding of the word’s head.
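The concatenation can be sketched with plain Python lists standing in for embedding vectors. Falling back to a zero vector when a word has no dependents or no head is an assumption of this sketch, as are the function names; the text does not say how missing heads or dependents were handled.

```python
from typing import List, Optional, Sequence

Vector = List[float]


def average(vectors: Sequence[Vector], dim: int) -> Vector:
    """Element-wise mean of the vectors; a zero vector if there are none (assumed)."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def head_dependent_features(dependent_vecs: Sequence[Vector],
                            head_vec: Optional[Vector],
                            dim: int) -> Vector:
    """Concatenate the mean dependent embedding with the head embedding."""
    dep_avg = average(dependent_vecs, dim)
    head = list(head_vec) if head_vec is not None else [0.0] * dim
    return dep_avg + head  # list concatenation, length 2 * dim
```

The result has twice the embedding dimensionality and can be appended to the target word's own embedding.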

5.2.3 Dependency Relations

A more general and perhaps more powerful way of converting dependency relations into syntactically relevant features is to include the specific dependency relations for each dependent of the target. For verbs, these include things like subjects, direct objects, adverbial modifiers, nominal modifiers, passive subjects, and more. Capturing the fine-grained dependencies for each verb is analogous to determining the exact syntactic construction it is being realized in. Combining this feature with the embeddings of dependents and heads is a promising avenue for linking syntax and semantics.
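A rough sketch of such a feature is a multi-hot vector over a fixed relation inventory. The inventory below, its label strings, and the choice of a binary presence indicator rather than counts are all assumptions for illustration; the actual relation set and encoding are not given in the text.

```python
from typing import Iterable, List

# Hypothetical relation inventory; a real parser defines many more labels.
RELATIONS = ["nsubj", "dobj", "iobj", "advmod", "nmod", "nsubjpass"]


def relation_features(dependent_relations: Iterable[str],
                      inventory: List[str] = RELATIONS) -> List[int]:
    """Mark which relations from the inventory occur among the word's dependents."""
    present = set(dependent_relations)
    # Relations outside the inventory are ignored in this sketch.
    return [1 if rel in present else 0 for rel in inventory]
```

Unlike the five-way construction vector, this representation keeps modifiers and passive subjects visible to the classifier.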

5.2.4 VerbNet Class

VerbNet is a lexical semantic resource that groups verbs into classes based on their syntactic behavior (Kipper-Schuler, 2005). It categorizes over 6,000 verbs into classes, each of which contains syntactic frames that the verbs in the class can appear in. It also contains distinct senses, allowing it to distinguish between different verb uses in context. Previous approaches have employed VerbNet as a lexical resource (Beigman Klebanov et al., 2016), but aggregated the senses of each verb, removing the syntactic distinctions that VerbNet makes for different word senses.

We ran word-sense disambiguation to determine the VerbNet class for each verb token (Palmer et al., 2017). We included one-hot vectors representing verb senses for each token. Combining this with knowledge of the particular constructions and the lexical semantics provided by embeddings for each token gives syntactically motivated information about the semantics of the utterance. For noun identification, we include the VerbNet class of the head of that noun.

5.3 Experiments

set for each classification task, judged by the improved performance of each feature over the baseline. The classification was split into three tasks: identifying source items, identifying target items, and identifying metaphoric (either source or target) from non-metaphoric. The results of these experiments are in Table 2.

From these results we can see that classifying source-domain words in the LCC data is harder than classifying target-domain words. This may be because of the broad range of domains, as the corpus contains 114 possible source domains. Target items are much easier to classify, likely because the dataset contains only a limited number (32) of target domains. Embeddings are effective at representing semantics, and they can accurately determine the domain of lexical items, allowing for easy classification of target items.

Our syntactic features show mixed results. Adding sentential context is consistently effective, showing that naive contextual approaches are helpful. Adding dependency embeddings is also consistently effective, supporting our hypothesis that knowledge of syntactic properties can be helpful in metaphor classification. Other syntactic features are inconsistent, especially in predicting the metaphoricity of verbs. Selecting only the feature sets that showed improvement over the baseline yields the best results for most categories.
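The selection of feature sets that improve over the baseline can be illustrated as follows. The scores are the verb source-classification values reported in Table 2; the criterion of strict improvement and the helper name `select_improving` are assumptions of this sketch, and it is not known which data split the original selection was made on.

```python
from typing import Dict, List


def select_improving(baseline: float, scores: Dict[str, float]) -> List[str]:
    """Keep the feature sets whose score is strictly above the baseline."""
    return [name for name, score in scores.items() if score > baseline]


# Verb source-classification scores from Table 2.
VERB_SOURCE_BASELINE = 0.467
verb_source_scores = {
    "Context": 0.494,
    "Dependent Embeddings": 0.482,
    "Dependency Relations": 0.488,
    "Argument Construction": 0.459,
    "VerbNet Class": 0.467,
}

selected = select_improving(VERB_SOURCE_BASELINE, verb_source_scores)
```

Under this criterion the argument-construction feature (below the baseline) and the VerbNet class feature (equal to it) would be left out for this task, while a different task could keep a different subset.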

6 VUAMC Analysis

The LCC allows for an in-depth examination of source and target domains, but is relatively small compared to the VUAMC. We can use the VUAMC data to inspect the distribution of word metaphoricity with regard to argument structure constructions. While Sullivan’s work focuses on source and target domain elements and not whether or not words are used metaphorically, we can examine the binary classifications in the VUAMC to provide insight into the distribution of metaphoric verbs and the predicate-argument constructions they participate in. Counts of argument structure verbs and arguments and their metaphoricity are shown in Table 3.

From the data in Table 3, we can see clear distinctions between different constructions and the metaphoricity of their arguments. Verbs in intransitive constructions are much less likely to be metaphoric than those used in transitives, and both less so than those in ditransitive constructions.

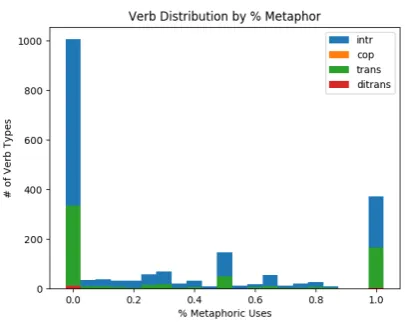

Figure 2: Verb types by percent of metaphoric use in each construction. Each bar represents the number of verb types that match the X axis for percentage of metaphoric usages.

The VUAMC chooses not to mark copular verbs as metaphoric, and only one instance was found of equative constructions having a metaphoric verb.

We might expect that different constructions would also impact the distribution of the predicates’ arguments. However, from the data we see that verb arguments are fairly consistent. Indirect objects in ditransitive constructions were never observed to be metaphoric, but direct objects are between 11% and 16% metaphoric throughout. Subjects vary from 2.8% in ditransitives to 11.7% in equative constructions. One distinctive feature is that subjects are much less likely than objects to be metaphoric.

The overall distribution of metaphoric uses by verb construction shows that the more arguments that are present in the construction, the more likely the verb is being used metaphorically. For further evidence, we can examine the distribution of metaphoric usages on a verb-specific basis.
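As a quick check of this trend, the percentage of metaphoric verb predicates can be recomputed from the +M and -M verb counts in Table 3. The dictionary layout and function name are choices made for this sketch.

```python
# (metaphoric, non-metaphoric) verb predicate counts from Table 3.
VERB_COUNTS = {
    "intransitive": (5118, 24301),
    "transitive": (1517, 3612),
    "ditransitive": (24, 35),
}


def percent_metaphoric(metaphoric: int, literal: int) -> float:
    """Share of metaphoric instances among all instances, in percent."""
    return 100.0 * metaphoric / (metaphoric + literal)


rates = {
    name: round(percent_metaphoric(m, n), 1)
    for name, (m, n) in VERB_COUNTS.items()
}
# rates: intransitive 17.4, transitive 29.6, ditransitive 40.7
```

The rate rises with each additional argument, matching the %Met column for verb predicates, although the ditransitive figure rests on only 59 instances.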

| Features | Verbs Src | Verbs Trg | Verbs Met | Nouns Src | Nouns Trg | Nouns Met |
|---|---|---|---|---|---|---|
| Baseline (Embedding, 1-word context) | .467 | .316 | .483 | .440 | .701 | .597 |
| +Context | .494 | .545 | .436 | .487 | .705 | .593 |
| +Dependent Embeddings | .482 | .421 | .444 | .570 | .717 | .631 |
| +Dependency Relations | .488 | .384 | .482 | .486 | .718 | .601 |
| +Argument Construction | .459 | .461 | .457 | .456 | .661 | .598 |
| +VerbNet Class | .467 | .555 | .473 | .433 | .684 | .589 |
| Best Combination | .551 | .600 | .505 | .519 | .705 | .630 |

Table 2: Classification of Source and Target elements in the LCC Corpus. Metaphor (MET) is the classification of a word as either Source or Target against non-metaphoric words.

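| Construction | % | Verb Predicate +M | Verb Predicate -M | Verb Predicate %Met | Subject +M | Subject -M | Subject %Met | Direct Object +M | Direct Object -M | Direct Object %Met | Indirect Object +M | Indirect Object -M | Indirect Object %Met |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Intransitive | 75.1 | 5118 | 24301 | 17.4 | 284 | 4627 | 5.8 | - | - | - | - | - | - |
| Transitive | 13.1 | 1517 | 3612 | 29.6 | 119 | 3125 | 3.7 | 654 | 4475 | 12.8 | - | - | - |
| Ditransitive | .2 | 24 | 35 | 40.7 | 1 | 35 | 2.8 | 9 | 50 | 15.2 | 59 | 0 | 0 |
| Equation | 11.6 | 1 | 4548 | .02 | 449 | 3376 | 11.7 | 468 | 3736 | 11.1 | - | - | - |
| Simile | .1 | 0 | 35 | 0.0 | 2 | 28 | 6.7 | 7 | 26 | 21.2 | - | - | - |

Table 3: % Metaphor by Construction (VUAMC). For each predicate, the count of metaphoric (+M) and non-metaphoric (-M) instances are counted, as well as those for all of each construction’s defining arguments.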

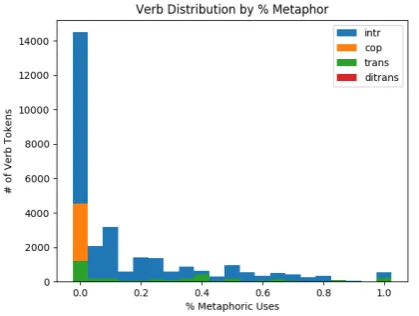

Figure 3: Verb tokens by percent of metaphoric use in each construction. Each bar represents the number of verb tokens that belong to verb types that match the X axis for percentage of metaphoric usages.

rare, but copula tokens are very common and almost always literal.

We extended this analysis by examining the distribution of the verb types that can appear intransitively, transitively, and ditransitively. Our hypothesis in studying these verbs is that the type of construction the verb appears in is predictive of that verb’s metaphoric use, independent of the verb’s overall behavior. Eleven verbs appeared in all three constructions, and the analysis of their metaphoricity is presented in Figure 4.

The distribution in the VUAMC corpus indicates that the type of argument structure construction does not significantly change the distribution of metaphoricity. The verbs generally have the same percentage of metaphoric usages regardless of which construction they appear in. Only ‘give’ appears in more than 2 instances of the ditransitive, and its distribution mirrors that of its use in other constructions.

Two components from our corpus analysis stand out as relevant for automatic metaphor processing. First, in broad scope over all verb tokens, predicates’ metaphor distributions are dependent on the kind of construction they occur in. Second, the verb itself is critical, as each verb tends to follow the same pattern of metaphoricity throughout its constructions. This supports our belief that identification of metaphor requires modeling of the interaction of syntactic and semantic information.

6.1 Metaphor Identification (VUAMC)

Figure 4: Counts of metaphoric uses by verb and construction for those verbs that occur in intransitive, transitive, and ditransitive constructions

used a split of 76/12/12, using a linear SVM. For metaphoric identification in the VUAMC, all of the syntactic features improved classification over the baseline for verbs. For nouns, the dependency embeddings and VerbNet class of the noun’s head were effective. For both, combining all of the syntactic representations yields the best performance. While this classification based on syntactic features is slightly lower than some recent experiments (Beigman Klebanov et al., 2016), it shows improvement over using purely lexical semantics, and we believe the incorporation of better syntactic representations can be used to improve metaphor identification systems.
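A 76/12/12 partition can be sketched as below. Reading the three parts as training, validation, and test portions, shuffling with a fixed seed, and the function name are all assumptions of this sketch; the text states only the proportions. The linear SVM itself is omitted because it would require a third-party library.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_76_12_12(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle deterministically, then cut into 76% / 12% / remainder."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    # Integer arithmetic avoids floating-point rounding at the boundaries.
    n_train = len(shuffled) * 76 // 100
    n_dev = len(shuffled) * 12 // 100
    train = shuffled[:n_train]
    dev = shuffled[n_train:n_train + n_dev]
    test = shuffled[n_train + n_dev:]  # whatever is left, about 12%
    return train, dev, test


train, dev, test = split_76_12_12(range(100))
```

Every item lands in exactly one portion, so the three lists together always reconstruct the input.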

7 Conclusions

The type of syntactic construction a verb is present in provides unique evidence of how it is being used metaphorically. It is important to effectively inte-

| Model | Verbs | Nouns |
|---|---|---|
| Baseline (Embedding, 1-Word context) | .339 | .303 |
| +Context | .488 | .224 |
| +Dependency Embeddings | .425 | .349 |
| +Dependency Relations | .466 | .393 |
| +Argument Construction | .471 | .289 |
| +VerbNet Class | .418 | .330 |
| +All | .531 | .505 |

Table 4: Results of adding different syntactic models for VUAMC verb classification.

art performance, we believe that improving representations of syntactic constructions can provide some benefit to metaphor processing.

To that end, our future goals include exploring better representations of the interaction between syntax and semantics. Models like syntactic tree kernels, compositional distributional semantic models, and other syntactically driven methods are likely to improve classification if they can properly combine syntactic and semantic representations. Additionally, as different constructions are likely to yield different types of metaphoricity, we aim to employ ensemble methods that incorporate construction-based knowledge to select the most effective classifier, and to extend our approach to identifying source and target domains in addition to lexical triggers.

Acknowledgements

We gratefully acknowledge the support of the Defense Threat Reduction Agency, HDTRA1-16-1-0002/Project #1553695, eTASC - Empirical Evidence for a Theoretical Approach to Semantic Components and a grant from the Defense Advanced Research Projects Agency 15-18-CwC-FP-032 Communicating with Computers, a subcontract from UIUC. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of any government agency.

References

Beata Beigman Klebanov, Chee Wee Leong, E. Dario Gutierrez, Ekaterina Shutova, and Michael Flor. 2016. Semantic classifications for detection of verb metaphors. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 101–106, Berlin, Germany. Association for Computational Linguistics.

Luana Bulat, Stephen Clark, and Ekaterina Shutova. 2017. Modelling metaphor with attribute-based semantics. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers, pages 523–528, Valencia, Spain. Association for Computational Linguistics.

Ellen Dodge, Jisup Hong, and Elise Stickles. 2015. Metanet: Deep semantic automatic metaphor analysis. In Proceedings of the Third Workshop on Metaphor in NLP, pages 40–49, Denver, Colorado. Association for Computational Linguistics.

Andrew Gargett and John Barnden. 2015. Modeling the interaction between sensory and affective meanings for detecting metaphor. In Third Workshop on Metaphor in NLP, pages 21–30, Denver, CO.

Jonathan Gordon, Jerry Hobbs, Jonathan May, and Fabrizio Morbini. 2015. High-precision abductive mapping of multilingual metaphors. In Proceedings of the Third Workshop on Metaphor in NLP, pages 50–55, Denver, Colorado. Association for Computational Linguistics.

E. Dario Gutierrez, Ekaterina Shutova, Tyler Marghetis, and Benjamin Bergen. 2016. Literal and metaphorical senses in compositional distributional semantic models. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 183–193, Berlin, Germany. Association for Computational Linguistics.

Hessel Haagsma and Johannes Bjerva. 2016. Detecting novel metaphor using selectional preference information. In Proceedings of the Fourth Workshop on Metaphor in NLP, pages 10–17, San Diego, California. Association for Computational Linguistics.

Ilana Heintz, Ryan Gabbard, Mahesh Srivastava, Dave Barner, Donald Black, Majorie Friedman, and Ralph Weischedel. 2013. Automatic extraction of linguistic metaphors with lda topic modeling. In Proceedings of the First Workshop on Metaphor in NLP, pages 58–66, Atlanta, Georgia. Association for Computational Linguistics.

Dirk Hovy, Shashank Shrivastava, Sujay Kumar Jauhar, Mrinmaya Sachan, Kartik Goyal, Huying Li, Whitney Sanders, and Eduard Hovy. 2013. Identifying metaphorical word use with tree kernels. In Proceedings of the First Workshop on Metaphor in NLP, pages 52–57, Atlanta, Georgia. Association for Computational Linguistics.

Karen Kipper-Schuler. 2005. VerbNet: A broad-coverage, comprehensive verb lexicon. Ph.D. thesis, University of Pennsylvania.

George Lakoff and Mark Johnson. 1980. Metaphors We Live By. University of Chicago Press, Chicago and London.

Christopher D. Manning, Mihai Surdeanu, John Bauer, Jenny Finkel, Steven J. Bethard, and David McClosky. 2014. The Stanford CoreNLP natural language processing toolkit. In Association for Computational Linguistics (ACL) System Demonstrations, pages 55–60.

James H. Martin. 1990. A Computational Model of Metaphor Interpretation. Academic Press, Inc.

Michael Mohler, Mary Brunson, Bryan Rink, and Marc Tomlinson. 2016. Introducing the lcc metaphor datasets. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC 2016), Paris, France. European Language Resources Association (ELRA).

Ekaterina Ovchinnikova, Ross Israel, Suzanne Wertheim, Vladimir Zaytsev, Niloofar Montazeri, and Jerry Hobbs. 2014. Abductive inference for interpretation of metaphors. In Proceedings of the Second Workshop on Metaphor in NLP, pages 33–41, Baltimore, MD. Association for Computational Linguistics.

Martha Palmer, James Gung, Claire Bonial, Jinho Choi, Orin Hargraves, Derek Palmer, and Kevin Stowe. 2017. The pitfalls of shortcuts: Tales from the word sense tagging trenches. Essays in Lexical Semantics and Computational Lexicography - In Honor of Adam Kilgariff.

Jeffrey Pennington, Richard Socher, and Christopher D. Manning. 2014. Glove: Global vectors for word representation. In Empirical Methods in Natural Language Processing (EMNLP), pages 1532–1543.

Pragglejaz Group. 2007. MIP: A method for identifying metaphorically used words in discourse. Metaphor and Symbol, 22(1):1–39.

Sunny Rai, Shampa Chakraverty, and Devendra K. Tayal. 2016. Supervised metaphor detection using conditional random fields. In Proceedings of the Fourth Workshop on Metaphor in NLP, pages 18–27, San Diego, California. Association for Computational Linguistics.

Marek Rei, Luana Bulat, Douwe Kiela, and Ekaterina Shutova. 2017. Grasping the finer point: A supervised similarity network for metaphor detection. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 1537–1546, Copenhagen, Denmark. Association for Computational Linguistics.

Ekaterina Shutova. 2010. Automatic metaphor interpretation as a paraphrasing task. In Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, pages 1029–1037, Los Angeles, California. Association for Computational Linguistics.

Ekaterina Shutova. 2013. Metaphor identification as interpretation. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 1: Proceedings of the Main Conference and the Shared Task: Semantic Textual Similarity, pages 276–285, Atlanta, Georgia, USA. Association for Computational Linguistics.

Karen Sullivan. 2013. Frames and Constructions in Metaphoric Language. John Benjamins.