Validation of OMS II

A validation of the margin model OMS II for equity and index products used by Nasdaq OMX

Author Last update Version Page

Bengt Jansson, zeb/ Risk & Compliance Partner AB 2014-12-09 1.3 2 (38)

Validation of OMS II, 2014

Revision history:

| Date | Version | Description | Author |
|---|---|---|---|
| 2014-11-18 | 1.0 | Initial draft | Bengt Jansson |
| 2014-12-01 | 1.1 | Draft | Bengt Jansson |
| 2014-12-08 | 1.2 | Final version | Bengt Jansson |


Table of contents

1. Background

1.1 General

1.2 Legal environment

2. Input to the validation

2.1 Documentation at NOMX Clearing

2.2 Numerical analysis of OMS II

2.3 Discussions

2.4 Special issues

3. Theoretical framework of the model

3.1 Background on VaR and margin models

3.2 Basic OMS II calculations

3.3 Purpose & Limitations

3.4 Statistical significance

3.5 Risk Factors

3.6 Academic and industry references

3.7 Key assumptions

3.8 Historical references

4. Parameters

5. Numerical data

5.1 Introduction

5.2 Back testing

5.3 Stress testing

5.4 Sensitivity analysis

6. Conclusions

6.1 Changes from previous validation

6.2 Input to the validation

6.3 Theoretical framework of the model

6.4 Parameters

6.5 Monitoring process

6.6 Recommendations

7. Information

7.1 Tables

7.2 Figures

7.3 References

8. Appendices


Validation of OMS II

1. Background

1.1 General

NASDAQ OMX Clearing AB (“NOMX Clearing”) provides clearing and Central Counterparty (“CCP”) services. In order to manage these services prudently, NOMX Clearing uses a large number of models. This report is the validation of the margin model OMS II.

The purpose of model validation is to ensure the theoretical and empirical soundness of the models used by the CCP. The validation report should also provide transparency on these models for the benefit of:

Board of Directors, NASDAQ OMX Clearing AB

Competent authorities

Internal Audit and Audit Committee

Other stakeholders

1.2 Legal environment

On 19 March 2014, NOMX Clearing was authorised as a central counterparty (CCP) to offer services and activities in the Union in accordance with Regulation (EU) No 648/2012 of the European Parliament and of the Council of 4 July 2012 on OTC derivatives, central counterparties and trade repositories (EMIR)1.

The legal framework governing NOMX Clearing is therefore the EMIR framework: Regulation (EU) No 648/2012, the supporting delegated Regulations 148/2013, 149/2013, 150/2013, 151/2013, 152/2013, 153/2013, 285/2014, 667/2014, 876/2013 and 1003/2013, and the implementing Regulations 484/2014, 1247/2012 and 1249/2012.

The Regulation of particular interest for this validation is Delegated Regulation No 153/2013 “supplementing Regulation (EU) No 648/2012 of the European Parliament and of the Council with regard to regulatory technical standards on requirements for central counterparties”.

2. Input to the validation

2.1 Documentation at NOMX Clearing

2.1.1 Previous validation of OMS II

NOMX Clearing’s application for authorisation as a CCP to offer services and activities in the Union in accordance with Regulation (EU) No 648/2012 included a validation of the margin model OMS II. That validation is an important building block for the present one. The full document name is: “Validation of OMS II ver 1.1, 2013”

1


2.1.2 Margin methodology guide for OMS II

This guide follows the Nasdaq OMX standard for documentation made available to the member community. It is intended as a general guide, not necessarily as a technical document describing the actual IT implementation of the model. The full document name is: “OMS II Margin Guide v 1.0”

2.1.3 OMS II Model Instructions

This internal document describes the technical aspects of the model in more detail; its audience is internal risk management and IT. The document name is: “OMS II model instructions”

2.1.4 Policies

Several policies serve as input to this validation. The most prominent are:

Policy for the Validation of Models: The starting point for the construction, reporting set-up and content of the validation is the model validation policy approved by the Board of Directors of NOMX Clearing. The full document name is: “Model Validation Policy NOMX (140801)”

Policy for setting risk parameters: NOMX Clearing has developed policies that regulate how risk parameters should be estimated. The full document name is: “Risk Parameter Policy NOMX (140319)”

Policy for back testing of models: NOMX Clearing has developed policies that regulate how back testing should be conducted from a theoretical, and very general, point of view. More specific guidelines can be found for specific models. The full document name is: “Back testing Policy NOMX (130513)”

Policy for stress testing of models: NOMX Clearing has developed policies that regulate how stress testing should be conducted from a theoretical, and very general, point of view. More specific guidelines can be found for specific models. The full document name is: “Stress Test Policy NOMX (140228)”

Policy for sensitivity testing of models: Nasdaq OMX has developed policies that regulate how sensitivity testing should be conducted from a theoretical, and very general, point of view. More specific guidelines can be found for specific models. The full document name is: “Sensitivity testing and analysis Policy (130909)”

2.2 Numerical analysis of OMS II

NOMX Clearing has ongoing numerical procedures in place that deliver numerical output from each margin model it uses. The numerical data can be roughly divided into three separate parts:


2.3 Discussions

In any validation, much of the information received must be discussed thoroughly with the personnel at the CCP. The following persons have been the prime sources of information for this model validation:

Karl Klasén, Risk Management department of NOMX Clearing

Torbjörn Bäckmark, Risk Management department of NOMX Clearing

2.4 Special issues

OMS II was validated in 2013 (Validation OMS II, NOMX, 2013) in connection with the application for authorisation as a CCP to offer services and activities in the Union in accordance with Regulation (EU) No 648/2012. Since a margin model must be validated on a yearly basis, each new validation is updated with new issues such as:

New functionality added to the margin methodology

New types of instruments or markets added to the group of instruments and markets in which the margin model is used

A changed financial environment, such as different volatility in the market

A new distribution of counterparties, such as increased risk towards certain firms

New legislation that changes the rules and thereby contradicts assumptions made in the model

To facilitate reading, a dedicated section of the validation addresses the differences from the previous validation.

3. Theoretical framework of the model

3.1 Background on VaR and margin models

3.1.1 Basic VaR methodologies

For a portfolio of investments it is often important to calculate a risk measure that captures the risk in a single number. For the past decade the most widely used family of such measures has been Value at Risk (“VaR”). VaR makes a statement of the following form:

“With a certain probability X there will be no losses for this portfolio exceeding Y in the next N days”

Probability: A VaR calculation is made with a confidence level, X. In many cases this level is quite high (99%) because it is the losses in the distribution tail that are of interest.

N days: A VaR calculation also needs a time horizon. A longer period will give a higher value compared to a shorter one.

Y: This is the VaR measure.
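To make X, N and Y concrete, the following is a minimal Python sketch of one common VaR method, historical simulation, assuming a sample of N-day profit/loss outcomes for the portfolio is available. The function names and sample data are illustrative only and are not part of OMS II. The square-root-of-time scaling shown for the horizon N is a standard simplification that assumes independent, identically distributed daily P/L with zero mean.

```python
import math


def historical_var(pnl, confidence):
    """Historical-simulation VaR: the loss Y not exceeded with probability X.

    pnl        -- observed N-day profit/loss outcomes (losses are negative)
    confidence -- the confidence level X, e.g. 0.99
    Returns VaR as a positive loss amount (empirical quantile of the losses).
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    losses = sorted(-x for x in pnl)  # losses as positive numbers, ascending
    # Smallest loss that at least a fraction X of the observations do not exceed.
    k = math.ceil(confidence * len(losses)) - 1
    return losses[k]


def scale_var_to_horizon(var_1d, n_days):
    """Square-root-of-time scaling from a 1-day to an N-day VaR.

    Only valid under the simplifying assumption of i.i.d. daily P/L with zero mean.
    """
    return var_1d * math.sqrt(n_days)


# Usage: 100 hypothetical daily outcomes with losses of 1, 2, ..., 100.
pnl = [-float(i) for i in range(1, 101)]
print(historical_var(pnl, 0.99))      # 99.0
print(scale_var_to_horizon(10.0, 4))  # 20.0
```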


Figure 1: Basic VaR principle

There are many mathematical methods for calculating VaR, each with its own advantages and weaknesses. The main challenges are to estimate:

The volatility of the instruments in the portfolio

The correlation between the instruments in the portfolio

Once these challenges have been solved, or rather once the approximations to use have been decided, the calculations are quite straightforward from a mathematical point of view.
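To illustrate why volatility and correlation are the two key estimates, here is a sketch of the variance-covariance (parametric) method, one of the many VaR methods referred to above. It assumes normally distributed returns with zero mean, linear positions and i.i.d. days; the function name and the numbers are hypothetical.

```python
import math
from statistics import NormalDist


def parametric_var(exposures, vols, corr, confidence, horizon_days=1):
    """Variance-covariance (parametric) VaR for linear positions.

    exposures -- position values in currency units
    vols      -- daily return volatilities (e.g. 0.01 for 1 %)
    corr      -- correlation matrix as a list of lists
    """
    n = len(exposures)
    variance = 0.0
    for i in range(n):
        for j in range(n):
            variance += exposures[i] * vols[i] * exposures[j] * vols[j] * corr[i][j]
    z = NormalDist().inv_cdf(confidence)  # about 2.326 for 99 %
    # max() guards against tiny negative values from floating-point rounding.
    return z * math.sqrt(max(variance, 0.0)) * math.sqrt(horizon_days)


# Two positions of 100 with 1 % daily volatility each: only the correlation changes.
for rho in (1.0, 0.0, -1.0):
    corr = [[1.0, rho], [rho, 1.0]]
    print(rho, round(parametric_var([100.0, 100.0], [0.01, 0.01], corr, 0.99), 3))
```

With everything else fixed, moving the correlation from +1 to -1 takes the VaR from its maximum down to zero, which shows how strongly the result depends on the correlation estimate.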

The merit of a VaR model lies in its predictive accuracy. A model that overestimates risk is therefore as bad as one that underestimates it. This implies that different models suit different types of underlying markets (gold, currency, etc.), instruments (derivatives, linear products, etc.) and market states (low-volatility, high-volatility or crashing markets, etc.).
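One standard way to check predictive accuracy in back testing is Kupiec's proportion-of-failures (POF) test, sketched below as an example of a well-known technique that is not taken from this document. It assumes independent exceptions; the function and constant names are illustrative.

```python
import math


def kupiec_pof(n_obs, n_exceptions, p):
    """Kupiec proportion-of-failures likelihood-ratio statistic.

    n_obs        -- number of back-testing days T
    n_exceptions -- days on which the loss exceeded the VaR, x
    p            -- expected exception rate, 1 - confidence level
    Under a correctly calibrated model the statistic is approximately
    chi-square distributed with one degree of freedom.
    """
    if not 0 <= n_exceptions <= n_obs:
        raise ValueError("need 0 <= n_exceptions <= n_obs")

    def log_likelihood(rate):
        # x*ln(rate) + (T - x)*ln(1 - rate), with 0*ln(0) taken as 0
        ll = 0.0
        if n_exceptions > 0:
            ll += n_exceptions * math.log(rate)
        if n_obs - n_exceptions > 0:
            ll += (n_obs - n_exceptions) * math.log(1.0 - rate)
        return ll

    return -2.0 * (log_likelihood(p) - log_likelihood(n_exceptions / n_obs))


CHI2_1DOF_95 = 3.841  # 95 % critical value of the chi-square distribution, 1 dof

# 250 days of a 99 % VaR: about 2.5 exceptions are expected.
for x in (0, 3, 10):
    lr = kupiec_pof(250, x, 0.01)
    print(x, round(lr, 2), "reject" if lr > CHI2_1DOF_95 else "accept")
```

Zero exceptions and ten exceptions are both rejected at the 95 % level while three are accepted, mirroring the point that overestimating risk is as much a failure as underestimating it.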

When choosing a VaR model, it is prudent to spend some time considering which market and market state it should provide information on. A model can of course be poorly implemented from a mathematical or technical point of view, but the vast majority of problems come from using a model in an environment it does not fit.

3.1.2 Basic margin methodologies

Early margin models predate VaR models by several decades, which is reflected in many of their architectural decisions. The preconditions for these models included:

Limited computing power; in some cases the calculations could only be performed on the “supercomputers” of the time.

Limited research on, and familiarity with, portfolio theory. Derivatives might not have been entirely new, but the scale and organisation of their trading and clearing certainly were.


These preconditions led to some common features of all traditional, commercially available margin models2:

Mainly designed for linear products (futures)

Rudimentary treatment of time dependency for contracts with the same underlying but different expiries.

Low, or no, correlation between instruments based on different but correlated underlyings.

Calculations that depend heavily on accurate market prices for exactly those instruments found in the cleared portfolio, with no accurate way to use “price factors”. In practice these models depend on settlement prices from a corresponding marketplace.

It might seem that margin models are very crude, full of drawbacks and of limited value. It is true that the underlying functionality of a traditional margin model is somewhat blunt, but it gets the job done. The models are resilient to changes in correlation structure and adapt easily to high-volatility market states. They are also easy to explain to external parties, and their results are easy to predict.

3.2 Basic OMS II calculations

3.2.1 Instruments

The OMS II model was originally designed for options; forwards were introduced later. Internationally, the model is used to calculate margins for several types of instruments, but NOMX Clearing uses it for equity and index products, including futures, forwards and options.

3.2.2 The basic building blocks

3.2.2.1 Vector files

The OMS II model uses a scenario approach to calculate margins3: it calculates the worst exposure that a portfolio of instruments might reasonably produce over a specified liquidation period4 across a given set of scenarios.

Depending on the parameters used, the OMS II margin system can also calculate results other than margins, but for this validation the system can be thought of as producing a margin number.

The basic building block in OMS II is the so-called “vector file”: a two-dimensional matrix in which one dimension represents changes in the price of the underlying and the other represents changes in implied volatility.

All calculations in OMS II start with building a vector file for each position in the clearing house5.

2

Commercial (traditional) margin models include SPAN, OMS II and, to some extent, TIMS. Of these, SPAN (“Standard Portfolio Analysis of Risk”), developed and implemented by the Chicago Mercantile Exchange (CME) in 1988, is the best known.

3

See Appendix I, Definitions, for the definition of margins.

4


In documentation and data files, the implied volatility dimension is often simply called “volatility”. This can cause some confusion regarding parameters, but unqualified “volatility” always refers to the implied volatility used in option pricing.

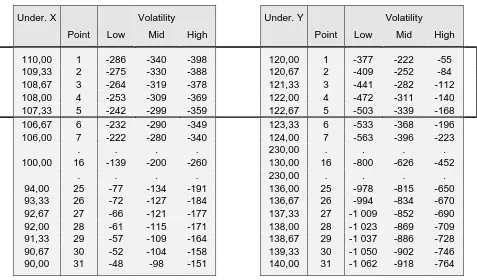

The table below shows an example of an empty vector file.

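| Price | Point | Volatility low | Volatility mid | Volatility high |
|---|---|---|---|---|
| High price | 1 | | | |
| | 2 | | | |
| | 3 | | | |
| | 4 | | | |
| | 5 | | | |
| | … | | | |
| | 27 | | | |
| | 28 | | | |
| | 29 | | | |
| | 30 | | | |
| Low price | 31 | | | |

Table 1: Basic vector file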

For each position in a portfolio, the following steps are performed to fill the matrix:

1. For the underlying instrument6 of the position (equity or index futures and forwards), the margin settlement price is obtained from information vendors or, when the underlying instrument is traded on Nasdaq OMX, directly from the exchange.

2. For the instrument of the position (equity or index options or futures), the implied volatility is calculated (implied volatility calculation is described later in the document).

3. Parameters for the underlying instrument are taken from the CDB (“central database”). These define how much the price of the underlying instrument can move during the liquidation period.

4. Parameters for the instrument of the position are taken from the CDB. These define how much the implied volatility can move during the liquidation period.

5. With this information, the vector file is created.

Table 2 shows the vector file for a sold call option with 6 months to expiry, strike 100 and a risk-free interest rate of 2%. Note that the implied volatility dimension is divided into three points. In reality the vector file also contains the bid/ask spread in volatility; to facilitate reading, only the side of the spread that applies to the position is shown.

5

More precisely, for each position for which the clearing house uses the OMS II methodology to calculate margin.

6


In a very similar way to the widespread margin model SPAN, the OMS II algorithm consists of manipulations of vector files. The table below shows an example of such a vector file.

| Price | Point | Volatility low | Volatility mid | Volatility high |
|---|---|---|---|---|
| 110,00 | 1 | -13,17 | -15,65 | -18,32 |
| 109,33 | 2 | -12,65 | -15,17 | -17,86 |
| 108,67 | 3 | -12,14 | -14,69 | -17,41 |
| 108,00 | 4 | -11,64 | -14,23 | -16,96 |
| 107,33 | 5 | -11,15 | -13,77 | -16,51 |
| … | … | … | … | … |
| 100,00 | 16 | -6,40 | -9,19 | -11,97 |
| … | … | … | … | … |
| 92,67 | 27 | -3,06 | -5,57 | -8,16 |
| 92,00 | 28 | -2,82 | -5,29 | -7,85 |
| 91,33 | 29 | -2,61 | -5,02 | -7,55 |
| 90,67 | 30 | -2,40 | -4,76 | -7,26 |
| 90,00 | 31 | -2,20 | -4,51 | -6,97 |

Table 2: Example of an instrument vector file (call option example)
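The values in Table 2 appear to be reproduced, to two decimals, by a plain Black & Scholes European call with strike 100, six months to expiry and r = 2%, if the three volatility points are assumed to be 21%, 31% and 41% (a mid volatility of 31% shifted by 10 percentage points, which would be consistent with the volatility shift parameter described later). These volatilities are an inference, not stated in the source, and OMS II's own pricing library, spreads and adjustments are not modelled. The sketch below builds the 31-point price grid (spot ±10%) and negates the value because the call is sold; the function names are hypothetical.

```python
import math
from statistics import NormalDist

N = NormalDist().cdf


def bs_call(S, K, T, r, sigma):
    """Black & Scholes value of a European call without dividends."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return S * N(d1) - K * math.exp(-r * T) * N(d2)


def instrument_vector_file(spot, price_range, n_points, vols, value_fn):
    """Rows run from the highest to the lowest price; columns are the volatility points."""
    step = 2 * price_range * spot / (n_points - 1)
    prices = [spot * (1 + price_range) - i * step for i in range(n_points)]
    return prices, [[value_fn(p, v) for v in vols] for p in prices]


# Sold call, strike 100, 6 months, r = 2 %. The volatilities 21/31/41 % are an
# ASSUMPTION inferred from Table 2, not parameters stated in the source.
prices, vf = instrument_vector_file(
    spot=100.0, price_range=0.10, n_points=31, vols=[0.21, 0.31, 0.41],
    value_fn=lambda S, sigma: -bs_call(S, 100.0, 0.5, 0.02, sigma),
)
for point, (price, row) in enumerate(zip(prices, vf), start=1):
    print(f"{price:7.2f} {point:3d} " + " ".join(f"{x:7.2f}" for x in row))
```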

The values are negative because a sold option carries only obligations and therefore has a negative market value. For every instrument (option, forward or future) on an underlying in which any customer has positions, an instrument vector file is calculated as in Table 2. For each position in such an instrument, a “positional vector file” is then created by applying the position-specific data to the instrument vector file, so that each point in the matrix shows the market value of the position in that specific scenario.

For an option, the instrument vector file is simply multiplied by the contract size and the number of contracts.

For a forward, the instrument vector file is used together with the contracted forward price to create the corresponding P/L at each point.

For a future, the situation is the same as for a forward, except that in end-of-day calculations the market value has already been settled as variation margin. The market value is therefore by definition zero in standard evening margin calculations.

Once each position in a portfolio has a corresponding positional vector file, the next level of calculation is performed. Note that up to this point the calculations are very similar to those of the more widespread margin model SPAN; the real difference between the models lies in how correlation is handled.
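The three cases can be sketched as follows, assuming the row-by-column list layout of Table 2 and hypothetical function names. For options the per-contract values are taken from the position's side (negative for a sold option, as in Table 2); for forwards and futures a direction of +1 or -1 marks bought or sold contracts, and the price scenarios are assumed to be the forward prices at each point of the grid.

```python
def option_positional(instrument_vf, contract_size, n_contracts):
    """Option: scale the per-option values of the instrument vector file."""
    return [[v * contract_size * n_contracts for v in row] for row in instrument_vf]


def forward_positional(scenario_prices, contracted_price, contract_size,
                       n_contracts, direction, n_vol_points=3):
    """Forward: P/L against the contracted forward price in each price scenario.

    direction -- +1 for a bought (long) and -1 for a sold (short) forward.
    A forward does not depend on implied volatility, so each row is repeated
    across the volatility columns.
    """
    return [[direction * (f - contracted_price) * contract_size * n_contracts] * n_vol_points
            for f in scenario_prices]


def future_positional(scenario_prices, settlement_price, contract_size,
                      n_contracts, direction, n_vol_points=3):
    """Future: as a forward, but today's value has already been settled as variation
    margin, so P/L is measured from today's settlement price (zero at that price)."""
    return forward_positional(scenario_prices, settlement_price, contract_size,
                              n_contracts, direction, n_vol_points)


# Hypothetical numbers: three price scenarios around a settlement price of 100.
print(future_positional([101.0, 100.0, 99.0], 100.0, 10, 5, direction=+1))
# [[50.0, 50.0, 50.0], [0.0, 0.0, 0.0], [-50.0, -50.0, -50.0]]
```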

3.2.3 Correlation in OMS II

3.2.3.1 General


3.2.3.2 Perfectly correlated underlyings

OMS II handles correlation between positions by looking at the correlation between their underlying instruments. This means that all options, forwards and futures with the same underlying equity or index are considered perfectly correlated.

Note, however, that parameters determine whether all instruments based on one underlying instrument should be treated as perfectly correlated. For an underlying equity such as “Ericsson”, for example, NOMX Clearing decides via these parameters whether forwards with different times to expiry should be perfectly correlated. Currently, the parameters are set so that all instruments based on the same underlying are perfectly correlated. In equity markets this is not an unusual assumption, given the low time dependency of instruments on the same underlying equity compared with more time-dependent markets such as interest rate or electricity markets.

Take all positional vector files for a given underlying instrument and put them in a “stack”, as in Figure 2. Perfect correlation then means that the values at the same point in each vector file are summed, creating a new vector file that is the sum of all positions in instruments with the same underlying.

Figure 2: Several instruments with the same underlying

After this step, all positions in the portfolio that are based on one underlying are represented by a single resulting vector file.
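The stacking step amounts to an element-wise sum over positional vector files that share the same grid. The sketch below assumes that layout (rows = price points, columns = volatility points); the function name and the small three-point example are hypothetical.

```python
def sum_same_underlying(vector_files):
    """Perfect correlation: add the same point of every positional vector file."""
    n_rows, n_cols = len(vector_files[0]), len(vector_files[0][0])
    for vf in vector_files:
        if len(vf) != n_rows or any(len(row) != n_cols for row in vf):
            raise ValueError("all vector files must share the same grid")
    return [[sum(vf[i][j] for vf in vector_files) for j in range(n_cols)]
            for i in range(n_rows)]


# Hypothetical 3-point example: a sold call partly hedged with a long future.
# At high prices (first row) the call loses value and the future gains.
sold_call = [[-1200, -1500, -1800], [-600, -900, -1200], [-200, -400, -700]]
long_future = [[1000, 1000, 1000], [0, 0, 0], [-1000, -1000, -1000]]
print(sum_same_underlying([sold_call, long_future]))
# [[-200, -500, -800], [-600, -900, -1200], [-1200, -1400, -1700]]
```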

3.2.3.3 Perfectly uncorrelated underlyings

At NOMX Clearing, different underlyings are usually set as perfectly uncorrelated. This means that the worst-case scenario is chosen separately in the sum vector file of each underlying. The example below has two uncorrelated underlyings, X and Y.

| Underlying X: price | Point | Volatility low | Volatility mid | Volatility high |
|---|---|---|---|---|
| 109,33 | 2 | -1582 | -1896 | -2233 |
| 108,67 | 3 | -1518 | -1837 | -2176 |
| 108,00 | 4 | -1455 | -1779 | -2119 |
| 107,33 | 5 | -1394 | -1721 | -2064 |
| … | … | … | … | … |
| 100,00 | 16 | -800 | -1149 | -1497 |
| … | … | … | … | … |
| 92,67 | 27 | -382 | -696 | -1020 |
| 92,00 | 28 | -353 | -662 | -982 |
| 91,33 | 29 | -326 | -628 | -944 |
| 90,67 | 30 | -300 | -595 | -907 |
| 90,00 | 31 | -275 | -564 | -871 |

Table 3: Total sum vector file for underlying X

The negative sign indicates that the positions on this underlying give rise to a margin requirement for their owner (the portfolio).

| Underlying Y: price | Point | Volatility low | Volatility mid | Volatility high |
|---|---|---|---|---|
| 190,00 | 1 | 6586 | 7823 | 9161 |
| 190,67 | 2 | 6327 | 7583 | 8931 |
| 191,33 | 3 | 6072 | 7347 | 8703 |
| 192,00 | 4 | 5821 | 7114 | 8478 |
| 192,67 | 5 | 5575 | 6885 | 8255 |
| … | … | … | … | … |
| 200,00 | 16 | 3200 | 4595 | 5986 |
| … | … | … | … | … |
| 207,33 | 27 | 1528 | 2786 | 4080 |
| 208,00 | 28 | 1412 | 2647 | 3926 |
| 208,67 | 29 | 1303 | 2512 | 3776 |
| 209,33 | 30 | 1199 | 2381 | 3628 |
| 210,00 | 31 | 1101 | 2255 | 3484 |

Table 4: Total sum vector file for underlying Y

Another underlying, Y, whose vector file is entirely positive (it could be a bought option), is then added to the portfolio. With two uncorrelated underlyings, the worst-case scenario for each vector file can lie at any point. For underlying X it is point (1,3) with -2 290, and for Y it is point (31,1) with 1 101. The sum is -1 189. Technically, a new vector file is created with this value at every point, as in Table 5. Note that in this example the volatility dimension is also treated as totally uncorrelated. If necessary, the system can keep the correlation in volatility perfect and thereby treat each “column” of the file separately in the calculations.

| X & Y: point | Volatility low | Volatility mid | Volatility high |
|---|---|---|---|
| 1 | -1189 | -1189 | -1189 |
| 2 | -1189 | -1189 | -1189 |
| 3 | -1189 | -1189 | -1189 |
| 4 | -1189 | -1189 | -1189 |
| 5 | -1189 | -1189 | -1189 |
| … | … | … | … |
| 16 | -1189 | -1189 | -1189 |
| … | … | … | … |
| 27 | -1189 | -1189 | -1189 |
| 28 | -1189 | -1189 | -1189 |
| 29 | -1189 | -1189 | -1189 |
| 30 | -1189 | -1189 | -1189 |
| 31 | -1189 | -1189 | -1189 |

Table 5: Total sum vector file for underlyings X & Y

The last step for the portfolio is to search this vector file for the lowest value (-1 189), which constitutes the margin requirement for the portfolio (provided it consists only of positions defined on underlyings X and Y).

3.2.3.4 Underlyings with some correlation

For instruments based on different underlyings that show significant correlation, OMS II offers a method for taking correlation into account, called the “window method”. It was primarily developed for interest rate instruments but can be applied to all types of instruments and markets within the OMS II model. The vector files of two instruments based on two different underlyings give the following situation:

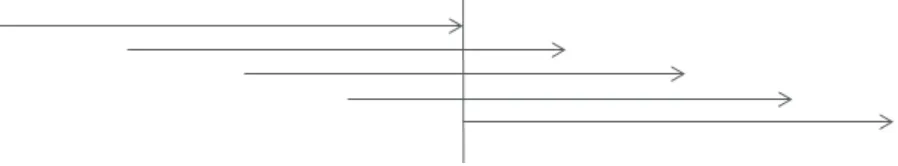

| Point | Price X | X: vol low | X: vol mid | X: vol high | Price Y | Y: vol low | Y: vol mid | Y: vol high |
|---|---|---|---|---|---|---|---|---|
| 1 | 110,00 | -286 | -340 | -398 | 120,00 | -377 | -222 | -55 |
| 2 | 109,33 | -275 | -330 | -388 | 120,67 | -409 | -252 | -84 |
| 3 | 108,67 | -264 | -319 | -378 | 121,33 | -441 | -282 | -112 |
| 4 | 108,00 | -253 | -309 | -369 | 122,00 | -472 | -311 | -140 |
| 5 | 107,33 | -242 | -299 | -359 | 122,67 | -503 | -339 | -168 |
| 6 | 106,67 | -232 | -290 | -349 | 123,33 | -533 | -368 | -196 |
| 7 | 106,00 | -222 | -280 | -340 | 124,00 | -563 | -396 | -223 |
| … | … | … | … | … | … | … | … | … |
| 16 | 100,00 | -139 | -200 | -260 | 130,00 | -800 | -626 | -452 |
| … | … | … | … | … | … | … | … | … |
| 25 | 94,00 | -77 | -134 | -191 | 136,00 | -978 | -815 | -650 |
| 26 | 93,33 | -72 | -127 | -184 | 136,67 | -994 | -834 | -670 |
| 27 | 92,67 | -66 | -121 | -177 | 137,33 | -1 009 | -852 | -690 |
| 28 | 92,00 | -61 | -115 | -171 | 138,00 | -1 023 | -869 | -709 |
| 29 | 91,33 | -57 | -109 | -164 | 138,67 | -1 037 | -886 | -728 |
| 30 | 90,67 | -52 | -104 | -158 | 139,33 | -1 050 | -902 | -746 |
| 31 | 90,00 | -48 | -98 | -151 | 140,00 | -1 062 | -918 | -764 |

Table 6: Two vector files with different underlyings

If the two instruments are totally uncorrelated, the total margin is calculated by adding the worst cases of the two files (-398 + -1 062 = -1 460). If they are totally correlated, the total margin is calculated by adding the same points of both files and taking the worst resulting point (-48 + -1 062 = -1 110).
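Both extreme treatments can be checked against Table 6 with the short sketch below. Only the rows printed in Table 6 are included; since both files change monotonically with price, the extremes appear to lie in those rows. With the rounded table values the uncorrelated case gives -1 460. The vol_correlated option corresponds to keeping implied volatility perfectly correlated, as mentioned for Tables 3 to 5. Function names are illustrative.

```python
# Rows 1-7, 16 and 25-31 of Table 6 (rows = price points, columns = low/mid/high volatility).
X = [[-286, -340, -398], [-275, -330, -388], [-264, -319, -378], [-253, -309, -369],
     [-242, -299, -359], [-232, -290, -349], [-222, -280, -340], [-139, -200, -260],
     [-77, -134, -191], [-72, -127, -184], [-66, -121, -177], [-61, -115, -171],
     [-57, -109, -164], [-52, -104, -158], [-48, -98, -151]]
Y = [[-377, -222, -55], [-409, -252, -84], [-441, -282, -112], [-472, -311, -140],
     [-503, -339, -168], [-533, -368, -196], [-563, -396, -223], [-800, -626, -452],
     [-978, -815, -650], [-994, -834, -670], [-1009, -852, -690], [-1023, -869, -709],
     [-1037, -886, -728], [-1050, -902, -746], [-1062, -918, -764]]


def uncorrelated_margin(files, vol_correlated=False):
    """Worst case of each underlying's vector file, added together."""
    if vol_correlated:
        # Implied volatility kept perfectly correlated: treat each column separately.
        n_cols = len(files[0][0])
        return min(sum(min(row[j] for row in f) for f in files) for j in range(n_cols))
    return sum(min(min(row) for row in f) for f in files)


def correlated_margin(files):
    """Add the same point in every file, then take the worst resulting point."""
    n_rows, n_cols = len(files[0]), len(files[0][0])
    return min(sum(f[i][j] for f in files) for i in range(n_rows) for j in range(n_cols))


print(uncorrelated_margin([X, Y]))                       # -1460
print(correlated_margin([X, Y]))                         # -1110
print(uncorrelated_margin([X, Y], vol_correlated=True))  # -1348
```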


This model is interesting in that it does not try to capture the actual co-movement of two (or more) instruments in a single number such as a correlation coefficient. Instead, it looks at the correlation effects in the extreme cases. This makes the model conservative, since its effect is mainly determined by the extreme movements, i.e. the more turbulent days in the historical data.

The downside is that estimating the window sizes is quite complicated and demands considerable numerical work: it involves examining each historical event and estimating the minimum window size for each date.

This is illustrated in Table 7.

| Point | Price X | X: vol low | X: vol mid | X: vol high | Price Y | Y: vol low | Y: vol mid | Y: vol high |
|---|---|---|---|---|---|---|---|---|
| 1 | 110,00 | -286 | -340 | -398 | 120,00 | -377 | -222 | -55 |
| 2 | 109,33 | -275 | -330 | -388 | 120,67 | -409 | -252 | -84 |
| 3 | 108,67 | -264 | -319 | -378 | 121,33 | -441 | -282 | -112 |
| 4 | 108,00 | -253 | -309 | -369 | 122,00 | -472 | -311 | -140 |
| 5 | 107,33 | -242 | -299 | -359 | 122,67 | -503 | -339 | -168 |
| 6 | 106,67 | -232 | -290 | -349 | 123,33 | -533 | -368 | -196 |
| 7 | 106,00 | -222 | -280 | -340 | 124,00 | -563 | -396 | -223 |
| … | … | … | … | … | … | … | … | … |
| 16 | 100,00 | -139 | -200 | -260 | 130,00 | -800 | -626 | -452 |
| … | … | … | … | … | … | … | … | … |
| 25 | 94,00 | -77 | -134 | -191 | 136,00 | -978 | -815 | -650 |
| 26 | 93,33 | -72 | -127 | -184 | 136,67 | -994 | -834 | -670 |
| 27 | 92,67 | -66 | -121 | -177 | 137,33 | -1 009 | -852 | -690 |
| 28 | 92,00 | -61 | -115 | -171 | 138,00 | -1 023 | -869 | -709 |
| 29 | 91,33 | -57 | -109 | -164 | 138,67 | -1 037 | -886 | -728 |
| 30 | 90,67 | -52 | -104 | -158 | 139,33 | -1 050 | -902 | -746 |
| 31 | 90,00 | -48 | -98 | -151 | 140,00 | -1 062 | -918 | -764 |

Table 7: Two vector files in the window method

In the upper part of the vector file, a small rectangle (the window) restricts the area within which the worst values of the two files are combined. In this position the worst case is (-286 + -503 = -789). From there, the window “slides” down the vector file and produces a new value for each position. Here the volatility dimension is treated as totally correlated; if it were treated as uncorrelated, the worst case would be (-398 + -503 = -901).

The extreme cases are easy to understand: a window that spans the entire vector file is equivalent to the uncorrelated case, while a window that covers only one row is equivalent to the fully correlated case. Window sizes are expressed as a percentage of the valuation interval.
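A minimal sketch of the window method as described above follows. It assumes that, for each window position, the worst value of each file inside the window is taken and the two are added, with volatility correlated column by column unless stated otherwise. That the window in Table 7 covers points 1 to 5 is inferred from the stated worst case (-286 + -503), not given in the source. Window sizes are expressed here in rows rather than as a percentage of the valuation interval, and all names are hypothetical.

```python
# Rows 1-7 of Table 6 (columns = low/mid/high implied volatility).
X = [[-286, -340, -398], [-275, -330, -388], [-264, -319, -378], [-253, -309, -369],
     [-242, -299, -359], [-232, -290, -349], [-222, -280, -340]]
Y = [[-377, -222, -55], [-409, -252, -84], [-441, -282, -112], [-472, -311, -140],
     [-503, -339, -168], [-533, -368, -196], [-563, -396, -223]]


def window_value(x_file, y_file, start, window_rows, vol_correlated=True):
    """Worst combination of the two files inside one window position.

    The window covers rows start .. start + window_rows - 1. With vol_correlated=True
    the worst values are combined column by column (volatility perfectly correlated).
    """
    rows = range(start, start + window_rows)
    if vol_correlated:
        n_cols = len(x_file[0])
        return min(min(x_file[i][j] for i in rows) + min(y_file[i][j] for i in rows)
                   for j in range(n_cols))
    return min(min(x_file[i]) for i in rows) + min(min(y_file[i]) for i in rows)


def window_margin(x_file, y_file, window_rows, vol_correlated=True):
    """Slide the window down the vector files and keep the worst value.

    window_rows = 1 gives the fully correlated case; window_rows equal to the
    number of rows gives the uncorrelated case.
    """
    if not 1 <= window_rows <= len(x_file):
        raise ValueError("window_rows must be between 1 and the number of rows")
    return min(window_value(x_file, y_file, s, window_rows, vol_correlated)
               for s in range(len(x_file) - window_rows + 1))


print(window_value(X, Y, 0, 5))                        # -789
print(window_value(X, Y, 0, 5, vol_correlated=False))  # -901
```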

3.2.4 Controlling the algorithm

The previous sections described the general calculations, but OMS II has many settings that can substantially alter the way margin is calculated.


ups this is not the case since the relative effect of the interest rate in option pricing is limited.

Dividend yield: The dividend yield used when evaluating options. To evaluate American options on futures properly, the dividend yield is set equal to the risk-free interest rate.
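Setting the dividend yield equal to the risk-free rate works because the Black & Scholes formula with a continuous dividend yield q then reduces exactly to Black's (1976) formula for options on futures. The sketch shows this for European calls; the same substitution (zero cost of carry) applies in a lattice model for American options. Function names are illustrative and not taken from OMS II.

```python
import math
from statistics import NormalDist

N = NormalDist().cdf


def gbs_call(S, K, T, r, q, sigma):
    """European call, Black & Scholes with continuous dividend yield q."""
    d1 = (math.log(S / K) + (r - q + 0.5 * sigma ** 2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return S * math.exp(-q * T) * N(d1) - K * math.exp(-r * T) * N(d2)


def black76_call(F, K, T, r, sigma):
    """European call on a futures price F (Black, 1976)."""
    d1 = (math.log(F / K) + 0.5 * sigma ** 2 * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return math.exp(-r * T) * (F * N(d1) - K * N(d2))


# With q = r, the spot model evaluated at the futures price gives the futures model.
F, K, T, r, sigma = 105.0, 100.0, 0.75, 0.03, 0.25
print(gbs_call(F, K, T, r, r, sigma), black76_call(F, K, T, r, sigma))
```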

Adjustment for erosion of time value: The number of days by which the time to maturity is reduced when evaluating held options. This adjusts for the fact that, by the time the defaulting party's positions have been neutralised, the time value of the options has decreased.

Valuation points: OMS II differs from SPAN in that the number of valuation points is a parameter. For equity products it is 31 points; when the model was used for interest rate products, it was 201 points.

Adjustment of futures: Adjustment factor (spread parameter) for futures. Since futures have a fixing price, there are no bid/ask values that can be used to place bought and sold positions on different sides of the spread.

Highest volatility for bought options: Applies only to bought options and effectively caps the value of the option, since volatility is one of the most important inputs to the valuation.

Lowest volatility for sold options: Applies only to sold options and effectively sets a floor for the value of the option, since volatility is one of the most important inputs to the valuation.

Volatility shift parameter: Fixed parameter that determines the size of the volatility interval. In the previous section a three-point interval was used for volatility. The normal value of this parameter is 10% (in absolute terms).

Volatility spread: Defines the spread for options. The spread parameter is a fixed value.

Highest value bought in relation to sold options: The minimum spread between the values of bought and sold options; if the spread is too small, the value of the bought option is decreased.

Adjustment for negative time value: If the theoretical option value is lower than the intrinsic value, the price is adjusted to equal the intrinsic value.
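How some of these parameters could act on a single option value is sketched below. The parameter names, the 365-day year and the use of a European Black & Scholes put as the pricing function are assumptions for illustration only; OMS II's actual implementation and parameter semantics may differ. A put is used because, unlike a call with a non-negative interest rate, its European value can fall below intrinsic value, which is when the adjustment for negative time value binds.

```python
import math
from statistics import NormalDist

N = NormalDist().cdf


def bs_put(S, K, T, r, sigma):
    """European put, Black & Scholes without dividends (stand-in pricing function)."""
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * T) / (sigma * math.sqrt(T))
    d2 = d1 - sigma * math.sqrt(T)
    return K * math.exp(-r * T) * N(-d2) - S * N(-d1)


def adjusted_put_value(S, K, days_to_expiry, r, sigma, bought, erosion_days=0,
                       max_vol_bought=None, min_vol_sold=None):
    """Put value after three hypothetical OMS II-style parameter adjustments."""
    if bought:
        # Erosion of time value: held (bought) options are valued with fewer days left.
        days = max(days_to_expiry - erosion_days, 0)
        if max_vol_bought is not None:
            sigma = min(sigma, max_vol_bought)  # highest volatility for bought options
    else:
        days = days_to_expiry
        if min_vol_sold is not None:
            sigma = max(sigma, min_vol_sold)    # lowest volatility for sold options
    intrinsic = max(K - S, 0.0)
    if days == 0:
        return intrinsic
    theoretical = bs_put(S, K, days / 365.0, r, sigma)
    # Adjustment for negative time value: never below intrinsic value.
    return max(theoretical, intrinsic)


# A deep in-the-money European put is worth less than intrinsic (50) before the floor.
print(bs_put(50.0, 100.0, 1.0, 0.05, 0.2))
print(adjusted_put_value(50.0, 100.0, 365, 0.05, 0.2, bought=False))  # 50.0
```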

OMS II has numerous other parameters that determine its behaviour, but those above can be considered among the most important and interesting ones.

3.3 Purpose & Limitations

3.3.1 Instruments and markets


Both forwards and futures are adequately handled in the model, and combinations of derivatives on the same underlying are handled well.

Both equity and index products show limited time dependency compared with commodity7 and interest rate markets. Technically, this means that forward/futures prices can be estimated from spot prices by simple discounting, taking into account dividends for both indices and equities8. OMS II accommodates this by calculating forward prices from spot prices, with time-dependent dividend estimates as parameters in the system.

Historically, the OMS II model has been used for a large number of markets and instruments, but at NOMX Clearing it is now used for equity and index instruments on the Nordic market, a purpose for which it is very well suited.
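Estimating forward prices from spot prices by simple discounting corresponds to the cost-of-carry relation sketched below, with known cash dividends subtracted at their present value. Continuous compounding, the dividend schedule format and the function name are assumptions; in OMS II the dividend estimates are system parameters.

```python
import math


def forward_price(spot, r, T, dividends=()):
    """Cost-of-carry forward price for an equity or index with known cash dividends.

    spot      -- current spot price
    r         -- continuously compounded risk-free rate
    T         -- time to expiry in years
    dividends -- iterable of (payment_time_in_years, amount); only dividends paid
                 before expiry reduce the forward price
    """
    pv_dividends = sum(amount * math.exp(-r * t) for t, amount in dividends if 0.0 <= t <= T)
    return (spot - pv_dividends) * math.exp(r * T)


print(round(forward_price(100.0, 0.02, 1.0), 4))                # no dividends
print(round(forward_price(100.0, 0.02, 1.0, [(0.5, 2.0)]), 4))  # one dividend of 2.0
```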

3.3.1.1 Options

The options are of the standard premium-paid type; no future-style options are handled within OMS II (nor traded on the exchange). The option contracts are standard American and European options, whose behaviour and pricing are so standard that closer descriptions are not necessary. It can, however, be mentioned that OMS II has a library of formulas for this purpose that incorporates analytical as well as common lattice models for pricing these options.

There are, however, currently some positions in cash-or-nothing binary options. The valuation of these instruments is not complicated and is done within the Black & Scholes framework according to the following formulas, in standard notation:

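$$C(t) = e^{-r(T-t)}\, N\big(d(t)\big) \tag{1}$$

$$P(t) = e^{-r(T-t)}\, N\big(-d(t)\big) \tag{2}$$

$$d(t) = \frac{\ln\left(\frac{S}{K}\right) + \left(r - \frac{\sigma^2}{2}\right)(T-t)}{\sigma\sqrt{T-t}} \tag{3}$$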
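Equations (1) to (3) translate directly into code. The sketch below assumes a unit cash payout by default and evaluates N, the standard normal distribution function, via Python's statistics.NormalDist. The delta function (the derivative of equation (1) with respect to S) is added to illustrate the following point about strikes close to the underlying price near expiry. Function names are hypothetical.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def d(S, K, r, sigma, tau):
    """d(t) of equation (3); tau = T - t is the time to expiry in years."""
    return (math.log(S / K) + (r - 0.5 * sigma ** 2) * tau) / (sigma * math.sqrt(tau))


def binary_call(S, K, r, sigma, tau, payout=1.0):
    """Cash-or-nothing call, equation (1): pays `payout` if S_T > K."""
    return payout * math.exp(-r * tau) * _STD_NORMAL.cdf(d(S, K, r, sigma, tau))


def binary_put(S, K, r, sigma, tau, payout=1.0):
    """Cash-or-nothing put, equation (2): pays `payout` if S_T < K."""
    return payout * math.exp(-r * tau) * _STD_NORMAL.cdf(-d(S, K, r, sigma, tau))


def binary_call_delta(S, K, r, sigma, tau, payout=1.0):
    """Sensitivity of the binary call to the underlying price S."""
    return (payout * math.exp(-r * tau) * _STD_NORMAL.pdf(d(S, K, r, sigma, tau))
            / (S * sigma * math.sqrt(tau)))


# At the strike, the delta grows sharply as expiry approaches.
for days in (180, 30, 1):
    print(days, round(binary_call_delta(100.0, 100.0, 0.02, 0.30, days / 365), 4))
```

Because a call and a put with the same strike together always pay the cash amount, their values sum to the discounted payout, which is a convenient sanity check on an implementation.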

Although the discontinuous binary payoff can be a challenge when the strike is close to the market price of the underlying and the option is close to expiry, this is manageable because of the low volumes in these instruments. From a risk management point of view, it is also possible to hedge these instruments to a large extent using standard option contracts9.

3.3.2 Market conditions for the model

3.3.2.1 General on market state

The financial market is not a quiet, stable and calm system. On the contrary, markets tend to move between different states, characterised by different volatilities and correlation structures of the underlying prices.

7

Especially energy markets such as electricity or gas.

8

Both declared dividends and estimates of future dividends.

9


A very crude way of connecting different models to market states is shown in the following figure:

Figure 3: Market movements and correlation

This is only indicative on the usage of models but can be useful for the validation since it points at interesting areas which should be investigated in this document. A VaR model has its merits by its ability to accurately predict risks. Its usual usage is very much focused on day to day markets. Sometimes used as risk mandate calculation in funds or trading environment. This means that the model is used were market movements are small, or rather were the larger amount of market movements can be found.

Traditional margin models tend to give correlation rather little attention and concentrate on the individual volatility of each separate underlying. Movements are in many cases caused by events specific to the underlying and are uncorrelated with the rest of the market, for example ahead of annual reports or around media coverage of company-specific events. OMS II is a traditional, conservative margin calculation model. The model shows its strengths precisely where market movements are “large”, and the calculated margin typically shows resilience against such movements.

This also indicates that when margin models are accused of “over-margining”, it is in some situations merely a misconception about the market state for which the model is intended. A traditional margin model will always (and should) produce higher numbers than a traditional VaR model when used in a normal day-to-day market environment.

Stress test models are used for the rare events in which market crashes lurk. In this region observations are very scarce, which makes statistical measures uncertain10. For these stress tests it is common to assume high positive correlation: “everything moves together in a crash”.

3.3.2.2 Correlation within one underlying instrument

For all risk models, margin models included, correlation is always the most debated and questioned aspect of the design.

10 This is the usual objection to using a VaR model with a high confidence level as a stress test model.


For OMS II, correlation has two aspects. One of them is the correlation between instruments defined on the same underlying but with different times to expiry. For example, one could ask whether a forward on an equity with one month to expiry is perfectly correlated with a forward on the same equity with two years to expiry. In OMS II the current setting11 is that such products are treated as perfectly correlated. As discussed before, this implies that there are no time dependencies in these products. Equity and index products are certainly the most stable underlying markets in this respect, and a movement in the equity spot price influences the forward price directly. Changes in the forward price that are not caused by changes in the spot price stem from changes in expected future dividends, tax issues, etc., so the two instruments are not perfectly correlated.

Could this lead OMS II to under-margin portfolios that contain two forwards on the same underlying but in opposite directions? Yes, definitely. Would this be a problem? From the perspective of the clearing house it would not. Individual equities could show this behaviour from time to time, but on average across all equities in the market it would not lead to significant under-margining.

In addition, OMS II has minimum spread parameters that cushion this effect, because the instruments are valued on opposite sides of the spread.
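To illustrate what treating forwards on the same underlying as perfectly correlated means, the sketch below moves one common spot shift through a grid of valuation points and takes the worst portfolio value change. The evenly spaced grid of 31 points (the number is borrowed from section 3.8), the 10% scanning range, the dividend-free forward price S·e^{rτ} and all names are assumptions made for illustration; this is not the OMS II vector file logic.

```python
import math


def forward_price(S: float, r: float, tau: float) -> float:
    """Forward price without dividends (a simplifying assumption)."""
    return S * math.exp(r * tau)


def worst_case_change(positions, S0, r, scan_range, n_points=31):
    """Worst portfolio value change over evenly spaced spot shifts in [-scan_range, +scan_range].

    positions: list of (quantity, time_to_expiry_in_years). All forwards share one underlying
    and receive the same spot shift, i.e. they are treated as perfectly correlated.
    """
    worst = 0.0
    for i in range(n_points):
        shift = -scan_range + 2.0 * scan_range * i / (n_points - 1)
        S = S0 * (1.0 + shift)
        change = sum(q * (forward_price(S, r, tau) - forward_price(S0, r, tau))
                     for q, tau in positions)
        worst = min(worst, change)
    return worst


S0, r, scan = 100.0, 0.01, 0.10   # hypothetical spot, rate and scanning range
naked_long = worst_case_change([(+1, 1 / 12)], S0, r, scan)          # long 1-month forward
naked_short = worst_case_change([(-1, 2.0)], S0, r, scan)            # short 2-year forward
spread = worst_case_change([(+1, 1 / 12), (-1, 2.0)], S0, r, scan)   # both together
print(f"long 1m: {naked_long:.3f}  short 2y: {naked_short:.3f}  spread: {spread:.3f}")
```

The opposite forwards net out almost completely; in this simplified setting the residual reflects only the carry difference between the two expiries, while dividend or tax-driven moves that are not captured by the common spot shift are exactly the under-margining effect discussed above.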

The alternative would be to include correlation effects12 within equity and index products that share the same underlying. This would make margin replication significantly more complex for customers and would make it even harder to explain margin behaviour to the counterparties of NOMX Clearing. The benefit from the clearing house perspective would be very limited.

It is worth noting that this is the same discussion that arises for interest rate products. If the underlying is the spot rate of a credit provider (STIBOR, for example), the term structure is what links the future value to the spot rate. For interest rate products this is the normal mindset, and it is also what makes traditional interest rate products hard to accommodate in traditional margin models. In most cases clearing houses construct interest rate index products and define futures on these indices, so that traditional margin models can be used without very complex margin parameter set-ups.

3.3.2.3 Correlation between instruments

This aspect of correlation is what people ordinarily mean by correlation in equity markets. It is also an area that is hard to “solve”. Using a variance-covariance methodology, as an analytical VaR model would, is not really an option for clearing houses using this type of model.

OMS II applies no correlation between different underlyings. Figure 3 shows that OMS II is used in a region where it is hard to know which correlation to use, because the correlation structure changes for larger movements in the underlying values.
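The practical consequence of zero correlation between underlyings can be sketched as follows: each underlying is scanned on its own and the worst-case losses are added, so a loss in one underlying is never offset by a gain in another. For comparison, the sketch also aggregates the same per-underlying figures with a variance-covariance formula, as an analytical VaR model would. The loss figures, correlation values and function names are hypothetical.

```python
import math


def oms_style_total(per_underlying_worst):
    """Add worst-case losses per underlying: no offset between underlyings."""
    return sum(per_underlying_worst)


def var_covar_total(per_underlying_risk, corr):
    """Analytical aggregation sqrt(w' C w) with correlation matrix C."""
    n = len(per_underlying_risk)
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += per_underlying_risk[i] * corr[i][j] * per_underlying_risk[j]
    return math.sqrt(total)


risks = [100.0, 80.0]   # hypothetical worst-case losses for two underlyings
print("simple addition:", oms_style_total(risks))
for rho in (1.0, 0.5, 0.0):
    corr = [[1.0, rho], [rho, 1.0]]
    print(f"variance-covariance, rho={rho}: {var_covar_total(risks, corr):.2f}")
```

Simple addition coincides with the variance-covariance result only when the losses are assumed perfectly correlated; any lower correlation gives a smaller number, which is the conservatism of the additive approach.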

11 As discussed, this is really a parameter setting, but within NOMX Clearing this is how OMS II has handled the situation since the model was created, and the issue is therefore discussed here as part of the model.


3.3.3 Calculation issues

Historically, margin models were used only to calculate margin numbers. During the last year the models have also been used to look at intraday risk. A margin model determines how much margin a counterparty should pay at a certain point in time (end of day), even if the actual calculations are made intraday. A risk model, on the other hand, calculates the risk at that specific moment.

The set-up for intraday margin calculations differs from end-of-day calculations in some respects. In end-of-day calculations it is perfectly clear which options have been exercised. This is especially true on the expiry date, since at the end of the day the position either exists as a delivery position or does not exist at all (it was not exercised and has therefore been removed).

All intraday calculations must make assumptions about expiring options and about options with built-in early exercise opportunities, such as American-style options. Expiring forwards must also be dealt with in the calculations.

NOMX Clearing has historically done considerable work in this area, and the OMS II system has many parameters that are used to optimise the use of the OMS II model in intraday calculations.

The other challenge for intraday margin calculations is the prices used. End-of-day calculations use official prices, in most cases provided by exchanges. Intraday calculations require the clearing house to use some technique to obtain prices for the instruments. At NOMX Clearing a system called the “price server system” handles this. The system is not within the scope of this validation, but in short it calculates accurate real-time prices for all cleared instruments, much as a larger bank would handle its intraday need for accurate prices.

When OMS II was implemented in the late 1980s, computing power was limited and OMS II was run on high-end machines. Today the calculation time of OMS II is not really an issue, except for more extreme intraday calculations such as those that would be used to supervise the risk of HFT trading.

NOMX Clearing does not use OMS II in this way, so the current use of OMS II is not limited by calculation time or CPU usage.

3.4 Statistical significance

As previously discussed, a traditional margin model does not have the statistical predictability of a VaR model. Its purpose is to calculate a “high enough” margin to ensure the stability of the clearing house.

The scanning range parameters, which are the main risk parameters in most margin models, are usually calculated using some statistical method. There are a few trusted methods in this area:

Pure normal (or log-normal) distribution, where the standard deviation is calculated over the “look-back period”13

Actual historical distribution

Some chosen extreme value distribution technique


All these methods have a statistical interpretation and can be evaluated with back testing techniques.
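The first two methods in the list can be compared in a short sketch. It fits a zero-mean normal distribution to the look-back returns and, separately, takes the empirical (nearest-rank) quantile of absolute moves, both two-sided at the same confidence level. The returns are synthetic, fat-tailed Student t draws standing in for one year of data, not real market data; the confidence level of 99.2% is taken from section 4, and the function names are hypothetical. The extreme value approach is not covered here.

```python
import math
import random
from statistics import NormalDist, stdev


def normal_scanning_range(returns, confidence):
    """Method 1: zero-mean normal fit, two-sided so that P(|X| <= range) = confidence."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    return z * stdev(returns)


def historical_scanning_range(returns, confidence):
    """Method 2: empirical nearest-rank quantile of absolute moves (no distributional assumption)."""
    moves = sorted(abs(x) for x in returns)
    rank = min(len(moves), math.ceil(confidence * len(moves)))
    return moves[rank - 1]


# Synthetic daily returns: Student t with 4 degrees of freedom, scaled to about 1 %.
rng = random.Random(42)
nu = 4
returns = [0.01 * rng.gauss(0.0, 1.0) / math.sqrt(rng.gammavariate(nu / 2, 2.0) / nu)
           for _ in range(250)]

conf = 0.992
print(f"normal:     {normal_scanning_range(returns, conf):.4f}")
print(f"historical: {historical_scanning_range(returns, conf):.4f}")
```

With fat-tailed data the two methods can differ noticeably, which is why the choice of method matters for back testing results.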

For a margin model, back testing small portfolios with a limited number of underlyings effectively back tests the scanning range methodology. For portfolios with a larger number of underlyings, the absence of correlation offsets between these underlyings leads to a back testing pattern with very few “testing exceptions”14.

3.5 Risk Factors

When designing a risk model, the key decision is which factors do, and should, affect the result of the model. This is naturally governed by which instruments are traded and in which markets.

For equity and index markets where forwards, futures and standard options are traded, the obvious risk factors are movements in the underlying equities and indices, together with movements in implied volatility for options on these equities and indices.

Other risk factors, such as the interest rate used in option pricing and dividend estimates, could be candidates for inclusion in a risk model given a more complicated set of products.

For OMS II the chosen risk factors (underlying equities and indices together with movements in implied volatility for options on these equities and indices) work well and have been used since the introduction of the model at NOMX Clearing.

3.6 Academic and industry references

There is no academic research that directly served as the starting point for traditional margin models. Their use predates the interest in risk models that came with the introduction of VaR at the end of the 1980s.

There are, of course, academic references for all the option and forward pricing formulas used in the OMS II calculation library, but all these models are (as previously stated) quite standard and widespread.

As regards industry standards, OMS II has set its own footprint, since the model has been sold to, and extensively used by, numerous foreign clearing houses since the early 1990s.

3.7 Key assumptions

As previously stated, OMS II is a rather old model with few built-in assumptions. There are two basic assumptions:

All instruments for which the model calculates margin have accurate, externally given market prices15.

The parameters that define the generation of vector files should describe as accurately as possible the future risk factor movements in the environment in which the model is used.

14 See Appendix I Definitions for the definition of testing exception.


3.8 Historical references

All mathematical models should be evaluated based on how they are used. It is virtually impossible to form an opinion on a margin model if it is unclear what is expected of it.

OMS II was developed in the late 1980s and lives up to the description of margin methodologies given in section 3.1.2.

Computing power was limited at the time, so the basic set-up of 31 valuation points for equity products could not be made much larger. For the same reason the design of the model could not be too numerically complicated. Since the model was developed in parallel with the introduction of exchange-traded, and cleared, derivatives in Sweden, it was also important that the model could be explained to members and external stakeholders. A simple and efficient model was therefore prioritised over a complex “black box” model.

In Sweden, options were introduced before forwards and futures, and the decision to use something other than SPAN was made primarily because SPAN was designed mainly for linear products such as futures.

OMS II has been, and still is, used for OTC equity and index contracts submitted by clearing members for clearing. These contracts are, however, very similar to the exchange-traded ones and pose no real pricing challenge for NOMX Clearing.

Using OMS II as a general margin model for more complicated OTC products with a high degree of tailor-made features would, on the other hand, be very hard, and the use of the OMS II margin model will most likely remain limited to its current scope.

4.

Parameters

NOMX Clearing uses a numerical methodology to estimate scanning range parameters, with a look-back period of one year and a confidence level of 99.2%. On top of this, a procyclicality buffer of 25% is applied to scanning ranges for which 10 years of historical data are not used. The numerical approach relies on history to estimate future behaviour but makes no assumptions16 about the actual distribution of the equity market.
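A minimal sketch of how the stated parameters could combine. It assumes a historical nearest-rank quantile of absolute moves, 250 trading days as the one-year look-back and a multiplicative 25% buffer when ten years of data are not used. The function name, signature and input data are hypothetical, and the actual NOMX Clearing procedure may differ in detail (for example in the holding period of the returns).

```python
import math


def scanning_range(returns, confidence=0.992, lookback=250,
                   has_ten_year_history=False, apc_buffer=0.25):
    """Historical scanning range with an optional procyclicality buffer.

    Uses the most recent `lookback` observations, takes the nearest-rank quantile of
    absolute moves, and scales it by (1 + apc_buffer) when ten years of data are not used.
    """
    window = returns[-lookback:]
    moves = sorted(abs(x) for x in window)
    rank = min(len(moves), math.ceil(confidence * len(moves)))
    base = moves[rank - 1]
    return base if has_ten_year_history else base * (1.0 + apc_buffer)


# Hypothetical returns: an old 50 % move that falls outside the one-year window,
# followed by 300 days cycling through -3 % ... +3 %.
returns = [0.5] + [0.01 * ((i % 7) - 3) for i in range(300)]
print(scanning_range(returns), scanning_range(returns, has_ten_year_history=True))
```

The old outlier does not affect the result because it lies outside the look-back window, which is also why a short look-back can make scanning ranges procyclical and why the buffer is applied.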


5.

Numerical data

5.1 Introduction

The OMS II margin model has been in use for a very long time, and to draw accurate conclusions from the data one must first have some feeling for the composition of the market17 where the model is used. The following table and graph show the distribution across instrument types.

Instrument type | Initial margin (SEK)
Index future | -5 812 064 042
Stock option | -2 745 734 357
Index option | -1 127 118 672
Stock forward | -626 977 858
Sum | -10 311 894 930
Linear | -6 439 041 900
Non-linear | -3 872 853 030
Sum | -10 311 894 930

Table 8: Margin per instrument type

Figure 4: Margin per instrument type

The currency distribution is as follows:

Currency | % of IM | IM per currency (SEK)
SEK | 96,3% | -9 933 969 463
DKK | 2,4% | -247 394 958
EUR | 1,1% | -111 257 178
NOK | 0,2% | -19 273 331
Sum | 100% | -10 311 894 930

Table 9: Currency mix in the equity and index market

Although there are instruments denominated in currencies other than SEK, the volumes in them are small.

5.2 Back testing

5.2.1 General

Back testing is a technique used to check that a mathematical model behaves as expected. NOMX Clearing has automated back testing functionality that produces data daily, and the results are presented monthly to the NASDAQ OMX Clearing Risk Committee. The implemented functionality is a clean back test, in which the composition of the tested portfolio is adjusted for the following:


Changes in market prices for underlyings

Changes in the portfolio composition during the period, such as trading and expiring contracts, i.e. new trades are removed and trades that have expired are artificially included in the market value.

Note that implementing clean back testing functionality in a clearing house is virtually impossible without development in the clearing system; access to normal margin calculations and market values in a data warehouse is not sufficient.

It is also important that the x-day test periods do not form a “sliding” window, since this introduces autocorrelation into the time series. In practice, autocorrelation (and clustering of breaches) is present in most financial data, but overlapping periods should be avoided so that price movements are not counted several times in the analysis. The figure below shows that, with a sliding x-day period, each breach is counted x times.

Figure 5: Overlapping test periods

For the back testing presented for the OMS II methodology the sliding effects have been adjusted for.
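The difference between sliding and non-overlapping x-day periods can be shown with a small sketch: a single large one-day loss is counted once for every overlapping period that contains it, i.e. x times, but only once with non-overlapping periods. The P&L series, margin level and function name are hypothetical, and it is assumed that a period counts as breached if the cumulative loss on any day within it exceeds the margin.

```python
def count_breaches(daily_pnl, margin, x, sliding):
    """Count x-day test periods in which the cumulative loss on any day exceeds the margin.

    sliding=True starts a new period every day (overlapping periods);
    sliding=False steps x days at a time, so each day falls in exactly one period.
    """
    step = 1 if sliding else x
    breaches = 0
    for start in range(0, len(daily_pnl) - x + 1, step):
        cumulative, worst = 0.0, 0.0
        for pnl in daily_pnl[start:start + x]:
            cumulative += pnl
            worst = min(worst, cumulative)
        if -worst > margin:
            breaches += 1
    return breaches


# Hypothetical P&L: 20 quiet days with a single large loss on day 10.
daily_pnl = [0.0] * 20
daily_pnl[10] = -150.0

print(count_breaches(daily_pnl, margin=100.0, x=2, sliding=True))   # prints 2
print(count_breaches(daily_pnl, margin=100.0, x=2, sliding=False))  # prints 1
```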

NOMX Clearing has two different automatic back testing set ups:

Traditional enterprise-level back testing, a daily check of how the calculated initial margin of actual portfolios copes with actual market movements.

A relatively new model-level back testing, in which the initial margin of theoretically constructed positions is checked against actual historical market movements.

There is a broad range of reports describing the results of the back testing programme at NOMX Clearing. They cover both types of back testing, and both are included in this additional validation.

5.2.2 Data

The back testing analysis uses one year of data (2013-10-31 to 2014-10-31). Since the model has been in use for a considerable time, the drawback of not analysing a longer period is limited.

The current markets that are margined using OMS II are Nordic equities and indices. The data is of course limited to these markets.

5.2.3 Enterprise level back testing

Two aspects of the OMS II margin methodology are investigated here. First, the portfolios are divided by size into three groups with an equal number of portfolios, using initial margin as the size measure.


Size group | Initial margin
1 | -20 301 070
2 | -86 987
3 | 8 272

Table 10: Size of the groups for back testing

Before the actual back test calculations, a definition is needed.

Offset percentage, “OP”: A portfolio can have positions that offset each other across different derivative series with the same underlying. This is often referred to as the time spread effect. Options that differ by strike or time to expiry are also correlated, both through the underlying and through implied volatility18.

The correlation effect can be measured by the difference between the naked margin (NM), i.e. the margin without correlation effects between series, and the initial margin (IM):

$$\mathrm{OP} = \frac{\mathrm{NM} - \mathrm{IM}}{\mathrm{NM}} \qquad (4)$$

This measure is used to divide the portfolios into the groups in the following table.

OP Nr | OP
1 | 0%
2 | > 0% and < 10%
3 | > 10% and < 50%
4 | > 50% and < 80%
5 | > 80%

Table 11: OP groups for back testing

This is an interesting measure, as the different groups contain different types of counterparties in terms of size and trading. A high OP for an underlying requires a fairly hedged trading strategy spread over a large number of series on that underlying. Large counterparties are usually not hedged in all underlyings, and since OP is calculated for the whole portfolio, larger counterparties are typically found in the middle groups.

Size group | OP Nr | Breaches | Observations | Confidence level
1 | 1 | 65 | 254 329 | 99,97%
1 | 2 | 3 | 34 511 | 99,99%
1 | 3 | 11 | 93 647 | 99,99%
1 | 4 | 3 | 17 456 | 99,98%
1 | 5 | 7 | 3 732 | 99,81%
2 | 1 | 132 | 330 069 | 99,96%
2 | 2 | 4 | 15 461 | 99,97%
2 | 3 | 9 | 44 481 | 99,98%
2 | 4 | 0 | 10 984 | N/A
2 | 5 | 9 | 2 609 | 99,66%
3 | 1 | 173 | 349 617 | 99,95%
3 | 2 | 3 | 4 270 | 99,93%
3 | 3 | 10 | 14 041 | 99,93%
3 | 4 | 4 | 6 935 | 99,94%
3 | 5 | 104 | 6 093 | 98,29%
Sum | | 537 | 1 188 235 |

Table 12: Back test table

A breach is defined as a change in the market value of the portfolio (an increase or a decrease), on any day during the liquidation period (currently two days), that exceeds the initial margin.

The analysis is straightforward. First, the number of breaches is low. The increase in breaches with OP is very small, which is what should be expected (and wanted), because the OMS II margin methodology applies correlation within one underlying, and the table gives confidence that this is appropriate; otherwise the number of breaches would be significantly higher than the table shows. Summing over OP Nr gives the following:

Size group | OP Nr | Breaches | Observations | Confidence level
1 | N/A | 89 | 403 675 | 99,98%
2 | N/A | 154 | 403 604 | 99,96%
3 | N/A | 294 | 380 956 | 99,92%
Sum | | 537 | 1 188 235 |

Table 13: Back testing table with summed OP Nr
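The confidence levels in Tables 12 and 13 can be reproduced as one minus the ratio of breaches to observations. The sketch below transcribes Table 12, aggregates over OP Nr and recomputes Table 13; the code and its names are illustrative only. Where Table 12 shows N/A (no breaches), this formula would give 100%.

```python
# (size group, OP Nr) -> (breaches, observations), transcribed from Table 12.
TABLE_12 = {
    (1, 1): (65, 254_329), (1, 2): (3, 34_511), (1, 3): (11, 93_647),
    (1, 4): (3, 17_456), (1, 5): (7, 3_732),
    (2, 1): (132, 330_069), (2, 2): (4, 15_461), (2, 3): (9, 44_481),
    (2, 4): (0, 10_984), (2, 5): (9, 2_609),
    (3, 1): (173, 349_617), (3, 2): (3, 4_270), (3, 3): (10, 14_041),
    (3, 4): (4, 6_935), (3, 5): (104, 6_093),
}


def confidence_level(breaches: int, observations: int) -> float:
    """Observed coverage: the share of observations without a breach."""
    return 1.0 - breaches / observations


def summed_by_size_group(table):
    """Aggregate breaches and observations over OP Nr, as in Table 13."""
    totals = {}
    for (size_group, _op_nr), (b, n) in table.items():
        tb, tn = totals.get(size_group, (0, 0))
        totals[size_group] = (tb + b, tn + n)
    return totals


for group, (b, n) in sorted(summed_by_size_group(TABLE_12).items()):
    print(f"size group {group}: {b} breaches / {n} observations -> {confidence_level(b, n):.2%}")
```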

The OMS II methodology shows the desired behaviour in the back test.

Note: It would probably be beneficial to exclude accounts with positive or very small (in absolute terms) initial margin, since these accounts show peculiarities from the outset. Positive numbers indicate sold options, and numbers close to zero create breaches merely through rounding.

5.2.4 Model level back testing

In model-level back testing, theoretical portfolios are created and back tested against history to look for vulnerable combinations of instruments that the OMS II margin model might handle less well.

Nine portfolios with OMX positions are investigated. They consist of both futures and options and are all tested over the period 2013-11-05 to 2014-03-31.

The back testing is done over one- and two-day horizons. The margin coverage (MC) is calculated as the ratio of the margin to the back-tested loss in market value. A margin coverage of 100% means that the margin is just sufficient; a value above 100% means that the margin more than covers the back testing losses.


Nr | Portfolio | Loss 1 day | Loss 2 days | Margin | MC 1 day | MC 2 days
1 | Front month future vs back month future | -251 | -289 | -1 382 | 551% | 478%
2 | Front month future vs one-year-out future | -917 | -713 | -1 382 | 151% | 194%
3 | Front month future vs front month ATM option | -1 780 | -1 593 | -8 738 | 491% | 549%
4 | Front month future vs six-months-out ATM option | -1 651 | -1 799 | -9 706 | 588% | 540%
5 | Front month call-put spread | -2 517 | -3 703 | -9 256 | 368% | 250%
6 | Six-months-out call-put spread | -2 815 | -3 468 | -9 708 | 345% | 280%
7 | Front month ATM option vs six-months-out ATM option | -1 707 | -1 957 | -2 746 | 161% | 140%
8 | Front month ITM option vs front month OTM option | -1 884 | -2 964 | -3 358 | 178% | 113%
9 | Six-months-out ITM option vs six-months-out OTM option | -677 | -821 | -2 141 | 316% | 261%

Table 14: Back test of theoretical portfolios, 2013-11-05 – 2014-03-31

The table shows that none of the portfolios breached in the back test. Since NOMX Clearing allows spread positions with spread parameters, it is interesting to investigate whether these parameters provide a cushion for spread positions. From the table it can be concluded that spread positions are handled appropriately within OMS II.

NOMX Clearing currently produces this kind of report on a regular basis, which is a valuable addition to the overall margin framework.
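Checking the figures in Table 14 shows that each MC value equals the margin divided by the corresponding back-test loss (for example 1 382 / 251 ≈ 551%). The sketch below recomputes both MC columns from the transcribed loss and margin figures and flags a breach whenever MC falls below 100%; the names are illustrative.

```python
# Rows transcribed from Table 14: (portfolio nr, loss 1 day, loss 2 days, margin).
TABLE_14 = [
    (1, -251, -289, -1382),
    (2, -917, -713, -1382),
    (3, -1780, -1593, -8738),
    (4, -1651, -1799, -9706),
    (5, -2517, -3703, -9256),
    (6, -2815, -3468, -9708),
    (7, -1707, -1957, -2746),
    (8, -1884, -2964, -3358),
    (9, -677, -821, -2141),
]


def margin_coverage(margin: float, loss: float) -> float:
    """MC = margin / back-test loss; both are negative, so the ratio is positive.

    MC > 1 (i.e. above 100 %) means the margin more than covers the loss.
    """
    return margin / loss


for nr, loss_1d, loss_2d, margin in TABLE_14:
    mc_1d = margin_coverage(margin, loss_1d)
    mc_2d = margin_coverage(margin, loss_2d)
    print(f"portfolio {nr}: MC 1 day {mc_1d:.0%}, MC 2 days {mc_2d:.0%}, "
          f"breach: {min(mc_1d, mc_2d) < 1}")
```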

5.3 Stress testing

5.3.1 Set up

Stress testing examines how a model behaves when “large” changes are made to its basic assumptions. First, the model is investigated under stressed market values; in a way this is back testing against fictive market movements. Second, an investigation is made to determine whether the model works within the intended financial environment: is it used for the type of distribution of instruments and positions that it is meant to handle?

5.3.2 Stressed market conditions

Back testing the model against actual market movements determines whether the model works as desired on a day-to-day basis. By constructing a time series of very large “fictive” market movements, the behaviour of the model in a heavily stressed market can be observed. NOMX Clearing performs stress testing with two different sets of stress test scenarios:

A structured scenario approach called CCaR (Clearing Capital at Risk), in which the entire equity market moves up or down, with each equity moving by its maximum movement over some historical period. Changes in implied volatility are treated as separate scenarios.

Historical stress scenarios based on three actual historical events.


Date | ID | MV | IM | Margin
2013-11-11 | n | -584 429 | -214 965 002 | -215 549 430
2013-11-12 | n | -228 056 | -208 640 257 | -208 868 313
2013-11-11 | x | 242 200 153 | -207 450 598 | 34 749 554
2013-11-12 | x | 219 643 107 | -189 023 126 | 30 619 981
2013-11-11 | y | 107 330 | -196 656 186 | -196 548 856
2013-11-12 | y | 214 215 | -188 136 458 | -187 922 243
2013-11-11 | z | -67 451 244 | -560 325 093 | -627 776 337
2013-11-12 | z | -58 622 330 | -552 047 004 | -610 669 334
... | ... | ... | ... | ...
2014-11-25 | n | -17 296 283 | -541 955 528 | -559 251 810
2014-11-26 | n | -17 237 889 | -544 853 362 | -562 091 251
2014-11-25 | x | 406 914 833 | -675 931 836 | -269 017 003
2014-11-26 | x | 387 094 339 | -668 751 454 | -281 657 115
2014-11-25 | y | 0 | -476 945 695 | -476 945 695
2014-11-26 | y | 0 | -505 336 678 | -505 336 678
2014-11-25 | z | -117 152 608 | -647 208 026 | -764 360 634
2014-11-26 | z | -103 330 796 | -636 276 060 | -739 606 856

Table 15: Stress test table with market value, initial margin and margin, SEK

The three historical scenarios are defined as follows:

Date | Name | Historical extreme event | Affected markets | Market move summary
1987-10-29 | SMV 1987 | Stock market crash | Equity | Equities down
2008-12-09 | SMV 2008 | Post-Lehman unrest | Equity and F/I | Equities up, rates down
2012-06-07 | SMV 2012 | Euro crisis aftermath | F/I | Rates up

Table 16: Historical stress test scenarios

Running the stressed markets defined above for the four clients produces potential losses for some scenarios. If the margin in an account cannot cover the change in market value that the account experiences in a scenario, the portfolio has a potential loss. For each scenario, the four portfolios are ordered by loss. The first scenario is expected to produce the largest losses, since it is defined purely in terms of equity movements while the others have interest rate components. The results are as follows:

Date | ID | MV | IM | Margin | SMV 1987 | SMV 1987 loss
2014-06-09 | n | -8 319 062 | -822 973 145 | -831 292 207 | -1 438 291 645 | -606 999 437
2014-06-10 | n | -7 731 265 | -820 893 564 | -828 624 829 | -1 432 840 124 | -604 215 294
2014-11-20 | x | 307 573 384 | -655 815 833 | -348 242 449 | -759 424 419 | -411 181 970
2014-11-25 | x | 406 914 833 | -675 931 836 | -269 017 003 | -669 845 060 | -400 828 057
2014-08-12 | y | 0 | -167 427 000 | -167 427 000 | 271 379 130 | 438 806 130
2014-06-30 | y | 0 | -168 850 752 | -168 850 752 | 281 417 920 | 450 268 672
2014-10-24 | z | 78 149 936 | -481 765 559 | -403 615 623 | 602 878 042 | 1 006 493 665
2014-10-27 | z | 75 811 128 | -482 371 334 | -406 560 206 | 628 401 539 | 1 034 961 745

Table 17: Stress test losses for SMV 1987, SEK

Date | ID | MV | IM | Margin | SMV 2008 | SMV 2008 loss
2014-10-16 | n | -27 437 294 | -385 722 602 | -413 159 895 | 541 153 242 | 954 313 138
2014-10-15 | n | -31 466 323 | -405 425 123 | -436 891 446 | 569 447 305 | 1 006 338 751
2014-08-08 | x | 63 334 935 | -127 530 211 | -64 195 276 | 218 121 790 | 282 317 066
2014-08-07 | x | 61 821 375 | -137 039 016 | -75 217 641 | 225 609 451 | 300 827 092
2014-11-27 | y | 0 | -508 166 910 | -508 166 910 | -914 654 220 | -406 487 310
2014-11-26 | y | 0 | -505 336 678 | -505 336 678 | -909 550 560 | -404 213 882
2014-08-11 | z | -31 336 654 | -649 345 068 | -680 681 722 | -1 022 598 443 | -341 916 721
2014-08-08 | z | -9 430 646 | -640 230 304 | -649 660 950 | -987 508 869 | -337 847 919

Table 18: Stress test losses for SMV 2008, SEK

Date | ID | MV | IM | Margin | SMV 2012 | SMV 2012 loss
2014-10-16 | n | -27 437 294 | -385 722 602 | -413 159 895 | 130 096 467 | 543 256 362
2014-10-17 | n | -21 086 444 | -402 209 253 | -423 295 697 | 145 810 875 | 569 106 572
2014-08-08 | x | 63 334 935 | -127 530 211 | -64 195 276 | 102 431 575 | 166 626 851
2014-08-07 | x | 61 821 375 | -137 039 016 | -75 217 641 | 104 534 815 | 179 752 456
2014-08-12 | y | 0 | -167 427 000 | -167 427 000 | -101 606 400 | 65 820 600
2014-06-30 | y | 0 | -168 850 752 | -168 850 752 | -101 316 992 | 67 533 760
2014-10-24 | z | 78 149 936 | -481 765 559 | -403 615 623 | -113 272 226 | 290 343 397
2014-10-27 | z | 75 811 128 | -482 371 334 | -406 560 206 | -115 799 459 | 290 760 747

Table 19: Stress test losses for SMV 2012, SEK
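The loss columns in Tables 17 to 19 can be reproduced from the other columns: the margin is MV plus IM, and the loss column equals the scenario value minus the margin, so a negative figure means the margin held does not cover the stressed outcome. The sketch checks this reading against four rows of Table 17; it is an interpretation of the tables rather than the NOMX Clearing implementation, and differences of 1 SEK are rounding.

```python
# Rows transcribed from Table 17 (SEK): date, client ID, MV, IM, SMV 1987 value, reported loss.
TABLE_17_ROWS = [
    ("2014-06-09", "n", -8_319_062, -822_973_145, -1_438_291_645, -606_999_437),
    ("2014-11-20", "x", 307_573_384, -655_815_833, -759_424_419, -411_181_970),
    ("2014-08-12", "y", 0, -167_427_000, 271_379_130, 438_806_130),
    ("2014-10-24", "z", 78_149_936, -481_765_559, 602_878_042, 1_006_493_665),
]


def stress_outcome(mv: int, im: int, scenario_value: int) -> tuple[int, int]:
    """Return (margin, result) with margin = MV + IM and result = scenario value - margin.

    A negative result means the margin held does not cover the stressed outcome.
    """
    margin = mv + im
    return margin, scenario_value - margin


for date, client, mv, im, scenario, reported in TABLE_17_ROWS:
    margin, result = stress_outcome(mv, im, scenario)
    status = "shortfall" if result < 0 else "covered"
    print(f"{date} {client}: margin={margin:,} result={result:,} ({status}, reported {reported:,})")
```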

The results are as expected for the different scenarios. NOMX Clearing also uses internally constructed scenarios:

Name | Stressed scenario | Affected markets | Market move summary
SMV B1 Vol 0 | Nordic equity market moves up | Equity | Equities up
SMV B2 Vol 0 | Nordic equity market moves down | Equity | Equities down


Note that “Vol 0” indicates that implied volatility is unchanged in the scenario. There are several scenarios in which implied volatility is shifted as well, but for this investigation implied volatility is left unchanged. These scenarios are applied in exactly the same way as the historical scenarios investigated above.

Date | ID | MV (SEK) | IM (SEK) | Margin (SEK) | SMV B1 Vol 0 | SMV B1 loss
2013-11-13 | n | -5 544 966 | -201 050 012 | -206 594 977 | 217 172 764 | 423 767 742
2013-11-14 | n | -5 706 495 | -197 593 468 | -203 299 963 | 246 596 379 | 449 896 341
2014-08-08 | x | 63 334 935 | -127 530 211 | -64 195 276 | 200 177 742 | 264 373 017
2014-08-07 | x | 61 821 375 | -137 039 016 | -75 217 641 | 212 186 460 | 287 404 101
2014-11-27 | y | 0 | -508 166 910 | -508 166 910 | -914 654 220 | -406 487 310
2014-11-26 | y | 0 | -505 336 678 | -505 336 678 | -909 550 560 | -404 213 882
2014-03-14 | z | 44 077 561 | -540 311 907 | -496 234 346 | -979 486 720 | -483 252 374
2014-03-26 | z | -965 774 | -686 761 452 | -687 727 226 | -1 139 305 771 | -451 578 545

Table 21: Stress test losses for SMV B1 Vol 0, SEK

Date | ID | MV (SEK) | IM (SEK) | Margin (SEK) | SMV B2 Vol 0 | SMV B2 loss
2014-05-30 | n | -10 933 112 | -852 135 806 | -863 068 918 | -1 489 508 799 | -626 439 881
2014-06-02 | n | -11 449 472 | -840 590 158 | -852 039 631 | -1 469 843 215 | -617 803 585
2014-11-20 | x | 307 573 384 | -655 815 833 | -348 242 449 | -777 114 891 | -428 872 442
2014-11-24 | x | 379 005 124 | -651 654 992 | -272 649 867 | -683 746 881 | -411 097 014
2014-08-12 | y | 0 | -167 427 000 | -167 427 000 | 271 379 130 | 438 806 130
2014-06-30 | y | 0 | -168 850 752 | -168 850 752 | 281 417 920 | 450 268 672
2013-12-18 | z | -72 491 457 | -430 919 664 | -503 411 121 | 416 394 805 | 919 805 926
2013-12-13 | z | -45 560 749 | -450 662 206 | -496 222 955 | 427 902 591 | 924 125 546

Table 22: Stress test losses for SMV B2 Vol 0, SEK

Interestingly, the portfolios behave very similarly under SMV 1987 and the constructed scenario B2 Vol 0. This is at least an indication that in heavily stressed markets movements tend to be highly correlated, which is the assumption on which scenario B2 Vol 0 is constructed.

This investigation shows that, by adjusting the scanning ranges while keeping zero correlation between underlyings, OMS II can readily be adjusted to provide a cushion against very large market movements.
