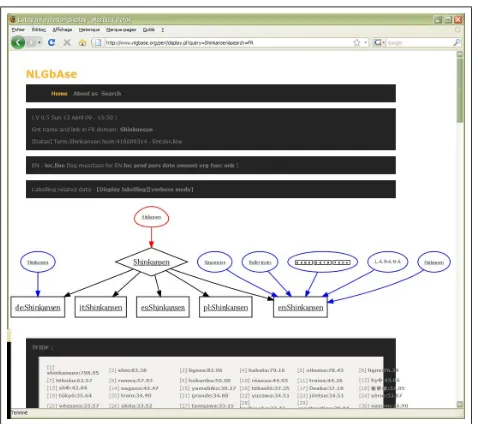

NLGbAse: A Free Linguistic Resource for Natural Language Processing Systems
