Abstract— Structured light image systems have been used effectively for precise measurement of 3-D surfaces in computer vision. Their applications are mostly restricted to scanning stationary objects, because tens of images must be captured to recover a single 3-D scene. This work presents an idea for real-time acquisition of 3-D surface data by a specially coded image system. To achieve 3-D measurement of a dynamic scene, the data acquisition must be accomplished with only a single image. A principle of uniquely color-encoded pattern projection is proposed to design a color matrix that improves the reconstruction efficiency. The matrix is produced by a special code sequence and a number of state transitions. A color projector is controlled by a computer to generate the desired color patterns in the scene. The unique indexing of the light codes is crucial for color projection, since each light grid must be uniquely identified by incorporating its local neighborhood. Hence, 3-D reconstruction can be performed with only local analysis of the single image. A scheme is presented to describe such an image processing method for fast 3-D data acquisition. Experimental results are provided to analyse the efficiency of the proposed methods.

Key Words— 2D, 3D, 2D-3D conversion, Color-coded structure, Gray scales Image, Slide show

I. INTRODUCTION

Computer vision is an important means of obtaining the 3-D model of an object. During the last 30 years, numerous methods for 3-D sensing have been explored. Structured light has progressed from single light-spot projection to complex coded patterns, and the 3-D scanning speed has consequently increased from several hours per image to dozens of images per second. The first feasible structured light systems originated in the early 1980s, when binary coding or Gray coding methods were employed.

The pattern resolution increases exponentially across the coarse-to-fine light projections and the stripe gap tends to 0, yet the stripe locations remain easily distinguishable because only a small set of primitives is used. Therefore, the position of a pixel can be encoded precisely. This method is still the most widely used in structured light systems because it is easy to implement.
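As an illustration of the binary and Gray coding idea described above, the following minimal Python sketch generates coarse-to-fine stripe patterns and decodes a column index from the bit sequence observed at one pixel. The function names and the 8-column example are assumptions made for illustration; they are not taken from any specific system.

```python
from typing import List


def gray_encode(n: int) -> int:
    """Convert a binary index to its reflected Gray code."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Convert a Gray code back to the binary index."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(width: int, bits: int) -> List[List[int]]:
    """Return `bits` patterns; patterns[k][x] is 0 (dark) or 1 (bright).

    Pattern 0 holds the most significant bit (coarsest stripes),
    the last pattern holds the least significant bit (finest stripes).
    """
    return [
        [(gray_encode(x) >> (bits - 1 - k)) & 1 for x in range(width)]
        for k in range(bits)
    ]


def decode_column(observed_bits: List[int]) -> int:
    """Recover the projector column from the bits seen at one pixel."""
    g = 0
    for b in observed_bits:
        g = (g << 1) | b
    return gray_decode(g)


if __name__ == "__main__":
    patterns = stripe_patterns(width=8, bits=3)
    for row in patterns:
        print(row)
    # Bits observed at the pixel lit by projector column 5.
    print(decode_column([p[5] for p in patterns]))
```

In this sketch the number of patterns grows with the logarithm of the number of columns, so several projections are always needed to index every column.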

The main disadvantage of these methods is that they cannot be applied to moving surfaces, since multiple patterns must be projected. A technique combining Gray code and phase shifting is often used for better resolution, with the disadvantage that a larger number of projected patterns (i.e., images) is required. When the aim is to project only one light pattern before capturing a scene image, color stripes are used as a substitute for multiple black/white projections.

II. 2D TO 3D

Structured light image systems have been used effectively for accurate measurement of 3-D surfaces in computer vision, though their applications are mainly limited to scanning stationary objects. This paper presents an idea for real-time acquisition of 3-D surface data by a specially coded image system. To achieve 3-D measurement of a dynamic scene, the data acquisition must be accomplished with only a single image. A principle of uniquely color-encoded pattern projection is proposed to design a color matrix that improves the reconstruction efficiency. The matrix is produced by a special code sequence and a number of state transitions.

A computer controls a color projector to generate the desired color patterns in the scene. The unique indexing of the light codes is crucial here, since each light grid must be uniquely identified by incorporating its local neighborhood so that 3-D reconstruction can be performed with only local analysis of a single image. A scheme is presented to describe such an image processing method for fast 3-D data acquisition. Experimental results are provided to analyse the efficiency of the proposed methods.

III. COLOR-CODED STRUCTURED LIGHT SYSTEM

The structured light system consists of a CCD camera and a digital projector, as shown in Fig. 1. It is similar to a traditional stereo vision system, but with the second camera replaced by a light source that projects a known pattern of light onto the scene while the remaining camera captures the illuminated scene. The required 3-D information can be obtained by analysing the deformation of the imaged pattern with respect to the projected one. Here, the correspondences between the projected pattern and the imaged one can be solved directly by codifying the projected pattern, so that each projected light point carries some information. When the point is imaged on the image plane, this information can be used to determine its coordinates on the projected pattern [1].

Conversion of a 2-D Image to 3-D Image and Processing the Image Based on Coded Structured Light

1T. Gnana Prakash, 2G. Ananth Rao

1Assistant Professor, CSE Dept., VNR VJIET, Hyderabad, India

Fig. 1: Sensor structure for color-coded image

A method is developed for designing grid patterns that meet the practical requirement of unique indexing, so that uncertain occlusions and discontinuities in the scene can be resolved. Let $P = \{1, 2, \ldots, p\}$ be a set of color primitives, where the numbers represent different colors (e.g., 1 = white, 2 = blue). These color primitives are assigned to an $m \times n$ matrix $M$ to form the encoded pattern that is projected onto the scene [1]. A word from $M$ is defined by the color value at location $(i, j)$ in $M$ and the color values of its 4-adjacent neighbors.

If $x_{ij}$ is the color assigned to the point at row $i$ and column $j$ in $M$, then the word defining this location, $w_{ij}$, is the sequence of $x_{ij}$ and its four neighbors, i.e., the substring

$$w_{ij} = x_{ij}\, x_{i,j-1}\, x_{i-1,j}\, x_{i,j+1}\, x_{i+1,j}.$$

If a lookup table is maintained for all of the word values in $M$, then each word defines a location in $M$. An $m \times n$ matrix $M$ has $(m-1) \times (n-1)$ words, which make up a set $W$. The color primitives of $P$ are to be assigned to the matrix $M$ so that no two words in the matrix are identical.

Furthermore, every element has a color different from its adjacent neighbors in the word.
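The two constraints above (no two identical words, and no two adjacent cells sharing a color) can be checked while building the lookup table. The following Python sketch is a minimal illustration under stated assumptions: the neighbor order inside a word (center, left, up, right, down), the restriction to interior cells that have all four neighbors, and the names `word_at` and `build_lookup` are choices made for this example. It validates a given matrix; it does not reproduce the code-sequence and state-transition generator of the paper.

```python
from typing import Dict, List, Tuple

Word = Tuple[int, int, int, int, int]
Matrix = List[List[int]]


def word_at(M: Matrix, i: int, j: int) -> Word:
    """Word of an interior cell: center, left, up, right, down (assumed order)."""
    return (M[i][j], M[i][j - 1], M[i - 1][j], M[i][j + 1], M[i + 1][j])


def build_lookup(M: Matrix) -> Dict[Word, Tuple[int, int]]:
    """Map every word to its (row, column); raise ValueError if M is not a valid pattern."""
    rows, cols = len(M), len(M[0])

    # Constraint 1: horizontally or vertically adjacent cells must differ in color.
    for i in range(rows):
        for j in range(cols):
            if j + 1 < cols and M[i][j] == M[i][j + 1]:
                raise ValueError(f"equal colors at ({i},{j}) and ({i},{j + 1})")
            if i + 1 < rows and M[i][j] == M[i + 1][j]:
                raise ValueError(f"equal colors at ({i},{j}) and ({i + 1},{j})")

    # Constraint 2: every word must occur only once in the matrix.
    lookup: Dict[Word, Tuple[int, int]] = {}
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            w = word_at(M, i, j)
            if w in lookup:
                raise ValueError(f"word {w} occurs at {lookup[w]} and ({i},{j})")
            lookup[w] = (i, j)
    return lookup


if __name__ == "__main__":
    pattern = [
        [1, 2, 3, 1],
        [2, 3, 1, 2],
        [3, 1, 2, 3],
    ]
    table = build_lookup(pattern)
    # Colors observed at an imaged point and its four neighbors give the pattern location.
    print(table[(3, 2, 2, 1, 1)])
```

Once the table exists, identifying an imaged grid point reduces to a single dictionary lookup, which is what keeps the analysis local.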

In this way, each defined location is uniquely indexed, and correspondence is therefore no longer a problem. That is, if the pattern is projected onto a scene and the word value for an imaged point (u,v) is determined (by determining the colors of that imaged point and its 4-adjacent neighbors), then the corresponding position in M is uniquely defined.

According to the perspective transformation principle, the image coordinates and the assigned code word of a spatial point correspond to its world coordinates. A mapping must therefore be established between an image point in the image coordinate system and the corresponding spatial point in the world coordinate system. Given the image coordinates, the matched code word, and the system calibration parameters, the 3-D information of the surface points can be computed.
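One common way to write this mapping is the standard perspective projection model, applied once to the camera and once to the projector. In the sketch below, $(x_c, y_c)$ and $(x_p, y_p)$ are the coordinates of the same grid point in the camera image and in the projected pattern, $(X, Y, Z)$ is the world point, and $P_c$, $P_p$ are $3 \times 4$ projection matrices assumed to come from system calibration; $s_c$ and $s_p$ are unknown scale factors. These symbols are assumptions for illustration and may differ from the formulation in [1].

```latex
s_c \begin{bmatrix} x_c \\ y_c \\ 1 \end{bmatrix}
  = P_c \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
s_p \begin{bmatrix} x_p \\ y_p \\ 1 \end{bmatrix}
  = P_p \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
```

Eliminating $s_c$ and $s_p$ leaves up to four linear equations in the three unknowns $X$, $Y$, $Z$, which can be solved in the least-squares sense once the code word has fixed $(x_p, y_p)$.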

This guarantees that the measurement system has a limited computational cost, since it only needs to analyse a small part of the scene and identify the coordinates by local image processing. The acquisition efficiency is therefore greatly improved.

IV. SEED WORD & FLOOD SEARCHING

To compute the 3-D mesh, we can either work from the original image data with formula (20) or simply interpolate the 3-D coordinates of known grid points [1]. The CCD camera (PULNIX TMC-9700) has a 1-inch sensor and a 16-mm lens. A 32-bit PCI frame grabber for machine vision by Coerce Imaging Co., Ltd., PC2-Image, is used to capture live images at 640 × 480 resolution.

The main computer is a common PC with a 2.1-GHz CPU and 512 MB of RAM, used for image processing and 3-D computation. In the experiments, a 44 × 212 encoded pattern generated from a seven-color set is used to illuminate the scene. The grid size is 25 × 25 pixels. Fig. 2 illustrates an image captured by the camera, in which there are about 30 × 37 = 1110 grid points. A seed word is identified randomly in the image.

Fig. 2: Image captured under the uniquely encoded light pattern.

Grid points are then detected by a flood-search algorithm starting from the seed word. The search is repeated with new seeds until no remaining large area can yield more points, and the resulting partial nets are merged into the whole net. In this experiment, three seeds were generated automatically, one after another, to obtain the final mesh because of surface discontinuities. The amended mesh after detecting isolated holes and abnormal leaves is also illustrated in Fig. 2. Finally, the 3-D mesh was reconstructed after performing the 3-D computation; a typical example is illustrated in Fig. 3.

Fig. 3: After 3-D reconstruction.
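The seed-and-flood idea can be sketched as a breadth-first search over grid cells. The Python example below is a simplified illustration, not the image-space procedure of [1]: it assumes the detectable grid points are already given as a set of (row, column) cells, it picks the smallest remaining cell as the next seed instead of a random one, and the names `flood_search`, `grow_all` and `min_size` are hypothetical.

```python
from collections import deque
from typing import List, Set, Tuple

Cell = Tuple[int, int]


def flood_search(detected: Set[Cell], seed: Cell) -> Set[Cell]:
    """Return all detected cells that are 4-connected to the seed."""
    if seed not in detected:
        return set()
    region = {seed}
    queue = deque([seed])
    while queue:
        i, j = queue.popleft()
        for nb in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
            if nb in detected and nb not in region:
                region.add(nb)
                queue.append(nb)
    return region


def grow_all(detected: Set[Cell], min_size: int = 1) -> List[Set[Cell]]:
    """Repeat the flood search with new seeds; keep regions of at least min_size cells."""
    remaining = set(detected)
    regions: List[Set[Cell]] = []
    while remaining:
        seed = min(remaining)  # deterministic stand-in for a randomly chosen seed
        region = flood_search(remaining, seed)
        remaining -= region
        if len(region) >= min_size:
            regions.append(region)
    return regions


if __name__ == "__main__":
    # Two patches separated by a surface discontinuity.
    cells = {(0, 0), (0, 1), (1, 1), (3, 3), (3, 4)}
    for patch in grow_all(cells):
        print(sorted(patch))
```

Here each disconnected surface patch needs its own seed, which mirrors why several seeds are required when the surface is discontinuous.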

V. MESH AMENDMENT AND INTERPOLATION

The mesh amendment and grid interpolation procedures are developed in this paper for optimization of 3-D results. The projection of the coded pattern should result in a regular mesh. However, due to the complexity of the scene and uncertainty in image processing, the constructed grid matrix could have some faults (namely holes and leaves).

Fig. 4: Cases of mesh amendment for holes (insertion).

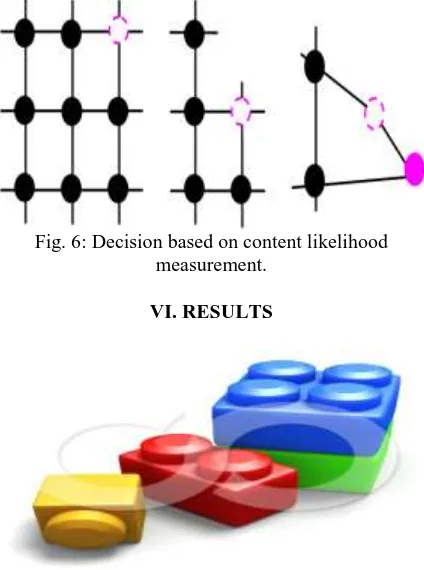

To correct these faults, this research develops a Mesh Amendment Procedure [1] to find and amend them. For some cases, it can decide directly whether “insertion” or “deletion” is necessary to amend the net (as illustrated in Figs. 4 and 5). Under a few other conditions, such an operation has to be determined according to its actual image content and with a likelihood measurement (Fig. 6).
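The directly decidable cases can be illustrated with a small Python sketch. The rules used here are assumptions chosen for illustration, since the exact cases of Figs. 4 and 5 are not reproduced in the text: a hole is taken to be a missing cell whose four neighbors are all present (insertion), and a leaf is taken to be a cell with at most one present neighbor (deletion). Cases that require the likelihood measurement on image content are not covered, and the name `amend_mesh` is hypothetical.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]


def neighbours(cell: Cell) -> List[Cell]:
    """4-adjacent neighbors of a grid cell."""
    i, j = cell
    return [(i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)]


def amend_mesh(present: Set[Cell], rows: int, cols: int):
    """Return (amended, inserted, deleted) for a rows x cols grid.

    Assumed rules: insert a missing cell when all four neighbors exist;
    afterwards delete any cell left with at most one neighbor.
    """
    inserted = {
        (i, j)
        for i in range(rows)
        for j in range(cols)
        if (i, j) not in present
        and all(nb in present for nb in neighbours((i, j)))
    }
    filled = present | inserted
    deleted = {
        c for c in filled if sum(nb in filled for nb in neighbours(c)) <= 1
    }
    return filled - deleted, inserted, deleted


if __name__ == "__main__":
    # A 3 x 3 block with its center missing and one dangling point at (1, 3).
    mesh = {(i, j) for i in range(3) for j in range(3)} - {(1, 1)}
    mesh.add((1, 3))
    amended, ins, dele = amend_mesh(mesh, rows=3, cols=5)
    print(sorted(ins), sorted(dele))
```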

Fig. 5: Cases of mesh amendment for leaves (deletion).

After all possible code words have been identified from the image, it is easy to compute the 3-D world coordinates of these points, since the coordinates on both the image (xc, yc) and the projector (xp, yp) are known. This yields a rough 3-D map of the scene. To improve the resolution, an interpolation algorithm may be applied to this map. Depending on the application requirements, the interpolation may be performed only on the segment between two adjacent grid points or inside the square area formed by four regular grid points.
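Both interpolation options can be sketched in a few lines of Python. The example assumes simple linear interpolation along a segment and bilinear interpolation inside a square of four grid points; the text does not state which interpolation scheme is actually used, and the names `lerp3` and `bilinear3` are hypothetical.

```python
from typing import Tuple

Point3 = Tuple[float, float, float]


def lerp3(p: Point3, q: Point3, t: float) -> Point3:
    """Point at fraction t (0..1) along the segment from p to q."""
    return tuple(a + t * (b - a) for a, b in zip(p, q))


def bilinear3(p00: Point3, p01: Point3, p10: Point3, p11: Point3,
              s: float, t: float) -> Point3:
    """Point inside the square of four grid points.

    p00 and p01 are the upper-left and upper-right corners, p10 and p11 the
    lower-left and lower-right ones; s runs left to right, t top to bottom.
    """
    top = lerp3(p00, p01, s)
    bottom = lerp3(p10, p11, s)
    return lerp3(top, bottom, t)


if __name__ == "__main__":
    # Midpoint of a segment between two reconstructed grid points.
    print(lerp3((0.0, 0.0, 0.0), (2.0, 4.0, 6.0), 0.5))
    # Center of a square whose fourth corner is raised in Z.
    print(bilinear3((0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 2), 0.5, 0.5))
```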

Fig. 6: Decision based on content likelihood measurement.

VI. RESULTS

Fig. 8: A colored image transformed to gray scale image.

Fig. 9: Converting to the colored image.

System testing consists of the following steps: program testing, string testing, system testing, and user acceptance testing.

VII. TEST CASES

Test Case 1: MODULE: Load Image FILENAME: LOAD.CS

Table 1: Test case for loading image.

| Test | Input | Obtained Output | Actual Output | Description |
|---|---|---|---|---|
| Valid Image | File name | Success | Success | Test Passed! Image displayed. |
| Invalid Image | File name | Failed | Failed | Test Passed! No preview available. Try Again. |

Test result: The module “Load image” is tested and the module is successfully implemented.

Test Case 2: MODULE: Brightness & Contrast FILENAME: brightness.cs

Table 2: Test case for Brightness and contrast module.

| Test Case | Input | Obtained Output | Actual Output | Description |
|---|---|---|---|---|
| Brightness & Contrast | Source Image, value | Success | Success | Test Passed. Image displayed with the new set value. |
| Brightness & Contrast | Source Image, value | Failed | Failed | Test Passed. Invalid image format. Try Again. |

Test result: The module “Brightness and Contrast” is tested and the module is successfully implemented.

Test Case 3: MODULE: 2D to 3D FILENAME: From2.cs

Table 3: Test case for 2D to 3D module.

| Test Case | Input | Obtained Output | Actual Output | Description |
|---|---|---|---|---|
| Convert Image | Source, Scale | Success | Success | Test Passed. Image scaled to 3D. |
| Convert Image | Source, Scale | Failed | Failed | Test Passed. Image distorted; not suitable for conversion. Try Again. |

Test result: The module “2D to 3D” is tested and the module is successfully implemented.

VIII. DISCUSSIONS AND CONCLUSIONS

REFERENCES

[1] S. Y. Chen, Y. F. Li, and J. Zhang, “Vision processing for realtime 3-D data acquisition based on coded structured light,” IEEE Trans. Image Process., vol. 17, no. 2, pp. 167–176, Feb. 2008.

[2] M. Ribo and M. Brandner, “State of the art on vision-based structured light systems for 3D measurements,” in Proc. IEEE Int. Workshop on Robotic Sensors: Robotic and Sensor Environments, Ottawa, ON, Canada, Sep. 2005, p. 2.

[3] J. Salvi, J. Pagès, and J. Batlle, “Pattern codification strategies in structured light systems,” Pattern Recognit., vol. 37, no. 4, pp. 827–849, Apr. 2004.

[4] D. Desjardins and P. Payeur, “Dense stereo range sensing with marching pseudo-random patterns,” in Proc. 4th Canad. Conf. Computer and Robot Vision, May 2007, pp. 216–226.

[5] F. Blais, “Review of 20 years of range sensor development,” J. Electron. Imag., vol. 13, no. 1, pp. 231–240, 2004.

[6] S. Osawa, “3-D shape measurement by self-referenced pattern projection method,” Measurement, vol. 26, pp. 157–166, 1999.

[7] C. S. Chen, Y. P. Hung, C. C. Chiang, and J. L. Wu, “Range data acquisition using color structured lighting and stereo vision,” Image Vis. Comput., vol. 15, pp. 445–456, 1997.

[8] L. Zhang, B. Curless, and S. M. Seitz, “Rapid shape acquisition using color structured light and multi-pass dynamic programming,” in Proc. IEEE 3D Data Processing Visualization and Transmission, Padova, Italy, Jun. 2002, pp. 24–36.

[9] Y. F. Li and S. Y. Chen, “Automatic recalibration of an active structured light vision system,” IEEE Trans. Robot. Autom., vol. 19, no. 2, pp. 259–268, Apr. 2003.

[10] T. Lu and J. Zhang, “Three dimensional imaging system,” U.S. Patent 6 252 623, Jun. 26, 2001.

[11] S. Inokuchi, K. Sato, and F. Matsuda, “Range-imaging system for 3-D object recognition,” in Proc. 7th Int. Conf. Pattern Recognition, Montreal, QC, Canada, 1984, pp. 806–808.

BIOGRAPHY OF THE AUTHORS

1Gnana Prakash Thuraka obtained his B.Tech and M.Tech from JNT University, Hyderabad, in 2006 and 2010, respectively. He has more than 8 years of teaching experience and is presently working as an Assistant Professor in the CSE department, VNR VJIET Engineering College, Hyderabad. His research interests include Image Processing, Computer Vision, Data Mining, Big Data, and Service Oriented Architecture.

2