Enterprise Search Solutions Based on Target Corpus Analysis and

External Knowledge Repositories

Thesis submitted in partial fulfillment of the requirements for the degree of

M.S. by Research in

Computer Science and Engineering

by

NIHAR SHARMA 200707018

nihar@research.iiit.ac.in

Search and Information Extraction Lab International Institute of Information Technology

Hyderabad - 500 032, INDIA March 2011

Copyright © Nihar Sharma, 2011. All Rights Reserved

International Institute of Information Technology

Hyderabad, India

CERTIFICATE

It is certified that the work contained in this thesis, titled “Enterprise Search Solutions Based on Target Corpus Analysis and External Knowledge Repositories” by Nihar Sharma, has been carried out under my supervision and is not submitted elsewhere for a degree.

Acknowledgments

I most sincerely express my gratitude to Dr. Vasudeva Varma for his guidance and vision, which have led to the realization of this thesis. He has not only been the mentor for this work but also a role model for me in the research domain. I also thank Dr. Prasad Pingali for his ever helpful insights and technical expertise during the early phase of this thesis. I thank my friends Neha, Guru, Rahul & Rahul, Abhijit and Jaideep for their moral support and companionship during this part of my life.

Abstract

A large portion of the data that resides in enterprises exists in the form of unstructured textual data. Unstructured data implies a collection of natural language text in the form of word documents, HTML pages, plain text files, among others. Structured data, on the other hand, refers to relational databases, XML documents, etc., where there is a well articulated scheme to represent and store the data. Unstructured data is easy for humans to produce and comprehend, whereas it is not trivial for machines and electronic applications to extract information from it. As unstructured data lacks structure and patterns, a full text search has to be carried out in order to target its specific portions for retrieving particular information.

In between the Internet and personal machines, we have the intranets and data archives of various enterprise establishments, which have their own set of guidelines for search. A typical enterprise search system would benefit from the thoroughness of desktop search but would not want to suffer from the inherent slowness of the typical on-the-fly, linear scans used in desktop search. An Internet search approach, on the other hand, may be too generic to produce precise results, even though it would be advantageous for speedy indexing and retrieval. The difference and the uniqueness in the composition of enterprise data make Enterprise Search an entirely different beast. This thesis concentrates on enhancing the relevance of results in query based document retrieval in Enterprise Search (ES). Approaches to implement effective ES are different from standard document retrieval and web search. A number of such past and on-going works in the ES research area are discussed in this thesis. Our approaches to ES work with two important open research areas in ES, namely, user context aware ES and resolving the issues of vocabulary bias and narrowness in ES. In user context aware ES, we concentrate on a user context aware computerized work environment, to facilitate better understanding of the user’s information requirements in ES systems. Issues of vocabulary bias and narrowness arise when there is a difference between the vocabulary used for queries and that used in the content (though the meaning could be the same). In any form of document retrieval, the result-set is strongly query dependent. As a query represents the information need of a user, a misrepresenting or ambiguously formed query dilutes the result quality. We try to reduce the difference between the vocabulary used in constructing the query and that used in enterprise content to resolve this issue.

Our aim is to enhance the relevance of results through traditional information retrieval approaches like query expansion and re-ranking. User and work context aware ES is implemented through a user role based query expansion using local & global analysis techniques and re-ranking of the result-set by a role based document classification. External lexicon and thesaurus repositories constructed from open source on-line encyclopedias such as Wikipedia are used as complementary knowledge bases for implementing the query expansion to counter the vocabulary bias issues in the enterprise. This is accomplished by extracting a Wikipedia concept thesaurus from the article link structure of Wikipedia and using it for query expansion. The results are then re-ranked using large manually tagged sets rich in categories from all domains of Wikipedia. These tagged sets are used to train a classifier which classifies search results into various enterprise document classes. Documents belonging to the dominant classes in the result-sets are given higher preference in re-ranking. We also introduce a broader vocabulary range into the result-set with collection enrichment techniques using Wikipedia article text for pseudo relevance feedback. Both supervised and unsupervised classification techniques are used to determine good feedback documents from the pseudo-relevant set.

We evaluate our approaches on two datasets, namely the IIIT-H corpus and the CERC dataset. Role based personalization in ES required a dataset with role based topics and relevance judgments. As no such dataset existed, we customized the IIIT-H intranet data for the same. The rest of the techniques were evaluated on the standard ES evaluation platform, i.e. the CERC dataset provided by the TREC Enterprise track. Role based personalization shows improvements for both local and global analysis based query expansion techniques as well as for the re-ranking, compared to plain text retrieval. Wikipedia concept thesaurus based query expansion reveals gains in recall figures, without diluting the precision in search results. The Wikipedia category network based re-ranking method shows moderate improvements in the precision figures for the results. Improvements are also observed in Wikipedia article text based pseudo relevance feedback in comparison to blind relevance feedback without enriching the pseudo relevant set. Between the unsupervised and supervised classification based approaches, the latter shows better results, with up to 11% improvement in mean average precision (MAP) figures.

Contents

1 Introduction

1.1 Web versus Enterprise Search

1.2 Research Problems in Enterprise Search

1.2.1 Vocabulary bias and narrowness

1.2.2 Heterogeneous collection

1.2.3 User and work context

1.2.4 Test collections

1.3 Problem Definition

1.3.1 User role and information need

1.3.2 Query expansion with Wikipedia concept thesaurus

1.3.3 Re-ranking with Wikipedia category network

1.3.4 Pseudo relevance feedback with Wikipedia based collection enrichment

1.3.5 Common theme of the thesis

1.4 Organization of Thesis

2 Background

2.1 Enterprise Search

2.2 Related Work: User Roles and Search

2.3 Related Work: Query Expansion in Enterprise Search

2.3.1 Thesaurus based techniques

2.3.2 Pseudo relevance feedback

2.4 Related Work: Re-ranking

2.5 Related Work: Wikipedia and Enterprise search

2.5.1 Wikipedia link structure and concept thesaurus

2.5.2 Wikipedia categories and re-ranking

2.5.3 Wikipedia as lexicon resource

2.6 Summary

3 Role Based Personalization of Enterprise Search

3.1 Enterprise Roles and Search

3.2 Role Based Tagging and Indexing

3.2.1 Role tagged dataset

3.2.2 Role classifier

3.2.3 Role based co-occurrence thesaurus

3.3 Query Formulation

3.3.1 Boolean logic

3.3.2 Parametric search

3.4 Role Based Query Expansion

3.4.1 Global analysis techniques

3.4.2 Local analysis techniques

3.5 Role Based Re-ranking

3.6 Experiments and Evaluations

3.6.1 Data set: IIIT intranet

3.6.2 Roles

3.6.3 Search Engine and Query Formulation

3.6.4 Query Expansion

3.6.5 Indexing

3.6.6 Retrieval and Re-ranking

3.6.7 Results

3.6.8 Comments

3.7 Summary

4 Query Expansion and Re-ranking with domain independent knowledge

4.1 ES and Wikipedia

4.1.1 Wikipedia knowledge-base and structure

4.1.2 Wikipedia corpus

4.2 Wikipedia Concept Vocabulary

4.2.1 Concepts

4.2.2 Article names

4.2.3 Redirects

4.2.4 Anchor-text

4.2.5 Vocabulary set

4.3 Wikipedia Concept Thesaurus

4.3.1 Two way link analysis

4.3.2 Link co-category analysis

4.3.3 Relation confidence score

4.4 Concept Representation of Search Query

4.5 Query Expansion

4.6 Enterprise Document Classes

4.6.1 Mapping document classes to Wikipedia categories

4.6.2 Training corpus from Wikipedia articles

4.6.3 Re-ranking search results

4.7 Experiments and Evaluation

4.7.1 CSIRO dataset

4.7.2 Query Expansion with Concept Thesaurus

4.7.2.1 Baseline Setup

4.7.2.2 Concept Thesaurus setup

4.7.2.3 Results

4.7.2.4 Comments

4.7.3.1 Classifier training with Wikipedia Categories

4.7.3.2 Enterprise dataset and Classification

4.7.3.3 Results

4.7.3.4 Comments

4.8 Summary

5 Wikipedia as a Vocabulary Resource for Pseudo Relevance Feedback

5.1 Language model for information retrieval

5.2 Selecting good feedback documents from PR Set

5.2.1 Pseudo relevant set

5.2.2 Unsupervised approaches

5.2.2.1 Clustering documents

5.2.2.2 Probabilistic topic models

5.2.3 Supervised approaches

5.2.3.1 Features for enterprise corpus documents

5.2.3.2 Features for Wikipedia articles

5.2.3.3 Training

5.3 Experiments and Evaluation

5.4 Summary

6 Conclusions

6.1 Unified view of presented approaches

6.2 Role based personalization

6.2.1 Future of role based personalization

6.3 Wikipedia based approaches

6.3.1 Concept thesaurus for query expansion

6.3.1.1 Future of query expansion

6.3.2 Re-ranking with category network

6.3.2.1 Future of re-ranking

6.3.3 Pseudo relevance feedback

6.3.3.1 Future of PRF

6.4 Summary

List of Figures

Figure Page

3.1 Directory structure of training corpus . . . 18

3.2 The working of Indexing Functionality. . . 22

3.3 Block Diagram showing the flow of Query Formulation functionality. . . 23

3.4 Design of QE module . . . 25

3.5 An example execution of the global analysis phase on a sample query. . . 26

4.1 A typical Wikipedia article page . . . 36

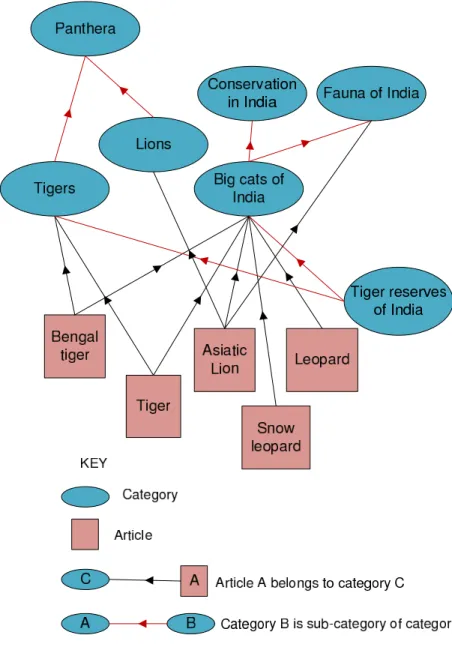

4.2 A small real category network example from wikipedia. . . 37

4.3 Few examples of redirects . . . 40

4.4 Wikipedia Link Structure and Creation of Vocabulary set and Thesaurus . . . 42

4.5 Concept Representation and QE Module . . . 46

4.6 Wikipedia Category section on the article page . . . 50

List of Tables

3.1 The evaluation results of Role Based Enterprise Search

4.1 Sample query expansion

4.2 The evaluation results for Wikipedia CT based Query Expansion module

4.3 Classification of 20 News Group data

4.4 Precision figures for Re-ranking Experiment

Chapter 1

Introduction

A huge surge in information retrieval applications has been observed in the last couple of decades. Starting out as a helping tool in library sciences, information retrieval has grown into one of the most essential electronic applications. This is credited largely to the growth of the Internet. Enterprises too started moving to the digital domain, and storage and retrieval of large amounts of digital data became the primary need for the early enterprise IT resources. Advances in database management models and storage devices have paved a path for organizing the enormous data. Modern enterprises are well networked, but they are not soundly informed about their own data. Typical text search systems were employed to retrieve information, but they became outdated with time. More popular search engines from the web domain have also been tried for enterprise information retrieval but were not as effective as they were expected to be. As we will see, there are many fundamental differences between enterprise and web content that require the enterprise search problem to be tackled independently of generic or web search.

A formal explanation of Enterprise Search (ES) has been presented by Hawking in [17], which can be summarized as follows: ES includes search of the organization’s external and internal websites as well as other electronic media such as databases, emails etc. ES is a critical performance factor for an organization in today’s information age. The volume of electronic information has reached proportions which are unmanageable by the classic retrieval models. Better algorithms have been designed to retrieve both structured (relational databases) and unstructured information (natural text) for businesses. However, retrieval of unstructured information in an enterprise environment is a non-trivial task, as relevance is the prime objective, unlike its counterpart, the web search, which focuses on speed [17].

The paradigm has shifted towards semantic search for both web and ES [12]. Search strategies have come a long way since the classical retrieval models. Techniques like query expansion, re-ranking, semantic indexing and so forth have empowered search from being a simple text retrieval tool to a powerful multimedia information extraction discipline. ES, being a significantly different environment from web search, draws heavily from semantic search [34]. However, there is still immense scope for improvements in ES, and we try to achieve some of that with the work presented in this thesis. Before we define our problem statement, let us discuss the enterprise environment and its search requirements.

1.1 Web versus Enterprise Search

Enterprises are business driven entities and have a strong sense of purpose. Naturally, productivity is the fundamental reason for incorporating the applications that operate within an enterprise. Search requirements for an enterprise are no different and are meant to drive productivity. Unlike web search, ES is expected to have a strong inclination towards relevance of results [34][55]. By relevance, we mean those answers which satisfy the user for a given search query. Enterprise search has a relatively narrow definition of relevance. Web search users do not have a strong expectation about search results, whereas ES users expect the few most relevant documents in the results [34][17]. As the popularity of pages governs the ranking in web search, users also mold their expectations to see popular pages in the results. In ES, however, the requirement is most often the correct answer or answers.

Authority over content creation is another factor that makes enterprise data different from the web. While the Internet contains the collective creations of many authors and its composition is very democratic, enterprise content is manufactured to dispense information rather than to advertise and attract the attention of users. User guidelines for creating documents are narrow and strict, and there is strict access control over the collection [34]. It is often observed that only a small fraction of users is involved in writing as compared to the number of users accessing the content. The same effect is seen in the vocabulary of documents, which is very author biased, mostly formal and domain specific.

Web pages also have a strongly linked structure through the hyper-links among them. Most of the textual data on the web is presented in the form of HTML pages, which are rendered by web browsers into a human readable form. On the other hand, textual data in enterprises is very heterogeneous in nature. Apart from the structured data (relational databases, XML files), unstructured or semi-structured data carrying text in natural language can be present in a variety of repositories like file systems, HTTP web servers, Lotus Notes, Microsoft Exchange etc. Moreover, hyper-links among enterprise documents are very scarce, even in the HTML content [6]. This poses a strong challenge in using popularity based ranking algorithms like PageRank [5][35] and HITS in ES.

1.2 Research Problems in Enterprise Search

The information retrieval community faces many challenges in ES. Apart from the problems tackled in the traditional information retrieval field (for example crawling, query sense disambiguation, query expansion, pseudo relevance feedback, indexing, ranking etc.), we list some of the main research areas specific to ES [17] which require considerable attention from the research fraternity.

1.2.1 Vocabulary bias and narrowness

In a typical document search, users express a set of words which they think best represents their requirement for the kind of information they seek in the documents, and they expect to find the same in the search results. Here, we would like to point out the difference between a query and an information need. The information need is the abstract notion about the information contained in the content of documents that a user has in mind when commencing a document search. The query, on the other hand, is a concrete representation of the information need through words. A user tries to articulate an information requirement by putting up, as the query, the best representational words he or she can think of.

As the search requirements within an enterprise are mostly business driven, the results satisfying a particular information need for a given context can be assumed to remain a more or less constant set for different users. Individual users, however, affect the result-set retrieved for a particular information need through the queries they form. This brings out a very interesting point about ES, which concerns user queries. The result-set is strongly query dependent and the query represents the information need. The question is how well the query substitutes for the information requirement. For example, consider a user who is looking for documents containing information about automobiles and gives the query ‘cars’. A simple query term matching based search will not produce results that contain words like ‘truck’, ‘bus’, ‘SUV’ or ‘automobile’ but not ‘car’. So, the query does not completely represent the requirement in this example.

The above example brings out another observation about the document content. Earlier we pointed out the nature of enterprise content, stating the factors that govern the composition of data. Vocabulary bias is another factor that affects the results for given search requirements. In the above example, even if the user’s query is ‘automobile’, the result-set might not carry documents that are from that domain but lack the term ‘automobile’. The power of a language to express information with so many different words makes it hard to relate an information need to the expected results. This means that even if a user produces a comprehensive query, it is the vocabulary of the indexed documents that determines the result-set.
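The mismatch described above can be illustrated with a minimal sketch of plain query term matching. The toy collection, the document ids and the function name `term_match` are hypothetical and serve only as an illustration; the singular form ‘car’ is used because the sketch does no stemming.

```python
def term_match(query_terms, documents):
    """Return ids of documents that contain at least one query term.

    This is plain term matching: no stemming, synonyms or expansion.
    """
    wanted = {t.lower() for t in query_terms}
    hits = []
    for doc_id, text in documents.items():
        tokens = set(text.lower().split())
        if tokens & wanted:
            hits.append(doc_id)
    return hits


# Hypothetical toy collection about automobiles.
documents = {
    "d1": "the car was serviced last week",
    "d2": "truck and bus fleet maintenance schedule",
    "d3": "new suv models announced by the automobile division",
}

# The bare query only reaches the document that uses the same word.
narrow = term_match(["car"], documents)

# A query that covers the vocabulary of the collection reaches all three.
broad = term_match(["car", "truck", "bus", "suv", "automobile"], documents)

print(narrow, broad)
```

All three documents satisfy the same information need, yet only the one sharing the query vocabulary is retrieved by the bare query.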

1.2.2 Heterogeneous collection

Enterprises tend to have heterogeneous collections. Considerable effort is required for the seamless working of ES indexing and crawling systems across different document types like web/HTML content, email threads, relational database entries, presentations, word and PDF type documents, OCR based documents and spreadsheets. Different document types have huge differences in their structure. Take document length, for example: a database table can have records of equivalent lengths, whereas web content may vary a lot. Then there is the problem of fetching the documents. The protocols vary with the storage systems, server types etc. For example, fetching public facing directories in a file system may require FTP, web content may require HTTP and the databases may need proper socket and user account details. Many different types of parsers are also required to resolve the issue of heterogeneous collections. Plain text documents may be indexed trivially, but almost all other formats of text based documents/repositories are stored as binary files in their respective formats. No single parser may be sufficient to extract text from the entire enterprise collection. Even if enterprises are aware of such nature and distribution of data, they tend to stick with the existing composition for economic reasons, as changing the software profiles and training people may incur significant additional operating costs for the enterprise. This presents a hard problem of dealing with the nature of documents across enterprises.

1.2.3 User and work context

The enterprise environment is more regulated than the web. Enterprises offer web based portals to employees for working and transacting with the enterprise data. These portals require a login and implement access based security features on the basis of user role, project development, geography etc. The point here is that the work session in an enterprise is aware of user details, which could be used to help ES systems better understand the information requirements of the user. While security features have matured, there is still a strong requirement for incorporating the user context into the search. Consider, as an example, a university that has two roles, meteorologist and software developer. A strictly work related query like ‘cloud’ is ambiguous, as it could imply clouds in the sky or cloud computing. Query sense disambiguation is one aspect where user context can be applied. Similarly, user context could be used for context based indexing and ranking of results.

1.2.4 Test collections

There is a strong requirement for a comprehensive test collection and related evaluation data. Significant efforts have been channeled through the TREC enterprise track, with its email collection and the CSIRO dataset (public facing web-pages of the Commonwealth Scientific and Industrial Research Organization (CSIRO) website), but those do not comprehensively represent an enterprise composition. Datasets that exist today only focus on document relevance for query sets, while areas like heterogeneous collections (crawling and parsing related research), user context knowledge, enterprise query logs etc. are absent from the open source collections. There is a good reason for this. Most private institutions have intellectual property, trade secrets, confidential policies, operations flow information and many other valuable assets within their enterprise data. Such information cannot be released to the public domain. This poses an interesting and very challenging research area of creating realistic ES test datasets.


1.3 Problem Definition

In this thesis we have worked on two of the areas in ES that we described in the previous section, namely, user and work context aware ES and working out the issues of vocabulary bias and narrowness in ES.

Our aim is to enhance the relevance of results through traditional information retrieval approaches like query expansion and re-ranking in ES. We implement user and work context aware ES by taking up a user role based query expansion through local & global analysis techniques and by re-ranking the result-set with a role based document classification. To counter the vocabulary bias issues in the enterprise, we implement query expansion by bringing in external lexicon and thesaurus repositories constructed from open source on-line encyclopedias like Wikipedia. We also introduce a broader vocabulary range into the result-set with collection enrichment techniques for pseudo relevance feedback. For re-ranking of results, we estimate the dominant document classes in the result-set with the help of document classifiers trained on large manually tagged datasets like the Wikipedia categories.

We now explain the individual aspects of the thesis in detail.

1.3.1 User role and information need

We pointed out the need to exploit the user and work context because such information is more easily available in an enterprise environment. We present an approach to bring role based personalization into ES. We achieve this by two means, role based query expansion and role based re-ranking. The approach assumes that the user roles are known in advance. It also assumes the presence of a role tagged subset of the enterprise collection, which we refer to as the training corpus.

Query expansion works with both global and local analysis techniques. Global analysis uses WordNet to broaden the query sense, and a role based thesaurus constructed from term co-occurrence statistics of the training corpus to introduce role specific terms into the query. Local analysis techniques use a role based flavor of pseudo relevance feedback where only the top results relevant to the given user role are selected for feedback.
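The role based co-occurrence thesaurus can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation evaluated in chapter 3: the training corpus is a hypothetical list of (role, text) pairs, co-occurrence is counted at document level, the WordNet step is left out, and the names `build_role_thesaurus` and `expand_query` are introduced only for this example.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_role_thesaurus(training_corpus):
    """Map role -> term -> Counter of terms seen in the same document."""
    thesaurus = defaultdict(lambda: defaultdict(Counter))
    for role, text in training_corpus:
        terms = set(text.lower().split())
        for a, b in permutations(terms, 2):
            thesaurus[role][a][b] += 1
    return thesaurus


def expand_query(query, role, thesaurus, n=1):
    """Append the n most frequent role specific co-occurring terms per query term."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        counts = thesaurus[role][term]
        # Sort by descending count, then alphabetically, so ties are deterministic.
        ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
        for candidate, _ in ranked[:n]:
            if candidate not in expanded:
                expanded.append(candidate)
    return expanded


# Hypothetical role tagged training corpus.
training_corpus = [
    ("meteorologist", "cloud cover rainfall forecast"),
    ("meteorologist", "cloud rainfall radar"),
    ("developer", "cloud computing server deployment"),
    ("developer", "cloud server virtualization"),
]

thesaurus = build_role_thesaurus(training_corpus)
print(expand_query("cloud", "meteorologist", thesaurus))
print(expand_query("cloud", "developer", thesaurus))
```

The same query receives different expansion terms depending on the role, which is the intended effect of role specific term statistics.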

Re-ranking of the result-set is achieved by pushing up documents which are more relevant to the given user role. Both local analysis and re-ranking techniques use the role relevance scores of documents stored in the search index for achieving their purpose. Role relevance scores are determined with the help of a Naive Bayes document classifier trained using documents from the training corpus. Role based personalization of ES is explained in detail in chapter 3, and the respective evaluation is also presented.
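One possible way to push up role relevant documents is sketched below. The linear interpolation of retrieval score and role relevance score, the `weight` parameter and the function name `rerank_by_role` are assumptions made for illustration; the text above does not fix how the two scores are combined.

```python
def rerank_by_role(results, role_scores, role, weight=0.5):
    """Re-order results by a mix of retrieval score and role relevance score.

    results: list of (doc_id, retrieval_score) in the original ranking.
    role_scores: doc_id -> {role: probability}, e.g. stored classifier output.
    weight: assumed interpolation factor between the two scores.
    """
    def combined(item):
        doc_id, score = item
        role_score = role_scores.get(doc_id, {}).get(role, 0.0)
        return (1 - weight) * score + weight * role_score

    return sorted(results, key=combined, reverse=True)


# Hypothetical retrieval output and stored role relevance scores.
results = [("d1", 0.9), ("d2", 0.8), ("d3", 0.7)]
role_scores = {
    "d1": {"developer": 0.1},
    "d2": {"developer": 0.9},
    "d3": {"developer": 0.6},
}

reranked = rerank_by_role(results, role_scores, "developer")
print([doc_id for doc_id, _ in reranked])
```

With `weight=0` the original ranking is kept, so the parameter controls how strongly the role context is allowed to override the retrieval score.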

1.3.2 Query expansion with Wikipedia concept thesaurus

The issues of document vocabulary and query mismatch are frequently observed both in web and enterprise search. This effect is more prominent in an enterprise collection, as the expected relevant results are fewer. There are many ways to go about increasing the terms in the query that represent the same information need. Often dictionaries are employed for this, and synonyms help in increasing the term coverage. But dictionaries are limited in scope, as they are manually created by a few experts. One of the most popular open source thesauri, WordNet, covers only the generic language lexicon, such as nouns, verbs, adjectives and adverbs. Such dictionaries severely lack phrases and named entities. Moreover, they do not provide an in-depth coverage of the vocabulary used in popular domains like science, culture, mathematics, engineering and technology, medicine, law, politics etc.

An alternative to dictionaries is encyclopedias, which have a greater grasp of topics. Wikipedia is one such open source encyclopedia, and it is arguably the largest and most frequently updated knowledge repository on the planet. Wikipedia provides concepts from different domains and their respective descriptions in the form of articles. The articles are tagged with categories. Content creation in Wikipedia is open to its users (the Internet community) and is continuously verified by an active group of volunteers. While it has often been argued that the quality of content in Wikipedia is below that of published encyclopedias [51], we argue that its scale and topic coverage outweigh its quality issues.

Wikipedia on its own is meant for human users. We propose techniques to extract knowledge from its article and category link structure that could easily be used in ES as a thesaurus for query expansion. We propose algorithms to build a concept vocabulary and a concept thesaurus from the link structure data of Wikipedia. The concept vocabulary is used to map the query from term space to concept space. Once the query is mapped to concept space, the concept thesaurus gives likely concepts for expansion that are related to the query concepts. Another important aspect of this approach is to treat complex queries as phrases when mapping them to concept space. The expanded query is mapped back to the term space by using the article names that represent the concepts. We explain this process in detail and present evaluation results using the CERC collection in chapter 4.
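The term space to concept space round trip can be sketched as below. This is an illustrative sketch under assumptions: the vocabulary and thesaurus are tiny hand-written dictionaries rather than structures mined from Wikipedia, phrase mapping is done by greedy longest match, and the relation scores, `map_to_concepts` and `expand_with_concepts` are hypothetical names and values.

```python
def map_to_concepts(query, vocabulary):
    """Map a query to concepts by greedy longest phrase match.

    vocabulary: surface form (article name, redirect, anchor text) -> concept.
    """
    tokens = query.lower().split()
    concepts = []
    i = 0
    while i < len(tokens):
        for j in range(len(tokens), i, -1):
            phrase = " ".join(tokens[i:j])
            if phrase in vocabulary:
                concepts.append(vocabulary[phrase])
                i = j
                break
        else:
            i += 1  # no concept starts at this token
    return concepts


def expand_with_concepts(query, vocabulary, thesaurus, n=2):
    """Return query concepts and the article names of their top related concepts."""
    concepts = map_to_concepts(query, vocabulary)
    expansion = []
    for concept in concepts:
        related = sorted(thesaurus.get(concept, {}).items(),
                         key=lambda kv: (-kv[1], kv[0]))
        expansion.extend(name for name, _ in related[:n])
    return concepts, expansion


# Hypothetical concept vocabulary and thesaurus with relation scores.
vocabulary = {
    "cloud": "Cloud",
    "cloud computing": "Cloud computing",
    "saas": "Software as a service",
}
thesaurus = {
    "Cloud computing": {
        "Software as a service": 0.9,
        "Virtualization": 0.8,
        "Grid computing": 0.5,
    },
}

concepts, expansion = expand_with_concepts("cloud computing security",
                                           vocabulary, thesaurus)
print(concepts, expansion)
```

Matching the longest phrase first keeps "cloud computing" from being split into the unrelated concept "Cloud", which is the reason for treating complex queries as phrases.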

1.3.3 Re-ranking with Wikipedia category network

We propose to use large manually tagged sets rich in categories from all the domains of Wikipedia to re-rank the result-set. This approach assumes that certain enterprise document classes exist in the enterprise collection. Using the class names and their respective sets of concise defining vocabulary, we give an approach to map enterprise classes to Wikipedia categories. Once we get the representational categories, we use the articles belonging to these categories as the training set of a Bayesian document classifier.


Every document in the collection is then assigned a probability of belonging to each document class. While searching, the dominant enterprise classes are identified in the top k results, and documents belonging to these dominant classes are pushed up in the result-set. The details of re-ranking with the Wikipedia category network are discussed in chapter 4 along with its evaluation.
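The dominant class idea can be sketched as follows. The sketch assumes that each document is assigned to its single most probable class, that one dominant class is chosen by majority among the top k results, and that the original order is preserved within each group; these choices and the name `rerank_by_dominant_class` are illustrative assumptions only.

```python
from collections import Counter


def rerank_by_dominant_class(ranked_ids, class_probs, k=3):
    """Move documents of the dominant class in the top k to the front.

    ranked_ids: document ids in their original ranking.
    class_probs: doc_id -> {class_name: probability} from a classifier.
    """
    def best_class(doc_id):
        probs = class_probs[doc_id]
        return max(probs, key=probs.get)

    votes = Counter(best_class(d) for d in ranked_ids[:k])
    dominant = votes.most_common(1)[0][0]
    # sorted() is stable, so the original order survives inside each group.
    reranked = sorted(ranked_ids, key=lambda d: best_class(d) != dominant)
    return dominant, reranked


# Hypothetical ranking and class probabilities.
ranked_ids = ["d1", "d2", "d3", "d4", "d5"]
class_probs = {
    "d1": {"policy": 0.7, "research": 0.3},
    "d2": {"policy": 0.2, "research": 0.8},
    "d3": {"policy": 0.9, "research": 0.1},
    "d4": {"policy": 0.4, "research": 0.6},
    "d5": {"policy": 0.8, "research": 0.2},
}

dominant, reranked = rerank_by_dominant_class(ranked_ids, class_probs)
print(dominant, reranked)
```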

1.3.4 Pseudo relevance feedback with Wikipedia based collection enrichment

Relevance feedback based expansion models are frequently used to increase the precision of search results in information retrieval. In the absence of manual feedback, pseudo relevance feedback using the top k search results as the relevant document set is employed for query expansion [46][48]. It is also observed that enriching the feedback set with an external corpus may enhance search results [37][13]. We propose an approach of using pseudo relevance feedback based on the query likelihood model (a language model based retrieval model [39]) where we enrich the feedback set with Wikipedia article content.

Our approach uses a mixed set of the top k enterprise documents from the result-set fetched for a query and Wikipedia articles which have a high cosine similarity between their content and the query. We incorporate classification techniques to estimate good feedback documents from the pseudo relevant set. We use both supervised and unsupervised classification for estimating good feedback documents. Unsupervised techniques involve K Nearest Neighbor (KNN) clustering of the pseudo relevant set, after which the clusters are populated with Wikipedia articles using the article likelihood probability model that we propose. The clusters nearest to the query provide good feedback documents.
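The construction of the mixed feedback set can be sketched as below. The sketch assumes raw term frequency vectors, whitespace tokenization and a fixed similarity `threshold`; these choices, the toy texts and the names `cosine` and `build_feedback_set` are assumptions for illustration, and the selection of good feedback documents from the set is not shown.

```python
import math
from collections import Counter


def cosine(text_a, text_b):
    """Cosine similarity between raw term frequency vectors of two texts."""
    va = Counter(text_a.lower().split())
    vb = Counter(text_b.lower().split())
    dot = sum(va[t] * vb[t] for t in va)
    norm = (math.sqrt(sum(v * v for v in va.values()))
            * math.sqrt(sum(v * v for v in vb.values())))
    return dot / norm if norm else 0.0


def build_feedback_set(query, ranked_docs, wiki_articles, k=2, threshold=0.3):
    """Top k enterprise documents plus Wikipedia articles similar to the query."""
    feedback = list(ranked_docs[:k])
    feedback += [a for a in wiki_articles if cosine(query, a) >= threshold]
    return feedback


# Hypothetical ranked enterprise results and Wikipedia article texts.
ranked_docs = [
    "internal cloud computing migration plan",
    "cloud hosting cost report",
    "canteen menu for march",
]
wiki_articles = [
    "cloud computing delivers computing services",
    "cumulus cloud formation in the sky",
    "history of the roman empire",
]

feedback = build_feedback_set("cloud computing", ranked_docs, wiki_articles)
print(len(feedback))
```

Only the first Wikipedia article passes the threshold here; the weather related article shares a single term with the query and is left out.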

We use another unsupervised technique based on probabilistic topic modeling [11]. We discover latent topics in the pseudo relevant set. The query terms activate latent topics, which in turn provide matching documents for feedback. The supervised technique involves Bayesian classification of the pseudo relevant set into good and bad feedback documents. We extract a number of features from both enterprise documents and Wikipedia articles to train the classifier. These approaches are explained in chapter 5 along with their evaluation.

1.3.5 Common theme of the thesis

The four techniques that we have introduced in the above sub-sections have a strong underlying common theme, which we describe now. Every natural language gives the power to write an abstract piece of information in many forms, with many different words. Since unstructured text is created for human understanding, it is often assumed that human intelligence will connect the different styles, forms and vocabulary used in the writing with the inherent information. This assumption holds, because humans can identify and disambiguate the meaning of terms from their context, but it is a hard problem for search systems, which rely on search indexes carrying information about a single term or a sequence of terms and their association with documents. There are of course many ways to go around this problem. Natural language processing techniques involving sentence level parsing can best relate words to abstract information units, but they are computationally very intensive and require difficult grammar to be implemented manually or statistically through a manually tagged corpus. A simpler idea is to work with queries and try to extract the most collection-relevant meaning from them. As the index only contains the terms that occur in the enterprise collection, we can add terms to the query to relate it to the collection without diluting its original intended meaning too much; or we can add terms to broaden its term coverage without adding much noise to the meaning, so that terms present in the collection are also captured in the query. In all our techniques which implement query expansion, our intent is exactly the same. We derive structures from the target (enterprise corpus) and external (Wikipedia) collections for term addition to the query without disturbing its intent.

Our re-ranking ideas are also analogous across the different approaches that we have tried to implement. Basically, we look for documents which belong to the dominant classes present in the top k result set and then try to push them up in the result ordering. In the role based approach, the dominant class is predetermined as the selected user role, while in Wikipedia category based class identification, we find the dominant classes from the result set and then implement re-ranking.

1.4 Organization of Thesis

We discuss the ongoing work in the research community that is relevant to our work in Chapter 2. We present our motivations and the novelties in our approaches. We first cover generic approaches to enterprise search and then narrow down to contemporary research in the fields of user roles, query expansion, re-ranking and the use of Wikipedia with enterprise search.

We give the techniques for incorporating role context information into enterprise search in chapter 3. The role based personalization presented in that chapter involves query expansion and re-ranking based on a role based training corpus, along with the respective evaluation. Chapter 4 presents the approach of using the Wikipedia article and category link structure to create a domain independent concept thesaurus for query expansion in enterprise search. The chapter also explains the re-ranking techniques based on the Wikipedia category network. A modified pseudo relevance feedback technique for enterprise search, which includes Wikipedia article content in the feedback set, is explained in chapter 5. The chapter also presents supervised and unsupervised classification techniques to determine good feedback documents out of the pseudo relevant set, along with the experimental setup and evaluation. Chapter 6 is the last chapter of this thesis and it summarizes our work. We also give some of the future directions that this research can grow into, and conclude the thesis.

Chapter 2

Background

This chapter presents our literature study in the field of information retrieval, with attention to enterprise search (ES). The listed works have been motivational as well as challenging for us. As the focus of our work is on query expansion and re-ranking, this chapter presents research focused on these two aspects.

2.1 Enterprise Search

Among the earlier works, Hawking [17] presents a number of areas that can be explored in enterprise search. The author also lists the scope of many advanced information retrieval techniques for providing solutions to enhance enterprise search. Our focus mainly remains on ad hoc retrieval of documents containing electronic text data. Fagin et al.[15] discuss the differences and common factors between the web and enterprise Intranets. They differentiate web and enterprise search by discussing the strongly linked structure of the web versus business driven enterprise data. The authors argue that techniques specific to web search, like PageRank[5] and HITS[27], can be helpful but are not sufficient. The authors also analyze the problems involved in implementing good enterprise search solutions.

Semantic Web technologies have been applied in the context of the enterprise by Fisher et al. in [1] for improving knowledge management using ontologies, but they do not focus on improving search effectiveness. Their work describes the heterogeneous nature of enterprise data. The issues arising because of the scale of distributed data are also discussed. Their paper describes a variety of structural and syntactical differences in enterprise documents and how a number of protocols are employed for data access. However, they argue that the main emphasis in ES is always on relevance and speed of search in spite of the given issues. The authors also present classification techniques for categorizing data into precise domains through the notion of tagging documents with the associated metadata according to a predefined schema. Demartini [12] formally presents the application of semantic techniques in enterprise search. His work has motivated us to go beyond classical information retrieval methods and to build statistical learning systems for implementing effective ES solutions.

2.2 Related Work: User Roles and Search

Demartini [12] explores a co-operation of semantic web and IR systems for ES and has presented an open idea about role based personalization of ES, which has led us to explore the area in detail. A substantial amount of research is going on in the area of enterprise roles. But we have observed that enterprise roles are mostly studied from the point of view of security, access control[3][38][24][36] and enterprise modeling. We did not come across any significant work involving enterprise roles and information retrieval. The lack of such research in spite of the industrial demand (funded research projects) motivated us to look deeper into role based personalization of ES.

We focused on datasets annotated on the basis of roles for extracting term associations and for role prominence classification in documents, for use in query expansion and re-ranking. As far as document annotation in ES is concerned, there are some significant parallels in academia and we will mention a few contemporary research works.

Dmitriev et al. in [14] have presented their work on document annotation in the enterprise environment and suggest that annotations can help enterprise search. Their main contribution is through user annotations of enterprise documents, which they exploit for feedback. They point out that the controlled environment and lack of spam make it easier to collect annotations in enterprise search. Their work suggests two methods to collect annotations, namely explicit and implicit feedback. We were encouraged by their arguments to incorporate annotated or tagged document sets for enhancing ES through query expansion and re-ranking; however, we kept our focus on the domain of roles and assumed that an annotated set for enterprise roles exists as a prerequisite to our work.

2.3 Related Work: Query Expansion in Enterprise Search

Disambiguation of search queries and Query Expansion are our prime research areas. In [29], Liu et al. describe the inherently ambiguous nature of user queries and present an approach for word sense disambiguation of search terms. Chirita et al. in [8] have presented views on the ambiguous nature of short search queries and give an approach to query expansion by implicitly personalizing the search output.

2.3.1 Thesaurus based techniques

Zhang et al. present a WordNet based query expansion technique in [54], where they assign weights to candidate query expansion terms selected from WordNet and ConceptNet by a technique they describe as spreading activation. In their work, the weighting task is transformed into a coarse-grained classification problem. The work attempts to identify relations between query difficulty and the effectiveness of expansion. Pinto et al. in [38] propose query expansion by incorporating a semantic relatedness measure on the concepts of the WordNet thesaurus. Their work tries to find representational concepts of the query terms and then uses the WordNet database to identify expansion terms.

2.3.2 Pseudo relevance feedback

Pseudo relevance feedback is perhaps the most popular local analysis technique used in query expansion. Salton et al. first presented a compilation of many techniques to implement pseudo relevance feedback in [43]. Their paper also included the Rocchio algorithm [41] and the Ide dec-hi variant, along with an evaluation of several variants[20]. Some variants of blind feedback advocated that the results not judged as relevant should be considered as the non-relevant set; however, Schutze et al.[46] and Singhal et al.[48] showed that query expansion works better when using only a relevant set formed from the top k results.

2.4 Related Work: Re-ranking

Re-ranking is another area where we share our interests with many researchers. Re-ranking is applied in order to build a more intuitive and perceptive presentation of search results from the user’s point of view. Sieg et al. in [47] present the idea of re-ranking with user profile based ontologies and Zhuang et al. try to achieve the same using query log analysis[56].

In another work that implements re-ranking, Zhu et al. present an idea for pushing up navigational pages (e.g., for queries intended to find product or personal home pages, service pages, etc.) in the search results for enterprise search[55]. Their approach is to identify navigational pages through an off-line phase and build a separate index for them. They associate terms with these pages and then identify the queries that are intended for navigational pages. For such queries, the desired pages are presented among the top results.

2.5 Related Work: Wikipedia and Enterprise search

Many areas involving open knowledge bases like Wikipedia are being researched for ES. This section lists a few such works which are also relevant to our ideas involving Wikipedia structure and content for enhancing ES.

2.5.1 Wikipedia link structure and concept thesaurus

Our motivation arises from one of our previous works on role based personalization in enterprise search[50]. The system used role specific training documents to build a word co-occurrence thesaurus, which along with WordNet was employed for query expansion. We noticed that the concept coverage of manually constructed thesauri like WordNet is mostly limited to single word concepts and that they severely lack named entities. This restricted the outlook of the query expansion module for a multi-word search query to a bag of words perspective.

A growing recognition of Wikipedia as a knowledge base among information retrieval research groups across the globe was another motivation for us to proceed in this direction. Medelyan et al.[30] give a comprehensive study of ongoing research work and software development in the area of Wikipedia semantic analysis. Their findings reveal a large number of research groups involved with Wikipedia, thus indicating its increasing popularity. A number of developments in Wikipedia content and link structure analysis are going on, which are related to our work.

Kotaro et al. in [25] present an approach for creating a thesaurus from large scale web dictionaries using the hyper-link statistics present in their HTML content. They concentrate on Wikipedia and analyze the article and category links for building up a large scale thesaurus. They introduce the notion of path frequencies and inverse backward link frequencies in a directed link graph of Wikipedia concepts for finding the closeness of the articles. Our thesaurus generation is similar to their approach, but we analyze both inter-article and article-category links for thesaurus generation. We use their concept of backward link frequencies for finding the conceptual narrowness of articles. Our motivation for creating a concept thesaurus is not to have a dictionary but a knowledge repository that matches Wikipedia concepts to user queries and then finds associated concepts (hence terms) for the query.

Ito et al. in [21] present their approach of constructing a thesaurus using article link co-occurrence where they relate articles with more than one different path between them, within a certain distance in the link graph. They argue that the link co-occurrence analysis to generate a dictionary is more scalable than link structure analysis, however they do not make use of category information. Their work too, is meant for a generic dictionary and they do not suggest any implementation involving the thesaurus for document search.

The idea of using Wikipedia as a domain independent knowledge base is consolidated by the findings of Milne et al.[32]. They give a comprehensive case study of Wikipedia's concept coverage of domain specific topics. The authors have done a comparative analysis of concepts and relations between Wikipedia and Agrovoc (a structured thesaurus of all subject fields in Agriculture, Forestry, Fisheries, Food security and related domains) and establish that Wikipedia can be a cheap and decent substitute for high cost, manually created domain specific thesauri. However, their work does not implement a method to extract a domain specific thesaurus; it only demonstrates the breadth of Wikipedia's concept coverage. This was encouraging for us, as we used a general, domain independent thesaurus extracted from the Wikipedia link structure. In spite of the lack of domain specialization, we felt that the topics and concepts belonging to most domains and enterprises would be covered in this thesaurus.

Milne et al. in [33] introduce Koru, a search application with a Wikipedia powered assistance module that interactively builds queries and ranks results. They use Wikipedia re-directs for building up a synonym set and study the disambiguation pages for word sense disambiguation. Their work primarily focuses on the terms of Wikipedia article names. Our thesaurus construction, however, focuses on concepts (abstract entities described by articles) and their representational vocabulary. We go a step beyond collecting synonyms and focus on the closeness of different related concepts. They do present methods to incorporate the thesaurus in search, especially for query expansion; however, their methods depend on active user involvement during the search cycle.

Our technique of using the concept thesaurus for query expansion works by first finding concepts that represent the query and then taking the expansion terms from the names of concepts that are related to these representational concepts. This work is motivated by a similar technique presented by Zhang et al.[54], where they look for representational concepts of the query terms in the WordNet concept repository and then use the WordNet database to identify expansion terms. The major difference between our technique and this work is the phrase based approach for finding representational Wikipedia concepts.

2.5.2 Wikipedia categories and re-ranking

Kaptein et al. suggest a method to incorporate Wikipedia categories for ad hoc search[23]. They give an approach to automatically generate target categories as surrogates for manually assigned categories. Our work, however, uses Wikipedia categories as substitutes for enterprise document classes instead of queries. Their idea nonetheless motivated us to look into the Wikipedia category network for re-ranking in ES.

2.5.3 Wikipedia as lexicon resource

Our Wikipedia based feedback approach makes use of language model based retrieval for search and for query expansion through feedback. We use the query likelihood model for document retrieval as suggested by Ponte et al.[39]. This model was further enhanced by Miller et al.[31] and Hiemstra[19]. The feedback approach for query expansion in language model based retrieval has been suggested by Zhai et al. in [52] and we use it for the same purpose. Our work also implements probabilistic topic modeling for discovering latent topics and for finding document relationships with the topics. This was achieved through latent Dirichlet allocation (LDA) as suggested by Blei et al. in [11].

Diaz et al.[13] have proposed a method to incorporate information from an external text collection for pseudo relevance feedback using a language model technique. By making use of a language relevance model for query expansion, they showed good improvements in the results. Their aim was to broaden the vocabulary range of the collection and hence of feedback based expansion. They suggest the use of a high quality corpus which is comparable to the target corpus. We carry this work forward by incorporating methods to ensure that quality documents are available in the feedback set. One major difference is that our methods specifically use Wikipedia text as the external corpus because of its distributed and democratic content creation and its open source nature. We also argue that the consistency in the structure of Wikipedia articles (the consistent sectioning of article text) can be utilized to better segregate the training text and therefore achieve better training.

Peng et al. in [37] describe the incorporation of alternate lexicon representations through collection enrichment techniques for query expansion. They discuss the sparse nature of enterprise data and Intranets in terms of fewer hyper-links and the strong biases in vocabulary usage by the users. However, their approach uses a relatively bigger set of documents that are similar in content to the target corpus, while our focus is to use a general purpose rich text resource such as Wikipedia to enrich the pseudo relevant set. We also use a classification based approach (both supervised and unsupervised) for selecting good feedback text from the external corpus.

Cao et al. argue in [7] that the basic assumption made by pseudo relevance feedback is inaccurate. They point out that the most frequent terms in feedback documents may not be the best expansion terms. The authors further add that expansion terms discovered by orthodox techniques may frequently be unrelated to the query and harmful for the retrieval. He et al. in [18] further extend this work by moving the classification from the term level to the document level. They apply classification techniques to identify the high-quality feedback documents, or in other words, to remove the low-quality ones. They also present a list of features which they use for the classification process.

In [28], Li et al. give an approach to use Wikipedia as an external corpus for improving weak ad hoc queries. Their work also involves pseudo relevance feedback, but they assume the entire pseudo relevant set to be relevant and do not incorporate any classification of good or bad feedback documents.

2.6 Summary

This chapter presented much of the ongoing research in general information retrieval and ES that is important from our point of view. As our focus is mainly on the query expansion and re-ranking aspects of search, this chapter presented some of the relevant findings and accomplishments of the large research community involved with information retrieval and, more specifically, ES. We have tried to capture the very latest in research for our synthesis of ideas. The coming chapters will present our approaches to enhance ES and their respective evaluations.

Chapter 3

Role Based Personalization of Enterprise Search

We present a role based approach for personalizing Enterprise Search (ES) in this chapter. In an enterprise, a role defines a set of guidelines that govern the work profile, information access and the ownership level of an employee [9]. The role of an employee also has an implicit effect on the kind of information required by him or her from the enterprise data. We embed the role based retrieval approach into the two basic aspects of an ES system, which are query formulation and the indexing process. We discuss the relevance of role based search in section 3.1. Section 3.2 introduces core techniques which make use of a role based tagged document set to train a document classifier and create a co-occurrence thesaurus. The same section also discusses the indexing technique for parametric and role based search. Section 3.3 presents query formulation, boolean logic and parametric search. Section 3.4 discusses role based Query Expansion involving global and local analysis techniques and section 3.5 puts forward a role relevant re-ranking step. Experiments for evaluating the approaches proposed in this chapter are described in Section 3.6 along with the observed results.

3.1 Enterprise Roles and Search

Segregation of employees into well defined and distinct roles is a key aspect of modern enterprises. Role division facilitates the distribution of tasks and authority in a systematic manner. Abstractly, roles can be viewed as crisp guidelines that define the job profile of an employee in an enterprise. With the advent of computers, electronic storage and networking, it has become easier to effectively share the collective intellectual wealth of an enterprise. This knowledge sharing is considered to be one of the most important factors for a successful and smoothly functioning organization. The data in large to medium sized enterprises is available through distributed systems in various forms like text, images and videos. The majority of this information is in text form, which resides either in a structured format (relational databases, spreadsheets, etc.) or in a semi-structured or unstructured form [40] involving natural text documents.

The Intranet or the internal web is one example of an information sharing and access system for enterprises. Unlike the Internet, where content creation is democratic and uncontrolled and information access is without discrimination, enterprise data is proprietary knowledge involving considerable investments (time, money, research) and the trade secrets of a company [2]. For example, a software company like Microsoft would not want the source code of the Windows operating system to be freely available in the public domain when its sales are the primary source of revenue. Since the data of an enterprise is of considerable value, its information flow and access are often regulated for various reasons. A high level policy document or email contemplating lay-off targets, for example, should not be seen by entry level employees. On the other hand, a solution to a particular problem faced frequently in developing the core product should have more reach in order to avoid such complications. Hence, enterprises desire information discretion based on roles. Various role based access strategies are being investigated by the research and development community to address this requirement. But we will now see that, along with the security perspective of role based access to data, it is vital to have an information retrieval point of view when it comes to roles and information in an enterprise.

As we discussed, electronic data is available on distributed systems in enterprises and Intranets facilitate its access by users (by user we mean people working with the enterprise data). As the size and scale of data outgrew human abilities to browse, enterprises implemented full text search systems to meet user information needs by searching documents using keywords or queries. A query is nothing but a set of terms that, from an information seeker’s point of view, best represents his or her information need. The same keywords have different meanings in the context of different roles, and users tend to formulate ambiguous, inaccurate and misrepresenting queries. Consider two roles in a Database Solutions firm, a software developer and a hiring officer. While both may want to query about ‘networking’, the developer may expect results related to computer networks, whereas the hiring officer would expect information about social networking. This problem can be resolved to a reasonable extent with role based personalization of ES. Another example of an ambiguous query is ‘bush’ when it is meant for the former US president Bush; ‘bush’ also denotes a type of plant, and here the two meanings have no relation whatsoever.

Enterprise data adheres to strong guidelines governing content creation. It is also regulated and strongly related to the context, business and operations of the enterprise. This presents an interesting scenario in ES, where a number of aspects of the data are known while designing a search system. User roles and role based information needs, as we mentioned earlier, are one such aspect. Another facet is the meta-data, which is much more visible and structured [10] as compared to the Internet. Also, enterprise documents are work related, well formed and, most importantly, lack spam. We considered these properties of enterprise data in order to design a role centric search system involving parametric search, which uses the meta-data, and information retrieval techniques like Query Expansion (QE henceforth) and re-ranking based on tagged content. Our idea is to implement an ES solution which is a role based personalization of the enterprise document retrieval process. We make use of a tagged subset of the enterprise corpus, consisting of a collection of documents manually classified and marked on the basis of roles, in order to train a document classifier and to construct a thesaurus based on word co-occurrence statistics for every role. For role based QE, we use the co-occurrence thesaurus along with WordNet for the global analysis of queries, and implement a role based flavor of classical pseudo relevance feedback based QE using co-occurrence statistics of the top k results as part of local analysis. The role classifier is used to reorder the search results according to the user role.

3.2 Role Based Tagging and Indexing

This section discusses the main building blocks on which our role based search works. Our approach treats the role definitions of an enterprise as outside the scope of this work, and we do not deal with role engineering or mining. Although work has been going on for automated and semi-automated extraction of roles in an enterprise [49] [45] [53], for our experiments we chose to create the roles manually. We are also not concerned with implementing any form of access control.

3.2.1 Role tagged dataset

In order to discover the vocabulary associated with various roles, we use a training corpus which contains documents tagged with role labels. We select a subset of the enterprise document collection (enterprise corpus) and manually classify the documents it contains into various categories, which correspond to the information that belongs to or is used by the respective roles. Each document in the training corpus is categorized under one label and is kept in the respective directory. The directory structure of the training corpus looks like figure 3.1. In our implementation we do not use nested roles and our directory hierarchy is also flat. However, a tree like structure is also possible, as it is only a matter of extra effort by the humans classifying the training corpus.
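As an illustration of the flat layout described above, the following minimal Python sketch reads such a training corpus into memory. The function name `load_role_corpus` and the assumption that every document is a plain text file stored directly under its role directory are ours, introduced only for illustration; they are not prescribed by the approach.

```python
from pathlib import Path
from typing import Dict, List


def load_role_corpus(root: str) -> Dict[str, List[str]]:
    """Read a flat role-tagged corpus: one sub-directory per role, one file per document.

    Hypothetical helper: returns a mapping from role label to document texts.
    """
    corpus: Dict[str, List[str]] = {}
    for role_dir in sorted(Path(root).iterdir()):
        if not role_dir.is_dir():
            continue  # ignore stray files at the top level
        corpus[role_dir.name] = [
            doc.read_text(encoding="utf-8", errors="ignore")
            for doc in sorted(role_dir.iterdir())
            if doc.is_file()  # flat hierarchy: nested directories are skipped
        ]
    return corpus
```

Because the directory name is used as the role label, adding a new role only requires adding a new directory of manually classified documents.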

3.2.2 Role classifier

One of the primary uses of the role tagged subset of the enterprise corpus is to train a document classifier for the indexing process. When the search results are presented to a user, our aim is to produce an ordering of documents which promotes the documents that are more closely associated with the selected role. If every document in the enterprise collection is already classified and tagged with proper role information, the ranking process becomes much easier. But manual classification of a large corpus can be extremely impractical. The training corpus described in the previous sub-section is just a small part of a much larger collection. So, we rely on machine learning techniques to achieve automated classification. We propose to use a Naive Bayes document classifier for tagging documents with role relevant scores. The choice of classifier is just to put forward the concept and we do not advocate any particular classifier (the choice depends on many factors like the collection size, on-line capabilities, etc.). There are a number of classifiers available and all have their own strengths. We preferred a Naive Bayes classifier because of the ease of its implementation.

Figure 3.1 Directory structure of training corpus

We will try to explain briefly what happens during document classification. We imagine that there are $m$ document classes, each corresponding to one of the $m$ roles in the enterprise. A document can be modeled as a set of words. The Naive Bayes model also assumes that the probability of the $i$th word ($w_i$) of a document occurring in a particular class $C_j$ is independent of the other words. This can be stated as a conditional probability $P(w_i|C_j)$. The probability of a document $D$ given a class $C_j$ is

$$P(D|C_j) = \prod_{w_i \in D} P(w_i|C_j) \tag{3.1}$$

In order to determine the class of a document $D$, Bayes theorem is used as follows

$$P(C_j|D) = \frac{P(C_j)}{P(D)} \cdot P(D|C_j) \implies P(C_j|D) = \frac{P(C_j)}{P(D)} \cdot \prod_{w_i \in D} P(w_i|C_j) \tag{3.2}$$

$P(w_i|C_j)$ can be determined by using the training corpus with the maximum likelihood estimate.

$$P(w_i|C_j) = \frac{\sum_{d \in C_j} tf_{w_i}(d)}{\sum_{d \in C_j} |d|} \tag{3.3}$$

Here we take the ratio of the number of occurrences of $w_i$ in $C_j$ to the total number of terms in $C_j$; $d$ is a document in the training corpus belonging to class $C_j$; $tf_{w_i}(d)$ is the term frequency of $w_i$ in $d$ and $|d|$ is the term count in $d$. Equation 3.2 gives us the probability of an unseen document belonging to a class. The sum of probabilities of a document for all classes is 1.

Once we train the classifier by estimating $P(w_i|C_j)$ using the training corpus, we classify the remaining documents in the collection and give them the role relevant scores. Suppose that for document $D_i$, the probability that it belongs to $Role_1$ is 0.7 and that it belongs to $Role_2$ is 0.3, in a two role system. The role relevant score vector of this document will be

$$\vec{D_i} = (\langle Role_1, 0.7 \rangle, \langle Role_2, 0.3 \rangle) \tag{3.4}$$

Role relevant score information is then utilized while the documents are indexed. This is explained later in section 3.2.4.
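The classification step of this sub-section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the implementation used in our experiments: the function names `train` and `role_scores` are hypothetical, the class prior $P(C_j)$ is assumed to be the fraction of training documents in each role, add-one smoothing (parameter `alpha`) is added so that unseen words do not produce zero probabilities, and the product of equation 3.1 is computed in log space to avoid numerical underflow.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Model = Tuple[Dict[str, float], Dict[str, Counter], Dict[str, int], set]


def train(corpus: Dict[str, List[List[str]]]) -> Model:
    """Estimate priors and per-role term counts from a role-tagged corpus of token lists."""
    total_docs = sum(len(docs) for docs in corpus.values())
    priors, counts, totals, vocab = {}, {}, {}, set()
    for role, docs in corpus.items():
        priors[role] = len(docs) / total_docs            # assumed estimate of P(C_j)
        counts[role] = Counter(w for doc in docs for w in doc)
        totals[role] = sum(counts[role].values())        # total terms in the role
        vocab.update(counts[role])
    return priors, counts, totals, vocab


def role_scores(doc: List[str], model: Model, alpha: float = 1.0) -> Dict[str, float]:
    """Return the role relevant score vector of a document (scores sum to 1)."""
    priors, counts, totals, vocab = model
    log_post = {}
    for role in priors:
        lp = math.log(priors[role])
        for w in doc:
            # Smoothed variant of the maximum likelihood estimate (smoothing is our assumption).
            p = (counts[role][w] + alpha) / (totals[role] + alpha * len(vocab))
            lp += math.log(p)
        log_post[role] = lp
    # Normalising over roles plays the part of dividing by P(D).
    top = max(log_post.values())
    unnorm = {r: math.exp(lp - top) for r, lp in log_post.items()}
    z = sum(unnorm.values())
    return {r: v / z for r, v in unnorm.items()}
```

The returned dictionary corresponds to the role relevant score vector of a document, with one entry per role.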

3.2.3 Role based co-occurrence thesaurus

We also propose an automatically derived role based thesaurus using word co-occurrence statistics over a collection of documents in an enterprise. We use the training corpus which contains documents classified based on the existing roles. These documents can be used to automatically induce a role based lexicon (this would introduce role specific terms while expanding the query). A window of $L$ consecutive words (we assume $L = 8$ words) slides over all the documents, and the words that exist in this window are considered to be co-occurring. Stop words are neglected while constructing the thesaurus, and it is constructed in such a way that it stores word to word co-occurrences as well as the frequency of the co-occurrences. To better understand the technique of creating a thesaurus based on the co-occurrence model, consider the example where we have the following text being scanned by the thesaurus builder

The giant panda lives in a few mountain ranges in central China, mainly in Sichuan province, but also in the Shaanxi and Gansu provinces. Due to farming, deforestation and other development, the panda has been driven out of the lowland areas where it once lived.

The text is then stripped of the stop-words and punctuation marks and we end up with the following

giant panda lives few mountain ranges central China mainly Sichuan province Shaanxi Gansu provinces Due farming deforestation development panda driven out lowland areas where once lived

In the first iteration given in the box below, the sliding window starts at the beginning of the text and the words ‘giant’, ‘panda’, ‘lives’, ‘few’, ‘mountain’, ‘ranges’, ‘central’ and ‘china’ are observed to be co-occurring.

−→

[giant panda lives few mountain ranges central China] mainly Sichuan province Shaanxi Gansu provinces Due farming deforestation development panda driven out lowland areas where once lived

In the next iteration (the following box), the co-occurrence observation window slides one word to the right, making ‘panda’, ‘lives’, ‘few’, ‘mountain’, ‘ranges’, ‘central’, ‘China’ and ‘mainly’ the co-occurring words. So after this iteration, ‘giant’ and ‘panda’ co-occur, as do ‘panda’ and ‘mainly’, but ‘giant’ and ‘mainly’ do not. The process is repeated until all of the text in the document has been analyzed. Then we start again with the remaining documents. An important point to note here is that the number of windows in a document D is taken to be |D| − L, i.e. the word count of D minus the window size (the exact count is |D| − L + 1, a negligible difference for long documents).

−→

giant [panda lives few mountain ranges central China mainly] Sichuan province Shaanxi Gansu provinces Due farming deforestation development panda driven out lowland areas where once lived

−→

giant panda [lives few mountain ranges central China mainly Sichuan] province Shaanxi Gansu provinces Due farming deforestation development panda driven out lowland areas where once lived

−→

giant panda lives [few mountain ranges central China mainly Sichuan province] Shaanxi Gansu provinces Due farming deforestation development panda driven out lowland areas where once lived

−→

giant panda lives few [mountain ranges central China mainly Sichuan province Shaanxi] Gansu provinces Due farming deforestation development panda driven out lowland areas where once lived

· · · · · ·
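The sliding-window scan illustrated above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the thesis implementation: the function name `count_cooccurrences` is hypothetical, tokens are lower-cased, a pair is counted once per window in which both words appear, and the window count returned is the exact value |D| − L + 1.

```python
from collections import Counter
from itertools import combinations

def count_cooccurrences(tokens, window_size=8):
    """Count, for every word pair, the number of windows containing both words.

    Returns (pair_counts, number_of_windows). Pairs are stored as
    alphabetically sorted tuples so that (a, b) and (b, a) coincide.
    """
    n_windows = max(len(tokens) - window_size + 1, 0)
    pair_counts = Counter()
    for start in range(n_windows):
        # Distinct words inside the current observation window.
        window = sorted(set(tokens[start:start + window_size]))
        pair_counts.update(combinations(window, 2))
    return pair_counts, n_windows

# The stop-word-free example text from this section.
text = ("giant panda lives few mountain ranges central China mainly Sichuan "
        "province Shaanxi Gansu provinces Due farming deforestation "
        "development panda driven out lowland areas where once lived")
tokens = text.lower().split()
counts, n_windows = count_cooccurrences(tokens, window_size=8)
```

With this input, ‘giant’ and ‘panda’ share the first window while ‘giant’ and ‘mainly’ never share one, which matches the walk-through above.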

After completely analyzing the tagged training set, we compile the final co-occurrence thesaurus on the basis of the probability of finding two words co-occurring in a window for a given role versus the probability of finding the same words co-occurring in all other roles. Let R be the set of all roles such that R = {R_1, R_2, ..., R_n} (here we have n possible roles). The probability that terms t_i and t_j co-occur in a particular role R_k is given by Equation 3.5.

\[
P(t_i, t_j \mid R_k) = \frac{\sum_{d \in R_k} \sum_{L \in d} f_L(t_i, t_j)}{\sum_{d \in R_k} \left( tf(d) - |L| \right)} \tag{3.5}
\]

where d is a document in R_k and L is a co-occurrence observation window in d; f_L(t_i, t_j) = 1 if t_i and t_j co-occur in L and 0 otherwise; tf(d) is the number of terms in d and |L| is the window size. Equation 3.5 thus gives the ratio of the number of windows in documents of R_k in which t_i and t_j co-occur to the total number of windows. Our approach aims to include in the role-based co-occurrence thesaurus those term pairs that are seen together more often for the given role than for other roles. The probability that the terms t_i and t_j co-occur in all other roles apart from R_k is given by Equation 3.6.

\[
P(t_i, t_j \mid R - R_k) = \frac{\sum_{d \in R - R_k} \sum_{L \in d} f_L(t_i, t_j)}{\sum_{d \in R - R_k} tf(d) - docs(R - R_k) \cdot |L|} \tag{3.6}
\]

The symbols in Equations 3.5 and 3.6 have the same meaning. R − R_k denotes the set of documents that belong to all roles except R_k, and docs(·) is the number of documents in the given set. The confidence score of two terms co-occurring for a given role R_k is taken as the difference between the probability of finding them together in documents of the given role and the probability of finding them together in other roles.

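\[
\mathit{Confidence}_{R_k}(t_i, t_j) = \frac{\sum_{d \in R_k} \sum_{L \in d} f_L(t_i, t_j)}{\sum_{d \in R_k} tf(d) - docs(R_k) \cdot |L|} - \frac{\sum_{d \in R - R_k} \sum_{L \in d} f_L(t_i, t_j)}{\sum_{d \in R - R_k} tf(d) - docs(R - R_k) \cdot |L|} \tag{3.7}
\]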

Equation 3.7 gives us the metric for ranking term pairs by how much more strongly they co-occur in role R_k than in the other roles.
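As a rough illustration of how the confidence score could be computed from a role-tagged corpus, consider the following Python sketch. All function names and the toy corpus are hypothetical. One deliberate deviation is worth flagging: the normalizer used here is the exact number of windows per document, tf(d) − |L| + 1, rather than tf(d) − |L| as written in Equations 3.5 to 3.7, so that very short documents do not produce a zero denominator.

```python
from collections import Counter
from itertools import combinations

def pair_window_counts(tokens, window):
    """Return (pair -> number of windows containing both terms, window count)."""
    n_windows = max(len(tokens) - window + 1, 0)
    counts = Counter()
    for start in range(n_windows):
        terms = sorted(set(tokens[start:start + window]))
        counts.update(combinations(terms, 2))
    return counts, n_windows

def role_statistics(docs_by_role, window=8):
    """Aggregate pair counts and window totals over all documents of each role."""
    stats = {}
    for role, docs in docs_by_role.items():
        total_counts, total_windows = Counter(), 0
        for tokens in docs:
            counts, n = pair_window_counts(tokens, window)
            total_counts.update(counts)
            total_windows += n
        stats[role] = (total_counts, total_windows)
    return stats

def confidence(stats, role, t_i, t_j):
    """Difference between in-role and out-of-role co-occurrence probability."""
    pair = tuple(sorted((t_i, t_j)))
    in_counts, in_windows = stats[role]
    out_hits = sum(c[pair] for r, (c, _) in stats.items() if r != role)
    out_windows = sum(w for r, (_, w) in stats.items() if r != role)
    p_in = in_counts[pair] / in_windows if in_windows else 0.0
    p_out = out_hits / out_windows if out_windows else 0.0
    return p_in - p_out

# Toy corpus (hypothetical roles and documents); window of 2 keeps it readable.
docs_by_role = {
    "hr": [["leave", "policy", "leave", "form"]],
    "eng": [["build", "policy", "server"], ["leave", "server"]],
}
stats = role_statistics(docs_by_role, window=2)
score_hr = confidence(stats, "hr", "leave", "policy")
score_eng = confidence(stats, "eng", "leave", "policy")
```

A positive score means the pair is more characteristic of the given role than of the rest of the corpus; a negative score means the opposite.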

3.2.4 Indexing

Most documents carry structure beyond their actual content. Every digital document has machine-recognizable metadata that records information such as the date of creation, author and file format. These are known as metadata fields. While creating a parametric index, we create document posting lists for every field. Of course, a field's range of values must be finite for such an index to be feasible. Additional fields that are not part of the metadata (e.g. domain name, URL) can also be included in the parametric index while scanning or crawling the enterprise corpus. A parametric index often helps narrow the search range by fixing values for different parameters (author name or date, for example) alongside the actual query.
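A parametric index of this kind can be pictured as one posting list per (field, value) pair. The sketch below is a minimal in-memory illustration; the class name `ParametricIndex`, its methods and the sample documents are assumptions made for the example and do not come from the system described here.

```python
from collections import defaultdict

class ParametricIndex:
    """Posting lists keyed by metadata field and field value."""

    def __init__(self):
        # field -> value -> set of document ids
        self.postings = defaultdict(lambda: defaultdict(set))
        self.doc_ids = set()

    def add(self, doc_id, fields):
        """Register a document under each of its field values."""
        self.doc_ids.add(doc_id)
        for field, value in fields.items():
            self.postings[field][value].add(doc_id)

    def filter(self, **criteria):
        """Return ids of documents matching every given field=value pair."""
        result = set(self.doc_ids)
        for field, value in criteria.items():
            result &= self.postings.get(field, {}).get(value, set())
        return result

index = ParametricIndex()
index.add("d1", {"author": "asha", "format": "pdf"})
index.add("d2", {"author": "asha", "format": "html"})
index.add("d3", {"author": "ravi", "format": "pdf"})
```

Intersecting posting lists in this way is what lets a parameter selection shrink the candidate set before the text query is scored.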

In addition to the parametric index, it is also vital to have a role-based index of the enterprise documents in order to implement role-based personalization. We propose a model for indexing in Figure 3.2 that addresses the needs of role-based personalization. This model is built with the help of two mappings, namely a term-to-document mapping and a document-to-role mapping. The term-to-document mapping is the conventional index built by text search engines, in which term-document associations are stored as term frequency (tf) and inverse document frequency (idf). This mapping suffices for a normal text-based search engine, but it does not cater to the requirements of role-based personalization. So a new document-to-role mapping is added to the indexing process.

Figure 3.2 The working of Indexing Functionality.

The document-to-role mapping takes care of role-based personalization. It stores the percentage of relevance of a document to each of the available roles. To compute it, we use the Naive Bayes document classifier described above, trained on the tagged training corpus. The format is

-Document1: (role1, 0.35), (role2, 0.51), (role3, 0.13), (role4, 0.01)

This mapping reveals how relevant a document is for a role and the same could be used to re-rank the results.
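To make the use of the document-to-role mapping concrete, here is a small hedged sketch of re-ranking. The combination rule (text score multiplied by role relevance), the `rerank` function, the entry for `Document2` and the text scores are all assumptions chosen for illustration; the text above only states that the mapping could be used to re-rank results.

```python
# Document-to-role mapping; Document1 uses the values shown above,
# Document2 is a hypothetical second entry.
doc_to_role = {
    "Document1": {"role1": 0.35, "role2": 0.51, "role3": 0.13, "role4": 0.01},
    "Document2": {"role1": 0.80, "role2": 0.10, "role3": 0.05, "role4": 0.05},
}

def rerank(text_scores, doc_to_role, role):
    """Order documents by text score weighted by relevance to `role`.

    The multiplicative weighting is an illustrative assumption.
    """
    def weighted(doc_id):
        relevance = doc_to_role.get(doc_id, {}).get(role, 0.0)
        return text_scores[doc_id] * relevance
    return sorted(text_scores, key=weighted, reverse=True)

# Hypothetical scores from the ordinary term-to-document index.
text_scores = {"Document1": 0.9, "Document2": 0.6}
ranking_role1 = rerank(text_scores, doc_to_role, "role1")
ranking_role2 = rerank(text_scores, doc_to_role, "role2")
```

In this toy case the same text scores yield different orderings for the two roles, which is the effect role-based personalization is after.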

3.3 Query Formulation

We propose two methods for formulating a query. They let the user form a better-structured query instead of a bare set of keywords, which may retrieve irrelevant documents. The two methods are Boolean logic and parametric advanced search.

3.3.1 Boolean logic

Boolean logic is a method for query-based information retrieval in which search terms are combined with Boolean operators such as AND, OR and NOT. These operators are described below.

Figure 3.3 Block diagram showing the flow of the Query Formulation functionality. (Block labels: Query; Boolean Logic; Extract Operators; Add control keywords; Rephrase query; Boolean queries; Parametric search; Advanced search page; Set default values; Fetch values for parameters; Query with control keywords.)

• AND: This operator retrieves documents that contain all the terms specified before and after the operator.

• OR: This operator retrieves documents that contain any of the terms specified with the operator.

• NOT: This operator retrieves documents that do not contain the term specified after the operator.
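The semantics of the three operators map directly onto set operations over posting lists. The following sketch is a simplified illustration only: the `evaluate` function, the tiny index and the strict left-to-right evaluation without operator precedence or parentheses are assumptions, as is treating adjacent terms with no operator as AND.

```python
def evaluate(query, index, all_docs):
    """Evaluate a flat Boolean query left to right using set operations."""
    result, pending_op, negate = None, None, False
    for token in query.split():
        if token in ("AND", "OR"):
            pending_op = token
        elif token == "NOT":
            negate = True
        else:
            docs = index.get(token.lower(), set())
            if negate:
                # NOT: every document that lacks the term.
                docs = all_docs - docs
                negate = False
            if result is None:
                result = set(docs)
            elif pending_op == "OR":
                result |= docs   # OR: union of posting lists
            else:
                result &= docs   # AND (and the default): intersection
            pending_op = None
    return result if result is not None else set()

# Hypothetical posting lists: term -> ids of documents containing it.
index = {"panda": {1, 2}, "china": {2, 3}, "farming": {3}}
all_docs = {1, 2, 3, 4}
```

For instance, `evaluate("panda AND NOT china", index, all_docs)` keeps only the documents that mention ‘panda’ but not ‘china’.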

Figure 3.3 shows the flow of the Boolean logic functionality, step by step, alongside parametric search. We use a set of predefined control keywords to represent the operators. The output of query formulation will look similar to the following:

‘CONTROL-WORD Query-Term1 CONTROL-WORD Query-Term2 .’

3.3.2 Parametric search

Through advanced parametric search, a number of options are available to the user for giving specific information about the desired documents. The options designed for the advanced parametric search were inspired by the parameters available in popular Internet advanced searches such as Google¹, Yahoo², Google Scholar³, etc. We decided on the following options as search parameters.

• File Format (file:)

• Date (date:)

• Author (auth:)

• Domain (site:)

• URL (url:)

We initially set these options to default values in the advanced search page depending on the selected user role. Once all parameters are finalized by the user, we add the required predefined control keywords to the search query. Figure 3.3 shows the steps involved in the advanced parametric search functionality. The parametric search makes use of the parametric index described in Section 3.2.4 for filtering the results.
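One way to picture the query that results from this step is a string in which each selected parameter appears as a `keyword:value` token next to the free-text terms. The sketch below separates the two again. The `keyword:value` token syntax and the `parse_query` function are assumptions for illustration; only the five control keywords are taken from the list above.

```python
# Control keywords listed above (without the trailing colon).
PARAM_PREFIXES = ("file", "date", "auth", "site", "url")

def parse_query(query):
    """Split a query into free-text terms and parameter constraints."""
    terms, params = [], {}
    for token in query.split():
        # Split on the first colon only, so URL values keep their colons.
        prefix, sep, value = token.partition(":")
        if sep and value and prefix in PARAM_PREFIXES:
            params[prefix] = value
        else:
            terms.append(token)
    return terms, params

terms, params = parse_query("annual report file:pdf auth:sharma site:iiit.ac.in")
```

The extracted constraints could then be matched against the posting lists of the parametric index, while the remaining terms go to the ordinary text search.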

3.4 Role Based Query Expansion

This section describes a QE module in which we incorporate various heuristics into traditional QE techniques in order to implement role-based personalization. QE uses two techniques, namely global analysis and local analysis. Role information is assumed to be available in advance to the QE module. Both global and local analysis work on individual query terms and do not treat a multi-term query as a single entity, i.e. both techniques follow a bag-of-words approach. Our system provides a role selection option in the user interface, which also takes the user query.

Global analysis uses thesauri to find terms associated with the query terms. We use WordNet⁴ as a general-purpose thesaurus for lexical coverage and a role-based thesaurus (described in Section 3.2.3) to give emphasis to terms belonging to the selected role. Local analysis methods try to make use of documents relevant to the query. This approach gives a general model for gathering QE terms from the relevant and non-relevant sets of documents that the relevance feedback process produces for the query. We use this model for blind relevance feedback, computing role-relevant terms that co-occur with the query terms by analyzing the top k results retrieved in the first pass of the original query. These methods are described in more detail in the following subsections.