Searching the Web by Voice

Full text

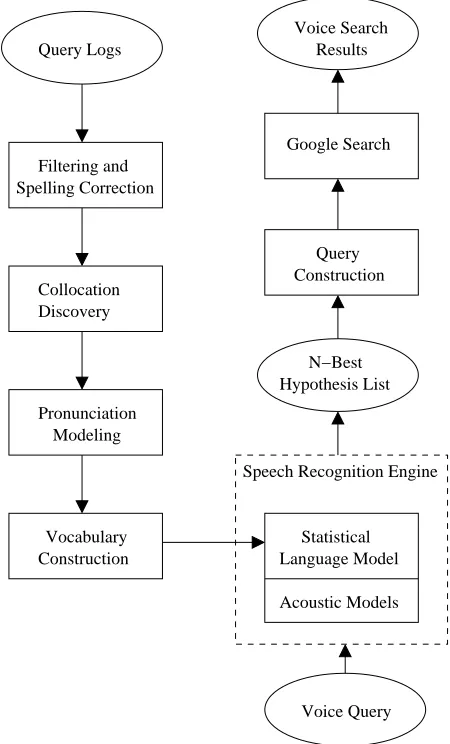

Figure

Related documents

Section-B : 10 questions, 2 questions from each unit, 5 questions to be attempted, taking one from each unit, answer approximately in 250 words.. Total marks

Taken together, our contrasting findings on entrepreneurial talent and intent raise an interesting question: if those who tend to start a business are less

Downloaded from https://academic.oup.com/jnci/article-abstract/106/8/dju169/911122 by Periodicals Department , Hallward Library, University of Nottingham user on 17 October 2018..

Accordingly, pups exposed to excess exogenous leptin in early postnatal life developed a cardiovascular phenotype that mirrored offspring of obese dams, with

Their efforts have engaged state and national government entities, content partners from various organizations, other state library agencies and many libraries and cultural

Carl Pasquale was PROPERLY RECLASSIFIED from a Systems Analyst 1 to a Network Services Technician 3, classification specification number 67193, during the relevant time. period