IBM® Tivoli® Software

IBM Tivoli Workload Automation V8.6 Performance and scale cookbook

Document version 1.1

Monica Rossi

Leonardo Lanni

Annarita Carnevale

© Copyright International Business Machines Corporation 2011.

US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.


Contents

List of Figures

List of Tables

Revision History

1 Introduction

2 Scope

3 HW and SW configuration

3.1 Distributed test environment configuration

3.2 z/OS test environment configuration

3.3 Test tools

4 Results

4.1 z/OS test results

4.1.1 Cross dependencies in mixed environment TWSz -> TWSd

4.1.2 Cross dependencies in homogeneous environment TWSz -> TWSz

4.1.3 Tivoli Dynamic Workload Console for z/OS Dashboard

4.2 Distributed test results

4.2.1 Cross Dependencies in mixed environment TWSd -> TWSz

4.2.2 Cross Dependencies in homogeneous environment TWSd -> TWSd

4.2.3 Agent for z/OS

4.2.4 Dynamic scheduling scalability

4.2.5 Tivoli Dynamic Workload Console Dashboard

4.2.6 Tivoli Dynamic Workload Console Graphical plan view

4.2.8 Tivoli Dynamic Workload Console concurrent users

4.2.9 Tivoli Dynamic Workload Console Login


List of Figures

Figure 1 - Tivoli Workload Automation

Figure 2 - Tivoli Workload Automation performance tests

Figure 3 - Tivoli Dynamic Workload Console tests environment

Figure 4 – Dynamic scheduling scalability environment

Figure 5 - Cross Dependencies d-d environment

Figure 6 - Cross Dependencies d-z environment

Figure 7 – Agent for z/OS environment

Figure 8 - TDWC Dashboard environment

Figure 9 – Cross dependencies z-z environment

Figure 10 - TDWC for z/OS Dashboard environment

Figure 11 – Cross dependencies z-d environment

Figure 12 - RPT schedule for TDWC concurrent users test

Figure 13 - Optthru with 1.5 GB heap size

Figure 14 - Optthru with 3.0 GB heap size

Figure 15 - Subpool with 1.5 GB heap size

Figure 16 - Subpool with 3.0 GB heap size

Figure 17 - Gencon with 1.5 GB heap size

List of Tables

Table 1 – TDWC HW environment

Table 2 – TDWC SW environment

Table 3 – Dynamic scheduling scalability HW environment

Table 4 – Dynamic scheduling scalability SW environment

Table 5 – Cross Dependencies d-d HW environment

Table 6 – Cross Dependencies d-d SW environment

Table 7 – Cross Dependencies d-z HW environment

Table 8 – Cross Dependencies d-z SW environment

Table 9 – Agent for z/OS HW environment

Table 10 – Agent for z/OS SW environment

Table 11 – TDWC Dashboard HW environment

Table 12 – TDWC Dashboard SW environment

Table 13 – Cross dependencies z-z HW environment

Table 14 – Cross dependencies z-z SW environment

Table 15 – TDWC for z/OS Dashboard HW environment

Table 16 – TDWC for z/OS Dashboard SW environment

Table 17 – Cross dependencies z-d HW environment

Table 18 – Cross dependencies z-d SW environment

Table 19 - TDWC 8.6 vs. TDWC 8.5.1 % improvements


Revision History

| Date | Version | Revised By | Comments |
| --- | --- | --- | --- |
| 26/09/2011 | 1.0 | M.R. | Ready for editing review |
| 19/10/2011 | 1.1 | M.R. | Inserted comments after review |


1 Introduction

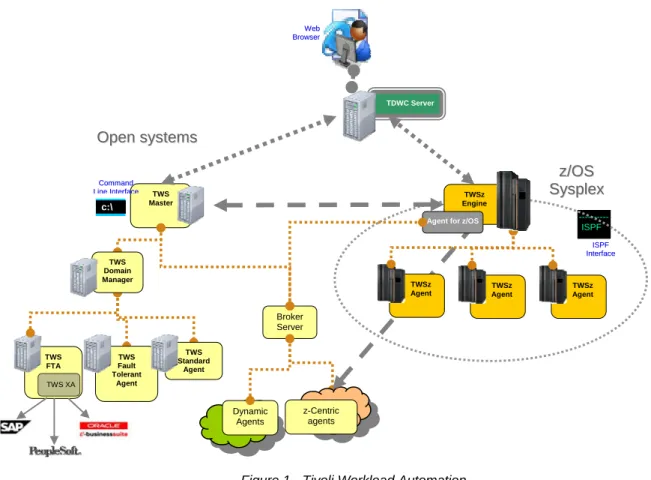

Tivoli Workload Automation is a state-of-the-art production workload manager, designed to help you meet your present and future data processing challenges. Its scope encompasses your entire enterprise information system, including heterogeneous environments.

Pressures on today's data processing environment are making it increasingly difficult to maintain the same level of service to customers. Many installations find that their batch window is shrinking. More critical jobs must be finished before the morning online work begins. Conversely, requirements for the integrated availability of online services during the traditional batch window put pressure on the resources available for processing the production workload.

Tivoli Workload Automation simplifies systems management across heterogeneous environments by integrating systems management functions. There are three main components in the portfolio:

Tivoli Workload Scheduler for z/OS: the scheduler in z/OS® environments

Tivoli Workload Scheduler: the scheduler in distributed environments

Dynamic Workload Console: a web-based, graphical user interface for both Tivoli Workload Scheduler for z/OS and Tivoli Workload Scheduler

Depending on the customer's business needs or organizational structure, Tivoli Workload Automation distributed and z/OS components can be combined to provide a completely distributed scheduling environment, a completely z/OS environment, or a “mixed” z/OS and distributed environment. With Tivoli Workload Scheduler for Applications, it can also manage workload on non-Tivoli Workload Scheduler platforms and ERP applications.


Figure 1 - Tivoli Workload Automation

2 Scope

This document presents results of the Tivoli Workload Automation V8.6 performance tests. In particular, it covers the following components, which are highlighted in red in Figure 2:

Tivoli Workload Scheduler cross dependencies in mixed and homogeneous environments

Tivoli Dynamic Workload Console performance tests and concurrent users support

Tivoli Workload Scheduler dynamic scheduling scalability

Tivoli Workload Scheduler agent for z/OS


Figure 2 - Tivoli Workload Automation performance tests

Tivoli Workload Scheduler V8.6 for z/OS

Cross dependencies in mixed environments: z/OS engine --> distributed engine

The performance objective is to demonstrate that the run time of 200,000 jobs with 5000 cross-dependencies is comparable to (no more than 5% longer than) the run time of 200,000 jobs with 5000 dependencies on the same engine. The baseline for this target is the current run time of 200,000 jobs with 5000 dependencies on the same engine.
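The 5% criterion can be expressed as a simple check. The following is a minimal Python sketch, assuming run times are measured in seconds; the function name and the sample timings are hypothetical and are not measured results from these tests.

```python
def within_tolerance(baseline_seconds: float, measured_seconds: float,
                     tolerance: float = 0.05) -> bool:
    """Return True when the measured run time is at most `tolerance` longer than the baseline."""
    if baseline_seconds <= 0:
        raise ValueError("baseline must be positive")
    return measured_seconds <= baseline_seconds * (1 + tolerance)


# Hypothetical timings, for illustration only.
print(within_tolerance(3600.0, 3700.0))  # True: about 2.8% longer than the baseline
print(within_tolerance(3600.0, 3900.0))  # False: about 8.3% longer than the baseline
```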

Cross dependencies: z/OS engine --> z/OS engine

To define a cross-dependency between a job and a remote job, the user must create a shadow job representing the matching criteria that identifies the remote job. When the remote engine evaluates the matching criteria it sends the ID of the matching job to the source engine. This information is reported in the plan and can be verified by the user using user interfaces. The performance objective is to demonstrate that Tivoli Workload Scheduler can match and report into the plan the IDs of 1000 remote jobs in less than 10 minutes.

Tivoli Dynamic Workload Console for z/OS Dashboard feature


Performance objective: with 2 engines managed and 100,000 jobs in plan, the Dashboard response time should improve by 10% compared with the same feature in Tivoli Dynamic Workload Console V8.5.1.

Tivoli Workload Scheduler V8.6

Tivoli Dynamic Workload Console Dashboard performance improvement

The Tivoli Dynamic Workload Console dashboard response time should improve by about 10% compared with the same feature in Tivoli Dynamic Workload Console V8.5.1.

Tivoli Dynamic Workload Console Graphical Plan View performance

The Tivoli Dynamic Workload Console graphical Plan View must be able to show a plan view containing hundreds of nodes and links within 1 minute. A typical plan view containing 1000 job streams with 300 dependencies must be shown within 30 seconds.

Cross dependencies in mixed environment: Distributed engine --> z/OS engine

The performance objective is to demonstrate that the run time of 200,000 jobs with 5000 cross-dependencies is comparable to (no more than 5% longer than) the run time of 200,000 jobs with 5000 dependencies on the same engine. The baseline for this target is the current run time of 200,000 jobs with 5000 dependencies on the same engine.

Cross Dependencies: Distributed engine --> Distributed engine

To define a cross-dependency between a job and a remote job, the user must create a shadow job representing the matching criteria that identifies the remote job. When the remote engine evaluates the matching criteria it sends the ID of the matching job to the source engine. This information is reported in the plan and can be verified by the user using user interfaces. The performance objective is to demonstrate that Tivoli Workload Scheduler can match and report into the plan the IDs of 1000 remote jobs in less than 10 minutes.

Agent for z/OS

This solution provides a new type of agent (called Agent for z/OS) that enables Tivoli Workload Scheduler for distributed users to schedule and control jobs on the z/OS platform. The objective is to demonstrate that Tivoli Workload Scheduler can manage a workload of 50,000 z/OS jobs in one production day (24 hrs).

Dynamic scheduling scalability

The performance objective is to have Tivoli Workload Scheduler dynamically manage a heavy workload (100,000 jobs) running on hundreds of Tivoli Workload Scheduler Dynamic Agents.


Performance objective: Tivoli Dynamic Workload Console V8.6 must be better than Tivoli Dynamic Workload Console V8.5.1.

Tivoli Dynamic Workload Console login

The performance objective is that Tivoli Dynamic Workload Console must be able to support hundreds of concurrent logins.

Tivoli Dynamic Workload Console concurrent users

The performance objective is that Tivoli Dynamic Workload Console must be able to support hundreds of concurrent users, with some of them using graphical views.

3 HW and SW configuration

This chapter covers all the aspects used to build the test environment: topology, hardware, software and scheduling environment. It is split into two subsections: one for distributed tests and one for z/OS tests.

3.1 Distributed test environment configuration

For distributed tests, six different environments were created.

Figure 3 shows the topology used to build the environment to perform specific Tivoli Dynamic Workload Console-based tests: graphical view, concurrent users. This will be referred to as ENV1.


In the following tables you can find hardware (Table 1) and software (Table 2) details of all the machines used in the above-mentioned test environment.

| ENV 1 | PROCESSOR | MEMORY |
| --- | --- | --- |
| TWS server | 4 X Intel® Xeon™ CPU 3.80 GHz | 5 GB |
| DB server | 4 x Dual-Core AMD Opteron(tm) Processor 2216 HE | 3 GB |
| TDWC Server | 4 X 2 PowerPC_POWER5 1656 MHz | 4 GB |
| RPT Server | 2 x Dual Core Intel Xeon 1.86 GHz | 12 GB |
| RPT Agent | 2 x Dual Core Intel Xeon 1.60 GHz | 8 GB |

Table 1 – TDWC HW environment

| ENV 1 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS server | RHEL 5.1 (2.6.18-53.el5PAE) | TWS V8.6 |
| DB server | SLES 11 (2.6.27.19-5-default) | DB2: 9.7.0.2 |
| TDWC Server | AIX 5.3 ML 08 | TDWC V8.6 |
| RPT Server | Win 2K3 Server sp2 | RPT 8.2 |
| RPT Agent | RHEL 5.1 | RPT 8.2 |
| Browser | Win 2K3 Server sp2 | Firefox 3.6.17 |

Table 2 – TDWC SW environment

For Tivoli Dynamic Workload Console scalability and performance test scenarios, the scheduling environment was composed of:

100,000 jobs

5000 job streams, each containing 20 jobs

180,000 follows dependencies between job streams (with an average of 36 dependencies per job stream)
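As a quick consistency check of these figures, the following minimal Python sketch recomputes the totals from the per-job-stream values. The variable names are illustrative only.

```python
# Figures taken from the scheduling environment described above.
job_streams = 5000
jobs_per_stream = 20
follows_dependencies = 180_000

total_jobs = job_streams * jobs_per_stream
average_dependencies = follows_dependencies / job_streams

print(total_jobs)            # 100000
print(average_dependencies)  # 36.0
```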

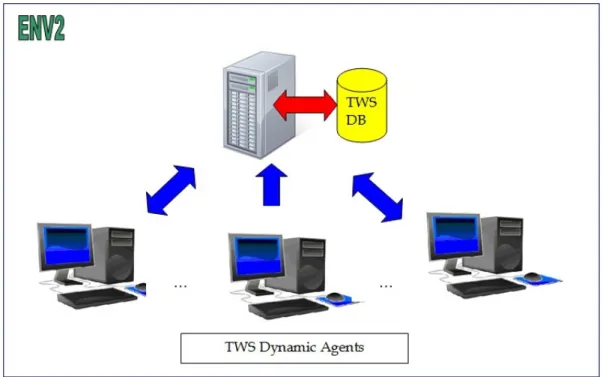

Figure 4 shows the topology used to build the environment to perform Broker scalability tests. This will be referred to as ENV2.


Figure 4 – Dynamic scheduling scalability environment

In the following tables you can find hardware (Table 3) and software (Table 4) details of all the machines used in the above-mentioned test environment.

| ENV 2 | PROCESSOR | MEMORY |
| --- | --- | --- |
| TWS + DB server | 4 x Intel® Xeon® CPU X7460 2.66GHz | 10 GB |
| TWS Dynamic Agents | 7 x (4 x Intel® Xeon® CPU X7460 2.66GHz) | 7 x 10 GB |

Table 3 – Dynamic scheduling scalability HW environment

| ENV 2 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS + DB server | AIX 6.1 ML 02 | TWS V8.6 + DB2 9.7.0.2 |
| TWS Dynamic Agents | Win 2K3 Server sp2 | TWS V8.6 |

Table 4 – Dynamic scheduling scalability SW environment

For Dynamic scheduling scalability scenarios the scheduling environment was composed of 100,000 jobs defined in 1000 job streams following this schema:

10,000 heavy jobs in 100 job streams with AT dependency every 5 minutes

40,000 medium jobs in 400 job streams with AT dependency every 3 minutes


Jobs and job streams are all defined in a dynamic pool (hosted by broker workstation) containing all the agents of the environment.

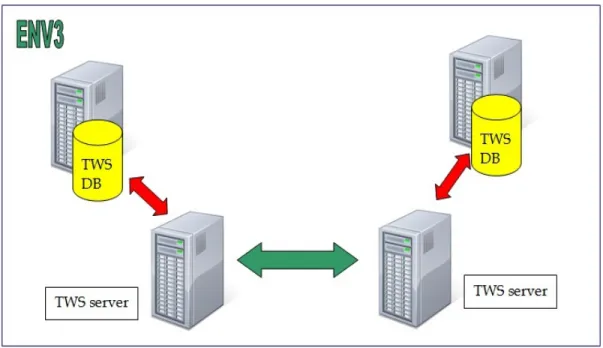

Figure 5 shows the topology used to build the environment to perform specific cross dependencies tests: Tivoli Workload Scheduler distributed -> Tivoli Workload Scheduler distributed. This will be referred to as ENV3.

Figure 5 - Cross Dependencies d-d environment

In the following tables you can find hardware (Table 5) and software (Table 6) details of all the machines used in the above-mentioned test environment.

| ENV 3 | PROCESSOR | MEMORY |
| --- | --- | --- |
| DB server | 4 x Dual-Core AMD Opteron(tm) Processor 2216 HE | 6 GB |
| TWS Server | 4 X Intel® Xeon™ CPU 3.80 GHz | 5 GB |
| TWS Server | 4 X Intel® Xeon™ CPU 2.80 GHz | 8 GB |

Table 5 – Cross Dependencies d-d HW environment

| ENV 3 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| DB server | SLES 11 (2.6.27.19-5-default) | DB2: 9.7.0.2 |
| TWS Server | RHEL 5.1 (2.6.18.53.el5PAE) | TWS V8.6 |
| TWS Server | SLES 10.1 (2.6.16.46-0.12-bigsmp) | TWS V8.6 |

Table 6 – Cross Dependencies d-d SW environment

For cross dependencies there are two different scheduling environments. For cross dependencies in homogeneous environments the scheduling environment is simple:


1000 jobs defined on each engine and 1000 cross dependencies between them. For Cross dependencies z-z, Tivoli Workload Scheduler current plan on the first z/OS system contains 1000 shadow jobs that refer to jobs of Tivoli Workload Scheduler current plan on the second z/OS system.

Figure 6 shows the topology used to build the environment to perform specific Cross Dependencies in a mixed environment tests: Tivoli Workload Scheduler distributed -> Tivoli Workload Scheduler for z/OS. This will be referred to as ENV4.

Figure 6 - Cross Dependencies d-z environment

In the following tables you can find hardware (Table 7) and software (Table 8) details of all the machines used in the above mentioned test environment.

| ENV 4 | PROCESSOR | MEMORY |
| --- | --- | --- |
| DB server | 4 x Dual-Core AMD Opteron(tm) Processor 2216 HE | 6 GB |
| TWS Server | 4 X Intel® Xeon™ CPU 3.80 GHz | 5 GB |
| TWS FTA | 4 X Intel® Xeon™ CPU 2.80 GHz | 8 GB |
| TWS FTA | 4 X Intel® Xeon™ CPU 2.66 GHz | 8 GB |
| TWS for z/OS | CMOS z900 2064-109 - 2 dedicated CPU | 6 GB |

Table 7 – Cross Dependencies d-z HW environment

| ENV 4 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS Server | RHEL 5.1 (2.6.18.53.el5PAE) | TWS V8.6 |
| TWS FTA | SLES 10.1 (2.6.16.46-0.12-bigsmp) | TWS V8.6 |
| TWS FTA | RHEL 5.1 (2.6.18.53.el5PAE) | TWS V8.6 |
| TWS for z/OS | z/OS 1.11 | TWS for z/OS V8.6 |

Table 8 – Cross Dependencies d-z SW environment

For cross dependencies in a mixed environment:

200,000 jobs in plan defined on the local engine (5000 jobs defined on master, 195,000 jobs defined on two Fault Tolerant Agents), 5000 jobs defined on remote engine and 5000 cross dependencies.

Master CPU and Remote Engine limit = 200

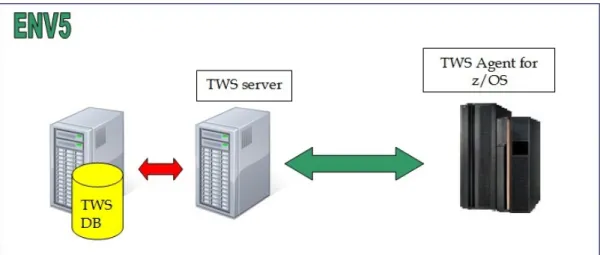

Figure 7 shows the topology used to build the environment to perform specific TWS Agent for z/OS tests. This will be referred to as ENV5.

Figure 7 – Agent for z/OS environment

In the following tables you can find hardware (Table 9) and software (Table 10) details of all the machines used in the above-mentioned test environment.

| ENV 5 | PROCESSOR | MEMORY |
| --- | --- | --- |
| DB server | 4 x Dual-Core AMD Opteron(tm) Processor 2216 HE | 6 GB |
| TWS Server | 4 X Intel® Xeon™ CPU 3.80 GHz | 5 GB |
| TWS for z/OS | CMOS z900 2064-109 - 2 dedicated CPU | 6 GB |

Table 9 – Agent for z/OS HW environment

| ENV 5 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS Server | RHEL 5.1 (2.6.18.53.el5PAE) | TWS V8.6 |
| TWS for z/OS | z/OS 1.11 | TWS for z/OS V8.6 |

Table 10 – Agent for z/OS SW environment

For Agent for z/OS tests there are different scheduling environments. The first one contains 50,000 jobs distributed in 1440 job streams in the following way:

15 jobs with duration of 1 second

15 jobs with duration of 30 seconds

5 jobs with duration of 1 minute

The other environments always consist of 1440 job streams with different numbers of jobs up to 180,000.
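The first environment's job count follows from the per-job-stream composition. The sketch below is a minimal Python check, assuming (as the list above implies but does not state outright) that each of the 1440 job streams contains all three groups of jobs; the variable names are illustrative.

```python
# Assumption: every job stream holds 15 + 15 + 5 jobs, as listed above.
job_streams = 1440  # equal to the number of minutes in 24 hours
jobs_by_duration = {"1 second": 15, "30 seconds": 15, "1 minute": 5}

jobs_per_stream = sum(jobs_by_duration.values())
total_jobs = job_streams * jobs_per_stream

print(jobs_per_stream)  # 35
print(total_jobs)       # 50400, that is, roughly the 50,000 jobs quoted
```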

The last environment, shown in Figure 8 (not owned by the performance team), is used for Tivoli Dynamic Workload Console Dashboard tests and will be referred to as ENV6.

Figure 8 - TDWC Dashboard environment

In the following tables you can find hardware (Table 11) and software (Table 12) details of all the machines used in the above-mentioned test environment.

| ENV 6 | PROCESSOR | MEMORY |
| --- | --- | --- |
| TWS + DB server | 4 x Intel® Xeon® CPU X7460 2.66GHz | 3 GB |
| TWS + DB server | 4 X Intel® Pentium® IV 3.0 GHz | 3 GB |
| TDWC Server | 4 X Intel® Pentium® IV 3.0 GHz | 2 GB |

Table 11 – TDWC Dashboard HW environment

| ENV 6 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS + DB server | Win 2K8 Server sp1 | TWS V8.6 + DB2 9.7.0.2 |
| TWS + DB server | Win 2K3 Server sp2 | TWS V8.6 + DB2 9.7.0.2 |
| TDWC Server | Win 2K3 Server sp2 | TDWC 8.6 |
| Browser | Win 2K3 Server sp2 | Firefox 3.6.17 |

Table 12 – TDWC Dashboard SW environment

For Tivoli Dynamic Workload Console Dashboard tests the scheduling environment consisted of 4 different plans: 3 plans of 25,000 jobs and 1 plan of 100,000 jobs.

3.2 z/OS test environment configuration

For z/OS tests, three different environments were built.

Figure 9 shows the topology used to build the environment to perform Cross dependencies tests: Tivoli Workload Scheduler for z/OS -> Tivoli Workload Scheduler for z/OS. This will be referred to as ENV7.

Figure 9 – Cross dependencies z-z environment

In the following tables you can find hardware (Table 13) and software (Table 14) details of all the machines used in the above-mentioned test environment.

| ENV 7 | PROCESSOR | MEMORY |
| --- | --- | --- |
| TWS for z/OS | CMOS z900 2064-109 - 2 dedicated CPU | 6 GB |
| TWS for z/OS | CMOS z9 2096-BC - 1 dedicated CPU | 8 GB |

Table 13 – Cross dependencies z-z HW environment

| ENV 7 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS for z/OS | z/OS 1.11 | TWS for z/OS V8.6 |
| TWS for z/OS | z/OS 1.11 | TWS for z/OS V8.6 |

Table 14 – Cross dependencies z-z SW environment

For Cross dependencies z-z, Tivoli Workload Scheduler current plan on the first z/OS system contains 1000 shadow jobs that refer to jobs of Tivoli Workload Scheduler current plan on the second z/OS system. All the Tivoli Workload Scheduler jobs on the second z/OS system are time dependent or in a “waiting state”.

Figure 10 shows the topology used to build the environment to perform Tivoli Dynamic Workload Console Dashboard for z/OS tests. This will be referred to as ENV8.

Figure 10 - TDWC for z/OS Dashboard environment

In the following tables you can find hardware (Table 15) and software (Table 16) details of all the machines used in the above mentioned test environment.

| ENV 8 | PROCESSOR | MEMORY |
| --- | --- | --- |
| TWS for z/OS | CMOS z900 2064-109 - 2 dedicated CPU | 6 GB |
| TWS for z/OS | CMOS z9 2096-BC - 1 dedicated CPU | 8 GB |
| TDWC Server + z/OS connector | 4 X 2 PowerPC_POWER5 1656 MHz | 4 GB |

Table 15 – TDWC for z/OS Dashboard HW environment

| ENV 8 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| TWS for z/OS | z/OS 1.11 | TWS for z/OS V8.6 |
| TDWC Server + z/OS connector | AIX 5.3 ML 08 | TDWC V8.6 |
| Browser | Win 2K3 Server sp2 | Firefox 3.6.17 |

Table 16 – TDWC for z/OS Dashboard SW environment

For the Tivoli Dynamic Workload Console for z/OS dashboard there are two identical scheduling environments on the z/OS systems involved, and both production plans contain:

100,000 jobs

100 workstations

Jobs by status: 51200 complete, 19800 error, 290 ready, and 28710 waiting.

Figure 11 shows the topology used to build the environment to perform specific Cross Dependencies tests: Tivoli Workload Scheduler for z/OS -> Tivoli Workload Scheduler distributed. This will be referred to as ENV9.

Figure 11 – Cross dependencies z-d environment

In the following tables you can find hardware (Table 17) and software (Table 18) details of all the machines used in the above-mentioned test environment.

| ENV 9 | PROCESSOR | MEMORY |
| --- | --- | --- |
| DB server | 4 x Dual-Core AMD Opteron(tm) Processor 2216 HE | 6 GB |
| TWS Server | 4 X Intel® Xeon™ CPU 3.80 GHz | 5 GB |
| TWS FTA | 4 X Intel® Xeon™ CPU 2.66 GHz | 8 GB |
| TWS for z/OS | CMOS z900 2064-109 - 2 dedicated CPU | 6 GB |

Table 17 – Cross dependencies z-d HW environment

| ENV 9 | OS TYPE | SOFTWARE |
| --- | --- | --- |
| DB server | SLES 11 (2.6.27.19-5-default) | DB2: 9.7.0.2 |
| TWS Server | RHEL 5.1 (2.6.18.53.el5PAE) | TWS 8.6 |
| TWS FTA | SLES 10.1 (2.6.16.46-0.12-bigsmp) | TWS 8.6 |
| TWS FTA | RHEL 5.1 (2.6.18.53.el5PAE) | TWS 8.6 |
| TWS for z/OS | z/OS 1.11 | TWS for z/OS 8.6 |

Table 18 – Cross dependencies z-d SW environment

For cross dependencies z-d the Tivoli Workload Scheduler current plan contains 200,000 jobs with the following characteristics:

190,000 jobs that have no external predecessors

5000 jobs that have external predecessors

5000 shadow jobs (predecessors of the previous jobs) that point to Tivoli Workload Scheduler distributed jobs

Tivoli Workload Scheduler for z/OS configuration: 1 Controller + 2 Trackers on the same system, but only one Tracker tracks the events. Tivoli Workload Scheduler Master CPU and Remote Engine limit = 200.

3.3 Test tools

For z/OS environment: Resource Measurement Facility (RMF) tool.

RMF is IBM's strategic product for z/OS performance measurement and management. It is the base product to collect performance data for z/OS and sysplex environments to monitor system performance behavior and allow you to optimally tune and configure your system according to related business needs. The RMF data for Tivoli Workload Scheduler for z/OS has been collected using the Monitor III functionality.

For Tivoli Dynamic Workload Console specific tests: IBM Rational Performance Tester 8.2 and Performance Verification Tool (PVT), an internal tool.

The PVT tool automates single-user performance test scenarios. It uses the J2SE Robot class to programmatically generate system events such as keystrokes and mouse movements and to capture the screen. PVT extends the Robot class with coarse-grained APIs that run, in any browser, scripts simulating user interaction with the Tivoli Dynamic Workload Console, and that record the response or completion time of any interaction step by analyzing color changes in screen pixels. Once configured and tuned for the target test system, PVT makes it easy to rerun single-user performance tests automatically, to monitor how fast each interaction step with the Tivoli Dynamic Workload Console is, and to log response times to a specified file.

For performance monitoring on distributed servers: nmon, top, topas, ps, svmon.

4 Results

This chapter contains all the results obtained during the performance test phase. It is split into two sections: one for distributed test results and one for z/OS test results.

4.1 z/OS test results

This section presents the results of the z/OS performance scenarios described in Chapter 2. Detailed test scenarios and results have been grouped into three subsections, one for each performance objective.

4.1.1 Cross dependencies in mixed environment TWSz -> TWSd

Scenarios:

1. Run a plan of 200,000 jobs with 5000 external traditional dependencies between 10,000 non-trivial jobs defined on Tivoli Workload Scheduler for z/OS controller (baseline Tivoli Workload Scheduler for z/OS V8.5.1).

2. Run a plan of 200,000 jobs with 5000 cross dependencies between 10,000 non-trivial jobs defined on a remote distributed engine.

Objective: dependency resolution time should be equal to the baseline or no more than 5% worse.

Results:

All scenarios completed successfully. Performance objective has been reached. CPU usage and memory consumption have been collected during the running of both scenarios and no issues occurred.

Tuning and configuration

All scenarios run on ENV9.

Operating systems changes:

Set ulimit to unlimited (physical memory for Java process)

Init parameter MAXECSA (500)

Embedded Application server (eWAS) changes:

Resource.xml file

Connection pool for "DB2 Type 4 JDBC Provider": maxConnections="50"

Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"

Database DB2 changes:

All the tests have been run using a dedicated database server machine. Database DB2 has been configured with the following tuning modifications, to improve and optimize performance:

Log file size (4KB) (LOGFILSIZ) = 10000

Number of primary log files (LOGPRIMARY) = 80

Number of secondary log files (LOGSECOND) = 40
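To gauge how much disk space these log settings can claim, the following minimal Python sketch multiplies the log file size (expressed in 4 KB pages, as noted above) by the number of log files. It ignores any per-file header overhead, and the function and variable names are illustrative only.

```python
PAGE_SIZE_BYTES = 4096  # LOGFILSIZ is expressed in 4 KB pages


def log_space_bytes(logfilsiz_pages: int, log_files: int) -> int:
    """Approximate space used by `log_files` log files of `logfilsiz_pages` pages each."""
    return logfilsiz_pages * PAGE_SIZE_BYTES * log_files


LOGFILSIZ, LOGPRIMARY, LOGSECOND = 10000, 80, 40

primary_bytes = log_space_bytes(LOGFILSIZ, LOGPRIMARY)
total_bytes = log_space_bytes(LOGFILSIZ, LOGPRIMARY + LOGSECOND)

print(f"{primary_bytes / 2**30:.2f} GiB")  # 3.05 GiB for primary log files
print(f"{total_bytes / 2**30:.2f} GiB")    # 4.58 GiB if all secondary files are also allocated
```

The database server therefore needs roughly 4.6 GiB of free log space for this configuration under the stated assumptions.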

4.1.2 Cross dependencies in homogeneous environment TWSz -> TWSz

Scenario:

1. Define 1000 cross-engine job dependencies and measure the elapsed time between the creation of the new plan (and its being put into production) and the receipt of the matching remote job IDs.

Objective: the elapsed time should be less than 10 minutes.

Test scenario completed successfully. The performance objective was clearly exceeded.

Tuning and configuration

All scenarios run on ENV7.

Operating systems changes:

250 initiators defined on the system and 200 used by Tivoli Workload Scheduler


4.1.3 Tivoli Dynamic Workload Console for z/OS Dashboard

Scenarios:

1. Tivoli Dynamic Workload Console V8.5.1 Dashboard with 2 engines managed and 100,000 jobs in plan

2. Tivoli Dynamic Workload Console V8.6 Dashboard with 2 engines managed and 100,000 jobs in plan

Objective: Tivoli Dynamic Workload Console V8.6 Dashboard response time should be improved by 10% against Tivoli Dynamic Workload Console V8.5.1.

Results:

All scenarios completed successfully. The 10% objective was far exceeded: response time improved by 80%.
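The source does not state how the improvement percentage is computed. The minimal Python sketch below assumes the usual definition, the relative reduction in response time between the two versions. The function names and the sample timings are hypothetical, not measured values.

```python
def improvement_percent(old_seconds: float, new_seconds: float) -> float:
    """Relative reduction in response time, as a percentage of the old value."""
    if old_seconds <= 0:
        raise ValueError("old response time must be positive")
    return 100 * (old_seconds - new_seconds) / old_seconds


def meets_objective(old_seconds: float, new_seconds: float,
                    target_percent: float = 10.0) -> bool:
    """True when the improvement reaches at least `target_percent`."""
    return improvement_percent(old_seconds, new_seconds) >= target_percent


# Hypothetical response times, for illustration only.
print(improvement_percent(50.0, 10.0))  # 80.0
print(meets_objective(50.0, 10.0))      # True
print(meets_objective(50.0, 47.0))      # False: only a 6% improvement
```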

Tuning and configuration

All scenarios run on ENV8.

Operating systems changes:

250 initiators defined on the system and 200 used by Tivoli Workload Scheduler

Init parameter MAXECSA (500)

4.2 Distributed test results

This section presents the results of the distributed performance scenarios described in Chapter 2. Detailed test scenarios and results have been grouped into two subsections, one for Tivoli Workload Scheduler engine and one for Tivoli Dynamic Workload Console scenarios.

4.2.1 Cross Dependencies in mixed environment TWSd -> TWSz

Scenarios:

1. Run a plan of 200,000 jobs with 5000 external traditional dependencies between 10,000 non-trivial jobs defined on the Tivoli Workload Scheduler engine (baseline Tivoli Workload Scheduler V8.5.1).

2. Run a plan of 200,000 jobs with 5000 cross dependencies between 10,000 non-trivial jobs defined on remote distributed engine.

Objective: dependency resolution time should be equal to the baseline or no more than 5% worse.


Results:

All scenarios completed successfully. Performance objective has been reached. CPU usage and memory consumption were collected during the running of both scenarios and no issues occurred.

Tuning and configuration

All scenarios run on ENV4.

Operating systems changes:

Set ulimit to unlimited (physical memory for Java process)

250 initiators defined on the system and 200 used by Tivoli Workload Scheduler

Init parameter MAXECSA (500)

Embedded Application server (eWAS) changes:

Resource.xml file

Connection pool for "DB2 Type 4 JDBC Provider": maxConnections="50"

Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"

Database DB2 changes:

All the tests have been run using a dedicated database server machine. Database DB2 has been configured with the following tuning modifications, to improve and optimize performance:

Log file size (4KB) (LOGFILSIZ) = 10000

Number of primary log files (LOGPRIMARY) = 80

Number of secondary log files (LOGSECOND) = 40

4.2.2 Cross Dependencies in homogeneous environment TWSd -> TWSd

Scenario:

1. Define 1000 cross-engine job dependencies and measure the elapsed time between the creation of the new plan (and its being put into production) and the receipt of the matching remote job IDs.

Objective: the elapsed time should be less than 10 minutes.

Results:

Test scenario completed successfully. The performance objective was clearly exceeded.

Tuning and configuration

All scenarios run on ENV3.

4.2.3 Agent for z/OS

Scenarios:

1. Schedule a workload of about 50,000 jobs in 24 hours on a single agent with 1,000,000 events (Note: events are not related to 50,000 jobs submitted from distributed engine, but are generated from other jobs scheduled/running on the z side)

1.a Happy path - All jobs completed successfully (no workload on distributed side)

1.b Alternative flow - Introduced some error condition (no workload on distributed side)

1.c Alternative flow - All jobs completed successfully (workload on distributed side)

2. Search for the breaking point, starting from 36 jobs/min and increasing up to the system limit

Objective: Workload supported.

Results:

All scenarios completed successfully. Moreover, the system can successfully complete up to 125 jobs per minute, that is, 180,000 jobs in 24 hours. CPU usage and memory consumption were collected while all scenarios ran, and no issues occurred.
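The conversion between a per-minute submission rate and a daily workload can be checked with the following minimal Python sketch. It assumes a constant rate sustained over the whole 24-hour production day; the function name is illustrative.

```python
MINUTES_PER_DAY = 24 * 60  # 1440


def jobs_per_day(jobs_per_minute: int) -> int:
    """Daily workload for a constant per-minute rate sustained over 24 hours."""
    return jobs_per_minute * MINUTES_PER_DAY


print(jobs_per_day(36))   # 51840: the starting rate of scenario 2, about 50,000 jobs per day
print(jobs_per_day(125))  # 180000: the highest rate completed successfully
```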

Tuning and configuration

All scenarios run on ENV5.

Operating systems changes:

Set ulimit to unlimited (physical memory for Java process)

250 initiators defined on the system and 200 used by Tivoli Workload Scheduler

Init parameter MAXECSA (500)

Embedded Application server (eWAS) changes:

Resource.xml file

Connection pool for "DB2 Type 4 JDBC Provider": maxConnections="50"

Server.xml file

JVM entries: initialHeapSize="2048" maximumHeapSize="3096"

Database DB2 changes:

All the tests have been run using a dedicated database server machine. Database DB2 has been configured with the following tuning modifications, to improve and optimize performance:

Log file size (4KB) (LOGFILSIZ) = 10000

Number of primary log files (LOGPRIMARY) = 80

Number of secondary log files (LOGSECOND) = 40

4.2.4 Dynamic scheduling scalability

Scenarios:

1. Schedule a workload of 100,000 jobs to be distributed on 100 agents in 8 hours

2. Schedule a workload of 100,000 jobs to be distributed on 500 agents in 8 hours

Objective: workloads supported.
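To give a sense of scale for these two scenarios, the minimal Python sketch below derives the average submission rate and the average number of jobs per agent. It assumes the workload is spread evenly over the 8 hours and over the agents, which the source does not state; the names are illustrative.

```python
TOTAL_JOBS = 100_000
WINDOW_MINUTES = 8 * 60

# Average rate the server side must sustain over the 8-hour window.
required_jobs_per_minute = TOTAL_JOBS / WINDOW_MINUTES


def jobs_per_agent(agents: int) -> float:
    """Average number of jobs each agent runs, assuming an even distribution."""
    return TOTAL_JOBS / agents


print(f"{required_jobs_per_minute:.1f}")  # 208.3 jobs per minute
print(jobs_per_agent(100))                # 1000.0
print(jobs_per_agent(500))                # 200.0
```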

Results:

All scenarios completed successfully. CPU usage and memory consumption of server side were collected during the running of all scenarios and no issues occurred.

Tuning and configuration

All scenarios run on ENV2.

Operating systems changes:

Set ulimit to unlimited (physical memory for Java process)

Embedded Application server (eWAS) changes:

Resource.xml file

Connection pool for "DB2 Type 4 JDBC Provider": maxConnections="150"

Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"


All the tests have been run using a dedicated database server machine. Database DB2 has been configured with the following tuning modifications, to improve and optimize performance:

Log file size (4KB) (LOGFILSIZ) = 10000

Number of primary log files (LOGPRIMARY) = 80

Number of secondary log files (LOGSECOND) = 40

Tivoli Workload Scheduler side changes:

In the JobDispatcherConfig.properties file, add the following sections to override default values:

# Override hidden settings in JDEJB.jar
Queue.actions.0 = cancel, cancelAllocation, cancelOrphanAllocation
Queue.size.0 = 10
Queue.actions.1 = reallocateAllocation
Queue.size.1 = 10
Queue.actions.2 = updateFailed
Queue.size.2 = 10
# Relevant to jobs submitted from Tivoli Workload Scheduler bridge, when successful
Queue.actions.3 = completed
Queue.size.3 = 30
Queue.actions.4 = execute
Queue.size.4 = 30
Queue.actions.5 = submitted
Queue.size.5 = 30
Queue.actions.6 = notification
Queue.size.6 = 30

These overrides are needed because the default behavior uses 3 queues for all the actions. In our tests, at least 7 queues were needed, each with its own size.
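Before deploying an override like this, it can help to check that every queue has both an action list and a size, and that no action is assigned to two queues. The sketch below does that on a copy of the settings above; the parser and function names are illustrative and are not part of Tivoli Workload Scheduler.

```python
CONFIG = """\
Queue.actions.0 = cancel, cancelAllocation, cancelOrphanAllocation
Queue.size.0 = 10
Queue.actions.1 = reallocateAllocation
Queue.size.1 = 10
Queue.actions.2 = updateFailed
Queue.size.2 = 10
Queue.actions.3 = completed
Queue.size.3 = 30
Queue.actions.4 = execute
Queue.size.4 = 30
Queue.actions.5 = submitted
Queue.size.5 = 30
Queue.actions.6 = notification
Queue.size.6 = 30
"""


def parse_queues(text: str) -> dict[int, dict]:
    """Parse Queue.actions.N / Queue.size.N lines into {N: {...}}."""
    queues: dict[int, dict] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments
        key, _, value = line.partition("=")
        _, field, index = key.strip().split(".")
        entry = queues.setdefault(int(index), {})
        if field == "actions":
            entry["actions"] = [a.strip() for a in value.split(",")]
        elif field == "size":
            entry["size"] = int(value)
    return queues


def check_queues(queues: dict[int, dict]) -> int:
    """Validate the queue definitions and return the sum of the sizes."""
    seen: set[str] = set()
    for index in sorted(queues):
        entry = queues[index]
        if "actions" not in entry or "size" not in entry:
            raise ValueError(f"queue {index} is incomplete")
        for action in entry["actions"]:
            if action in seen:
                raise ValueError(f"action {action!r} assigned twice")
            seen.add(action)
    return sum(entry["size"] for entry in queues.values())


queues = parse_queues(CONFIG)
print(len(queues), check_queues(queues))  # 7 150
```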

In ResourceAdvisorConfig.properties file add the following sections:

#To speed up resource advisor
TimeSlotLength=10
MaxAllocsPerTimeSlot=1000
MaxAllocsInCache=50000

These settings improve broker performance in managing jobs.

Set broker CPU limit to SYS (that is unlimited)

This is needed because the notification rate is slower than the submission rate, so the job dispatcher threads queue up waiting for notification status. As a result, the maximum Tivoli Workload Scheduler limit (1024) is easily reached even if the queues are separate.


4.2.5 Tivoli Dynamic Workload Console Dashboard

Scenarios:

1. Tivoli Dynamic Workload Console V8.5.1 Dashboard with 4 engines managed + 1 disconnected. Plan dimensions: 100,000 jobs for one engine and 25,000 jobs for the rest of them.

2. Tivoli Dynamic Workload Console V8.6 Dashboard with 4 engines managed + 1 disconnected. Plan dimensions: 100,000 jobs for one engine and 25,000 jobs for the rest of them.

Objective: Response time is the interval between the time instant when the user clicks to launch the Dashboard feature, and the completion time instant, that is when the server has completely returned a full response to the user. Response time should be improved by 10%.

Results:

All scenarios completed successfully. Each scenario was run 10 times. Response times improved by about 74%, well above the 10% objective.
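The document does not spell out how the improvement percentage is computed. A common reading, assumed here, is the relative reduction in response time against the baseline. The sample timings in the sketch are hypothetical and are not measured values from these tests.

```python
def improvement_pct(baseline_s: float, new_s: float) -> float:
    """Relative reduction of response time versus the baseline, in percent."""
    if baseline_s <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_s - new_s) / baseline_s * 100


# Hypothetical timings (seconds), chosen only to illustrate the formula.
baseline, new = 10.0, 2.6
print(f"{improvement_pct(baseline, new):.0f}%")  # 74%
```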

Tuning and configuration

All scenarios run on ENV8.

Embedded Application server (eWAS) changes:

Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"

4.2.6 Tivoli Dynamic Workload Console Graphical plan view

Scenarios:

1. Tivoli Dynamic Workload Console V8.6 Graphical view of a plan containing 1000 job streams and 300 dependencies

Objective: Response time is the interval between the time instant when the user clicks to launch the Plan view task, and the completion time instant, that is when the server has completely returned a full response to the user. Response time should be < 30 seconds.

Results:

Test scenario completed successfully. The performance objective was exceeded.

Tuning and configuration

All scenarios run on ENV1.


Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"

4.2.7 Tivoli Dynamic Workload Console single user response times

Tivoli Dynamic Workload Console V8.6 single user response time test activities consist of testing the response time of significant interaction steps between a single user and the Tivoli Dynamic Workload Console server.

Response time is the interval between the time instant when the user clicks (or performs any triggering action) to launch a particular task, and the completion time instant, that is when the server has completely returned a full response to the user. The test is executed with just a single user interacting with the server, to measure how fast and reactive the server is in providing the results under normal, healthy conditions. Data has been compared to Tivoli Dynamic Workload Console V8.5.1 GA baseline results.

Scenarios:

1. Manage engines: access the manage engines panel to check how fast the panel navigation is

2. Monitor jobs: run a query to monitor the job status in the plan (result set: 10,000 jobs)

3. Next page: browse the result set of a task to check how fast it is to access the result next page

4. Find job: retrieve a particular job from the result set of a task

5. Plan view: run the graphical plan view

6. Job stream view: run the graphical job streams view

Objective: demonstrate that response times of new release Tivoli Dynamic Workload Console V8.6 are comparable with those of the baseline Tivoli Dynamic Workload Console V8.5.1, with a tolerance of 5%.

Results:

All scenarios completed successfully. Each test was repeated 10 times, collecting minimum, average, maximum response time, and standard deviation.
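The per-scenario summary can be produced with a few lines of standard-library code. This is a sketch only: the sample timings are hypothetical, and the use of the sample standard deviation (rather than the population one) is an assumption, since the document does not say which was used.

```python
import statistics


def summarize(samples: list[float]) -> dict[str, float]:
    """Return min, average, max, and sample standard deviation."""
    return {
        "min": min(samples),
        "avg": statistics.mean(samples),
        "max": max(samples),
        "stdev": statistics.stdev(samples),
    }


# Hypothetical response times (seconds) for 10 repetitions of one scenario.
runs = [1.92, 2.01, 1.98, 2.05, 1.95, 2.00, 1.97, 2.03, 1.99, 2.02]
for name, value in summarize(runs).items():
    print(f"{name}: {value:.3f}")
```

A small standard deviation relative to the average indicates that the server delivers consistent response times from run to run.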

The standard deviation values are very small, meaning that the server, in normal conditions, maintains the same performance over time. Response times are also very small for almost all the scenarios. The only tasks that require a non-negligible time to complete are the plan view and the monitor jobs tasks. This is expected: for the monitor jobs task, the result set of the query is very large, and most of the time required to complete the interaction step is consumed by the engine to retrieve the required data. For the plan view task, the plan is very complex, and the graphical rendering of such a complex object takes some time to complete.


Tivoli Dynamic Workload Console V8.6 response times are better than the Tivoli Dynamic Workload Console V8.5.1 response times, scenario by scenario. The improvement is not negligible in most cases, as can be seen in Table 19.

Task             TDWC 8.6 vs. TDWC 8.5.1
ManageEngine     +52 %
MonitorJobs      +44 %
NextPage         +75 %
FindJob          +57 %
PlanView         +23 %
JobStreamView    +19 %

Table 19 - TDWC 8.6 vs. TDWC 8.5.1 % improvements

Tuning and configuration

All scenarios run on ENV1.

Embedded Application server (eWAS) changes:

TWS Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"

TDWC Server.xml file

JVM entries: initialHeapSize="1536" maximumHeapSize="1536"

Although it could have been possible to freely extend the Tivoli Dynamic Workload Console eWAS heap size because of the 64-bit environment, it was decided to use this particular value to generate data comparable, for the same server settings, with that coming from the Tivoli Dynamic Workload Console V8.5.1 concurrency test baseline.

For more information about server response and behavior when increasing the heap size or changing the garbage collection policy, see the appendix.

Database DB2 changes:

All the tests were run using a dedicated database server machine. DB2 was configured with the following tuning modifications to improve performance:

Log file size (4KB) (LOGFILSIZ) = 10000

Number of primary log files (LOGPRIMARY) = 80


4.2.8 Tivoli Dynamic Workload Console concurrent users

The first test proposed is the “102 concurrent users + PVT Tool”. This test has the purpose of monitoring system performance, response times, functional correctness, when 102 users access the Tivoli Dynamic Workload Console V8.6 performing different tasks against it, and having the PVT Tool provide a constant further workload simulating a user that, in a continuous way, runs a small query to retrieve some scheduling objects.

The 102 concurrent users run the following 7 tasks:

1. All jobs in SUCCESS: users run a query to retrieve all the jobs in plan that are in SUCCESS status; plan contains about 10,000 jobs in that status

2. All jobs in WAIT: users run a query to retrieve all the jobs in plan that are in WAIT status; plan contains about 4000 jobs in that status

3. All Job streams in plan: users run a query to retrieve all the job streams in the plan; plan contains about 5000 job streams

4. Browse job log: users run a query to retrieve a particular job, on which the job log is displayed

5. Monitor prompts: users run a query to retrieve all the prompts in the plan, select the first one retrieved, and reply yes to unblock it

6. Graphical Job stream view: users run a query to retrieve a job stream, and launch the graphical job stream view on it

7. Graphical Impact view: users run a query to retrieve a job stream, and launch the graphical impact view on it

Users are launched by RPT so that all must start in 210 seconds (3.5 minutes). This means that a new user is launched by RPT about every 2 seconds. When a new user is launched by RPT, the decision of which task is run by the user is taken according to the schedule in Figure 12:
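The launch cadence quoted above is simply the ramp-up window divided by the number of users. A minimal sketch (the function name is illustrative and is not an RPT setting):

```python
def launch_interval_s(total_users: int, ramp_up_seconds: float) -> float:
    """Average delay between consecutive user launches during ramp-up."""
    if total_users <= 0:
        raise ValueError("total_users must be positive")
    return ramp_up_seconds / total_users


print(f"{launch_interval_s(102, 210):.2f} s")  # 2.06 s
```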


Figure 12 - RPT schedule for TDWC concurrent users test

The schedule was repeated 4 times, while the PVT Tool was always kept working even during the interval between one run and the next one.

Objective:

No functional problems: all users must be able to successfully complete their own tasks, with no exceptions, errors, or timeout

Quality assurance: Tivoli Dynamic Workload Console V8.6 server resources usage must be less than the Tivoli Dynamic Workload Console V8.5.1 server resources usage (as baseline), with a tolerance of 5%

Additional user, simulated by PVT Tool, must have response times lower than 4 times the same response times obtained with Tivoli Dynamic Workload Console V8.5.1.
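The two quantitative objectives can be expressed as simple pass/fail checks. The sketch below is one possible reading of them: it assumes that "with a tolerance of 5%" means the V8.6 value may exceed the baseline by at most 5%, and the sample numbers are hypothetical.

```python
def within_tolerance(new: float, baseline: float, tolerance: float = 0.05) -> bool:
    """True if the new value does not exceed the baseline by more than the tolerance."""
    return new <= baseline * (1 + tolerance)


def additional_user_ok(new_rt: float, baseline_rt: float, factor: float = 4.0) -> bool:
    """True if the PVT Tool user's response time stays below factor x baseline."""
    return new_rt < factor * baseline_rt


# Hypothetical measurements, for illustration only.
print(within_tolerance(new=62.0, baseline=60.0))        # True
print(additional_user_ok(new_rt=3.0, baseline_rt=1.0))  # True
```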

Results:

All scenarios completed successfully. The RPT Schedule was repeated 4 times, while the PVT Tool was always kept working even during the interval between one run and the next one.

CPU usage improves from Tivoli Dynamic Workload Console V8.5.1 to Tivoli Dynamic Workload Console V8.6, with a non-negligible reduction in both average and maximum CPU usage.

On the other hand, Tivoli Dynamic Workload Console V8.6 needs more memory (on average) during the test execution, compared with Tivoli Dynamic Workload Console V8.5.1. The increase is due to the fact that Tivoli Dynamic Workload Console V8.6 runs on eWAS 7.0, while Tivoli Dynamic Workload Console V8.5.1 is based on eWAS 6.1. Another very important reason is that Tivoli Dynamic Workload Console V8.6 is based on Tivoli Integrated Portal (TIP), and the introduction of this layer between eWAS and Tivoli Dynamic Workload Console V8.6 (not present in the old release) comes at the cost of increased memory usage during the test execution.

There is a significant improvement both for average read and write activities and network activity passing from Tivoli Dynamic Workload Console V8.5.1 to Tivoli Dynamic Workload Console V8.6.

Finally, there is a strong improvement also for the additional user response time that has an average and a maximum time much smaller than the baseline.

Tuning and configuration

All scenarios run on ENV1.

Embedded Application server (eWAS) changes:

TWS Resource.xml file

TWS Server.xml file

JVM entries: initialHeapSize="1024" maximumHeapSize="2048"

TDWC Server.xml file

JVM entries: initialHeapSize="1536" maximumHeapSize="1536"

Although it could have been possible to freely extend the Tivoli Dynamic Workload Console eWAS heap size because of the 64-bit environment, it was decided to use this particular value to generate data comparable, for the same server settings, with that coming from the Tivoli Dynamic Workload Console V8.5.1 concurrency test baseline.

For more information about server response and behavior when increasing the heap size or changing the garbage collection policy, see the appendix.

Database DB2 changes:

All the tests were run using a dedicated database server machine. DB2 was configured with the following tuning modifications to improve performance:

Log file size (4KB) (LOGFILSIZ) = 10000

Number of primary log files (LOGPRIMARY) = 80

Number of secondary log files (LOGSECOND) = 40

4.2.9 Tivoli Dynamic Workload Console Login

Scenario:

This test is very simple and consists of 250 users launched in 60 seconds, each running the log in scenario.

Log in

Wait 60 seconds

Log out

Each user has the following roles: TDWBAdministrator, TWSWebUIAdministrator.

Objective:

No functional problems: all users must be able to successfully login with no exceptions, errors, or timeout

Response time: response time must be similar to that obtained running the same scenario with Tivoli Dynamic Workload Console V8.5.1, with a tolerance of 5%.

Results:

Test goals were successfully met, both for the functional and quality part, and in the comparison with baseline Tivoli Dynamic Workload Console V8.5.1.

Tuning and configuration

All scenarios run on ENV1.

Embedded Application server (eWAS) changes:

Server.xml file

APPENDIX: TDWC GARBAGE COLLECTOR POLICY TUNING

This appendix provides tuning information for Tivoli Dynamic Workload Console V8.6 to reach the best performance by acting on the WebSphere Application Server 7.0 Java garbage collection policy.

It contains details about the concurrency test scenario schedule (the same as in paragraph 4.2.8) run against Tivoli Dynamic Workload Console V8.6, varying 3 different garbage collection policies and crossing them with 2 distinct Java heap size configurations. The goal is to identify the Tivoli Dynamic Workload Console server configuration that provides the best performance and results, acting only on:

Garbage collector policy

Heap size (initial and maximum)

The Rational Performance Tester schedule has then been run against Tivoli Dynamic Workload Console V8.6, configuring the eWAS 7.0 with 3 distinct Java Garbage Collection policies:

optthruput

subpool

gencon

Tests have been repeated configuring the eWAS heap size on 2 distinct settings:

1. min heap size = 1.5 GB (1536 MB), max heap size = 1.5 GB (1536 MB)

2. min heap size = 3.0 GB (3072 MB), max heap size = 3.0 GB (3072 MB)

The first heap configuration was used to compare the data obtained with the baseline from Tivoli Dynamic Workload Console V8.5.1. Note that Tivoli Dynamic Workload Console V8.5.1 was built on eWAS 6.1, whose 32-bit architecture limited the maximum heap size, for memory addressing reasons, to about 1.7 GB.

The second heap configuration was used to highlight any improvements obtained by extending the heap size. This is possible because eWAS 7.0, being based on a 64-bit architecture and running on a 64-bit AIX server, allows the heap size to be extended to any value, bound only by the available physical RAM.

In this case, the AIX server having 4 GB of RAM, a reasonable value of 3 GB was chosen to run the concurrency test schedule scenario.
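The resulting test matrix is the cross product of the 3 garbage collection policies and the 2 heap settings, that is, 6 configurations. A minimal sketch that enumerates them and checks each heap against the 4 GB of physical RAM (the constant and function names are illustrative):

```python
from itertools import product

GC_POLICIES = ["optthruput", "subpool", "gencon"]
HEAP_SIZES_MB = [1536, 3072]  # initial and maximum heap are set to the same value
PHYSICAL_RAM_MB = 4096


def build_matrix() -> list[dict]:
    """Enumerate every (heap size, GC policy) combination to be tested."""
    matrix = []
    for heap_mb, policy in product(HEAP_SIZES_MB, GC_POLICIES):
        if heap_mb >= PHYSICAL_RAM_MB:
            raise ValueError(f"heap {heap_mb} MB does not fit in physical RAM")
        matrix.append(
            {"gc_policy": policy, "initial_heap_mb": heap_mb, "maximum_heap_mb": heap_mb}
        )
    return matrix


for config in build_matrix():
    print(config)
```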

To enable eWAS 7.0 to work with each of the 3 tested garbage collection policies, the following modification was made on the server.xml file contained inside the folder:

<TDWC_HOME>/eWAS/profiles/TIPProfile/config/cells/TIPCell/nodes/TIPNode/servers/server1/

Garbage collector policy: optthruput
server.xml modifications: default config
Heap first config: initialHeapSize="1536" maximumHeapSize="1536"
Heap second config: initialHeapSize="3072" maximumHeapSize="3072"

Garbage collector policy: subpool
server.xml modifications: genericJvmArguments=" -Djava.awt.headless=true -Xgcpolicy:subpool"
Heap first config: initialHeapSize="1536" maximumHeapSize="1536"
Heap second config: initialHeapSize="3072" maximumHeapSize="3072"

Garbage collector policy: gencon
server.xml modifications: genericJvmArguments=" -Djava.awt.headless=true -Dsun.rmi.dgc.ackTimeout=10000 -Djava.net.preferIPv4Stack=true -Xdisableexplicitgc -Xgcpolicy:gencon -Xmn320m -Xlp64k"
Heap first config: initialHeapSize="1536" maximumHeapSize="1536"
Heap second config: initialHeapSize="3072" maximumHeapSize="3072"

Table 20 - Garbage collector and heapsize configurations
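Edits of this kind can be scripted rather than made by hand. The sketch below is an illustration under stated assumptions: it works on a heavily simplified stand-in for server.xml (the real file has namespaces and many more attributes), it only sets the heap attributes and the -Xgcpolicy flag, and it does not add the extra gencon flags listed in Table 20. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Simplified stand-in for the relevant part of server.xml (an assumption,
# not a copy of the real file).
SAMPLE = (
    "<processDefinitions>"
    '<jvmEntries initialHeapSize="1024" maximumHeapSize="2048" '
    'genericJvmArguments="-Djava.awt.headless=true"/>'
    "</processDefinitions>"
)


def set_jvm_options(xml_text: str, heap_mb: int, gc_policy: str) -> str:
    """Set initial/maximum heap and the GC policy flag on every jvmEntries element."""
    root = ET.fromstring(xml_text)
    for jvm in root.iter("jvmEntries"):
        jvm.set("initialHeapSize", str(heap_mb))
        jvm.set("maximumHeapSize", str(heap_mb))
        # Drop any previous policy flag, then add the requested one.
        args = [
            arg
            for arg in jvm.get("genericJvmArguments", "").split()
            if not arg.startswith("-Xgcpolicy:")
        ]
        if gc_policy != "optthruput":  # optthruput is the default config in Table 20
            args.append(f"-Xgcpolicy:{gc_policy}")
        jvm.set("genericJvmArguments", " ".join(args))
    return ET.tostring(root, encoding="unicode")


print(set_jvm_options(SAMPLE, 1536, "gencon"))
```

Always back up the original server.xml before changing it, and restart the server for the new settings to take effect.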

Results:

Note that the data provided is an average over about 20 iterations. The gencon garbage collection policy seems to be the one that leads to the shortest response times, while the default optthruput policy produces the worst response times. The subpool policy typically offers an intermediate result.

In the second case, the subpool garbage collection policy seems to have the best behavior for response times of the most significant steps inside the tests in the RPT schedule for concurrency; gencon offers intermediate results, while the default optthruput policy seems to have the worst response times.

For the default optthruput garbage collection policy, the best results, in terms of average response times for the most significant steps, were obtained with the first heap size configuration in most cases. Therefore, if this policy is used for any reason, there is no need to increase the heap size to 3 GB; the 1.5 GB configuration is enough.

For the subpool garbage collection policy, the configuration with heap size set to 3.0 GB for both initial and maximum size seems to give a small improvement in response time, with the exception of the queries for jobs in SUCC and for all the job streams in plan. Therefore, the choice between the first and the second heap size configuration should also take into account the available server configuration, specifically the physical memory available.

For the gencon garbage collection policy, response times seem to be better with the first heap configuration (initial and maximum heap size set to 1.5 GB), with the exception again of the jobs in SUCC and all job streams in plan queries.

The following data was obtained from the native_stderr log files provided by eWAS. To be able to gather in this file the garbage collector activities, inside the file server.xml, the VerboseModeGarbageCollection attribute has been set to "true". This can have some minimal impacts on performances, but is a price worth paying because then the activities of the garbage collection during the test phase can be analyzed.

Figure 18 - Gencon with 3.0 GB heap size

Conclusions

Combining the first analysis (comparison between response times of different garbage collection policies, for a given heap size configuration) with the second one (comparison between heap size configurations for a given garbage collection policy), the conclusion is that the best eWAS configuration, for the given Tivoli Dynamic Workload Console V8.6 server platform hardware and the given workload concurrency scenario, seems to be the gencon garbage collection policy with the initial and maximum heap size set to 1.5 GB (with 4 GB of physical RAM available, 64-bit environment).


© Copyright IBM Corporation 2011

IBM United States of America

Produced in the United States of America

US Government Users Restricted Rights - Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not grant you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing IBM Corporation

North Castle Drive

Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law:

INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PAPER “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you. This information could include technical inaccuracies or typographical errors. Changes may be made periodically to the information herein; these changes may be incorporated in subsequent versions of the paper. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this paper at any time without notice.

Any references in this document to non-IBM Web sites are provided for convenience only and do not in any manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the materials for this IBM product and use of those Web sites is at your own risk.

Neither IBM Corporation nor any of its affiliates assume any responsibility or liability in respect of any results obtained by implementing any recommendations contained in this article. Implementation of any such recommendations is entirely at the implementor’s risk.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing IBM Corporation

4205 South Miami Boulevard

Research Triangle Park, NC 27709 U.S.A.

All statements regarding IBM's future direction or intent are subject to change or withdrawal without notice, and represent goals and objectives only.

This information is for planning purposes only. The information herein is subject to change before the products described become available.


Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. If these and other IBM trademarked terms are marked on their first occurrence in this information with a trademark symbol (® or ™), these symbols indicate U.S. registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the web at "Copyright and trademark information" at http://www.ibm.com/legal/copytrade.shtml.

Intel, Intel logo, Intel Inside, Intel Inside logo, Intel Centrino, Intel Centrino logo, Celeron, Intel Xeon, Intel SpeedStep, Itanium, and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Linux is a registered trademark of Linus Torvalds in the United States, other countries, or both.

Microsoft, Windows, Windows NT, and the Windows logo are trademarks of Microsoft Corporation in the United States, other countries, or both.

UNIX is a registered trademark of The Open Group in the United States and other countries.

Java and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its affiliates. Other company, product, or service names may be trademarks or service marks of others.