Algorithms for Hyper-Parameter Optimization

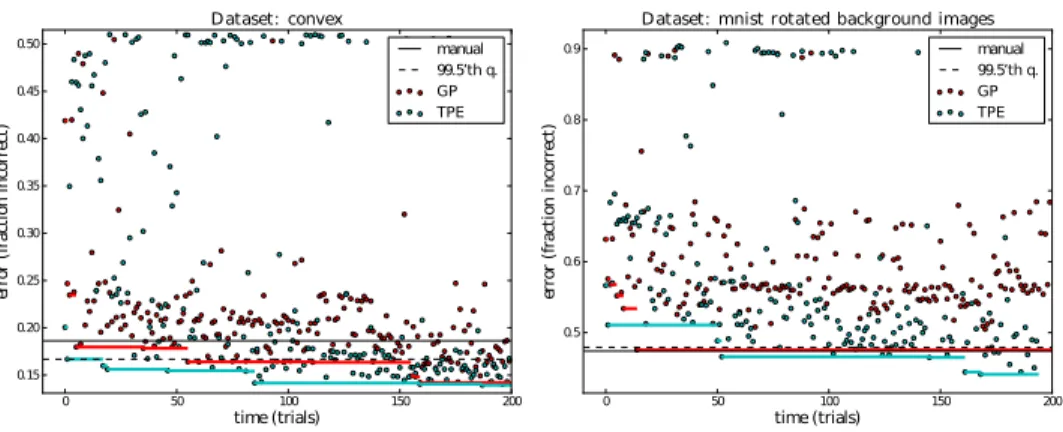

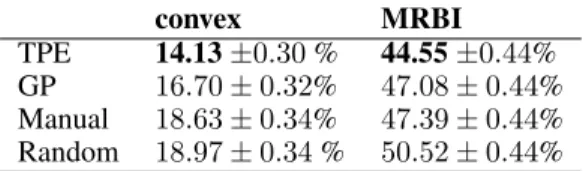
