International Journal of Innovative Technology and Exploring Engineering (IJITEE) ISSN: 2278-3075,Volume-8, Issue- 9S2, July 2019

Abstract: The world today is completely secured with most recent advancements. Consequently the security is still a huge issue. Biometric provides high security with more precision which recognizes the individual dependent on their physiological qualities of a person by utilizing their biometrics. It aims that the biometric will build security, dependability and agreeableness in the most recent innovation of PC framework. The mainstream MIPS based cryptography processor is utilized for equipment and programming items and guidelines require cryptography keys length for higher security level. Merging biometric with MIPS cryptography processor is as a possible arrangement. We utilize new way to deal with Network security utilizing MIPS constructed crypto processor situated in light of contactless palm vein biometric framework. This methodology considers NOC limitations and its topology. It gives greater security with less key length and there is no compelling reason to store any private key anyplace. Blow fish algorithm is more secure to analyze other symmetric key calculations, and deliver best outcome for less handling time and adjusts to build the key size of blowfish calculation.

Keywords: Security, Cryptography, Biometrics, MIPS and Blowfish algorithm.

I. INTRODUCTION

Biometrics provides security benefits over the range, from IT sellers to end clients, and from security framework designers to security framework clients [1]. Stimulation of utilizing biometric data is that biometric framework gives programmed acknowledgment of an individual dependent on some notable highlights or qualities controlled by the person. Sensor innovation is likewise enriched, this is another inspiration factor. Biometric frameworks have been created dependent on regular biometric attributes, for example, unique mark, facial highlights, iris, hand geometry, voice, penmanship, and so on. A biometric is defined by consumption of an element that is; exceptionally one of a kind - with the goal that the shot of any two individuals having a similar trademark will be negligible, stable - so the element does not change after some time, and be effortlessly obtained - with the end goal to give accommodation to the client, and counteract deception of the element. Unique mark acknowledgment is the most tested strategy for biometric ID. A similarly new biometric attribute has been found as far as the palmprint [2]. Solid and one of a kind qualities of palmprint legitimize its high ease of use. Like unique finger impression, the palmprint

Revised Manuscript Received on July 18, 2019.

R.Sridevi, Associate Professor & Head, Department of Data Analytics & BVoc(N/W & MA), PSG College of Arts & Science, Coimbatore, Tamilnadu, India.

S.Selvi, Associate Professor in Computer Science, PSG College of Arts & Science, Coimbatore, Tamilnadu, India.

has one of a kind highlights, specifically, primary lines, particulars highlights, delta focuses, wrinkles, and edges. Moreover, a more general surface region of the palm (as against the surface region being caught for the finger impression) stimulates more number of one of kind characteristics. Subsequently, palmprint biometric is recognized to develop rapidly as a dependable acknowledgment framework. In any case, a twisting of pictures because of difficulties of obtaining pulls down the correctness of a palmprint acknowledgment framework [3]. Research is as yet required to handle the issues emerging out of situating, turning, and extending the palm. Also, the greater size of the obtaining gadget does not permit its utilization over cell phones. Contact based securing may equally be viewed as foul. The famous MIPS based cryptography processor is utilized for equipment and programming items and models require cryptography keys length for higher security level [4, 5]. Merging biometric with MIPS cryptography processor is as a conceivable arrangement. Our principle objective is to give arrange security employing MIPS constructed crypto processor situated in light of contactless palm vein biometric framework. This policy considers NOC requirements and its topology. It furnishes greater security with less key length and there is no compelling reason to store any private key anyplace. Blowfish algorithm is more secure to think about other symmetric key calculations, and deliver best outcome for less handling time and adjusts. To expands the key size of blowfish calculation.

II. LITERATURE REVIEW

From this section we have reviewed and assessed the security and protection issue of existing exploration works and investigate the downsides of the numerous articles.

Mehreen Ansar [6] This Review paper is about the security of bio metric layouts in cloud databases. Biometrics is ended up being the best confirmation technique. Be that as it may, the principle concern is the security of the biometric format, the procedure to remove and put away in the database inside a similar database alongside numerous other. Numerous strategies and techniques have just been proposed to anchor layouts, however everything accompanies its upsides and downsides, this paper gives a basic diagram of these issues and arrangements. Inadequacies of DNA stockpiling can be evacuated with some proficient calculation like Genetic Algorithm for seeking and planning asked for information from the DNA information bases.

Progressing Biometric Security Concern with

Blowfish Algorithm

Deficiencies of quantum cryptography can be lessened byutilizing cross section based cryptography subsequently anchoring our layouts all the more proficiently. As clients are expanding step by step ID of a biometric format additionally requires more assets to figure, store, concentrate and output records of a database to discover most ideal match. This Review paper proposed another distributed computing condition, which recommends future work by applying mixture of Cognitive and Quantum key appropriation while putting away biometric formats in cloud DNA information base.

R. ArunPrakash et al [7] In this paper we present another anticipated convention, BEBA (Biometric encoding and Biometric validation) to beat all the security issues in cloud environment. The vast majority of the wellbeing issues are related with validation and data insurance regarding cloud security coalition (CSA). In BEBA convention, biometric encryption has been accommodated cloud purchaser's significant data and personality check has been used remarkably. Character check has been joined with layout insurance related to four totally unique and intense (RC4, RSA, AES and 3DES) encryption calculations for aggregated security. Blowfish has been utilized in information insurance and key security administration. Receiving this convention has given decent outcomes while inspecting with existing work and every single defenseless place has been considered for enhanced security.

Tonimir Kišasondi [8],In this paper we depict that client confirmation strategies dependent on hash capacities like MD5, NT/NTLM and SHA-1 can be effectively traded off. We utilized strategies that use cryptanalytic tables dependent on time memory tradeoff methods (TMTO) and we investigated certain constraints on this methodology. We propose enhancements to this method and extra ideas like parallelized creation and questioning of tables which enhance speed and memory proficiency of the whole methodology. With those changes another idea for TMTO systems is made. We additionally portray vulnerabilities dependent on physical access to a section of a PC organize foundation that can bargain any semi secure framework. Concerning vulnerabilities we present various biometric confirmation techniques that can limit or invalidate this security dangers.

Jie Wu,[9], et al. think about the protection danger in multihop remote systems, with this risk where assaults, for example, movement investigation and stream following can be basically occurred by a vindictive challenger because of the Public climate of the remote medium. For this system coding has the idle one to keep these assaults since the coding/blending process is sure at middle hubs. Be that as it may, with the basic misuse of system coding can't finish the objective once adequate bundles are made by the challenger. In other path, with the assistance of existing security safeguarding procedures of onion steering, the coding/blending nature keeps the likelihood of misusing. For this the creators propose arrange coding based protection saving technique next to the activity examination in multihop remote systems. They utilize the homomorphic encryption component on the Global Encoding Vectors (GEVs), their plan gives two noteworthy security saving highlights, bundle stream untraceability and message

content privacy, for capability keeping the activity examination assaults. Moreover, the proposed technique keeps the arbitrary coding highlight, and each sink can gain ground the source bundles by reversing the GEVs with a high probability.

Yan Sun [10] et al approaches depends on offering individuals from the area data assemble keys (GKs) that enables them to decode the area data. For this GK administration this paper proposes a Rebalancing calculation to protect rekeying execution with GK administration. This article conveys the free coupling all through a system, in this manner permits outsider control. This paper gives a convention like appropriate key dispersion, Multimedia Internet Keying (MIKEY), and Logical Key Hierarchy (LKH) convention. These conventions are utilized to protect progressive area data conveyance for supple area security control for efficacious message conveyance and gathering administration multifaceted nature. Henceforth it doesn't bolster the multicast correspondence. What's more, they were computational expense is likewise high. They were client secrecy issue from this methodology.

III. PROBLEM DEFINITION

Existing asymmetric encryption algorithms require the capacity of the secret private key. Put away keys are regularly secured by ineffectually chosen client passwords that can either be speculated or gotten through animal power assaults. This is a frail connection in the general encryption framework and can possibly trade off the uprightness of touchy information. Joining biometrics with cryptography is viewed as a conceivable arrangement yet any biometric cryptosystem must have the capacity to beat little varieties present between several acquisitions of the comparable biometric with the end goal to deliver predictable keys. This is defenseless to assault by programmers. This makes huge issue in hilter kilter cryptography.

• Cryptography comes at expense. The expense is as far as time and cash - The utilization of open key cryptography requires setting up and support of open key foundation requiring the nice looking money related spending plan.

• It need to store in such a place which is shielded from unapproved getting to.

• The security of cryptographic method depends on the computational trouble of numerical issues.

• The significant shortcoming of Normal

cryptography framework dependent on lopsided calculations requires the capacity of mystery keys.

• It incorporates area, security dangers, undertaking, expected number of clients, client conditions, existing information, and so on.

IV. PROPOSED METHOD

International Journal of Innovative Technology and Exploring Engineering (IJITEE) ISSN: 2278-3075,Volume-8, Issue- 9S2, July 2019

operated the comparative key for both the encryption anddecoding. AES has leverage over different calculations as far as encryption time, unscrambling time and throughput. Likewise it demonstrated that Blowfish has a superior execution than 3DES and DES. Furthermore, unmistakably 3DES has just about 1/3 throughput of DES, or as it were it needs multiple times than DES to process a similar measure of data. The blowfish algorithm is more secure to look at other symmetric key calculations, and create greatest outcome for less preparing time and adjusts. The key size of blowfish calculation 128 to 448, it gives more protection to the messages and gives incredible security to look at other symmetric calculations. It employs design positioning strategy to rank the movement and it is critical to recognize the personal conduct standards in consecutive learning action with the assistance of the procedure mining method.

a. Data Preprocessing

Preprocessing is a background to upgrade the idea of data to be aware with the mining system. Incredible data will give uncommonly profitable and captivating learning. In the proposed structure, data preprocessing is done in 4 significant ways: (I) data cleaning, (ii) trademark assurance, (iii) change, and (iv) blend. In the wake of social occasion the data they may duplicate data were exit in the database [42]. Repeating of data may cause colossal time of data getting ready time and besides it requires much venture to process comparative data. So to avoid the reiterated data this model is useful to save the time examination.

b. Image Enhancement

Poor complexity is one of the imperfections found in gained picture. The impact of that imperfection has incredible effect on the differentiation of picture. At the point when differentiate is poor the difference upgrade technique assumes an imperative job. For this situation the dim level of every pixel is scaled to enhance the differentiation. Picture upgrade is done to enhance the distinction of the got biometric highlight. The biometric highlights like unique finger impression being picked up should be upgraded with the goal that they can be utilized for further investigation. This procedure is done to dispose of repetitive pixels from a picture and accomplish the brilliance and complexity.

c. Segmentation

Image segmentation is the way toward parceling a computerized picture into various portions Image segmentation is normally used to find items and limits in picture, picture division is the way toward doling out a mark to each pixel in a picture to such an extent that pixels with a similar name share certain visual attributes. Segmentation is an imperative strategy to expel important information from confused remedial pictures. Segmentation has wide application in therapeutic field. As the delayed consequence of picture division, set of parts which inside and out spreads entire picture. Segmentation precision chooses the unavoidable accomplishment or disillusionment of motorized examination framework Segmentation is the path toward doling out a name to every pixel in an image to such

a degree, to the point that pixels with a comparative check share certain visual characteristics.

d. Template matching using blowfish algorithm

Format coordinating is a system used to decision districts in picture which are like layout. In tumor recognition process, a layout is utilized as a guide for coarsely finding tumor by decision out the edges. A format is made and is moved over the procured picture sequentially and the zone where the layouts coordinate the picture is stamped. The layout is regularly of littler size than picture. The strategy is of incredible significance and is helpful in effectively distinctive palm. Blowfish is categorized as a symmetric square figure calculation. Fundamentally it utilizes a similar mystery key to both the encryption and unscrambling procedure of messages. Here the square size for Blowfish is 64 bits; messages that aren't a result of 64-bits in size must be walked. It utilizes a variable – length key esteem, from 32 bits to 448 bits. It is hold for applications where the key isn't shifted as often as possible. It is generously faster than most encryption calculations when performed in 32-bit chip with tremendous evidence reserves. Blowfish is a keyed symmetric square figure planned in 1993 by Bruce Schneider. Schneider planned Blowfish as a universally useful calculation, expected as an option in contrast to the maturing DES. Blowfish has a 64-bit square size and a variable key length from 32 bits up to 448 bits. 18 sub-keys are gotten from a solitary starting key. It requires add up to 521 cycles to create all required sub keys. It is a 16-round Feistel figure and uses vast key-subordinate S-boxes. In structure it takes after CAST-128, which utilizes settled S-boxes. Blowfish performs well for applications in which keys does not change regularly.

The characteristics of Blowfish are as follows: It has block cipher of 64 bit block.

• The key length is variable and can be up to 448 bits.

• It encodes information on 32 bit microchip at a rate of 18 clock cycles for every byte such a great amount of quicker than DES and IDEA. Unpatent and sans royality.

• It can keep running in under 5k of memory. • It has straightforward structure and execution is simple.

• The primary case of acclaim of Blowfish is Key Scheduling techniques.

Creating the Sub Keys Blowfish utilizes a substantial number of subkeys. These keys must be pre registered before any information encryption or unscrambling.

1. The D- array consists of 18, 32 bits subkeys D1, D2, ……., D18.

2. There are four-32bit S-Boxes with 256 entries each. S1, 0, S1,1,……S1, 255;

S2, 0, S2, 1,……S2, 255; S3, 0, S3, 1,……S3, 255; S4, 0, S4, 1,……S4, 255;

upgrades the security of the square figures. Since, it makes inclusive inquiry of the key space. It is extremely troublesome notwithstanding for some short keys.

V. EXPERIMENTAL RESULTS

There are different execution factors which are utilized to broke down the diverse encryption calculations. The recreation of the calculation is done to play out the standard tests including Avalanche and picture entropy and histogram on Intel Core i7-3770@3.40 GHz processor utilizing MATLAB.

Throughput performance

It is the higher rate of creation or greatest rate at which information can be fingered which have a place with might be conveyed over a physical or sensible connection. It might be influenced by different factors, for example, medium, accessible handling intensity of the framework parts and end-client conduct.

Encryption Ratio

The encryption proportion is the estimation of the aggregate number of information that is to throughput of the encryption calculations is figured by separating the aggregate plaintext in Megabytes scrambled on aggregate encryption time for every calculation. In this manner, if throughput expanded the power utilization is lessening and gives all the more long existence of the framework part.

Encryption and Decryption Time

The time given by calculations absolutely relies upon the speed of the processor and calculation multifaceted nature. Less time calculation enhances the whole activity of the processor.

Level of security

Cryptographic security characterizes whether encryption process is secure against from every single known assault such astime attackand variable plaintext-figure content assault. For exceptionally essential sight and sound application to the encryption procedure ought to fulfill cryptography security.

Image Entropy

The encryption calculation adds additional data to the information in order to make it troublesome for the interloper to separate between the first data and the one included by the calculation. We measure the measure of data as far as entropy, thusly it tends to be said that higher the entropy better is the execution of security calculation. To quantify the entropy (H) for a picture, condition is connected on the power (I) values P(Ii) being the likelihood of force esteem Ii

Blowfish are the most secure and effective calculations. The speed and power utilization of these calculations are better contrasted with the others. If there should be an occurrence of lopsided encryption calculation, RSA is more

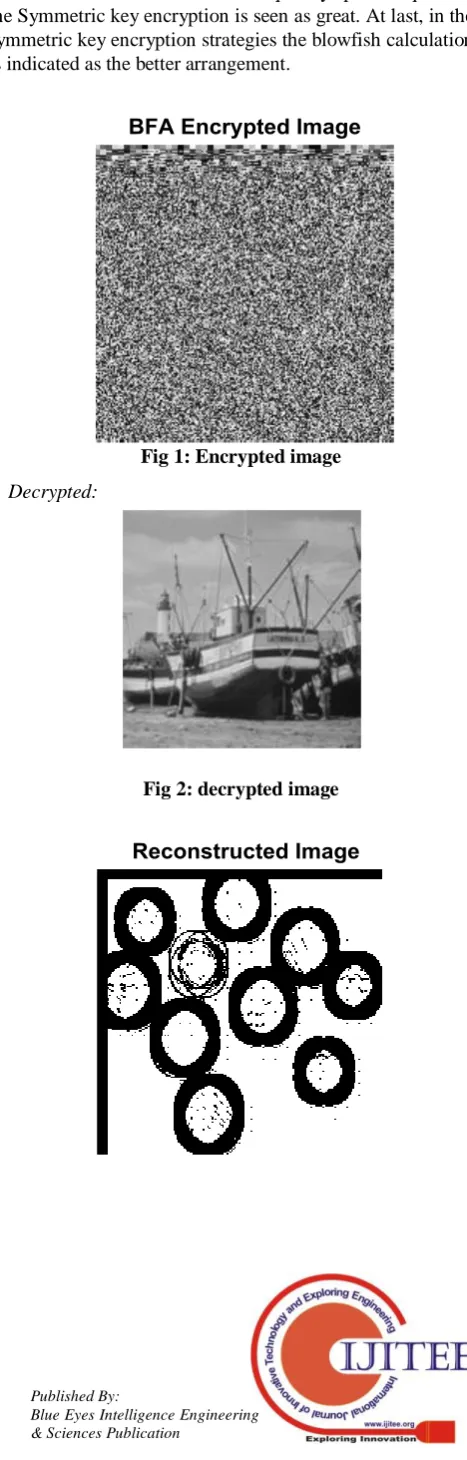

secure and can be utilized for application in remote system due to its great speed, less time and security. In the part of throughput, Throughput is expanded so control utilization is diminished. Throughput is high in blowfish and it is less power utilization calculation consequently speed is quick in the Symmetric key encryption is seen as great. At last, in the symmetric key encryption strategies the blowfish calculation is indicated as the better arrangement.

Fig 1: Encrypted image

[image:4.595.311.545.109.840.2]Decrypted:

International Journal of Innovative Technology and Exploring Engineering (IJITEE) ISSN: 2278-3075,Volume-8, Issue- 9S2, July 2019

Fig 3: reconstruct image

Simulation results for this empathy point are encryption organize. The outcomes demonstrate the predominance of Blowfish calculation over different calculations as far as the preparing time. Another point can be seen here; that requires less time than all calculations with the exception of Blowfish.

Simulation results for this empathy point unscrambling stage. We can discover in decoding that Blowfish is the superior to different calculations in throughput and power utilization.

The comparison between encryption calculations has been led at content and record information documents. We discovered that Blowfish has an execution more prominent than other the other five sorts. Presently we will make a correlation between different sorts of information.

IV. CONCLUSION

Biometric equips high security with more precision which identifies the individual dependent on their physiological or conduct attributes of a person by utilizing biometrics innovation. It reasons that the biometric will build security, dependability and adequacy in the most recent innovation of PC framework. The famous MIPS based cryptography processor is operated for equipment and programming items and principles require cryptography keys length for higher security level. Joining biometric with MIPS cryptography processor is as a possible arrangement. We utilize new way to deal with system security utilizing MIPS constructed crypto processor situated in light of contactless palm vein biometric framework. This methodology considers NOC imperatives and its topology. It gives better security less key length and there is no compelling reason to store any private key anyplace. Blowfish algorithm is more secure to look at other symmetric key calculations, and create best outcome for less handling time and adjusts. To builds the key size of blowfish calculation. In future work will consolidate biometric for upgrade the security of MANET and VANET and furthermore in different applications.

REFERENCES

1. Jain, A., Hong, L., and Pankanti, S. (2000). Biometric identification. Communications of the ACM, 43(2):90-98.

2. Kanth, B. and Giridhar, B. (2010). Gene expression based acute leukemia cancer classifi- cation: A neuro-fuzzy approach. International Journal of Biometrics and Bioinformatics (IJBB), 4(4):136.

3. Anil K. Jain, Ajay Kumar, "Biometrics of Next

Generation:An Overview to Appear in Second

4. U. Uludag, S. Pankanti, S. Prabhakar, and A. K. Jain. Biometric cryptosystems: issues and challenges. Proceedings of the IEEE, 92(6):948{960, June 2004

5. Wang, Xing-Yuan, Sheng-Xian Gu, and Ying-Qian

Zhang. "Novel image encryption algorithm based on cycle shift and chaotic system." Optics and Lasers in Engineering Vol.6, No.8,pp. 126-134,2015

6. Mehreen Ansar, "Biometric Encryption in Cloud

Computing: A Systematic Review", IJCSNS

International Journal of Computer Science and Network Security, VOL.18 No.8, August 2018

7. R. ArunPrakash, "Biometric Encoding and Biometric Authentication (beba) Protocol for Secure Cloud in M-Commerce Environment", Appl. Math. Inf. Sci. 12, No. 1, 255-263 (2018)

8. Tonimir Kišasondi, "Improving Computer Authentication Systems With Biometric Technologies" Croatian Society for Information and Communication Technology, 2006. 166-171

9. Ostovari, Pouya, Jie Wu, and Abdallah Khreishah. "Network coding techniques for wireless and sensor networks." (2013).

10. Y. Sun, T. La Porta, and P. Kermani, "A flexible privacy enhanced location-based services system framework and practice," IEEE Trans. Mobile Comput., vol. 8, no. 3, pp. 304-321, Mar. 2009.

11. M.Pitchaiah, Philemon Daniel, Praveen, "Implementation of Advanced Encryption Standard Algorithm", International Journal of Scientific & Engineering Research (IJSER), Vol.3, No.3, ISSN 2229-5518, 2012. 12. Ritu Pahal and Vikas Kumar, "Efficient Implementation

of AES" ,International Journal of Advanced Research in

Computer Science and Software

Engineering,Vol.3,No.7,ISSN 2277 128X,2013

13. Jasmeet Singh, Harmandeep Singh, "Design and Development of a Rapid AES based Encryption Framework",International Journal of Engineering

Research &

Technology(IJERT),Vol.3,No.10,ISSN:2278-0181,2014. 14. Lawrence E. Bassham,"The Advanced Encryption Standard Algorithm Validation Suite", National Institute of Standards and Technology Information Technology Laboratory Computer Security Division, Vol.14, No.06, pp.789-981, 2002.

15. MilindMathur, "Comparison between DES, 3DES, RC2, RC6, BLOWFISH and AES", National Informatics Center Network NICNET, Vol. 1, No.3, pp. 143-148, 2013.