Rochester Institute of Technology

RIT Scholar Works

Theses

Thesis/Dissertation Collections

6-2006

Real time face matching with multiple cameras using principal component analysis

Andrew Mullen


This Thesis is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact ritscholarworks@rit.edu.

Recommended Citation

Real Time Face Matching With Multiple Cameras Using Principal Component Analysis

by

Andrew Mullen

A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of

Master of Science in Computer Engineering

Approved By:

Supervised by

Dr. Andreas Savakis

Department of Computer Engineering

Kate Gleason College of Engineering

Rochester Institute of Technology

Rochester, NY

June 2006

Andreas Savakis

Dr. Andreas Savakis - Professor and Department Head

Primary Advisor - R.I.T. Dept. of Computer Engineering

Shanchieh Jay Yang

Dr. Shanchieh Jay Yang - Assistant Professor

Secondary Advisor - R.I.T. Dept. of Computer Engineering

M.Shaaban

Thesis Release Permission Form

Rochester Institute of Technology

Kate Gleason College of Engineering

Title: Real Time Face Matching with Multiple Cameras Using Principal Component Analysis

I, Andrew Mullen, hereby grant permission to the Wallace Memorial Library to reproduce my thesis in whole or part.

Andy Mullen

Andrew Mullen

Acknowledgements

I would like to express my sincere appreciation to Dr. Andreas Savakis for his guidance and encouragement. His constant interest helped at every stage to enable this thesis to be completed.

I am also grateful to Dr. Shanchieh Yang and Dr. Muhammad Shaaban for their continual support and efforts to allow me to overcome obstacles.

Other people who provided significant assistance were

Abstract

Face recognition is a rapidly advancing research topic due to the large number of applications that can benefit from it. Face recognition consists of determining whether a known face is present in an image and is typically composed of four distinct steps. These steps are face detection, face alignment, feature extraction, and face classification [1]. The leading application for face recognition is video surveillance. The majority of current research in face recognition has focused on determining if a face is present in an image, and if so, which subject in a known database is the closest match. This thesis deals with face matching, which is a subset of face recognition, focusing on face identification, yet it is an area where little research has been done. The objective of face matching is to determine, in real time, the degree of confidence to which a live subject matches a facial image. Applications for face matching include video surveillance, determination of identification credentials, computer-human interfaces, and communications security.

The method proposed here employs principal component analysis [16] to create a method of face matching which is both computationally efficient and accurate. This method is integrated into a real time system that is based upon a two camera setup. It is able to scan the room, detect faces, and zoom in for a high quality capture of the facial features. The image capture is used in a face matching process to determine if the person found is the desired target. The performance of the system is analyzed based upon the

Table

ofContents

Acknowledgements in

Abstract iv

TableofContents V

ListofFigures vii

ListofTables X

Glossary

xi1 .0 Introduction 1

1 .1 Motivation 3

1 .2 Problem Statement 4

1 .3 Outline 6

2.0 Background 7

2.1 Current Researchin Video Face Recognition 8

2.2

Key

Attributes oftheFace Detection Algorithm 92.3 Face Identification Strategies 10

2.4

Key

Attributes oftheFace Classification Algorithm 132.5 Face Recognition Strategies 14

2.6 In Depth LookatPCA 17

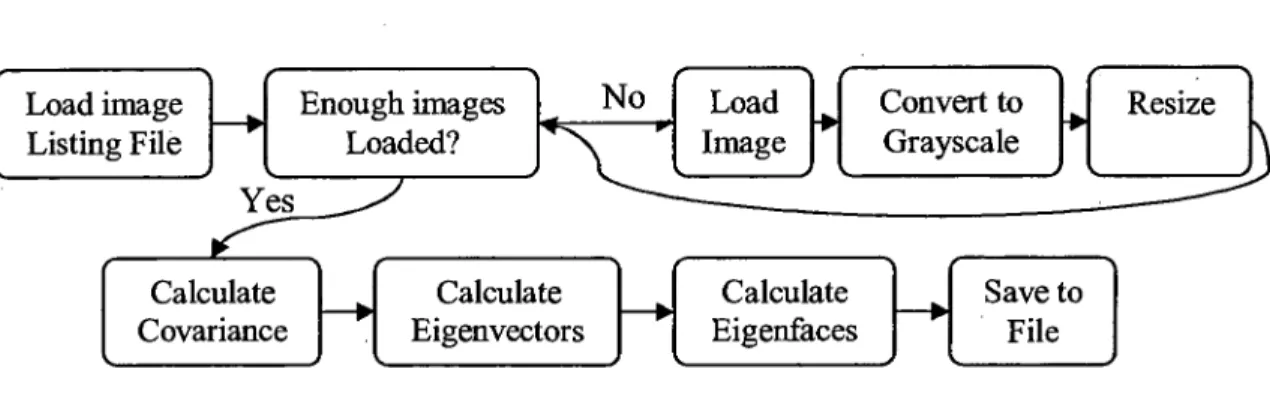

3.0 PCA Implementation 23

3.1 Source Images 23

3.2 PCAAlgorithm 25

3.2.1 Difficulties 27

3 .2.2 Limitations 28

3.3 DistanceMeasure Implementationand

Testing

293.4 DistanceMeasure ResultsandSelection 32

3.5 Eigenfaces 33

4.0 EvaluationofPrincipal Component Analysis 37

4.1 Data Gathered 37

4.1.1 Image SizeandNormalization Method 38

4.1.2 NumberofEigenfaces 41

4.1.3 NumberofImagesper Subject 43

4.1 .4 NumberofSubjectsfor

Training

444.1.5 EvaluationoftheFirst Three Eigenfaces 45

4.1.6 EvaluationofImage Masks 46

5.0 Face

Matching

555.1

Thresholds

andCharacteristics 566.0 Real Time System 60

6.1

Components

616.1.1 GUI 61

6.1.2 Camera 70

6.1.3 Frame 75

6.1.4 Face 76

6.1 .5 Eigenspace 81

6.1.6

Tracking

816.1.7 Threads 82

6.2 DataPath 84

6.3 Integration 85

6.4 OpenCV 86

6.5 IncreasedPrecision Eigenfaces 87

6.5.1 Eigenspace Evaluation 90

7.0 Evaluation 92

7.1 Initial Test 92

7.2 Second Test 96

7.2.1 InclusionofSubject

Tracking

1017.2.2 Confidence Levels 102

7.3 Digital CameraImages 108

7.4 SystemSpeed 110

7.5 System Limitations 113

8.0 Conclusion 115

8.1 Future Work 116

9.0 AppendixA 118

List

ofFigures

Figure 1 - Skin Tone

Concentrationin HSV Colorspace 10

Figure 2

-Average FaceTemplate& EdgeTemplate

[10]

11Figure 3

-SVM

Classifying

Model 12Figure 4- Face

Rotations , 14

Figure 5 - Eigenface Creation Flowchart 25

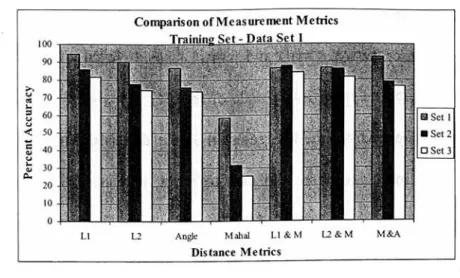

Figure6 - Distance Metric

Testing

Results 33Figure 7

-Average Face 34

Figure 8

-The First Five Eigenfaces 35

Figure 9

-Eigenfaces 50 &

51,

100 &101,

and 150 36Figure 10- NormalizationTest 1 39

Figure 11 - NormalizationTest 2 39

Figure 12- Normalization Test 3 40

Figure 13

-EigenfaceTest 1 42

Figure 14- EigenfaceTest 2 42

Figure 15 - Images

per Subject Test 44

Figure 16- Number

ofSubjects Test 45

Figure 17 - EvaluationoftheFirst Three Eigenfaces 46

Figure 18-Mask Images 47

Figure 19

-Masking

Test Results 47Figure20- Male

Figure23-RetestoftheDistance Metrics 52

Figure 24

-ThresholdSelection forFace

Matching

57Figure 25

-ThresholdData forFace

Matching

58Figure 26

-GUIScreenshot ; 62

Figure 27

-Two views oftheFrameControls 63

Figure 28

-Frame

Display

Area 65Figure29- Face

Component 66

Figure 30

-Eigenspace Component 67

Figure 31

-Match Target Information -68

Figure 32

-Training

theTools 69Figure 33 -CameraView Correspondence Problem 71

Figure 34-2DViewofSimple CVC 72

Figure35

-Face Offset 73

Figure 36 - General Face Proportions 77

Figure 37- Tan-Sigmoid

andLog-Sigmoid Transfer Functions 80

Figure38- FlowchartoftheData Transmissionbetween Components 85

Figure39 - EigenspaceAverage Image Comparison 88

Figure 40- Eigenface Comparison

89

Figure41 - Eigenspace Comparison 90

Figure 42-Examples ofUnmatchedImages 98

Figure 43- False Positive Images

99

Figure 44

-Correctly

Matched ImagesandtheirMatch Scores 100Figure 46-Matches foraThresholdof0% 104

Figure 47-Matches foraThresholdof15% 105

Figure 48

-Matches foraThresholdof25% 106

Figure 49

-MatchesforaThresholdof40% 107

Figure 50

-Imagesof

Andy

inEnvironment A(left)

andEnvironment B(right)

108Figure 51 - Image

Comparison,

EnvironmentA(left),

Video System(right)

109Figure 52

-Equalized,

Grayscaleversion ofFigure 51 1 10Figure 53

-Square Image Size

Testing

-NoNormalization

-Data Set 2 118

Figure 54

-Rectangular Image Size

Testing

- No Normalization- Data Set 2118

Figure 55

-Square Image Size

Testing

- ContrastStretching

- Data Set 21 19

Figure 56

-Rectangular Image Size

Testing

ContrastStretching

DataSet 2 119Figure57-Square Image Eigenvector

Testing

-DataSet 2 119

Figure 58-RectangularImage Eigenvector

Testing

- Data Set 2 120Figure59

-Testing

fornumber ofImagesfor EigenfaceTraining

- Data Set 2 120Figure60- Evaluation

ofthefirst Eigenfaces

List of Tables

Table 1 - Data Processing Pipeline
Table 2 - Results from the Initial Test
Table 3 - Results from the Second Test
Table 4 - Results for using 10 frames for recognition
Table 5

Glossary

Face Recognition: Process of identifying a face in an image.
Face Detection: Determining the location and scale of a face in an image.
Face Alignment: Determining where facial components are located and performing geometric normalization.
Feature Extraction: Finding features to distinguish between face images.
Face Classification: Matching a subject in an image to an image in a database.
Face Matching: Real-time determination of the degree of confidence to which a live subject matches a facial image.
Eigenface: An Eigenvector from a set of face images.
Yaw: Rotation around the Y axis.
Pitch: Rotation around the X axis.
Roll: Rotation around the Z axis.
PCA: Principal Component Analysis
SVM: Support Vector Machine
SVC: Scene View Camera
OVC: Object View Camera
CVC: Camera View Correspondence
ROI

Chapter 1

Introduction

Face recognition has been a topic of great interest to people in many different fields. Numerous researchers from the disciplines of Psychology, Engineering, Image Processing, and Computer Science are interested in developing intelligent technology which mimics the workings of the human mind. Face recognition has attracted significant interest because many of the approaches used to develop algorithms are based on knowledge of the human brain. The human brain is wondrously complex and allows people to perceive and interpret visual information about an object in moments. Identifying a face is simple and commonplace for a person, yet inherently difficult for a computer to duplicate. This is largely because our understanding of the human brain is so limited. This lack of knowledge has led to a plethora of proposed algorithms for the development of computer systems which seek to recognize faces.

Face recognition may be viewed as a combination of four distinct processing steps. These steps are face detection, face alignment, feature extraction, and face classification [1]. Face detection determines whether a face is present in the image and finds the location and the size of the face. In face alignment, facial features, such as the eyes and mouth, are located and used to normalize the geometry of the face. Feature extraction refers to the process of selecting a set of distinguishing characteristics for a specific image. Finally, face classification, also called face identification, uses the features to determine a match in a database of known images.

The majority of current research in face recognition is focused more on the theoretical application of the knowledge and less on the practical aspects. Existing [...] database which is the closest match to a source image. The images are gathered using digital cameras, and thus there are no time constraints for processing of the image. In addition, the lighting is controlled and a flash is used to produce well illuminated faces. While this research is useful, it cannot be effectively implemented in the majority of real-world situations. The use of large databases makes the system inherently slow, causing unacceptable delays if the subjects are being monitored through a video system. In general, there is no guarantee that the recognition of the subject is correct, because if the subject is not in the database, it could be recognized incorrectly.

This thesis focuses on the problem of face identification in a real-time, dynamic environment. Instead of using a large database of images, the system typically uses a single target image. However, it can also use a small set of images. These images include subjects of interest for which the camera system is searching in the environment. The camera system employs face matching to decide if any of the desired targets exist and determines the confidence of a match between a subject in the dynamic environment and a face target in pictorial form.

The differences between the common face recognition system and a face matching system can be easily explained through an example. If, for instance, a common face recognition system was implemented in an airport, it would require a massive database of face images and would identify every person passing by (assuming an enormous amount of processing power). If the identity of every person in the vicinity was important, this system would be justified. However, if the system was attempting to [...] system would then be able to select the criminals from the multitude of faces. This system would examine how closely each person resembled the criminals in the database, searching for matches. If the common system were to use this smaller database, it would identify every person as the criminal they most closely resembled in the database.

The face matching technology could also be used in a manner similar to a fingerprint scan in order to give someone privileges or access to a system. An ID card could have an encrypted image of a person's face embedded in it which could be read when the card is swiped at a terminal. A camera system could then be used to verify that the person who swiped the card is the actual owner of the ID card. If the two match, the identification of the subject is verified and he or she can be granted access.

The result is that while current face recognition techniques and face matching are similar concepts, the development, implementation, and outcome of these systems are significantly different.

1.1. Motivation

Face recognition is a rapidly advancing research topic in image processing due to the large number of applications that can benefit from it. The leading application for face recognition is video surveillance. Other applications include determination of identification credentials, human-computer interfaces, and communications security. The intent of such a system is simply to extract information from a live video stream and convey it to a useful source.

If a system is developed that can match the face of a subject, the subject can be [...] which can receive the identification from the camera system. This opens up many opportunities aside from standard video surveillance.

1.2. Problem Statement

The goal of this thesis is to develop a real-time face matching system which can be employed in a dynamic environment. The critical requirements for this system include the ability to obtain high quality face images and to match faces in a fraction of a second.

In order to get the precision required to manipulate and identify a face with a video camera system, two cameras will be used. The reason for this is that one of the cameras will be functioning at high levels of optical zoom in order to get the most accurate face image possible. However, while zoomed in, it will only be able to track a very small portion of the environment. While it is possible to start zoomed out, find a face, zoom in on it, and capture an image, each of these camera motions requires motor movement and is inherently slow. This process takes time, and unless the subject is stationary, it is likely to fail. A more effective method would be to have one camera searching the environment at low zoom. This camera is called the Scene View Camera (SVC). This camera finds faces and gives the location of these faces to the camera which is at a higher zoom level. This second camera is the Object View Camera (OVC). The two cameras are able to work in tandem, finding the location of the face and capturing a high resolution image of the face.

In addition to the obvious hardware requirements, the system needs sophisticated [...] images, put them in a standardized form, and finally attempt to match the face image to the desired subject.

In order to improve the face matching process, the system employs an implementation of principal components analysis that has been specifically tailored to face matching. It is common practice to develop a principal components algorithm to determine if a region of the image is, in fact, a face. In order to recognize faces at a variety of pose angles, yet match faces accurately, a support vector machine will be integrated for face detection. The use of principal components will be solely for the purposes of face identification.

Each frame captured by the SVC undergoes color segmentation to determine possible face regions. These regions are examined by the support vector machine to determine if the region is a face or not. The best face region is used as a guide to determine the location of the subject in the environment. This information is used to determine the pan, tilt, and zoom of the OVC. Each image captured by the OVC undergoes color segmentation as well to aid in locating the face in the new image. This high quality face image will be examined for its facial features. Once the eyes and mouth have been found, the image will go through both geometric normalization and histogram equalization. The image resulting from this process is then examined using the principal components algorithm to determine if it is a match for one of the target faces.
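The histogram equalization step named in this pipeline can be illustrated with a minimal, dependency-free sketch. The thesis does not give its implementation at this point, so the function name `equalize_histogram`, the flat-list image representation, and the 8-bit gray-level range are assumptions made purely for illustration.

```python
def equalize_histogram(pixels, levels=256):
    """Histogram-equalize a flat list of integer gray levels in [0, levels)."""
    # Count how often each gray level occurs.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution of gray levels.
    cdf = []
    total = 0
    for count in hist:
        total += count
        cdf.append(total)

    # Smallest non-zero CDF value, so the darkest occupied level maps to 0.
    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:
        # Constant image: there is no contrast to stretch.
        return list(pixels)

    # Look-up table that spreads the occupied levels over the full range.
    scale = (levels - 1) / (n - cdf_min)
    lut = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [lut[p] for p in pixels]


# Hypothetical low-contrast face patch: all values crowded between 50 and 60.
dim_face = [50, 50, 51, 52, 52, 52, 53, 60]
equalized = equalize_histogram(dim_face)
```

After equalization the darkest pixel maps to 0 and the brightest to 255, while the relative ordering of the pixels is preserved. This is why the step helps reduce lighting differences between a captured face and a target image.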

Lastly, in order to allow the system to run in real time, all of the software must be streamlined and efficient so that all of this processing can be done on multiple images each second. This ensures the system runs smoothly, matching faces of those who pass

1.3. Outline

The implementation of a multiple-camera, real-time face recognition system is presented in this thesis. This system implements a PCA based feature detection system that has been tailored for face matching. The following chapter discusses prior research focusing on the myriad of strategies for face recognition. The development and analysis of the PCA algorithm are detailed in Chapter 3 and Chapter 4. The threshold selection and final tweaking of the face matching algorithm are detailed in Chapter 5. The structure and implementation of the real-time system are described in Chapter 6. The performance and results obtained from the system are examined in Chapter 7, followed [...]

Chapter 2

Background

A Ph.D. student by the name of Takeo Kanade, who is currently a Professor at Carnegie Mellon University, is considered to have designed and implemented the first face recognition system in 1973 [2]. Kanade used two computers to examine approximately 800 faces. His goal was to examine and extract the eyes, nose, and mouth of the subjects. His research into examining faces excited interest in facial image processing, leading to the development of facial recognition as it is known today.

Serious activity in face recognition began in the late 1980s and early 1990s. One of the major reasons for this was that processing power was finally reaching the point where large amounts of image processing could be done without significant waiting time. Early image processing was all done on still images stored on a hard drive. It was not possible to integrate the algorithms into real time because the system simply could not do it fast enough. As the amount of processing power increases, so does the ability to run more and more complex algorithms.

As computing power continues to increase, many of these algorithms can be placed into a real time video system which allows the process to move from static images to a dynamic environment. However, with this change a significant number of new factors come into play. The environment is no longer as controlled, and the lighting, pose [...]

2.1. Current Research in Video Face Recognition

Although significant amounts of research have been done on still image face recognition, research in video face recognition has been sparse according to a literature survey done by Zhao et al. [3]. Some of the notable approaches include the work done by Liu et al. [4] using adaptive hidden Markov models to model human faces in video sequences and Shaohua Kevin Zhou and Rama Chellappa's [5] work using mean dependent image groups to identify subjects. There are four primary reasons research in this area has been limited. Typical video environments have poor video quality, variations in illumination, significant changes in subject pose, and low image resolution. This combination of factors makes it difficult to obtain a gallery of image subjects which can be used for face recognition and system training.

In order to address the difficulties with video and image quality, a multiple camera system has been used to enable the maximum effective level of zoom to be selected for tracking a subject. Multiple camera systems have been shown to be effective for aiding in locating small features on a subject [6], yet have not been proven to be effective for face recognition. The combination of the two cameras at different zoom levels will allow subjects to be effectively monitored and tracked at high image quality.

Instead of obtaining an extensive gallery of subjects through the real time system, a database of still images will be used to create a gallery of subjects which will be used to train the system. The FERET database contains a large number of facial images which were captured in a controlled environment [7]. The variations present in subjects [...]

2.2. Key Attributes for the Face Detection Algorithm

In developing a real time face matching system, it is important to make sure that the desire for accuracy does not overcome the desire for speed. The combination of these two metrics determines the overall performance. Therefore, even though an algorithm may be very accurate, the processing time required per image must be weighed. If the processing time is simply too long, the performance of the system is unacceptable in practice.

Face matching with multiple cameras requires that the subject is looking at one camera, but can be detected with the other camera. Therefore, the face detection method must be able to recognize profile views of a subject's face in addition to a direct frontal view. In addition, the distance of the subject from the cameras is variable, requiring the method used to be able to compensate for faces of varying sizes. Lastly, since the subject is able to move freely while the system is functioning, the system must be able to detect subjects who are looking up or down. All of these variations require a face detection method that is versatile, yet very efficient. The detection of the face in the frame is but the first step in a very complicated process, and thus it cannot require too much processing time.

Face candidates will be located in the image using color segmentation. Color segmentation will allow the system to locate regions which contain a large amount of skin tones which should be examined to determine if the region is a face or not. For this reason, the face detection method will not be used across the entire image, but will be [...] HSV (Hue Saturation Value) color space and that image processing in this space is simple and effective [8,9]. A depiction of the spatial locality of skin tones in the HSV space is shown in Figure 1.

Figure 1 - Skin Tone Concentration in HSV Color Space
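The idea of color segmentation in HSV space can be sketched as a per-pixel test followed by a bounding box around the pixels that pass. This is only an illustrative sketch: the hue, saturation, and value bounds below are hypothetical placeholders rather than the thresholds used in the thesis, and the helper names `is_skin_tone`, `skin_mask`, and `bounding_box` are introduced here for the example.

```python
import colorsys

# Hypothetical skin-tone bounds in HSV; real values would be tuned on sample data.
# Hues that wrap around past red (close to 1.0) are ignored to keep the sketch short.
HUE_MAX = 50 / 360          # reddish-to-yellowish hues
SAT_RANGE = (0.15, 0.70)    # neither gray nor fully saturated
VAL_MIN = 0.35              # reject very dark pixels


def is_skin_tone(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the assumed skin region."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h <= HUE_MAX and SAT_RANGE[0] <= s <= SAT_RANGE[1] and v >= VAL_MIN


def skin_mask(image):
    """Map a 2-D list of (r, g, b) tuples to a 2-D list of booleans."""
    return [[is_skin_tone(*px) for px in row] for row in image]


def bounding_box(mask):
    """Smallest (top, left, bottom, right) box holding every True pixel, or None."""
    hits = [(y, x) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not hits:
        return None
    ys = [y for y, _ in hits]
    xs = [x for _, x in hits]
    return min(ys), min(xs), max(ys), max(xs)


# Tiny synthetic frame: a skin-colored blob on a blue background.
SKIN, BLUE = (224, 172, 138), (30, 60, 200)
frame = [
    [BLUE, BLUE, BLUE],
    [BLUE, SKIN, SKIN],
    [BLUE, SKIN, BLUE],
]
candidate = bounding_box(skin_mask(frame))
```

The resulting box marks a candidate region only. In the system described here, such a region would still have to be confirmed as a face by the detector.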

2.3. Face Detection Strategies

Template matching is one of the simplest methods for determining whether a face object is present. Template matching is a method which attempts to search for a surface that is similarly contoured to a face [10]. This is done by creating a surface whose intensity values match those predicted for a face. The eyes contain the darkest portions along with the mouth and nostrils, while the forehead and nose are the brightest portions. This template is scaled and placed over portions of the image in order to determine likely [...] can be used to detect areas in which the prominent edges match up with expected facial features. Although reasonable success at face detection can be obtained using this method, template matching is limited because it cannot account well for image rotations which deform the face and may result in a poor match. An example of template matching is shown in Figure 2 below.

Figure 2 - Average Face Template & Edge Template [10]
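Placing an intensity template over portions of an image can be sketched with normalized cross-correlation as the similarity score. The choice of normalized cross-correlation is an assumption made for this example, since the scoring rule of [10] is not stated here. The sketch also omits the template scaling mentioned above, and the names `ncc` and `best_match` are illustrative.

```python
import math


def ncc(patch, template):
    """Normalized cross-correlation of two equal-length flat intensity lists."""
    n = len(template)
    mean_p = sum(patch) / n
    mean_t = sum(template) / n
    dp = [p - mean_p for p in patch]
    dt = [t - mean_t for t in template]
    denom = math.sqrt(sum(a * a for a in dp) * sum(b * b for b in dt))
    if denom == 0:
        return 0.0  # flat patch or template: no contour to compare
    return sum(a * b for a, b in zip(dp, dt)) / denom


def best_match(image, template):
    """Slide the template over the image; return (score, top, left) of the best fit."""
    th, tw = len(template), len(template[0])
    flat_t = [v for row in template for v in row]
    best = (-2.0, 0, 0)  # NCC scores lie in [-1, 1], so -2 is always beaten
    for top in range(len(image) - th + 1):
        for left in range(len(image[0]) - tw + 1):
            patch = [image[top + y][left + x] for y in range(th) for x in range(tw)]
            best = max(best, (ncc(patch, flat_t), top, left))
    return best


# Toy template (dark and bright cells) and an image that contains a brighter
# copy of the same pattern at row 1, column 1.
template = [[10, 200],
            [200, 10]]
image = [
    [100, 100, 100, 100],
    [100,  30, 220, 100],
    [100, 220,  30, 100],
    [100, 100, 100, 100],
]
score, top, left = best_match(image, template)
```

Because the score subtracts each patch's mean and divides by its spread, a uniformly brighter or darker copy of the pattern still scores close to 1. A rotated face changes the pattern itself, which is the limitation noted above.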

A more complex method which is similar to template matching is 3-D face modeling [11]. This method uses a set of generic three-dimensional face objects and attempts to match different views of a person onto one of these fully three-dimensional objects. As an image is mapped onto the generic face object, it will be deformed to match the subject. The deforming process is done using a distance map which maps features on the image to the features expected on the 3-D model. Once created, the 3-D subject model will allow accurate representations of the subject's face independent of facial rotation. Unfortunately, due to its complexity it cannot be done in real time and it is not guaranteed that enough images of a subject will be available to create a complete 3-D face model.

Another approach to face detection is the use of support vector machines [12]. A [...] margin between sets of data. The support vector machine takes two sets of input data and attempts to classify the two types without being subject to overtraining. A graphical representation of how the SVM classifies linearly separable data is shown below in Figure 3. In the figure below, the dark dots are being separated from the light ones.

Figure 3 - SVM Classifying Model

In most cases, support vector machines are used to identify a particular object class by separating it from other possible objects. In the case of a face detecting SVM, it is attempting to find the optimal method of differentiating between a face object and the rest of the world. Support vector machines are generally quite effective, and can be trained using images selected by the user. The selection of a training set would allow the SVM to recognize faces at various poses. In addition, the input of the SVM must be regulated to a specific number of pixels. Therefore, any face region selected by the color segmentation algorithm would be resized, allowing the SVM to be independent to the [...]

The last face detection approach is the artificial neural network [13]. An artificial neural network (ANN) is a learning system that is motivated by the internal connections of the human brain. An ANN is made up of interconnected neurons called nodes which respond in parallel to a set of input signals. A neural network consists of an input layer of nodes and an output layer of nodes which the user interacts with. A number of hidden layers of nodes may be present in between these two layers to improve the accuracy of the system. Signals from the input layer are passed through the nodes and are subject to an activation rule. If the correspondence at the node is not high enough, it will not produce a positive output signal. Unfortunately, as the complexity of the network increases, the processing time required to compute the results of the network rises sharply. Artificial neural networks often require an extensive training set and may not be able to effectively capture the variability of faces present in the real time system.

Examination of the face detection methods outlined above shows that the support vector machine allows for the largest amount of variability while still maintaining efficient performance. Therefore, the support vector machine will be used for face detection in the real time system.
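How a support vector machine separates two classes of linearly separable data can be sketched with a small linear classifier. This sketch is not the detector used in the thesis: it trains with sub-gradient descent on the regularized hinge loss, a simple stand-in for the solver a real SVM library would use. The two-dimensional toy features, the hyperparameters, and the function names are all assumptions for illustration.

```python
def train_linear_svm(samples, labels, epochs=200, lr=0.01, reg=0.01):
    """Fit weights w and bias b by sub-gradient descent on the hinge loss.

    Labels must be +1 (for example "face") or -1 (for example "not a face").
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Sample is inside the margin: pull the boundary away from it.
                w = [wi + lr * (y * xi - reg * wi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Sample is safely classified: only apply the regularizer.
                w = [wi - lr * reg * wi for wi in w]
    return w, b


def classify(w, b, x):
    """Return +1 or -1 depending on which side of the boundary x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Toy, linearly separable data standing in for resized image regions.
faces = [[2, 2], [3, 3], [2, 3], [3, 2]]
non_faces = [[-2, -2], [-3, -3], [-2, -3], [-3, -2]]
samples = faces + non_faces
labels = [1] * len(faces) + [-1] * len(non_faces)

w, b = train_linear_svm(samples, labels)
predictions = [classify(w, b, x) for x in samples]
```

In the real system the feature vector would be the pixels of a candidate region resized to a fixed size, which is why the input must be regulated to a specific number of pixels.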

2.4. Key Attributes of the Face Classification Algorithm

When snapping a photograph or a series of photographs of a person, a large number of variations can come into play. The lighting, level of zoom, camera focus, and quality of the captured image can vary due to the characteristics of the camera selected. If the subject being photographed is not completely still, then there can be changes in the roll, [...] only roll can be compensated for in a photograph (or video frame). Figure 4 shows the relationship of these rotations. Due to the abundance of possible variations, the algorithm selected must be largely independent of these sources of error.

Figure 4 - Face Rotations (rotation labels: Yaw, Pitch, Roll)

With this in mind, the existing face classification algorithms must be evaluated based on three important criteria. These criteria are the accuracy of the algorithm, the algorithm's robustness in the face of error, and the processing time required.

2.5. Face Identification Strategies

A promising method for matching faces appears to be principal component analysis [14,15,16,17,18]. This method, pioneered by M. Turk and A. Pentland [16], uses objects which are called Eigenfaces. Eigenfaces are simply the Eigenvectors derived from a set of face images. The Eigenvectors represent a new set of coordinates, called an Eigenspace, which allow efficient encoding of the most important facial [...] onto the Eigenfaces. The sets of weights for two separate images can then be compared in order to assess the degree of correlation between the images. Images of the same person should have very high correlation. The use of Eigenfaces has recently started to emerge as the research standard for face recognition because it is quite reliable and not too computationally intensive.
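Comparing the sets of weights of two images can be sketched as follows. The example assumes a mean face and two unit-length, mutually orthogonal Eigenfaces are already available, using hand-picked four-pixel toy vectors. It uses Euclidean distance between weight vectors as the comparison, which is an assumption of this sketch. The helper names are illustrative.

```python
import math


def project(image, mean_face, eigenfaces):
    """Weights of a flattened image along each unit-length Eigenface."""
    centered = [p - m for p, m in zip(image, mean_face)]
    return [sum(c * e for c, e in zip(centered, face)) for face in eigenfaces]


def euclidean_distance(w1, w2):
    """Distance between two weight vectors; smaller means more similar."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(w1, w2)))


# Toy 4-pixel "images" with two hand-picked orthonormal Eigenfaces.
mean_face = [100, 100, 100, 100]
eigenfaces = [
    [0.5, 0.5, -0.5, -0.5],
    [0.5, -0.5, 0.5, -0.5],
]

target = [120, 110, 90, 80]          # stored image of the person of interest
same_person = [122, 108, 92, 82]     # new capture, slightly different
other_person = [80, 120, 80, 120]    # capture of someone else

w_target = project(target, mean_face, eigenfaces)
d_same = euclidean_distance(w_target, project(same_person, mean_face, eigenfaces))
d_other = euclidean_distance(w_target, project(other_person, mean_face, eigenfaces))
```

Only the short weight vectors are compared, not the full images, which keeps the comparison cheap enough for real-time use once the Eigenfaces are known.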

Researchers have been working on ways to improve the standard PCA algorithm and have succeeded in multiple ways for specific systems. One of the most promising methods is based on Linear Discriminant Analysis (LDA). LDA reduces the dimensionality of a face image in a similar manner to PCA, yet instead of producing orthogonal vectors, it produces vectors which linearly separate the data as much as possible [19]. Therefore, it theoretically produces a set of vectors which are more effective at face classification than Eigenfaces. The LDA vectors are similar to Eigenfaces and are often called Fisherfaces, after Ronald Fisher, who developed the underlying linear discriminant. Unfortunately, in order to train such a system to be more effective than PCA, all of the variables which will be encountered by test subjects must be in the training set. Such variables include lighting, pose angle, and expression. Since the system will be run in a dynamic environment, ensuring incorporation of all of the variables may be impossible.

Another method, Evolutionary Pursuit, attempts to directly improve PCA by manipulating the Eigenfaces themselves [20]. Evolutionary Pursuit (EP) requires an initial set of Eigenfaces generated by a standard PCA algorithm. The resulting Eigenfaces are then put through a large series of tests in which the vectors are rotated and the performance is measured. The performance of the resulting vectors is measured with [...] vectors discriminate well between faces, yet are still effective at matching a wide range of faces. While this method can generate vectors which are more effective than Eigenfaces, it is limited by the efficiency of the Eigenspace and the additional computation is not justified by the performance increase.

Independent Component Analysis (ICA) was initially developed to separate individual signals from a combination and can be employed for face recognition [21]. It decomposes a complicated signal into additive subcomponents which are statistically independent and was first used for face recognition by Bartlett and Sejnowski [22]. ICA differs from PCA in that it uses higher order moments in order to separate the data. PCA uses 2nd order moments and decorrelates the data. When used for face recognition, ICA assumes that a face is a combination of a set of unknown source images. These independent images can be used to identify a particular subject. While ICA has been shown to be more effective at distinguishing differences between images of a set, it does not generalize well to images which are not in the set.

Every human face has a unique, yet similar topological structure. A method which examines this structure in order to determine the identity of the face is Elastic Bunch Graph Matching (EBGM) [23]. In this system, faces are represented as graphs which have nodes at important features such as the eyes, nose, and mouth. The edges between the nodes are weighted with the distance between the nodes. In addition, each node contains information about its feature in the form of Gabor wavelets. While this method differs greatly from the other methods examined above, it does not achieve the

A final method, Bayesian statistics, has been used in conjunction with both Eigenfaces and Fisherfaces to improve the performance of the feature vectors in face classification. This approach uses a statistical classifier to help determine the importance of the components of the feature vector, allowing for more accurate face classification [24,25]. However, the use of Bayesian statistics is heavily dependent on the underlying feature set selection and can only marginally improve performance. Thus Bayesian statistics will not be employed in this thesis.

2.6. In Depth Look at Principal Component Analysis

The methodology behind principal component analysis (PCA) is related to the entropy of a system, where entropy is a measure of the amount of energy in a system.

PCA works by first gathering a large set of target images. The covariance of this image set is then found. The covariance is a measure of the relative variation of the images with respect to each other. With this information, the Eigenvectors and Eigenvalues of the system can be produced. The Eigenvectors form a coordinate system where an image, of predetermined size, can be located. If the set of Eigenvectors is complete, the image can be completely recreated from its location in this coordinate system. The advantage of using the Eigenvectors to describe the image is that the Eigenvectors are produced in such a way that the axes try to minimize the entropy of the system. Minimizing the entropy of the system allows the maximum amount of data to be encoded in the minimum amount of space. This means that if only a small number of the most important Eigenvectors, determined by larger Eigenvalues, are used, the majority of the image
will be hundred or so values which correspond to its coefficients in the Eigenspace. While a reconstructed image is not perfect, it contains the principal components of the face by which the person can be identified.

On a side note, if the Eigenvectors are viewed as images, they look like ghostly faces, and they are often referred to as Eigenfaces.

In order to determine the Eigenfaces, many steps must be taken. The general form of this process was developed by Matthew Turk and Alex Pentland [16]. The initial set of training images is defined as $\Gamma_1, \Gamma_2, \Gamma_3, \ldots, \Gamma_M$, where there are M images ready for processing. The average of these images is $\Psi$ and can be found by the equation

$$\Psi = \frac{1}{M} \sum_{N=1}^{M} \Gamma_N$$

Each face image will then be processed by subtracting the average image to create the new set of face images marked by $\Phi_N$, where $\Phi_N = \Gamma_N - \Psi$ and N = 1, 2, 3 ... M. The covariance matrix, C, of this set of images can be determined by

$$C = \frac{1}{M} \sum_{N=1}^{M} \Phi_N \Phi_N^T$$

The covariance is generally in the form of $C = AA^T$ and can be put into this form, up to the constant factor 1/M which does not change the Eigenvectors, if A is defined as the set of mean adjusted images $A = [\Phi_1, \Phi_2, \Phi_3 \ldots \Phi_M]$. With the covariance of the system defined, it is possible to find the Eigenvectors of the covariance matrix. The Eigenvectors of this matrix with the largest Eigenvalues correspond to the dimensions of the system with the strongest correlation in the dataset.

Unfortunately, the covariance matrix is of the size P x P where P is the number of pixels in the source images. For images of decent quality, 128 x 128 pixels, this matrix
needed. It is possible to find the Eigenvectors and Eigenvalues, $v_i$ and $\lambda_i$, of the matrix $A^TA$ such that $A^TAv_i = \lambda_i v_i$. If both sides of this equation are pre-multiplied by A, the equation becomes $AA^TAv_i = \lambda_i Av_i$. In this equation, it is obvious that $Av_i$ are the Eigenvectors of the matrix $AA^T$, which is the covariance matrix. The benefit of this method is that the size of the matrix $A^TA$ is not based upon the number of pixels, but on the number of images.

Therefore, with this method, the principal M Eigenvectors of a system can be determined, where M is the number of images used.

In order to make use of this information, a "scrambled covariance matrix" $C_s$ is calculated from the mean adjusted images, thus allowing $C_s = A^TA$. The Eigenvectors of this "scrambled covariance matrix" can be determined using singular value decomposition. These Eigenvectors must now be multiplied by the matrix A in order to get the principal Eigenvectors of the real covariance matrix. Since the matrix A is a listing of the images, this can be done through a process of summing the multiplication of the Eigenvectors and the images from the data set. The process to develop the real Eigenvectors of the system, $\mu_L$, therefore is

$$\mu_L = \sum_{K=1}^{M} v_{LK} \Phi_K$$

where L = 1, 2, 3 ... M, $v_{LK}$ is the Kth element of the Lth Eigenvector of $C_s$, and $\Phi_K$ is the Kth mean adjusted image. With this process complete, the Eigenfaces are simply the set of Eigenvectors $\mu_L$.

The Eigenfaces form a set of vectors which constitutes a new coordinate system through which distances can be measured. In order to get the coordinates of an image
in the image into the Eigenspace. This process is rather simple and involves the use of the inner product. It consists of subtracting the average image from the image to project and then multiplying by the Eigenfaces to obtain the set of weights. The weight vector has an entry corresponding to each Eigenface, and each entry is a floating point number. The creation of these weights is done by

$$\omega_K = \mu_K^T (\Gamma - \Psi)$$

where K = 1, 2, 3 ... M, $\Gamma$ is the image to project, and $\Psi$ is the average image. The weight vector is the set of coordinates by which the image can be located in the Eigenspace.
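The Eigenface construction and projection described in this section can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the thesis implementation: the function names `compute_eigenfaces` and `project` are hypothetical, the images are assumed to be already flattened into rows of equal length, `numpy.linalg.eigh` is swapped in for the singular value decomposition mentioned above (for a symmetric matrix such as $A^TA$ it should yield equivalent Eigenvectors up to sign), and the Eigenfaces are scaled to unit length as an added convenience.

```python
import numpy as np

def compute_eigenfaces(images, num_eigenfaces):
    """Hypothetical helper: images has shape (M, P), one flattened image per row.

    num_eigenfaces should be smaller than M, because the mean-adjusted set
    has rank at most M - 1 and the remaining directions carry no data.
    """
    gamma = np.asarray(images, dtype=np.float64)
    psi = gamma.mean(axis=0)                  # average image (Psi)
    A = (gamma - psi).T                       # P x M, columns are Phi_1 ... Phi_M
    C_s = A.T @ A                             # M x M "scrambled" covariance matrix
    eigenvalues, V = np.linalg.eigh(C_s)      # eigh returns ascending Eigenvalues
    order = np.argsort(eigenvalues)[::-1][:num_eigenfaces]
    eigenvalues, V = eigenvalues[order], V[:, order]
    U = A @ V                                 # column L equals sum_K v_LK * Phi_K
    U /= np.linalg.norm(U, axis=0)            # assumption: unit-length Eigenfaces
    return psi, U, eigenvalues

def project(image, psi, U):
    """Weights omega_K = mu_K^T (Gamma - Psi) for every Eigenface column of U."""
    return U.T @ (np.asarray(image, dtype=np.float64) - psi)
```

A call such as `psi, U, lam = compute_eigenfaces(images, 100)` followed by `project(new_image, psi, U)` would give the weight vector for a new image, provided the new image was prepared in the same way as the training images.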

Therefore, once the weights of two images have been determined, the "distance" between the two images can be calculated relative to the Eigenspace. There are many different distance metrics that can be used. The distance metrics that were examined are shown in the equations below [14].

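$$\mathrm{Dist}(x,y) = \sum_{i=1}^{k} |x_i - y_i|$$
Equation 1 - L1 Distance

$$\mathrm{Dist}(x,y) = \sum_{i=1}^{k} (x_i - y_i)^2$$
Equation 2 - L2 Distance

$$\mathrm{Dist}(x,y) = -\frac{\sum_{i=1}^{k} x_i y_i}{\sqrt{\sum_{i=1}^{k} x_i^2 \sum_{i=1}^{k} y_i^2}}$$
Equation 3 - Angle between Vectors

$$\mathrm{Dist}(x,y) = -\sum_{i=1}^{k} x_i y_i z_i$$
Equation 4 - Mahalanobis Distance

$$\mathrm{Dist}(x,y) = \sum_{i=1}^{k} |x_i - y_i| z_i$$
Equation 5 - L1 & Mahalanobis Distance

$$\mathrm{Dist}(x,y) = \sum_{i=1}^{k} (x_i - y_i)^2 z_i$$
Equation 6 - L2 & Mahalanobis Distance

$$\mathrm{Dist}(x,y) = -\frac{\sum_{i=1}^{k} x_i y_i z_i}{\sqrt{\sum_{i=1}^{k} x_i^2 \sum_{i=1}^{k} y_i^2}}$$
Equation 7 - Angle and Mahalanobis

where $z_i$ is a weighting factor computed from $\lambda_i$, the Eigenvalue of the ith Eigenvector.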

The distance measures above correspond to two vectors of weights corresponding to two separate images. The first image is assumed to be X where $x_i$ is the ith weight in the vector. The second image is assumed to be Y where $y_i$ is the ith weight in the second image's weight vector. Equation 1 shows that the L1 distance is simply the sum of the absolute value of differences between the weights. The L2 distance is similar to the L1 distance, yet it computes the total square error between the weights. While this norm is generally considered more useful than the L1 metric, both will be included in the tests.
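The seven distance measures labelled Equation 1 through Equation 7 can be sketched as small NumPy functions. This is a hedged illustration rather than the thesis code: the function names are hypothetical, and because the exact definition of the weighting factor z is not legible in this copy, `mahalanobis_weights` assumes the common convention $z_i = 1/\sqrt{\lambda_i}$. The similarity-style measures are negated so that, for every function, a smaller value means a closer match.

```python
import numpy as np

def mahalanobis_weights(eigenvalues):
    # ASSUMPTION: z_i = 1 / sqrt(lambda_i); the source definition is not legible.
    return 1.0 / np.sqrt(np.asarray(eigenvalues, dtype=np.float64))

def l1(x, y):
    return np.sum(np.abs(x - y))                 # sum of absolute differences

def l2(x, y):
    return np.sum((x - y) ** 2)                  # total squared error

def angle(x, y):
    # Negative cosine of the angle between the two weight vectors.
    return -np.dot(x, y) / np.sqrt(np.sum(x ** 2) * np.sum(y ** 2))

def mahalanobis(x, y, z):
    return -np.sum(x * y * z)

def l1_mahalanobis(x, y, z):
    return np.sum(np.abs(x - y) * z)

def l2_mahalanobis(x, y, z):
    return np.sum((x - y) ** 2 * z)

def angle_mahalanobis(x, y, z):
    return -np.sum(x * y * z) / np.sqrt(np.sum(x ** 2) * np.sum(y ** 2))
```

Each function expects two weight vectors of equal length as NumPy arrays; the weighted variants additionally take the vector `z`, one entry per Eigenface.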

The Mahalanobis distance metric takes into account the properties of the Eigenspace. It applies a weighting factor, z, to the system. This factor relates the Eigenvector with the strength by which it is correlated to the dataset. Therefore, Eigenvectors with higher correlation to the dataset should be weighted more heavily since they are more important. Thus, the Eigenvalues allow the distance metric to weight the distance based upon how important the difference between the two measures actually is.

The selection of principal component analysis as the algorithm for face matching
this method. The algorithm must, therefore, be adapted to obtain the maximum level of

Chapter 3

PCA Implementation

Developing an implementation of the PCA algorithm was no simple task. In order to obtain an accurate set of Eigenfaces and successfully test the system, over 600 images were individually prepared by hand. The algorithm was then implemented and tested while varying many parameters in order to obtain efficient, yet accurate results.

3.1. Source Images

In order to obtain the sheer volume of images needed to develop, train, and test the system, a database of faces was necessary. One such database is the FERET database [26]. The FERET database was sponsored by the Face Recognition Technology (FERET) program which was supported by the Department of Defense's Counterdrug Technology Program. The goal of the FERET program was to develop automatic face recognition technologies that could be used to assist the law enforcement community.

In order to accomplish this task, a large database of face images was gathered by professors at George Mason University, independently of the algorithm developers. The images were collected in a partially controlled environment in order to maintain a degree of consistency. The same setup was used in each photography session, yet the images were collected on many different days with slightly different equipment.

The database was fully assembled after fifteen sessions which took place between the fall of 1993 and the spring of 1996. The FERET database totals more than fourteen thousand images in about fifteen hundred sets. Over one thousand subjects were

In order to develop a cohesive set of Eigenfaces, the images in the database were examined and selected. Since the objective of the testing would be to match an image of a subject to another image of the same subject, two images of each individual were needed. The images had to be similar, yet not identical. Images with variations in facial pose angles, expression, and lighting were acceptable candidates. Images with variations in facial hair, glasses, or severe differences were discarded. The images were then grouped into three distinct datasets.

The datasets were determined by the perceived level of differences in the images. The first dataset contained pairs of images which were deemed the most similar to each other. The images in this set were also taken at the same level of zoom and lighting, and had small variations in facial pose angles. The second set consisted of images with slightly larger variations. The images in the third set were considered the ones with the greatest variation. Images in this set varied by zoom level, lighting, larger facial pose angles, and more extreme expressions. It was this third set that was intended to measure the generalization of the algorithm developed. This set is also the most important, since once the algorithm is integrated into the real time camera system, all of these variations would come into play.

Data Sets 1 and 2 each consisted of 100 subjects, fifty of which were male and fifty of which were female. Data Set 3 consisted of 77 subjects, 51 of which were male and 26 of which were female. There were two images of each subject selected and no subject appeared in more than one data set. The images were finally cropped in a

3.2. PCA Algorithm

In order to calculate the Eigenfaces, the program input requires a listing of images, the desired width and length of the Eigenfaces, and the desired number of Eigenfaces. In order to produce solid Eigenface images, the source images must be standardized in the same way. A flowchart of the steps taken is shown below in Figure 5.

[Flowchart steps: Load Listing File; Load Image; Convert to Grayscale; Resize; Enough Images Loaded? (No: Load Image; Yes: Calculate Covariance); Calculate Eigenvectors; Calculate Eigenfaces; Save to File]

Figure 5 - Eigenface Creation Flowchart

The covariance of the image set relies upon the important features from different subjects matching up in the same pixel locations. This means that each of the source images should be cropped in the same manner, centered on the eyes, and rotated so that the face image contains no more than 15 degrees of roll. For initial training and development purposes this was all done by hand. The faces were cropped at the top of the forehead, bottom of the chin, and at the base of the ears for over 500 faces. If the face had more than 15 degrees of roll, it was digitally rotated so that the roll was as close to zero as possible.

After all of the images were cropped, listings of the images were created so that
created with the full name of the images desired with a single filename per line in alphabetical order. This simplified the process of reading in the images, counting them, and most importantly, keeping track of which faces were matches.

The images are then read into the system one at a time with each image being processed before being added to a large input image array. The processing of the images is relatively simple. Each image was resized to a standard width and length in pixels. Once this was completed, the image was normalized to help reduce the effect of lighting upon the image. At the completion of this step, the system contained an array of up to 500 images which had been properly processed.
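The per-image processing described above (resize, then normalize, then append to the input array) might look roughly like the following sketch. Everything named here is an assumption made for illustration: `resize_nearest`, `contrast_stretch`, and `prepare` are hypothetical helpers, the input is assumed to be a 2-D grayscale array already, nearest-neighbour sampling stands in for whatever resizing the original system used, and contrast stretching (one of the normalization methods evaluated in Section 4.1.1) stands in for the normalization step.

```python
import numpy as np

def resize_nearest(image, height, width):
    """Nearest-neighbour resize of a 2-D grayscale array (illustrative only)."""
    rows = np.arange(height) * image.shape[0] // height
    cols = np.arange(width) * image.shape[1] // width
    return image[rows[:, None], cols]

def contrast_stretch(image):
    """Linearly map the darkest pixel to 0 and the brightest pixel to 255."""
    img = image.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:                      # flat image: nothing to stretch
        return np.zeros_like(img)
    return (img - lo) * 255.0 / (hi - lo)

def prepare(image, height=128, width=128):
    """Resize, normalize, and flatten one image into a row for the input array."""
    return contrast_stretch(resize_nearest(image, height, width)).ravel()
```

Stacking the rows returned by `prepare` for every file in the listing, for example with `np.stack`, would give the M x P array of processed images.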

In order to determine the Eigenvectors of the images, a standard covariance matrix could not be used since the covariance matrix of a set of images sized at 128 by 128 pixels would contain over 250 million entries ($2^{28}$). This data would be nearly impossible to contend with. It is for this reason that the "scrambled" covariance matrix is calculated as mentioned in Section 2.6. The scrambled covariance matrix is only M by M, where M is the number of input images, so its size does not depend on the number of pixels in the input images. From this covariance matrix a set of Eigenvectors is determined along with the set of corresponding Eigenvalues. The Eigenvectors are used to create the Eigenfaces through a process of scaling and adding the initial images to one another as described in Section 2.3. The resulting Eigenface vectors are stored to a text file for later retrieval and use.
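The size difference between the two matrices can be checked with a little arithmetic. The sketch below is illustrative only: the helper names are hypothetical, the M x M size of the scrambled matrix follows the derivation in Section 2.6, the figure of 200 training images is the one quoted in Section 3.4, and the memory estimate assumes 4-byte (32 bit) floating point entries.

```python
def covariance_entries(width, height):
    """Entries in the full P x P covariance matrix, with P = width * height."""
    pixels = width * height
    return pixels * pixels

def scrambled_entries(num_images):
    """Entries in the M x M scrambled covariance matrix."""
    return num_images * num_images

full = covariance_entries(128, 128)     # 16384 * 16384 = 2**28 = 268,435,456
small = scrambled_entries(200)          # 200 * 200 = 40,000
full_bytes = full * 4                   # 2**30 bytes, i.e. 1 GiB at 4 bytes each
```

Under these assumptions the scrambled matrix is several thousand times smaller than the full covariance matrix.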

In order to effectively use the PCA algorithm, Eigenfaces should be created and used at the same resolution. Resizing the Eigenface objects creates distortions which
addition, any image which is going to be used in the Eigenspace should be processed in exactly the same manner as the images which were used in the creation of the Eigenfaces.

3.2.1 Difficulties

There are many challenges inherent in developing a successful set of Eigenfaces. The first and foremost among them is the need for clear, pronounced, relevant Eigenfaces. The only way to achieve such results is to use a large number of faces which have been cropped and rotated in the same manner so that every principal component of the different face images lines up with each other.

Unfortunately, it is nearly impossible to crop each image by hand in exactly the same manner. To make matters worse, the pitch and yaw of the face in the images cannot be controlled. While these slight deviations do not matter to the human eye, they have unknown and possibly profound effects upon the creation of the Eigenfaces.

Each error in cropping or poor image selection potentially introduces error into the development of the Eigenfaces. If enough error is introduced, an entire Eigenface could be devoted to it, reducing the effectiveness of the other Eigenfaces. Unfortunately, a visual examination of the Eigenfaces cannot reveal if one is valid or not. Furthermore, when the matching procedure fails to match a set of faces it is often extremely difficult to

3.2.2 Limitations

There are many limitations placed upon the effectiveness of the Eigenfaces based upon their desired use. For this system, the Eigenfaces are being designed for the identification of the principal components of a subject's face and not for directly identifying faces. This means that the Eigenfaces are always assuming a frontal and non-rotated view of the face and cannot work effectively with a side view of the face. Eigenfaces are generally trained with tilted faces as well as frontal views to allow them to recognize faces that are tilted as face objects. In this work it is assumed that the face will already be identified before it is passed to the Eigenspace for calculations. It is also desired that the pose angle of the face should not play an important factor in identifying whether or not the face matches a particular subject. This line of reasoning clearly leads to the fact that in training the Eigenfaces, it is desired that no principal components in the Eigenspace correspond to the angle of the face. If too many of the Eigenfaces correspond to the angle of the face instead of the facial features, the system will match subjects whose heads are posed in the same manner.

Another limitation of the algorithm is that it is significantly more dependent upon lighting variation than previously reported. While this is not surprising in retrospect, it is still a difficult issue to handle. Research showed that an effective Eigenspace was relatively independent of the light source, as long as there was enough light to view the face. For the purposes of matching specific faces, the camera needs to produce an image with crisp, clear features instead of just a general view that can be identified as a face.
intensity and angle of the light. This means that the environment needs to be more controlled than initially believed if performance is to be maintained. It also may have an effect on how the algorithm generalizes across different environments.

A third important limit of the algorithm is that the ears, a rather distinct feature on most people, cannot be used to identify the face without serious restrictions placed upon the camera system's environment. The reason for this is that the ears stick out from the face and therefore create pockets above and below them, in a cropped image, which contain a view of the background. Since the background is ever-changing, this adds a significant amount of noise to the processed image and clouds the Eigenfaces. This could be avoided by putting the camera system into a room where everything was featureless and white, but that would render the system impractical. It was found during early testing that this was the case, hence it is desirable to crop the face at the base of the ears.

Finally, the goal of face matching is not to foil disguises, but to identify a more cooperative subject. In this regard, disturbances such as removing glasses, modifying facial hair, and other face occlusions were not trained into the Eigenfaces. It is desired that the system work on effectively matching clear facial images. To this end, facial occlusions were not present in any of the training images and were not taken into account in the final system design.

3.3. Distance Measure Implementation & Testing

Previous research showed that there exist many different ways to measure the distance between two images which had been projected into an Eigenspace [14]. While
instead of selecting the best resulting measure from another experiment. The reason for this decision was that the Eigenfaces were trained specifically for this system, focusing on certain variables and being used in a unique way. The effectiveness of the distance measures would most likely vary, since so much of the system would not remain the same. In order to test each of the distance measures, a systematic process of examining the faces was developed.

The method developed allowed a large set of images to be examined. In the set each subject was represented by two images. The process functioned by taking the first image and calculating its distance to each of the other images in the set. The image which was closest, yet not identical, was considered the best match. The system displayed the initial image and the two best matches for each of the distance metrics, to allow visual confirmation of the accuracy of the statistical measure being calculated. The system would record whether or not the images selected were correct matches. After the calculations were complete the system would pause momentarily and then move on to the next image. This pattern would continue until the entire set of images had been processed. The resulting statistical information was logged to a file.
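The matching test described above amounts to a leave-one-out nearest-neighbour check. A minimal sketch follows, assuming the images have already been projected into weight vectors; the function name `match_accuracy`, the `subject_ids` labelling, and the pluggable `distance` callable are hypothetical conveniences, and the display and logging steps of the real system are omitted.

```python
import numpy as np

def match_accuracy(weights, subject_ids, distance):
    """Fraction of images whose closest other image shows the same subject.

    weights:     array of shape (N, K), one weight vector per image
    subject_ids: length-N sequence, equal ids mark images of the same subject
    distance:    callable taking two weight vectors, smaller means closer
    """
    weights = np.asarray(weights, dtype=np.float64)
    correct = 0
    for i in range(len(weights)):
        best_j, best_d = None, np.inf
        for j in range(len(weights)):
            if j == i:                       # never match an image to itself
                continue
            d = distance(weights[i], weights[j])
            if d < best_d:
                best_j, best_d = j, d
        if subject_ids[best_j] == subject_ids[i]:
            correct += 1
    return correct / len(weights)
```

Running this once per distance metric, with all other parameters held fixed, would give the kind of per-metric accuracy figures the testing procedure records.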

In order to gather useful statistical information, only one parameter was varied at a time. The test results for each of the variables are displayed in Section 4.1. The important variables in the testing were:

- Distance Metric Selection
- The Normalization Method
- Number of Images per Subject
- The Number of Eigenfaces to Keep
- The Effectiveness of the First Eigenface
- The Effectiveness of Masking the Image
- The Effectiveness of Male vs. Female Eigenfaces

The number of Eigenfaces to keep was an important variable because while the first few Eigenfaces are relatively clear, the last Eigenfaces are significantly distorted by noise. As a result, it is important to determine the point at which an Eigenface becomes useless because it no longer contains any valuable data. In addition to this, the effectiveness of the first Eigenface is called into question. The first few Eigenfaces, it is generally believed, are related to the most prominent angles of lighting in the image set [14]. Therefore, it may be wise to remove these images since it is desired that the Eigenspace is unaffected by the lighting conditions.

A second important variable is whether or not to mask the corners of the image to remove noise. While this is a pretty standard practice, once again the focus of the Eigenfaces being developed is different and may require a different approach. The primary concern of the Eigenfaces, for this system, is to be able to characterize the principal components of the face. To this end, the image was cropped very closely about the face and contains very little of the noisy background scene. This makes it possible that masking the image would remove important portions of the image instead of aiding in removing noise from the image. The use of a mask could also add unexpected

Lastly, an intriguing question arose. The human visual system can easily recognize the difference between male and female faces. Would it be useful to develop Eigenfaces which could be specifically male or female? This would be possible in this system, since user input is required to select the face that is desired for matching. It would be a simple matter to force the user to input a gender with the desired face. Despite the seemingly intuitive nature of this question, very little information seemed to be available on the wisdom of such an approach.

3.4. Distance Measure Results & Selection

After some initial testing to ensure that the Eigenface testing algorithm was working correctly, testing began to determine the most effective distance measurement. With so many variables, taking measurements with more than two different distance metrics would simply create too much data and begin to cause confusion.

In order to draw a useful conclusion, the algorithm was set up in a manner similar to what was found in other papers. The image size was set at 128 x 128 pixels, the images were normalized with histogram equalization, 200 images of unique subjects were used to train the Eigenfaces, and 100 Eigenfaces were used in the distance calculations.

[Chart: Comparison of Measurement Metrics; Training Set - Data Set 1; horizontal axis: Distance Metrics (Angle, Mahal, L1 & M, L2 & M, M & A)]

Figure 6 - Distance Metric Testing Results

While the L1 distance metric scored the highest in the first data set, it was not selected because it did not appear to generalize well across the different data sets. Since the Eigenspace is intended for a real-life, dynamic environment, the most weight was put on the accuracy of the results in measuring Data Set 3. The L1 & Mahalanobis distance metric scored the highest in Data Set 3 and had the highest average score. The tight grouping of the scores and the average above 85% matching accuracy gave it the definitive lead in the selection of the distance metric. The L1, L2, Mahalanobis, L1 & M, and L2 & M metrics are all highly correlated measurements. Since the L1 & Mahalanobis metric was the most successful of these five, the second metric to test was selected as the Mahalanobis & Angle measurement. This measurement scored the second highest for the first data set and was better than the Angle measurement alone.

3.5. Eigenfaces

In order to compute the Eigenfaces for a particular set of data images, the average
in the data set before processing begins. The average face for Data Set 1 is shown below in Figure 7.

Figure 7 - Average Face

Almost all of the calculations in regards to developing the covariance matrix, Eigenvectors, and the resulting Eigenfaces are done with 32 bit floating point precision. In order to maintain this level of accuracy, all Eigenfaces and information stored in the database files for future use are also stored in the 32 bit floating point format. In order to view the Eigenfaces, however, it is necessary to scale them to the standard 8 bit grayscale version suitable for a bitmap image. To this effect, when saving or viewing an Eigenface through the system, a linear scaling is done whereby the lowest value is scaled to 0 and the highest to 255. This scaling has been performed on the first five Eigenfaces and can be seen in Figure 8.

Figure 8 - The First Five Eigenfaces
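The linear scaling used for viewing an Eigenface can be sketched as follows. The function name `to_uint8` is hypothetical, and rounding to the nearest integer is an assumption, since the text only states that the lowest value maps to 0 and the highest to 255.

```python
import numpy as np

def to_uint8(eigenface):
    """Linearly rescale a floating point Eigenface to the 8 bit range 0..255."""
    ef = np.asarray(eigenface, dtype=np.float32)   # stored as 32 bit floats
    lo, hi = ef.min(), ef.max()
    if hi == lo:                                   # constant image: avoid dividing by zero
        return np.zeros(ef.shape, dtype=np.uint8)
    scaled = (ef - lo) / (hi - lo) * 255.0         # lowest -> 0, highest -> 255
    return np.round(scaled).astype(np.uint8)       # assumption: round to nearest
```

The stored 32 bit values are left untouched; only the copy produced for display or for saving as a bitmap is quantized.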

The first Eigenfaces are relatively clear as intended. The features seem to match up quite well and a distinct set of eyes, a nose, and a mouth are clearly visible in each of them. An important feature to note is the general lack of extremes and how the contours on the Eigenfaces appear as one would expect. A good feature to note is the lack of strange artifacts or noise in the corners of the images. The corners of the image contain background image and are not important facial feature data. While it is generally very difficult to determine what principal components each Eigenface characterizes, the second and third Eigenface appear to be related to the horizontal and vertical lighting of the subject. This may be important when moving the algorithm into the real-time system where lighting will by no means be constant.

As the order of the Eigenfaces increases, and their respective Eigenvalues decrease, the images become noisier. This progression is expected because the size of the Eigenvalue determines the relevance of the corresponding Eigenface. Therefore as the Eigenvalue decreases, the corresponding Eigenface should be capturing less significant

Figure 9 - Eigenfaces 50 & 51, 100 & 101, and 150

The 50th and 51st Eigenfaces are still relatively clear face images, but the clarity rapidly continues to degrade until the 150th Eigenface only hints at facial features. While the later Eigenfaces may appear to be worthless images containing largely noise, a

Chapter 4

Evaluation of Principal Component Analysis

The data presented in this section was recorded after initial testing confirmed that the principal component analysis algorithm was working. It was also confirmed that the testing and statistical measurement aspect of the algorithm was working as well. The initial results will not be presented, however, since the data does not reflect the final implementation of the algorithm.

While it is often accepted practice to use square images to train and produce the Eigenfaces, it seemed prudent to test the images in a more natural shape for a face as well. To this end, the average length and width of the cropped face images were measured and calculated. It was found that the cropped images were in the ratio of 1.38 to 1 for the height with respect to the width. Using this ratio, several image sizes were selected and subsequently tested.

In order to examine the effectiveness of the system, and how the algorithm generalized across data sets, the system was trained and tested with both Data Set 1 and Data Set 2. The results for the two different training sets were then examined together to help determine the best method for training the final Eigenfaces.

4.1. Data Gathered

In the following section, results were obtained to allow the PCA algorithm to be optimized to produce Eigenfaces which would be effective in the real time implementation. Data was gathered for each of the points of interest outlined in Section

4.1.1 Image Size and Normalization Method

In order to examine the effects of normalization on the images, the first three tests were done using equalization, contrast stretching, and no normalization. Equalization, or histogram equalization, is an image transformation whereby the image is adjusted so that each intensity level is represented by an equal number of pixels in the image [27]. In most cases, this improves the contrast in the image. Unfortunately, it can also cause imperfections in the image to stand out. Contrast stretching attempts to adjust the code values in the image so that a larger range of intensities is used. The new intensity of the pixel can be calculated by

$$I_N(x,y) = \left(I(x,y) - \mathrm{Min}\right)\left(\frac{255}{\mathrm{Max} - \mathrm{Min}}\right)$$

for standard grayscale images. Max and Min are the maximum and minimum intensity levels in the image. For example, if all of the pixels in an 8 bit image fall in the range of 20 to 200, applying contrast stretching linearly scales the pixel values to be in the range of 0 to 255. This increase of seventy five levels of image intensity allows for a better defined image.

The results for the normalization tests are shown b