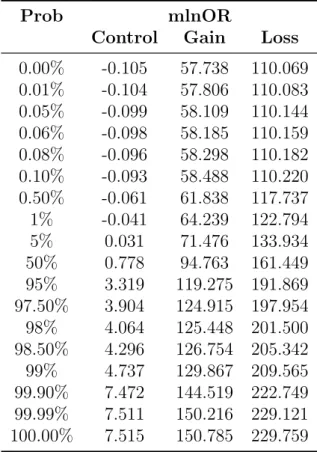

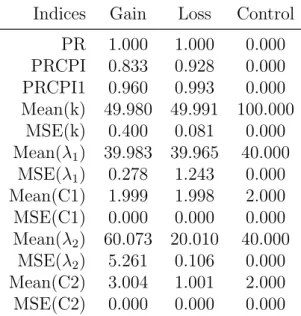

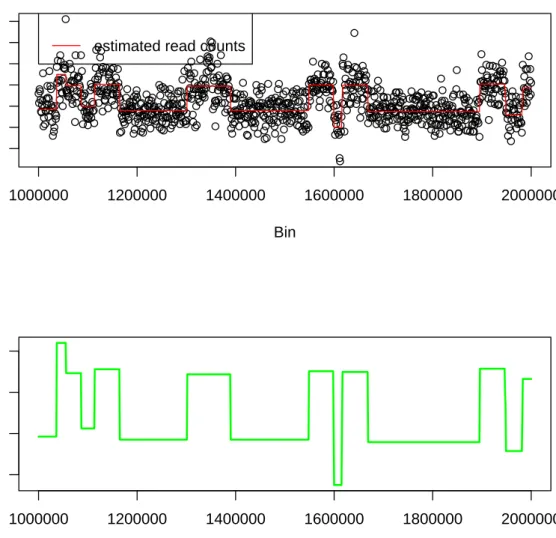

Bayesian Change Point Analysis of Copy Number Variants Using Human Next Generation Sequencing Data
