International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, ISO 9001:2008 Certified Journal, Volume 3, Issue 2, February 2013)


Multi-Objective Genetic Algorithm: A Comprehensive Survey

Er. Ashis Kumar Mishra¹, Er. Yogomaya Mohapatra², Er. Anil Kumar Mishra³

¹Department of Computer Science & Engineering, College of Engineering & Technology, Bhubaneswar; ²,³Department of Computer Science & Engineering, Orissa Engineering College, Bhubaneswar

Abstract— Genetic algorithms can find multiple optimal solutions in a single simulation run because of their population-based approach. Genetic algorithms are therefore ideal candidates for solving multi-objective optimization problems. This paper surveys most of the multi-objective evolutionary algorithm (EA) approaches suggested since such algorithms were first proposed, and provides an extensive discussion of the principles of multi-objective genetic algorithms and their use in solving several problems.

Keywords— Evolutionary algorithm, Genetic algorithm, optimization, Single-objective, multi-objective genetic algorithm, Pareto optimal solution, Pareto front.

I. INTRODUCTION

Optimization refers to finding one or more feasible solutions that correspond to extreme values of one or more objectives. It is a procedure of finding and comparing feasible solutions until no better solution can be found. Solutions are termed good or bad in terms of an objective, which is often the cost of fabrication, the amount of harmful gases, the efficiency of a process, product reliability, or other factors. A significant portion of research and application in the field of optimization considers a single objective, although most real-world problems involve more than one objective. The presence of multiple conflicting objectives (such as simultaneously minimizing the cost of a product and maximizing its comfort) is natural in many problems and makes the optimization problem interesting to solve. Since no single solution can be termed optimal with respect to multiple conflicting objectives, a multi-objective optimization problem gives rise to a number of trade-off optimal solutions. Evolutionary algorithms (EAs), on the other hand, can find multiple optimal solutions in a single simulation run because of their population-based approach. EAs are therefore ideal candidates for solving multi-objective optimization problems. This paper surveys most of the multi-objective EA approaches suggested since such algorithms were first proposed and provides an extensive discussion of the principles of multi-objective genetic algorithms.

II. EVOLUTIONARY ALGORITHMS


Other advantages of evolutionary algorithms are that they require very little knowledge about the problem being solved, are easy to implement, are robust, and can be implemented in a parallel environment.

Figure 1: Flow chart of an evolutionary algorithm

A. Types of Evolutionary Algorithm

There are three types of evolutionary algorithms:

1. Evolutionary strategy
2. Genetic algorithm
3. Genetic programming

This paper is mainly concerned with genetic algorithms, of which there are two types:

1. Single-objective genetic algorithm
2. Multi-objective genetic algorithm

Both are used to obtain optimal solutions.

B. Genetic Algorithm

Genetic algorithms are based on the concepts of natural selection and the evolutionary process. A genetic algorithm usually keeps a set of points known as a population: it [3] maintains a pool of solutions that evolve in parallel over time. In each generation, the genetic algorithm constructs a new population using genetic operators such as selection, crossover and mutation. The major components [13] of a genetic algorithm are:

1. Encoding scheme: This step transforms points in the parameter space into a bit-string representation known as a chromosome.

Example: 0 1 0 1 1 1 0

2. Fitness evaluation: After encoding, the fitness of each member is calculated by substituting its (decoded) values into the objective function.

3. Selection: After the fitness of the individuals has been evaluated, suitable individuals are selected, on the basis of their fitness, for the next generation.

4. Crossover: To exploit the potential of the gene pool, a crossover operation is used to generate new chromosomes. Crossover is the operator that gives genetic algorithms their strength; it allows different solutions to share information with each other. It operates on a pair of parent chromosomes.

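Example: 1 1 0 1 0 0 (parent 1)

0 1 1 1 0 1 (parent 2)

If two-point crossover is applied to these two strings, with the exchanged segment running from the third bit to the end of the string, the resulting children are:

1 1 1 1 0 1 (child 1)

0 1 0 1 0 0 (child 2)

Here each child keeps the first two bits of one parent and takes the remaining four bits from the other; equivalently, the first two bits of parent 1 and parent 2 are swapped. Because the second cut point falls at the end of the string, this particular case coincides with single-point crossover after the second bit.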

5. Mutation: Crossover exploits the current gene pool, but if the pool does not contain all the information needed, a mutation operator is used to generate new chromosomes. The most common way of implementing mutation is to flip each bit with a probability equal to a very low, given mutation rate.

Example: 0 0 1 1 1 0

If mutation flips the last bit of this chromosome from 0 to 1, the resulting chromosome is 0 0 1 1 1 1.
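The crossover and mutation examples above can be reproduced with the short sketch below. It is an illustration under our own assumptions, not the authors' code: chromosomes are Python lists of 0/1 integers, cut points are zero-based with the segment [a, b) exchanged, and the function names `two_point_crossover`, `flip_bit` and `bit_flip_mutation` are hypothetical.

```python
import random
from typing import List, Tuple

Chromosome = List[int]  # a bit string stored as a list of 0/1 integers


def two_point_crossover(p1: Chromosome, p2: Chromosome, a: int, b: int) -> Tuple[Chromosome, Chromosome]:
    """Exchange the segment [a, b) (zero-based cut points) between two parents."""
    if len(p1) != len(p2) or not 0 <= a <= b <= len(p1):
        raise ValueError("parents must have equal length and 0 <= a <= b <= length")
    child1 = p1[:a] + p2[a:b] + p1[b:]
    child2 = p2[:a] + p1[a:b] + p2[b:]
    return child1, child2


def flip_bit(chrom: Chromosome, i: int) -> Chromosome:
    """Deterministically flip bit i (used here to reproduce the worked example)."""
    out = list(chrom)
    out[i] = 1 - out[i]
    return out


def bit_flip_mutation(chrom: Chromosome, rate: float, rng: random.Random) -> Chromosome:
    """Flip each bit independently with probability `rate` (typically small)."""
    return [1 - g if rng.random() < rate else g for g in chrom]


if __name__ == "__main__":
    parent1 = [1, 1, 0, 1, 0, 0]
    parent2 = [0, 1, 1, 1, 0, 1]
    # Cut points 2 and 6: the segment from the third bit to the end is exchanged.
    print(two_point_crossover(parent1, parent2, 2, 6))  # ([1, 1, 1, 1, 0, 1], [0, 1, 0, 1, 0, 0])
    print(flip_bit([0, 0, 1, 1, 1, 0], 5))              # [0, 0, 1, 1, 1, 1]
    print(bit_flip_mutation(parent1, 0.01, random.Random(42)))
```

In a full GA loop, `bit_flip_mutation` with a small rate would replace the deterministic `flip_bit`, which exists only to match the example in the text.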

III. OPTIMIZATION

Optimization refers to finding one or more feasible solutions that correspond to extreme values of one or more objectives. Optimization tools should be used to support decisions rather than to make them, i.e. they should not substitute for the decision-making process. There are two types of optimization: single-objective optimization and multi-objective optimization.

[Figure 1 (flow chart, partially recovered): Start → Initialize population → Fitness evaluation → Solution found → Stop]


A. Single-Objective Optimization

When an optimization problem modeling a physical system involves only one objective function, the task of finding the lone optimal solution is called single-objective optimization, and the problem is called a single-objective optimization problem (SOP). The main goal of single-objective optimization is to find the “best” solution, which corresponds to the minimum or maximum value of a single objective function that lumps all the different objectives into one. This type of optimization is useful as a tool that gives decision makers insight into the nature of the problem, but it usually cannot provide a set of alternative solutions that trade different objectives against each other.

B. Multi-Objective Optimization

When an optimization problem involves more than one competing or conflicting objective function, the task of finding one or more optimum solutions is known as multi-objective optimization, and the problem is called a multi-objective optimization problem (MOP). Most real-world search and optimization problems naturally involve multiple objectives. Different solutions may produce trade-offs (conflicting scenarios) among the objectives: a solution that is better with respect to one objective requires a compromise in other objectives. In a multi-objective optimization problem [5] with conflicting objectives, there is no single optimal solution. The interaction among the objectives gives rise to a set of compromise solutions, widely known as trade-off solutions.

1. Some Real-World Examples of MOPs

Purchase of a car from a showroom. Conflicting objectives: maximize comfort factor; minimize purchase cost.

Product design of an appliance in a factory. Conflicting objectives: maximize performance (accuracy, reliability, etc.); minimize production cost.

Allocation of facilities in hospitals. Conflicting objectives: maximize attention time to patients; minimize time to provide facilities to patients; minimize the cost of operations.

Economic load dispatch in power systems. Conflicting objectives: minimize fuel cost; minimize total real power loss; minimize emission.

Land use management. Conflicting objectives: maximize economic return; maximize carbon sequestration; minimize soil erosion.

Polynomial Neural Network (PNN). Conflicting objectives: minimize architectural complexity; maximize classification accuracy.

Predictive data mining classification tasks. Conflicting objectives: maximize predictive accuracy of the classification model; minimize complexity (size) of the model.

An example of an MOP, the widely used car-buying decision-making problem, is explained in detail below.


1. If a preference-based procedure is followed to find a single final solution for an MOP, higher-level information is used at the start to decide the values of the weights for the objective functions.

2. If the ideal procedure is followed to find a single final solution for an MOP, this information is used later to choose a single solution from the set of Pareto-optimal solutions.

For the car-buying decision-making problem above, such higher-level information includes:

• Total finance available to buy the car
• Distance to be driven each day
• Number of passengers riding in the car
• Fuel consumption and cost
• Depreciation value
• Road conditions where the car is mostly to be driven
• Physical health of the passengers
• Social status, and many other factors

By considering this higher-level information, one can finally decide on a particular model of car to purchase.

C. Differences between Single-Objective and Multi-Objective Optimization

Besides having multiple objectives, multi-objective optimization differs from single-objective optimization in a number of fundamental ways:

1. Two goals instead of one.
2. Dealing with two search spaces.

These are discussed in the following subsections.

1. Two goals instead of one:

In single-objective optimization, there is one goal: the search for an optimum solution. Although the search space may have a number of local optimal solutions, the goal is always to find the global optimum solution. There is one exception: in multi-modal optimization, the goal is to find a number of local and global optimal solutions instead of a single optimum. In multi-objective optimization, however, there are clearly two goals. Progressing towards the Pareto-optimal front is certainly one important goal, but maintaining a diverse set of solutions in the non-dominated front is also essential.

2. Dealing with two search spaces:

Multi-objective optimization involves two search spaces instead of one. In single-objective optimization, there is only one search space: the decision variable space. An algorithm works in this space by accepting and rejecting solutions based on their objective function values [5]. In multi-objective optimization, in addition to the decision variable space, there also exists the objective (or criterion) space.

In any optimization algorithm, the search is performed in the decision variable space. However, the progress of an algorithm in the decision variable space can be traced in the objective space.

IV. MULTI-OBJECTIVE GA

Single-objective optimization can be used within the multi-objective framework. Most real-world search and optimization problems involve multiple objectives, and multi-objective optimization is considered an application of single-objective optimization to handling multiple objectives. When only a single objective is optimized [1] [5], the other objectives may have to be compromised, whereas multi-objective optimization can yield better solutions because it considers all the objectives.

In the car-buying decision-making problem mentioned above, solution 1 and solution 2 are both optimal. If a buyer is willing to sacrifice cost to some extent, he will choose solution 1, but if the buyer wants better comfort, he will choose solution 2. Thus any sacrifice in cost is tied to comfort.

Now the question arises: with all of these trade-off solutions in mind, can one say which solution is best with respect to both objectives? The answer is that none of these trade-off solutions is best with respect to both objectives, because no solution in this set makes both objectives (cost and comfort) better than any other solution in the set. Thus, in problems with more than one conflicting objective, there is no single optimum solution; instead, there exist a number of solutions that are optimal. Without further information, no solution from the set of optimal solutions can be said to be better than any other. Since a number of solutions are optimal, many such trade-off optimal solutions are important in a multi-objective optimization problem.

Although the fundamental difference between these two types of optimization lies in the cardinality of the optimal set, from a practical standpoint a user needs only one solution, no matter whether the associated optimization problem is single-objective or multi-objective.

A. Procedures for Multi-Objective Optimization

There are two types of procedures available for multi-objective optimization.


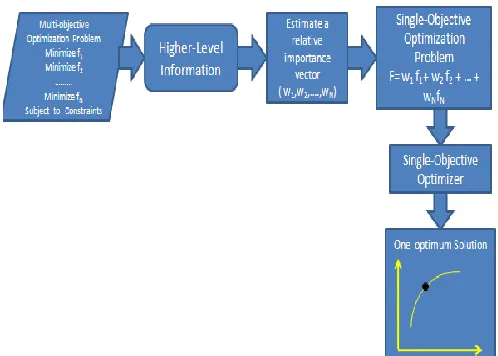

1) Preference-based Multi-Objective Optimization Procedure:

Step 1: Based on the higher-level information, choose a preference vector w.

Step 2: Use the preference vector to construct the composite objective function.

Step 3: Optimize this composite objective function with a single-objective optimization algorithm (i.e. a single-objective optimizer) to find a single trade-off optimal solution.

Figure 2 shows schematically the principles of an ideal multi-objective optimization procedure.

In step 1, multiple trade-off solutions are found. Thereafter, in step 2, higher-level information is used to choose one of the trade-off solutions. With this procedure in mind, it is easy to see that single-objective optimization is a degenerate case of multi-objective optimization. In single-objective optimization with only one global optimal solution, step 1 finds only one solution, so there is no need to proceed to step 2. In single-objective optimization with multiple global optima, both steps are necessary: first to find all or many of the global optima, and then to choose one of them using higher-level information about the problem. If thought of carefully, each trade-off solution corresponds to a specific order of importance of the objectives.

2) Ideal Multi-Objective Optimization Procedure:

Step-I: Find multiple trade-off optimal solutions with a wide range of values for objectives using a multi-objective optimization algorithm (i.e. multi-objective optimizer).

Step-II: Choose one of the obtained solutions using higher-level information.
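The two steps of the ideal procedure can be sketched on a toy version of the car-buying problem. Everything here is hypothetical: the four car models and their cost and comfort values are invented, Step I simply filters a finite candidate list (a real multi-objective optimizer would search for these solutions), and the higher-level information used in Step II is assumed to be a budget.

```python
from typing import List, Tuple

# Hypothetical car models: (name, cost, comfort). Cost is minimized, comfort is maximized.
Car = Tuple[str, float, float]


def dominates(a: Car, b: Car) -> bool:
    """True if car a is no worse than car b in both objectives and strictly better in at least one."""
    _, cost_a, comfort_a = a
    _, cost_b, comfort_b = b
    no_worse = cost_a <= cost_b and comfort_a >= comfort_b
    strictly_better = cost_a < cost_b or comfort_a > comfort_b
    return no_worse and strictly_better


def step_one(cars: List[Car]) -> List[Car]:
    """Step I: keep every trade-off (non-dominated) solution."""
    return [c for c in cars if not any(dominates(o, c) for o in cars)]


def step_two(tradeoffs: List[Car], budget: float) -> Car:
    """Step II: higher-level information (here, an assumed budget) selects one solution."""
    affordable = [c for c in tradeoffs if c[1] <= budget]
    if not affordable:
        raise ValueError("no trade-off solution fits the budget")
    return max(affordable, key=lambda c: c[2])  # most comfortable affordable car


if __name__ == "__main__":
    cars = [("A", 10.0, 3.0), ("B", 20.0, 6.0), ("C", 25.0, 5.0), ("D", 40.0, 9.0)]
    front = step_one(cars)
    print([c[0] for c in front])            # ['A', 'B', 'D']  (C is dominated by B)
    print(step_two(front, budget=30.0)[0])  # B
```

Note that the budget is only consulted after the trade-off set is known, which is what distinguishes this procedure from the preference-based one.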

B. Objectives in Multi-Objective Optimization

It is clear from the above discussion that, in principle, the search space in the context of multiple objectives can be divided into two non-overlapping regions: one that is optimal and one that is non-optimal. Although a two-objective problem is illustrated above, this is also true for problems with more than two objectives. In the case of conflicting objectives, the set of optimal solutions usually contains more than one solution. Figure 4 shows a number of such Pareto-optimal solutions, denoted by circles. In the presence of multiple Pareto-optimal solutions, it is difficult to prefer one solution over another without further information about the problem. If higher-level information is satisfactorily available, one solution can be chosen; in the absence of such information, all Pareto-optimal solutions are equally important. Thus, it can be conjectured that there are two goals in multi-objective optimization:

1. To find a set of solutions as close as possible to the Pareto-optimal front.

2. To find a set of solutions as diverse as possible.


C. Different Approaches to Obtaining Trade-off Solutions in MOPs

A multi-objective problem can be approached in three different ways:

The Conventional Weighted-Formula Approach: Transforms the multi-objective optimization problem into a single-objective optimization problem by using a weighted formula (i.e. user-defined parameters or weights). It is a kind of preference-based multi-objective optimization procedure.

The Lexicographic Approach: Ranks the objectives in order of priority. It is again a kind of preference-based multi-objective optimization procedure.

The Pareto Approach: Finds as many non-dominated solutions as possible and returns a set of non-dominated solutions to the user. It is a kind of ideal multi-objective optimization procedure.

a. Conventional Weighted-Formula Approach

Once the objective functions have been defined, this method assigns a numerical weight to each objective function and then combines the weighted criteria into a single objective function, either by adding or by multiplying them, thus transforming an MOP into an SOP. Analytically, the objective (or fitness) function of a given candidate solution is given by one of the following two formulas:

$$f(x) = \sum_{i=1}^{n} w_i \, f_i(x)$$

or

$$f(x) = \prod_{i=1}^{n} f_i(x)^{w_i}$$

where $w_i$, $i = 1, \dots, n$, denotes the weight assigned to objective function $f_i(x)$ and $n$ is the number of objective functions.
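Both weighted formulas can be written as small helper functions. This is a minimal sketch assuming the objective values $f_i(x)$ have already been computed (and, for the product form, are positive); the function names and the example numbers are hypothetical.

```python
import math
from typing import Sequence


def weighted_sum(values: Sequence[float], weights: Sequence[float]) -> float:
    """f(x) = sum_i w_i * f_i(x), given the objective values f_i(x)."""
    if len(values) != len(weights):
        raise ValueError("one weight per objective is required")
    return sum(w * f for w, f in zip(weights, values))


def weighted_product(values: Sequence[float], weights: Sequence[float]) -> float:
    """f(x) = prod_i f_i(x) ** w_i; assumes every f_i(x) is positive."""
    if len(values) != len(weights):
        raise ValueError("one weight per objective is required")
    return math.prod(f ** w for w, f in zip(weights, values))


if __name__ == "__main__":
    f_values = [4.0, 9.0]  # hypothetical (already normalized) objective values
    w = [0.5, 0.5]         # user-chosen weights
    print(weighted_sum(f_values, w))      # 6.5
    print(weighted_product(f_values, w))  # 6.0
```

The single number returned can then be handed to any single-objective optimizer, which is exactly how this approach turns an MOP into an SOP.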

Advantages

Has conceptual simplicity and ease of use.

Drawbacks

1. Weight selection is subjective & ad-hoc.

2. Misses the opportunity to find other useful solutions having better trade-off of objectives.

3. Cannot find solutions in a non-convex region of the Pareto front.

4. Objectives with different units of measurement need a suitable normalization process, which is often subjective and introduces inductive bias.

5. Has the problem of mixing non-commensurable objectives.

6. Multiple runs of an algorithm based on this approach, needed to obtain multiple trade-off solutions for an MOP, are inefficient as well as ineffective.
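Drawbacks 3 and 6 can be seen together in a small experiment. The sketch below assumes a hypothetical set of three non-dominated objective vectors (both objectives minimized) in which (0.6, 0.6) lies in a non-convex part of the front, and repeats one weighted-sum "run" for many weights. For any weight w, one of the extreme points scores min(w, 1 − w) ≤ 0.5, while (0.6, 0.6) always scores 0.6, so it is never returned even though no other point dominates it.

```python
from typing import List, Set, Tuple

Point = Tuple[float, float]  # (f1, f2), both minimized


def best_by_weighted_sum(points: List[Point], w: float) -> Point:
    """One 'run': minimize w*f1 + (1 - w)*f2 over a finite candidate set."""
    return min(points, key=lambda p: w * p[0] + (1.0 - w) * p[1])


def weight_sweep(points: List[Point], steps: int = 100) -> Set[Point]:
    """Repeat the run for evenly spaced weights w = 0, 1/steps, ..., 1."""
    return {best_by_weighted_sum(points, k / steps) for k in range(steps + 1)}


if __name__ == "__main__":
    # Hypothetical non-dominated set; (0.6, 0.6) lies in a non-convex part of the front.
    front = [(0.0, 1.0), (0.6, 0.6), (1.0, 0.0)]
    print(sorted(weight_sweep(front)))  # [(0.0, 1.0), (1.0, 0.0)]
```

Here 101 runs recover only two solutions, which illustrates why repeating a weighted-formula optimizer is both inefficient and ineffective on non-convex fronts.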

b. Lexicographic Approach

This approach proceeds as follows. Different priorities are assigned to different objectives, and the objectives are then optimized in order of priority. When two or more candidate solutions are compared to choose the best one, their performance measures are first compared for the highest-priority objective. If one candidate solution is significantly better than the other with respect to that objective, it is chosen. Otherwise, the performance measures of the two candidate solutions are compared with respect to the second objective. Again, if one candidate solution is significantly better than the other with respect to that objective, it is chosen; otherwise, the two candidates are compared with respect to the third objective. This process is repeated until a clear winner is found or all the objectives have been used. In the latter case, if there is no clear winner, one can simply select the solution that is best on the highest-priority objective.
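The comparison rule above can be sketched as follows. The sketch assumes all objectives are minimized and listed from highest to lowest priority, and it models "significantly better" with a hypothetical per-objective tolerance threshold (whose choice the drawbacks below describe as subjective); the function name is our own.

```python
from typing import Sequence


def lexicographic_winner(a: Sequence[float], b: Sequence[float], tolerances: Sequence[float]) -> int:
    """Return 0 if candidate a wins and 1 if candidate b wins.

    Objectives are listed from highest to lowest priority and are all minimized.
    A difference is treated as significant only if it exceeds that objective's tolerance.
    """
    for fa, fb, tol in zip(a, b, tolerances):
        if fb - fa > tol:  # a is significantly better on this objective
            return 0
        if fa - fb > tol:  # b is significantly better on this objective
            return 1
    # No clear winner after all objectives: prefer the better highest-priority value.
    return 0 if a[0] <= b[0] else 1


if __name__ == "__main__":
    tol = [1.0, 1.0]
    print(lexicographic_winner([10.0, 5.0], [10.5, 3.0], tol))  # 1: tie on objective 1, b wins on objective 2
    print(lexicographic_winner([10.0, 5.0], [10.5, 5.5], tol))  # 0: no clear winner, a is better on objective 1
```

Because the loop stops at the first significant difference, the objectives are examined strictly one after another, which is the sequential nature listed among the drawbacks.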

Advantages & Drawbacks of Lexicographic Approach

Advantages

1. Avoids the problem of mixing non-commensurable objectives in the same formula. It treats each of the objectives separately.

2. Has conceptual simplicity & ease of use.

Drawbacks

1. Specification of the tolerance threshold & corresponding degree of confidence for each objective is subjective & arbitrary.

2. It is sequential by nature.

c. Pareto Approach


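The set of these Pareto-optimal solutions forms the “Pareto front”. Pareto optimality is linked to the notion of dominance. Assuming all $D$ objectives are to be minimized, a decision vector $\mathbf{u}$ is said to strictly dominate another decision vector $\mathbf{v}$ (denoted by $\mathbf{u} \prec \mathbf{v}$) iff

$$f_i(\mathbf{u}) \le f_i(\mathbf{v}) \quad \forall\, i = 1, \dots, D \qquad \text{and} \qquad f_i(\mathbf{u}) < f_i(\mathbf{v}) \ \text{for at least one } i.$$

Less stringently, $\mathbf{u}$ weakly dominates $\mathbf{v}$ (denoted by $\mathbf{u} \preceq \mathbf{v}$) iff

$$f_i(\mathbf{u}) \le f_i(\mathbf{v}) \quad \forall\, i = 1, \dots, D.$$

A set of $M$ decision vectors $\{\mathbf{w}_i\}$ is said to be a non-dominated set (an estimated Pareto-optimal front) if no member of the set is dominated by any other member:

$$\nexists\, i, j \in \{1, \dots, M\} : \mathbf{w}_i \prec \mathbf{w}_j.$$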
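The three definitions above translate directly into code. In this minimal sketch each solution is represented only by its objective vector $f(\mathbf{u}) = (f_1(\mathbf{u}), \dots, f_D(\mathbf{u}))$, all objectives are assumed minimized, and the function names are hypothetical.

```python
from typing import List, Sequence

Vec = Sequence[float]  # objective vector f(u) = (f_1(u), ..., f_D(u)), all minimized


def dominates(fu: Vec, fv: Vec) -> bool:
    """u strictly dominates v: no worse in every objective and strictly better in at least one."""
    return all(a <= b for a, b in zip(fu, fv)) and any(a < b for a, b in zip(fu, fv))


def weakly_dominates(fu: Vec, fv: Vec) -> bool:
    """u weakly dominates v: no worse in every objective."""
    return all(a <= b for a, b in zip(fu, fv))


def is_non_dominated_set(vectors: List[Vec]) -> bool:
    """True if no member of the set dominates any other member."""
    return not any(dominates(vectors[i], vectors[j])
                   for i in range(len(vectors))
                   for j in range(len(vectors)) if i != j)


if __name__ == "__main__":
    print(dominates((1, 2), (2, 2)))                                    # True
    print(dominates((1, 2), (1, 2)), weakly_dominates((1, 2), (1, 2)))  # False True
    print(is_non_dominated_set([(1, 3), (2, 2), (3, 1)]))               # True
    print(is_non_dominated_set([(1, 3), (2, 2), (3, 3)]))               # False
```

The second line of output shows the difference between the two relations: a vector weakly dominates itself but never strictly dominates itself.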

The above discussion can be illustrated with the Schaffer function, a three-objective MOP in two decision variables. Its objectives, all to be minimized, are:

$$y_1 = x_1^2 + x_2^2, \qquad y_2 = (x_1 - 1)^2 + (x_2 - 1)^2, \qquad y_3 = x_1^2 + (x_2 - 1)^2$$

At point A, the objective function values can be improved simultaneously by moving into the region enclosed by the (negative) gradient vectors, whereas at point B the objectives are in conflict: an improvement in one objective causes a deterioration in another, which corresponds to the definition of a Pareto-optimal point.

[Figure: three conflicting objectives]
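A rough way to see the Pareto-optimal points of this function is to sample the decision space and keep the non-dominated samples. The sketch below assumes a coarse grid on $[0, 1]^2$ (a real MOEA would search rather than enumerate) and uses hypothetical function names. Because each objective is a squared distance to one of the points (0, 0), (1, 1) and (0, 1), the Pareto-optimal set is the triangle spanned by those points; on a coarse grid a few samples just outside it may also survive, simply because the grid contains no point that dominates them. A point such as (1, 0) is clearly dominated, for example by (0.5, 0.5).

```python
from itertools import product
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Vec = Tuple[float, float, float]


def objectives(x: Point) -> Vec:
    """y1, y2, y3 of the three-objective function above (all minimized)."""
    x1, x2 = x
    return (x1 ** 2 + x2 ** 2, (x1 - 1) ** 2 + (x2 - 1) ** 2, x1 ** 2 + (x2 - 1) ** 2)


def dominates(fu: Vec, fv: Vec) -> bool:
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(fu, fv)) and any(a < b for a, b in zip(fu, fv))


def non_dominated_on_grid(steps: int = 4) -> List[Point]:
    """Sample [0, 1]^2 on a (steps+1) x (steps+1) grid and keep the non-dominated samples."""
    grid = [(i / steps, j / steps) for i, j in product(range(steps + 1), repeat=2)]
    values: Dict[Point, Vec] = {x: objectives(x) for x in grid}
    return [x for x in grid if not any(dominates(values[o], values[x]) for o in grid)]


if __name__ == "__main__":
    front = non_dominated_on_grid()
    print(front)
    print((1.0, 0.0) in front)  # False: dominated by (0.5, 0.5)
```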


When several objectives are optimized at the same time, the search space becomes partially ordered. Instead of a single optimal solution, there is a set of optimal trade-offs between the conflicting objectives. A multi-objective optimization problem is defined by a function f that maps a set of decision variables to a set of objective values. As shown in the accompanying figure, a solution can be better than, worse than, or indifferent to other solutions (neither dominating nor dominated) with respect to the objective values. A better (dominating) solution is one that is no worse in any of the objectives and strictly better than the other in at least one objective. An optimal solution is a solution that is not dominated by any other solution in the search space. Such a solution is called Pareto optimal, and the entire set of such optimal trade-off solutions is called the Pareto-optimal set. In a real-world situation, a decision-making (trade-off) process is therefore required to obtain the final solution. Even though there are several ways to approach a multi-objective optimization problem, most work concentrates on approximating the Pareto set.

d. Advantages & Drawbacks of Pareto Approach

Advantages

1. Never mixes different objectives into a single formula. All objectives are treated separately.

2. More principled than the other two approaches.

3. Can cope with non-commensurable objectives.

4. Results in a set of non-dominated solutions in the form of a Pareto front and leaves the choice to the user a posteriori.

5. Avoids multiple runs of the algorithm.

Drawbacks

More complex than the other two approaches.

V. CLASSICAL OPTIMIZATION METHODS TO SOLVE MOPS

There are three classical optimization methods for solving MOPs:

• Linear Programming (LP)
• Dynamic Programming (DP)
• Non-Linear Programming (NLP)

These methods are combined with the weighted-formula approach to solve MOPs for non-dominated solutions with respect to multiple objectives. They can find at most one solution in one simulation run, so a large number of runs is required to arrive at a set of non-dominated solutions. These methods often fail to attain a good Pareto front because of the inherent inefficiency and ineffectiveness of multiple simulation runs.

There are also several evolutionary optimization methods for solving MOPs:

• Vector-Evaluated Genetic Algorithm (VEGA)
• Non-dominated Sorting GA (NSGA)
• Niched Pareto GA (NPGA)
• Pareto Archived Evolution Strategy (PAES)

These are non-classical, unorthodox and stochastic evolutionary algorithms that mimic nature's evolutionary principles to drive their search towards optimal solutions. They use a population of solutions in each iteration (i.e. simulation or run).

A. Vector-Evaluated Genetic Algorithm (VEGA)

VEGA, implemented by Schaffer in 1984, was the first MOGA designed to find a set of non-dominated solutions. It is called vector evaluated because it evaluates an objective vector, with each element of the vector representing one objective function.

[Figure: solutions around a solution x, classified as dominating x, dominated by x, or neither dominating nor dominated, together with the Pareto-optimal solutions]


VEGA is a straightforward extension of the general GA to multi-objective optimization. Because several objectives have to be handled, Schaffer divided the GA population at every generation into M equal subpopulations at random. Each subpopulation is assigned a fitness based on a different objective function. In this manner, each of the M objective functions is used to evaluate some members of the population.

To find intermediate trade-off solutions, Schaffer allowed crossover between any two solutions in the entire population. He reasoned that crossover between two good solutions, each corresponding to a different objective, may produce offspring that are good compromise solutions between the two objectives. The mutation operator is applied to each individual as usual.
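The VEGA selection step can be sketched as below. This is an illustration under stated assumptions, not Schaffer's original code: individuals are real-valued vectors, every objective is minimized, the population size is assumed divisible by M, and binary tournament selection within each subpopulation is used as a stand-in for the proportionate selection of the original VEGA. The function name `vega_mating_pool` is hypothetical.

```python
import random
from typing import Callable, List, Sequence

Individual = Sequence[float]               # assumed real-valued decision vector
Objective = Callable[[Individual], float]  # each objective is minimized


def vega_mating_pool(population: List[Individual], objectives: List[Objective],
                     rng: random.Random) -> List[Individual]:
    """Build a VEGA-style mating pool: one random subpopulation per objective.

    Assumes len(population) is divisible by M = len(objectives) and that each
    subpopulation has at least two members.
    """
    m = len(objectives)
    shuffled = list(population)
    rng.shuffle(shuffled)  # random split into M equal subpopulations
    size = len(shuffled) // m
    pool: List[Individual] = []
    for k, f in enumerate(objectives):
        sub = shuffled[k * size:(k + 1) * size]
        for _ in range(size):
            # Binary tournament judged on objective k only.
            a, b = rng.sample(sub, 2)
            pool.append(a if f(a) <= f(b) else b)
    rng.shuffle(pool)  # mix sub-pools so crossover can pair specialists of different objectives
    return pool


if __name__ == "__main__":
    rng = random.Random(0)
    pop = [(float(i), float(10 - i)) for i in range(10)]
    objs = [lambda x: x[0], lambda x: x[1]]
    print(vega_mating_pool(pop, objs, rng))
```

Crossover and mutation would then be applied to the shuffled pool as in an ordinary GA, which is where the compromise offspring described above can arise.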

B. Non-dominated Sorting GA (NSGA)

This algorithm was developed by Srinivas and Deb in 1994. The two goals of multi-objective optimization are addressed by a fitness assignment scheme that prefers non-dominated solutions and by a sharing strategy that preserves diversity among the solutions of each non-dominated front.

An advantage of NSGA is that fitness is assigned according to non-dominated sets, so NSGA progresses towards the Pareto-optimal region front by front.
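The front-wise ranking that NSGA relies on can be sketched with a simple (not the fastest) non-dominated sorting routine. The sketch assumes minimization and works on objective vectors only; NSGA's dummy-fitness assignment and fitness sharing within each front are omitted, and the function names are hypothetical.

```python
from typing import List, Sequence


def dominates(fu: Sequence[float], fv: Sequence[float]) -> bool:
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(fu, fv)) and any(a < b for a, b in zip(fu, fv))


def non_dominated_fronts(objs: List[Sequence[float]]) -> List[List[int]]:
    """Return lists of solution indices, best front first."""
    remaining = list(range(len(objs)))
    fronts: List[List[int]] = []
    while remaining:
        # The current front holds every remaining solution not dominated by another remaining one.
        front = [i for i in remaining
                 if not any(dominates(objs[j], objs[i]) for j in remaining)]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


if __name__ == "__main__":
    print(non_dominated_fronts([(1, 4), (2, 2), (4, 1), (3, 3), (4, 4)]))  # [[0, 1, 2], [3], [4]]
```

The loop always terminates because every non-empty set of objective vectors contains at least one non-dominated member.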

C. Niched Pareto GA (NPGA)

Horn et al. (1994) proposed a multi-objective GA based on the non-domination concept. This method differs from the previous methods in its selection operator: the niched Pareto genetic algorithm (NPGA) uses binary tournament selection, unlike the proportionate selection used in VEGAs, NSGAs and MOGAs. The choice of tournament selection over proportionate selection was motivated by theoretical studies (Goldberg and Deb, 1991) of the selection operators used in single-objective GAs, which showed that tournament selection has better growth and convergence properties than proportionate selection. However, applying tournament selection (or any other non-proportionate selection) in multi-objective GAs that use the sharing function approach raises a difficulty. The sharing function approach follows a modified two-armed bandit game-playing strategy (Goldberg and Richardson, 1987), which allocates solutions to a niche in proportion to the niche's average fitness. Thus, the sharing function approach requires the proportionate selection operator.

In the NPGA, a dynamically updated niching strategy, originally suggested by Oei et al. (1991), is used. During tournament selection, two solutions i and j are picked at random from the parent population P. They are compared by first picking a subpopulation T of t_dom (≪ N) solutions from the population. Thereafter, each of the solutions i and j is compared with every solution of the subpopulation for domination. If one of the two solutions dominates all solutions of the subpopulation but the other is dominated by at least one solution from the subpopulation, the former is chosen. We call this scenario 1. However, if both solutions i and j are either dominated by at least one solution in the subpopulation or not dominated by any solution in the subpopulation, both are checked against the current (partially filled) offspring population Q. We call this scenario 2. Each solution is placed in the offspring population and its niche count is calculated. The solution with the smaller niche count wins the tournament.
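One NPGA tournament can be sketched as follows, under several assumptions of our own: solutions are represented only by their objective vectors (minimized); niche counts use the common triangular sharing function sh(d) = 1 − d/σ_share with Euclidean distance in objective space, since the text does not fix these details; and scenario 1 is read as "not dominated by any member of T", matching the phrasing of scenario 2. The names `npga_tournament`, `niche_count` and `sigma_share` are hypothetical.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]  # objective vector, all objectives minimized


def dominates(fu: Vec, fv: Vec) -> bool:
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(fu, fv)) and any(a < b for a, b in zip(fu, fv))


def niche_count(f: Vec, others: List[Vec], sigma_share: float) -> float:
    """Sum of sh(d) = 1 - d / sigma_share over members closer than sigma_share."""
    total = 0.0
    for g in others:
        d = math.dist(f, g)
        if d < sigma_share:
            total += 1.0 - d / sigma_share
    return total


def npga_tournament(fi: Vec, fj: Vec, comparison_set: List[Vec],
                    offspring: List[Vec], sigma_share: float) -> int:
    """Return 0 if candidate i wins the tournament, 1 if candidate j wins."""
    i_dominated = any(dominates(t, fi) for t in comparison_set)
    j_dominated = any(dominates(t, fj) for t in comparison_set)
    # Scenario 1: exactly one candidate is non-dominated with respect to T.
    if j_dominated and not i_dominated:
        return 0
    if i_dominated and not j_dominated:
        return 1
    # Scenario 2: place each candidate in the partially filled offspring population Q
    # and prefer the one with the smaller niche count (ties go to i).
    ni = niche_count(fi, list(offspring) + [fi], sigma_share)
    nj = niche_count(fj, list(offspring) + [fj], sigma_share)
    return 0 if ni <= nj else 1


if __name__ == "__main__":
    T = [(2.0, 2.0)]
    print(npga_tournament((1.0, 1.0), (3.0, 3.0), T, [], 0.5))  # 0 (scenario 1)
    Q = [(1.1, 3.0), (1.0, 2.9)]  # offspring crowded near candidate i
    print(npga_tournament((1.0, 3.0), (3.0, 1.0), T, Q, 0.5))   # 1 (scenario 2: j is less crowded)
```

The cost of scenario 1 grows with the size of T rather than with N, which is why keeping t_dom small keeps each tournament cheap.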

An advantage of the NPGA is that no explicit fitness assignment is needed. While VEGAs, NSGAs and MOGAs are not free from subjectivity in the fitness assignment procedure, the NPGA does not have this problem. Another advantage of the NPGA is that it is the first proposed multi-objective evolutionary algorithm that uses the tournament selection operator. However, the dynamically updated tournament selection somewhat reduces the elegance with which tournament selection is applied in single-objective optimization problems. If the subpopulation size t_dom is kept much smaller than N, the complexity of the NPGA does not depend much on the number of objectives (M), so the NPGA may be computationally efficient in solving problems with many objectives.

D. Pareto Archived Evolution Strategy (PAES)


Since a (1+1)-ES uses only mutation on a single parent to create a single offspring, it is a local search strategy, and the investigators developed their first multi-objective evolutionary algorithm using the (1+1)-ES. If an optimization problem has multiple optimal solutions, a multi-objective evolutionary algorithm (MOEA) can be used to capture multiple optimal solutions in its final population. This ability of an EA to find multiple optimal solutions in one single run makes EAs uniquely suited to solving MOPs effectively. EAs are a tool of choice because MOPs are typically complex, with a large number of parameters to be adjusted and several objectives to be optimized. MOEAs, which maintain a population of solutions, are also able to explore several parts of the Pareto front simultaneously. However, the major drawback of MOEAs is their high computational cost.
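The (1+1) idea behind PAES can be sketched as below. This is a deliberately simplified illustration, not the published algorithm: the adaptive-grid crowding test and the archive size limit of PAES are omitted, the decision variable is a single float mutated with Gaussian noise, the offspring replaces the parent only when it enters the archive, and the toy two-objective problem f(x) = (x², (x − 2)²) and all function names are our own choices.

```python
import random
from typing import Callable, List, Tuple

Vec = Tuple[float, ...]
Entry = Tuple[float, Vec]  # (decision variable x, objective vector f(x))


def dominates(fu: Vec, fv: Vec) -> bool:
    """Pareto dominance for minimization."""
    return all(a <= b for a, b in zip(fu, fv)) and any(a < b for a, b in zip(fu, fv))


def update_archive(archive: List[Entry], x: float, fx: Vec) -> bool:
    """Archive (x, f(x)) unless an archived member dominates or duplicates it;
    remove members the newcomer dominates. Returns True if it was archived."""
    if any(dominates(fa, fx) or fa == fx for _, fa in archive):
        return False
    archive[:] = [(a, fa) for a, fa in archive if not dominates(fx, fa)]
    archive.append((x, fx))
    return True


def simple_paes(f: Callable[[float], Vec], x0: float, sigma: float,
                iters: int, seed: int = 0) -> List[Entry]:
    """Simplified (1+1) archive-based search: one parent, one mutated offspring per step."""
    rng = random.Random(seed)
    current, f_current = x0, f(x0)
    archive: List[Entry] = [(current, f_current)]
    for _ in range(iters):
        child = current + rng.gauss(0.0, sigma)  # mutation only
        f_child = f(child)
        if dominates(f_current, f_child):
            continue  # parent dominates offspring: discard it
        if update_archive(archive, child, f_child):
            current, f_current = child, f_child  # accepted offspring becomes the new parent
    return archive


if __name__ == "__main__":
    front = simple_paes(lambda x: (x * x, (x - 2.0) ** 2), x0=5.0, sigma=0.5, iters=500)
    print(sorted(round(x, 3) for x, _ in front))
```

The archive, rather than a population, is what lets this single-parent search return a set of trade-off solutions at the end of one run.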

VI. APPLICATIONS OF MOEAS

In this section we list some real-world application case studies of MOEAs. Interested readers may refer to these studies.

Researchers Application area

C.Poloni et al. (2000) Aerodynamic shape design

P.DiBarba et al. (2000) Electrostatic micro motor design

A.J.Blumel et al. (2000) Auto pilot controller design

F.B.Zhou et al. (2000) Continuous casting process

M.Lahanas et al. (2001) Dose optimization in brachytherapy

W. El Moudani et al. (2001) Airlines crew rostering

M.Thompson et al. (2001) Analog filter tuning

N. Laumanns et al. (2001) Road train design

D.Sasaki et al. (2001) Supersonic wing design

I.F.Sbalzarini et al. (2001) Micro channel flow optimization.

VII. CONCLUSION

We have dealt with a number of salient issues regarding multi-objective evolutionary algorithms. Although many other issues exist, the topics discussed here are of immense importance. Most multi-objective studies performed so far involve only two objectives. When dealing with more than two objectives, it becomes essential to illustrate the trade-off solutions in a meaningful manner. We have reviewed a number of different ways in which the non-dominated solutions can be represented.

REFERENCES

[1] Kalyanmoy Deb, Multi-Objective Optimization Using Evolutionary Algorithms, Indian Institute of Technology Kanpur, Feb. 2002.

[2] D. E. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning. Reading, MA: Addison-Wesley, 1989.

[3] Ying Gao, Lei Shi, Pingjing Yao, "Study on Multi-Objective Genetic Algorithm," IEEE, June 28-July 2, 2000.

[4] Hisao Ishibuchi, Yusuke Nojima, Kaname Narukawa and Tsutomu Doi, "Incorporation of Decision Maker's Preference into Evolutionary Multiobjective Optimization Algorithms," ACM 1-59593-186-4/06/0007, 2006.

[5] Omar Al Jaddan, Lakishmi Rajamani, C. R. Rao, "Non-Dominated Ranked Genetic Algorithm for Solving Multi-Objective Optimization Problems: NRGA," Journal of Theoretical and Applied Information Technology, 2008.

[6] Kalyanmoy Deb, Amrit Pratap, Sameer Agarwal, and T. Meyyarivan, "A Fast and Elitist Multiobjective Genetic Algorithm: NSGA-II," IEEE Transactions on Evolutionary Computation, vol. 6, no. 2, 2002, pp. 182-197.

[7] Kalyanmoy Deb, "Multiobjective Genetic Algorithms: Problem Difficulties and Construction of Test Functions," IEEE Evol. Comput., vol. 7, 1999, pp. 205-230.

[8] D. W. Corne, N. R. Jerram, J. D. Knowles, and M. J. Oates, "PESA-II: Region-based Selection in Evolutionary Multiobjective Optimization," Genetic and Evolutionary Computation Conference (GECCO 2001), California, 2001, pp. 238-290.

[9] Jinhua Zheng, Charles X. Ling, Zhongzhi Shi, Yong Xie, "Some Discussions about MOGAs: Individual Relations, Non-dominated Set, and Application on Automatic Negotiation," IEEE, 2004.