Generalizing Dimensionality in Combinatory Categorial Grammar

Geert-Jan M. Kruijff
Computational Linguistics, Saarland University
Saarbrücken, Germany
gj@coli.uni-sb.de

Jason Baldridge
ICCS, Division of Informatics, University of Edinburgh
Edinburgh, Scotland
jbaldrid@inf.ed.ac.uk

Abstract

We extend Combinatory Categorial Grammar (CCG) with a generalized notion of multi-dimensional sign, inspired by the types of representations found in constraint-based frameworks like HPSG or LFG. The generalized sign allows multiple levels to share information, but only in a resource-bounded way through a very restricted indexation mechanism. This improves representational perspicuity without increasing parsing complexity, in contrast to the full-blown unification used in HPSG and LFG. Well-formedness of a linguistic expression remains entirely determined by the CCG derivation. We show how the multidimensionality and perspicuity of the generalized signs lead to a simplification of previous CCG accounts of how word order and prosody can realize information structure.

1 Introduction

The information conveyed by linguistic utterances is diverse, detailed, and complex. To properly analyze what is communicated by an utterance, this information must be encoded and interpreted at many levels. The literature contains various proposals for dealing with many of these levels in the description of natural language grammar.

Since information flows between different levels of analysis, it is common for linguistic formalisms to bundle them together and provide some means for communication between them. Categorial grammars, for example, normally employ a Saussurian sign that relates a surface string with its syntactic category and the meaning it expresses. Syntactic analysis is entirely driven by the categories, and when information from other levels is used to affect the derivational possibilities, it is typically loaded as extra information on the categories.

Head-driven Phrase Structure Grammar (HPSG) (Pollard and Sag, 1993) and Lexical Functional Grammar (LFG) (Kaplan and Bresnan, 1982) also use complex signs. However, these signs are monolithic structures which permit information to be freely shared across all dimensions: any given dimension can place restrictions on another. For example, variables resolved during the construction of the logical form can block a syntactic analysis. This provides a clean, unified formal system for dealing with the different levels, but it can also adversely affect the complexity of parsing grammars written in these frameworks (Maxwell and Kaplan, 1993).

We thus find two competing perspectives on communication between levels in a sign. In this paper, we propose a generalization of linguistic signs for Combinatory Categorial Grammar (CCG) (Steedman, 2000b). This generalization enables different levels of linguistic information to be represented but limits their interaction in a resource-bounded manner, following White (2004). This provides a clean separation of the levels and allows them to be designed and utilized in a more modular fashion. Most importantly, it allows us to retain the parsing complexity of CCG while gaining the representational advantages of the HPSG and LFG paradigms.

To illustrate the approach, we use it to model various aspects of the realization of information structure, an inherent aspect of the (linguistic) meaning of an utterance. Speakers use information structure to present some parts of that meaning as depending on the preceding discourse context and others as affecting the context by adding new content. Languages may realize information structure using different, often interacting means, such as word order, prosody, (marked) syntactic constructions, or morphological marking (Vallduví and Engdahl, 1996; Kruijff, 2002). The literature presents various proposals for how information structure can be captured in categorial grammar (Steedman, 2000a; Hoffman, 1995; Kruijff, 2001). Here, we model the essential aspects of these accounts in a more perspicuous manner by using our generalized signs.

complexity; and (3) we use these signs to simplify previous CCG accounts of the effects of word order and prosody on information structure.

2 Combinatory Categorial Grammar

In this section, we give an overview of syntactic combination and semantic construction in CCG. We use CCG’s multi-modal extension (Baldridge and Kruijff, 2003), which enriches the inventory of slash types. This formalization renders constraints on rules unnecessary and supports a universal set of rules for all grammars.

2.1 Categories and combination

Nearly all syntactic behavior in CCG is encoded in categories. They may be atoms, like np, or functions which specify the direction in which they seek their arguments, like (s\np)/np. The latter is the category for English transitive verbs; it first seeks its object to its right and then its subject to its left.

Categories combine through a small set of universal combinatory rules. The simplest are application rules which allow a function category to consume its argument either on its right (>) or on its left (<):

(>) X/⋆Y Y ⇒ X
(<) Y X\⋆Y ⇒ X

Four further rules allow functions to compose with other functions:

(>B) X/⋄Y Y/⋄Z ⇒ X/⋄Z
(<B) Y\⋄Z X\⋄Y ⇒ X\⋄Z
(>B×) X/×Y Y\×Z ⇒ X\×Z
(<B×) Y/×Z X\×Y ⇒ X/×Z

The modalities ⋆, ⋄ and × on the slashes enforce different kinds of combinatorial potential on categories. For a category to serve as input to a rule, it must contain a slash which is compatible with that specified by the rule. The modalities work as follows. ⋆ is the most restricted modality, allowing combination only by the application rules (> and <). ⋄ allows combination with the application rules and the order-preserving composition rules (>B and <B). × allows limited permutation via the crossed composition rules (>B× and <B×) as well as the application rules. Additionally, a permissive modality · allows combination by all rules in the system. However, we suppress the · modality on slashes to avoid clutter. An undecorated slash may thus combine by all rules.
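The licensing behavior just described can be summarized as a lookup table. The following is a minimal sketch, not part of the formalism itself: the modality names (`star`, `diamond`, `cross`, `dot`) and the rule-family labels are hypothetical identifiers chosen for illustration.

```python
# Rule families named in the text; the string labels are hypothetical.
APPLICATION = "application"          # (>) and (<)
HARMONIC = "harmonic composition"    # (>B) and (<B), order-preserving
CROSSED = "crossed composition"      # (>Bx) and (<Bx), limited permutation

# Each slash modality licenses a set of rule families.
LICENSED = {
    "star": {APPLICATION},                      # most restricted
    "diamond": {APPLICATION, HARMONIC},
    "cross": {APPLICATION, CROSSED},
    "dot": {APPLICATION, HARMONIC, CROSSED},    # permissive, usually left implicit
}


def licenses(modality: str, rule: str) -> bool:
    """True if a slash carrying this modality may serve as input to the rule."""
    return rule in LICENSED[modality]


def usable_rules(modality: str) -> list:
    """All rule families available to a slash with this modality, sorted by name."""
    return sorted(LICENSED[modality])


print(usable_rules("cross"))
```

Under these assumptions, an undecorated slash corresponds to `dot` and passes every check, while a `star` slash only ever feeds the application rules.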

There are two further rules of type-raising that turn an argument category into a function over functions that seek that argument:

(>T) X ⇒ Y/_i(Y\_iX)
(<T) X ⇒ Y\_i(Y/_iX)

The variable modality i on the output categories constrains both slashes to have the same modality.

These rules support the following incremental derivation for Marcel proved completeness:

(1)  Marcel      proved       completeness
     np          (s\np)/np    np
     -------->T
     s/(s\np)
     ---------------------->B
     s/np
     ------------------------------------->
     s

This derivation does not display the effect of using modalities in CCG; see Baldridge (2002) and Baldridge and Kruijff (2003) for detailed linguistic justification for this modalized formulation of CCG.
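The incremental derivation in (1) can be replayed mechanically. The sketch below is an illustration under simplifying assumptions: modalities are ignored, categories are compared by plain equality, and the names `Fn`, `show`, `forward_raise`, `forward_compose` and `forward_apply` are hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Fn:
    """A function category: result, slash direction, argument."""
    res: "Cat"
    slash: str   # "/" seeks the argument to the right, "\\" to the left
    arg: "Cat"


Cat = Union[str, Fn]   # atomic categories are plain strings


def show(c):
    """Render a category in the usual CCG notation."""
    if isinstance(c, str):
        return c
    wrap = lambda x: show(x) if isinstance(x, str) else "(" + show(x) + ")"
    return wrap(c.res) + c.slash + wrap(c.arg)


def forward_apply(f, a):        # (>)  X/Y  Y    =>  X
    if isinstance(f, Fn) and f.slash == "/" and f.arg == a:
        return f.res
    return None


def forward_compose(f, g):      # (>B) X/Y  Y/Z  =>  X/Z
    if (isinstance(f, Fn) and isinstance(g, Fn)
            and f.slash == "/" and g.slash == "/" and f.arg == g.res):
        return Fn(f.res, "/", g.arg)
    return None


def forward_raise(x, y):        # (>T) X  =>  Y/(Y\X)
    return Fn(y, "/", Fn(y, "\\", x))


marcel, completeness = "np", "np"
proved = Fn(Fn("s", "\\", "np"), "/", "np")          # (s\np)/np

raised = forward_raise(marcel, "s")                  # s/(s\np)
marcel_proved = forward_compose(raised, proved)      # s/np
sentence = forward_apply(marcel_proved, completeness)

print(show(raised), show(marcel_proved), show(sentence))
```

Because the subject is type-raised and composed with the verb before the object arrives, the string Marcel proved already has a category (s/np) of its own, which is what makes the derivation incremental.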

2.2 Hybrid Logic Dependency Semantics

Many different kinds of semantic representations and ways of building them with CCG exist. We use Hybrid Logic Dependency Semantics (HLDS) (Kruijff, 2001), a framework that utilizes hybrid logic (Blackburn, 2000) to realize a dependency-based perspective on meaning.

Hybrid logic provides a language for representing relational structures that overcomes standard modal logic’s inability to directly reference states in a model. This is achieved via nominals, a kind of basic formula which explicitly names states. Like propositions, nominals are first-class citizens of the object language, so formulas can be formed using propositions, nominals, standard boolean operators, and the satisfaction operator “@”. A formula @_i(p ∧ ⟨F⟩(j ∧ q)) indicates that the formulas p and ⟨F⟩(j ∧ q) hold at the state named by i and that the state j is reachable via the modal relation F.
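To make the reading of @_i(p ∧ ⟨F⟩(j ∧ q)) concrete, here is a small sketch of a satisfaction check over a finite model. The tuple encoding of formulas, the dictionary layout of the model, and the function name `holds` are assumptions made for this illustration only.

```python
def holds(model, state, formula):
    """Evaluate a hybrid logic formula at a state of a finite model."""
    kind = formula[0]
    if kind == "prop":                       # proposition true at this state?
        return state in model["val"].get(formula[1], set())
    if kind == "nom":                        # nominal names exactly one state
        return model["nom"][formula[1]] == state
    if kind == "and":
        return holds(model, state, formula[1]) and holds(model, state, formula[2])
    if kind == "dia":                        # <R>f: some R-successor satisfies f
        return any(holds(model, t, formula[2])
                   for (s, t) in model["rel"].get(formula[1], set())
                   if s == state)
    if kind == "at":                         # @_i f: jump to the state named i
        return holds(model, model["nom"][formula[1]], formula[2])
    raise ValueError(f"unknown formula kind: {kind}")


# A two-state model: i names state 0, j names state 1, and F relates 0 to 1.
MODEL = {
    "nom": {"i": 0, "j": 1},
    "val": {"p": {0}, "q": {1}},
    "rel": {"F": {(0, 1)}},
}

# @_i(p AND <F>(j AND q))
FORMULA = ("at", "i",
           ("and", ("prop", "p"),
                   ("dia", "F", ("and", ("nom", "j"), ("prop", "q")))))

print(holds(MODEL, 0, FORMULA))
```

Since the satisfaction operator jumps to the named state, the result does not depend on the state at which evaluation starts; removing the F edge from the model makes the formula false.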

In HLDS, hybrid logic is used as a language for describing semantic interpretations as follows. Each semantic head is associated with a nominal that identifies its discourse referent and heads are connected to their dependents via dependency relations, which are modeled as modal relations. As an example, the sentence Marcel proved completeness receives the representation in (2).

(2) @_e(prove ∧ ⟨TENSE⟩past ∧ ⟨ACT⟩(m ∧ Marcel) ∧ ⟨PAT⟩(c ∧ comp.))

In this example, e is a nominal that labels the predications and relations for the head prove, and m and c label those for Marcel and completeness, respectively. The relations ACT and PAT represent the dependency roles Actor and Patient, respectively.

(3) @_e prove ∧ @_e⟨TENSE⟩past ∧ @_e⟨ACT⟩m ∧ @_e⟨PAT⟩c ∧ @_m Marcel ∧ @_c comp.
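The relationship between the nested term in (2) and the flat conjunction in (3) can be sketched as a simple tree traversal. The nested-dictionary encoding, the ASCII rendering of the modal brackets, and the function name `flatten` are assumptions for illustration; the text does not prescribe a data structure.

```python
def flatten(node):
    """Turn a nested HLDS term into a flat list of predications.

    Each node is a dict with a nominal ("nom"), a predicate ("pred"),
    optional features ("feats") and optional dependents ("deps").
    """
    n = node["nom"]
    eps = [f"@{n} {node['pred']}"]
    # Features such as TENSE attach a value directly to the head's nominal.
    eps += [f"@{n}<{feat}>{val}" for feat, val in node.get("feats", {}).items()]
    # Dependency relations point from the head's nominal to the dependent's.
    eps += [f"@{n}<{rel}>{dep['nom']}" for rel, dep in node.get("deps", {}).items()]
    # The dependents' own predications follow.
    for dep in node.get("deps", {}).values():
        eps += flatten(dep)
    return eps


# The nested representation in (2).
TERM = {
    "nom": "e", "pred": "prove",
    "feats": {"TENSE": "past"},
    "deps": {
        "ACT": {"nom": "m", "pred": "Marcel"},
        "PAT": {"nom": "c", "pred": "comp."},
    },
}

print(" ^ ".join(flatten(TERM)))
```

Each string in the output corresponds to one conjunct of (3), in the same order.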

2.3 Semantic Construction

Baldridge and Kruijff (2002) show how HLDS representations can be built via CCG derivations. White (2004) improves HLDS construction by operating on flattened representations such as (3) and using a simple semantic index feature in the syntax. We adopt this latter approach, described below.

Elementary predications (EPs), the conjuncts of a flattened representation such as (3), are paired with syntactic categories in the lexicon as shown in (4)–(6) below. Each atomic category has an index feature, shown as a subscript, which makes a nominal available for capturing syntactically induced dependencies.

(4) prove ⊢ (s_e\np_x)/np_y : @_e prove ∧ @_e⟨TENSE⟩past ∧ @_e⟨ACT⟩x ∧ @_e⟨PAT⟩y

(5) Marcel ⊢ np_m : @_m Marcel

(6) completeness ⊢ np_c : @_c completeness

Applications of the combinatory rules co-index the appropriate nominals via unification on the categories. EPs are then conjoined to form the resulting interpretation. For example, in derivation (1), (5) type-raises and composes with (4) to yield (7). The index x is syntactically unified with m, and this resolution is reflected in the new conjoined logical form. (7) can then apply to (6) to yield (8), which has the same conjunction of predications as (3).

(7) Marcel proved ⊢ s_e/np_y : @_e prove ∧ @_e⟨TENSE⟩past ∧ @_e⟨ACT⟩m ∧ @_e⟨PAT⟩y ∧ @_m Marcel

(8) Marcel proved completeness ⊢ s_e : @_e prove ∧ @_e⟨TENSE⟩past ∧ @_e⟨ACT⟩m ∧ @_e⟨PAT⟩c ∧ @_m Marcel ∧ @_c completeness

Since the EPs are always conjoined by the combinatory rules, semantic construction is guaranteed to be monotonic. No semantic information can be dropped during the course of a derivation. This provides a clean way of establishing semantic dependencies as informed by the syntactic derivation. In the next section, we extend this paradigm for use with any number of representational levels.
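The construction of (7) and (8) from the lexical entries (4)–(6) can be sketched as index unification on categories followed by conjunction of predications. This is a minimal illustration, not White's (2004) implementation: the tuple encodings, the convention that argument indices start with `?`, and all function names are assumptions.

```python
def walk(term, subst):
    """Follow bindings until an unbound term is reached."""
    while term in subst:
        term = subst[term]
    return term


def unify_index(a, b, subst):
    a, b = walk(a, subst), walk(b, subst)
    if a == b:
        return subst
    if a.startswith("?"):
        return {**subst, a: b}
    if b.startswith("?"):
        return {**subst, b: a}
    return None                      # two distinct nominals cannot be identified


def unify(c1, c2, subst):
    """Unify categories: atoms are (name, index), functions (result, slash, arg)."""
    if subst is None:
        return None
    if len(c1) == 2 and len(c2) == 2:
        return unify_index(c1[1], c2[1], subst) if c1[0] == c2[0] else None
    if len(c1) == 3 and len(c2) == 3 and c1[1] == c2[1]:
        return unify(c1[2], c2[2], unify(c1[0], c2[0], subst))
    return None


def resolve(cat, subst):
    if len(cat) == 2:
        return (cat[0], walk(cat[1], subst))
    return (resolve(cat[0], subst), cat[1], resolve(cat[2], subst))


def conjoin(eps, subst):
    """Conjoin predications, replacing unified indices; nothing is dropped."""
    return [tuple(walk(t, subst) for t in ep) for ep in eps]


def raise_fwd(sign, result=("s", "?i")):          # (>T)
    cat, eps = sign
    return ((result, "/", (result, "\\", cat)), eps)


def compose_fwd(f, g):                            # (>B)
    (fc, feps), (gc, geps) = f, g
    if len(fc) != 3 or len(gc) != 3 or fc[1] != "/" or gc[1] != "/":
        return None
    subst = unify(fc[2], gc[0], {})
    if subst is None:
        return None
    return (resolve((fc[0], "/", gc[2]), subst), conjoin(geps + feps, subst))


def apply_fwd(f, a):                              # (>)
    (fc, feps), (ac, aeps) = f, a
    if len(fc) != 3 or fc[1] != "/":
        return None
    subst = unify(fc[2], ac, {})
    if subst is None:
        return None
    return (resolve(fc[0], subst), conjoin(feps + aeps, subst))


# Lexical entries (4)-(6); "?x" and "?y" are the verb's open argument indices.
PROVE = (((("s", "e"), "\\", ("np", "?x")), "/", ("np", "?y")),
         [("e", "prove"), ("e", "TENSE", "past"), ("e", "ACT", "?x"), ("e", "PAT", "?y")])
MARCEL = (("np", "m"), [("m", "Marcel")])
COMPLETENESS = (("np", "c"), [("c", "completeness")])

marcel_proved = compose_fwd(raise_fwd(MARCEL), PROVE)      # corresponds to (7)
sentence = apply_fwd(marcel_proved, COMPLETENESS)          # corresponds to (8)
print(sentence)
```

The lists of predications only ever grow, which mirrors the monotonicity noted above: a rule application can resolve an index but cannot remove a conjunct.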

3 Generalized dimensionality

To support a more modular and perspicuous encoding of multiple levels of analysis, we generalize the notion of sign commonly used in CCG. The approach is inspired on the one hand by earlier work by Steedman (2000a) and Hoffman (1995), and on the other by the signs found in constraint-based approaches to grammar. The principal idea is to extend White’s (2004) approach to semantic construction (see §2.3). There, categories and the meaning they help express are connected through co-indexation. Here, we allow for information in any (finite) number of levels to be related in this way.

A sign is an n-tuple of terms that represent information at n distinct dimensions. Each dimension represents a level of linguistic information such as prosody, meaning, or syntactic category. For each dimension, we assume a language that defines well-formed representations, and a set of operations which can create new representations from a set of given representations.

For example, we have by definition a dimension for syntactic categories. The language for this dimension is defined by the rules for category construction: given a set of atomic categories 𝒜, C is a category iff (i) C ∈ 𝒜 or (ii) C is of the form A\_mB or A/_mB with A, B categories and m ∈ {⋆, ⋄, ×, ·}. The set of combinatory rules defines the possible operations on categories.
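The recursive definition of the category language translates directly into a well-formedness check. In this sketch the atom inventory, the modality names, and the 4-tuple encoding `(left, slash, modality, right)` are all assumptions made for illustration.

```python
ATOMS = {"s", "np", "n"}                            # assumed set of atomic categories
MODALITIES = {"star", "diamond", "cross", "dot"}    # assumed names for the four modalities


def is_category(c) -> bool:
    """C is a category iff it is an atom, or A\\_m B / A/_m B with A, B categories."""
    if isinstance(c, str):
        return c in ATOMS
    if isinstance(c, tuple) and len(c) == 4:
        left, slash, modality, right = c
        return (slash in ("/", "\\")
                and modality in MODALITIES
                and is_category(left)
                and is_category(right))
    return False


# (s\np)/np with the permissive modality on both slashes.
transitive = (("s", "\\", "dot", "np"), "/", "dot", "np")
print(is_category(transitive))
```

A term with an unknown atom or an unknown modality is rejected at whatever depth it occurs, since the check recurses through both sides of every slash.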

This syntactic category dimension drives the grammatical analysis, thus guiding the composition of signs. When two categories are combined via a rule, the appropriate indices are unified. It is through this unification of indices that information can be passed between signs. At a given dimension, the co-indexed information coming from the two signs we combine must be unifiable.

With these signs, dimensions interact in a more limited way than in HPSG or LFG. Constraints (resolved through unification) may only be applied if they are invoked through co-indexation on categories. This provides a bound on the number of indices and the number of unifications to be made. As such, full recursion and complex unification as in attribute-value matrices with re-entrancy are avoided. The approach incorporates various ideas from constraint-based approaches, but remains based on a derivational perspective on grammatical analysis and derivational control, unlike e.g. Categorial Unification Grammar. Furthermore, the ability for dimensions to interact through shared indices brings several advantages: (1) “parallel derivations” (Hoffman, 1995) are unnecessary; (2) non-isomorphic, functional structures across different dimensions can be employed; and (3) there is no longer a need to load all the necessary information into syntactic categories (as in Kruijff (2001)).


4 Examples

In this section, we illustrate our approach on several examples involving information structure. We use signs that include the following dimensions.

Phonemic representation: word sequences; composition of sequences is through concatenation.

Prosody: sequences of tunes from the inventory of Pierrehumbert and Hirschberg (1990); composition through concatenation.

Syntactic category: well-formed categories; combinatory rules (see §2).

Information structure: hybrid logic formulas of the form @_d[in]r, with r a discourse referent that has informativity in (theme θ, or rheme ρ) relative to the current point in the discourse d (Kruijff, 2003).

Predicate-argument structure: hybrid logic formulas of the form discussed in §2.3.

Example (9) illustrates a sign with these dimensions. The word-form Marcel bears an H* accent, and acts as a type-raised category that seeks a verb missing its subject. The H* accent indicates that the discourse referent m introduces new information at the current point in the discourse d: i.e. the meaning @_m marcel should end up as part of the rheme (ρ) of the utterance, @_d[ρ]m.

(9) Marcel
    H*
    s_h/(s_h\np_m)
    @_d[ρ]m
    @_m marcel

If a sign does not specify any information at a particular dimension, this is indicated by ⊤ (or an empty line if no confusion can arise).

4.1 Topicalization

We start with a simple example of topicalization in English. In topicalized constructions, a thematic object is fronted before the subject. Given the question Did Marcel prove soundness and completeness?, (10) is a possible response using topicalization:

(10) Completeness, Marcel proved, and soundness, he conjectured.

We can capture the syntactic and information structure effects of such sentences by assigning the following kind of sign to (topicalized) noun phrases:

(11) completeness
     ⊤
     s_i/(s_i/np_c)
     @_d[θ]c
     @_c completeness

This category enables the derivation in Figure 1. The type-raised subject composes with the verb, and the result is consumed by the topicalizing category. The information structure specification stated in the sign in (11) is passed through to the final sign.

The topicalization of the object in (10) only indicates the informativity of the discourse referent realized by the object. It does not yield any indications about the informativity of other constituents; hence the informativity for the predicate and the Actor is left unspecified. In English, the informativity of these discourse referents can be indicated directly with the use of prosody, to which we now turn.
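The way the dimensions of a sign are combined in the topicalization derivation can be sketched as follows. This is an illustration under strong simplifying assumptions: the category dimension is treated as given (the result category and the index bindings are supplied by hand rather than computed by the combinatory rules), and the class `Sign`, its field names, and the tuple encodings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sign:
    phon: tuple          # word sequence, combined by concatenation
    prosody: tuple       # tune sequence, combined by concatenation
    cat: str             # syntactic category (drives the derivation)
    info: frozenset      # information structure: (informativity, referent)
    sem: frozenset       # predicate-argument structure: predications as tuples


def subst(term, bindings):
    """Replace co-indexed variables in one term."""
    return tuple(bindings.get(t, t) for t in term)


def combine(left, right, cat, bindings):
    """Combine two signs dimension by dimension.

    `cat` and `bindings` stand in for the outcome of a combinatory rule on
    the category dimension; the other dimensions only see the shared indices.
    """
    return Sign(
        phon=left.phon + right.phon,
        prosody=left.prosody + right.prosody,
        cat=cat,
        info=frozenset(subst(t, bindings) for t in left.info | right.info),
        sem=frozenset(subst(t, bindings) for t in left.sem | right.sem),
    )


completeness = Sign(("completeness",), (), "s_i/(s_i/np_c)",
                    frozenset({("theta", "c")}),
                    frozenset({("c", "completeness")}))
marcel = Sign(("Marcel",), (), "s_j/(s_j\\np_m)",
              frozenset(), frozenset({("m", "Marcel")}))
proved = Sign(("proved",), (), "(s_p\\np_x)/np_y",
              frozenset(),
              frozenset({("p", "prove"), ("p", "ACT", "x"), ("p", "PAT", "y")}))

marcel_proved = combine(marcel, proved, "s_p/np_y", {"x": "m"})   # >B step
whole = combine(completeness, marcel_proved, "s_p", {"y": "c"})   # > step
print(whole.phon, sorted(whole.info))
```

Only the fronted object contributes to the information structure dimension, so the final sign records the theme status of c and leaves the informativity of the predicate and the Actor unspecified.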

4.2 Prosody & information structure

Steedman (2000a) presents a detailed, CCG-based account of how prosody is used in English as a means to realize information structure. In the model, pitch accents and boundary tones have an effect on both the syntactic category of the expression they mark, and the meaning of that expression.

Steedman distinguishes pitch accents as markers of either the theme (θ) or the rheme (ρ): L+H* and L*+H are θ-markers; H*, L*, H*+L and H+L* are ρ-markers. Since pitch accents mark individual words, not (necessarily) larger phrases, Steedman uses the θ/ρ-marking to spread informativity over the domain and the range of function categories. Identical markings on different parts of a function category not only act as features, but also as occurrences of a single variable. The value of the marking on the domain can thus get passed down (“projected”) to markings on categories in the range.

Constituents bearing no tune have an η-marking, which can be unified with either η, θ or ρ. Phrases with such markings are “incomplete” until they combine with a boundary tone. Boundary tones have the effect of mapping phrasal tones into intonational phrase boundaries. To make these boundaries explicit and to ensure that such “complete” prosodic phrases combine only with other complete prosodic phrases, Steedman introduces two further types of marking, ι and φ, on categories. The φ markings only unify with other φ or ι markings on categories, not with η, θ or ρ. These markings are only introduced to provide derivational control and are not reflected in the underlying meaning (which only reflects η, θ or ρ).

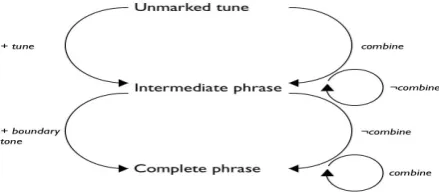

Figure 2 recasts the above as an abstract speci-fication of which different types of prosodic con-stituents can, or cannot, be combined.2 Steedman’s

2

completeness Marcel proved

si/(si/npc) sj/(sj\npm) (sp\npx)/npy @d[θ]c

@ccompleteness @mMarcel @pprove∧@phACTix∧@phPATiy

>B

sp/npy

@pprove∧@phACTim∧@phPATiy∧@mMarcel

>

sp @d[θ]c

@pprove∧@phACTim∧@phPATic∧@mMarcel∧@ccompleteness

Figure 1: Derivation for topicalization.

system can be implemented using just one feature

pros which takes the values ip for intermediate phrases, cp for complete phrases, and up for un-marked phrases. We writespros=ip, or simplysip if no confusion can arise.

Figure 2: Abstract specification of derivational con-trol in prosody

First consider the top half of Figure 2. If a constituent is marked with either a θ- or ρ-tune, the atomic result category of the (possibly complex) category is marked with ip. Prosodically unmarked constituents are marked as up. The lexical entries in (12) illustrate this idea.³

(12) MARCEL          proved        COMPLETENESS
     H*                            L+H*
     s_ip/(s_up\np)  (s_up\np)/np  s_ip$\(s_up$/np)

Combination can proceed in two ways. Either the marked MARCEL and the unmarked proved combine to produce an intermediate phrase (13), or proved and the marked COMPLETENESS combine (14).

(13) MARCEL          proved        COMPLETENESS
     H*                            L+H*
     s_ip/(s_up\np)  (s_up\np)/np  s_ip$\(s_up$/np)
     ---------------------------->B
     s_ip/np

³ The $’s in the category for COMPLETENESS are standard CCG schematizations: s$ indicates all functions into s, such as s\np and (s\np)/np. See Steedman (2000b) for details.

(14) MARCEL          proved        COMPLETENESS
     H*                            L+H*
     s_ip/(s_up\np)  (s_up\np)/np  s_ip$\(s_up$/np)
                     -------------------------------<
                     s_ip\np

For the remainder of this paper, we will suppress up marking and write s_up simply as s.

Examples (13) and (14) show that prosodically marked and unmarked phrases can combine. However, both of these partial derivations produce categories that cannot be combined further. For example, in (14), s_ip/(s\np) cannot combine with s_ip\np to yield a larger intermediate phrase. This properly captures the top half of Figure 2.
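The blocking effect described for (13) and (14) follows from requiring the pros value on an argument to match exactly. The sketch below illustrates this under stated assumptions: atoms are encoded as `(name, pros)` pairs, the `$` schema in the category for COMPLETENESS is instantiated by hand, and all function names are hypothetical.

```python
def atom(name, pros=None):
    return (name, pros)


def fn(result, slash, argument):
    return (result, slash, argument)


def is_fn(cat):
    return len(cat) == 3


def apply_forward(f, a):        # (>)  X/Y  Y    =>  X
    return f[0] if is_fn(f) and f[1] == "/" and f[2] == a else None


def apply_backward(a, f):       # (<)  Y    X\Y  =>  X
    return f[0] if is_fn(f) and f[1] == "\\" and f[2] == a else None


def compose_forward(f, g):      # (>B) X/Y  Y/Z  =>  X/Z
    if is_fn(f) and is_fn(g) and f[1] == g[1] == "/" and f[2] == g[0]:
        return fn(f[0], "/", g[2])
    return None


S_IP, S_UP, NP = atom("s", "ip"), atom("s", "up"), atom("np")
VP_UP = fn(S_UP, "\\", NP)                      # s_up\np
VP_IP = fn(S_IP, "\\", NP)                      # s_ip\np

MARCEL = fn(S_IP, "/", VP_UP)                   # s_ip/(s_up\np)
PROVED = fn(VP_UP, "/", NP)                     # (s_up\np)/np
# s_ip$\(s_up$/np), with s$ instantiated as s\np and as plain s respectively.
COMPLETENESS_VP = fn(VP_IP, "\\", fn(VP_UP, "/", NP))
COMPLETENESS_S = fn(S_IP, "\\", fn(S_UP, "/", NP))

# (13): MARCEL and proved form an intermediate phrase s_ip/np ...
marcel_proved = compose_forward(MARCEL, PROVED)
# ... which the marked object cannot consume, since it seeks s_up/np.
blocked_13 = apply_backward(marcel_proved, COMPLETENESS_S)

# (14): proved and COMPLETENESS form s_ip\np ...
proved_completeness = apply_backward(PROVED, COMPLETENESS_VP)
# ... which the marked subject cannot consume, since it seeks s_up\np.
blocked_14 = apply_forward(MARCEL, proved_completeness)

print(marcel_proved, blocked_13, proved_completeness, blocked_14)
```

In both cases the first combination succeeds because one of the two inputs is unmarked, and the second fails because two intermediate phrases meet without an intervening boundary tone.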

To obtain a complete analysis for (12), boundary tones are needed to complete the intermediate phrases. For example, consider (15) (based on example (70) in Steedman (2000a)):

(15) MARCEL   proved   COMPLETENESS
     H* L              L+H* LH%

To capture the bottom half of Figure 2, the boundary tones L and LH% need categories which create complete phrases out of those for MARCEL and proved COMPLETENESS, and thereafter allow them to combine. Figure 3 shows the appropriate categories and complete analysis.

We noted earlier that downstepped phrasal tunes form an exception to the rule that intermediate phrases cannot combine. To enable this, we should mark not only the result category with ip (tune), but also any leftward argument(s) with ip (downstep). Thus, the effect of (lexically) combining a downstep tune with an unmarked category is specified by the following template: add marking x_ip$\y_ip to an unmarked category of the form x$\y. The derivation in Figure 5 illustrates this idea on example (64) from Steedman (2000a).

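Lexical categories:
  MARCEL (H*): s_ip/(s\np)
  L: (s_cp/s_cp$)\⋆(s_ip/s$)
  proved: (s\np)/np
  COMPLETENESS (L+H*): s_ip$\(s$/np)
  LH%: s_cp$\⋆s_ip$
Derivation:
  < (MARCEL L): s_cp/(s_cp\np)
  < (proved COMPLETENESS): s_ip\np
  < (proved COMPLETENESS LH%): s_cp\np
  > (MARCEL L proved COMPLETENESS LH%): s_cp

Figure 3: Derivation including tunes and boundary tones; (70) from (Steedman, 2000a)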

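Lexical signs (category; information structure; predicate-argument structure):
  Marcel: np; @_m Marcel
  PROVED (L+H*): (s_ip:p\np_x)/np_y; @_d[θ]p; @_p prove ∧ @_p⟨ACT⟩x ∧ @_p⟨PAT⟩y
  LH%: s_cp$\⋆s_ip$
  COMPLETENESS (H*): s_ip\(s/np_c); @_d[ρ]c; @_c completeness
  LL%: (s_cp\s_cp$)\⋆(s_ip\s$)
Derivation:
  >T (Marcel): s_ip/(s_ip\np); @_m Marcel
  < (COMPLETENESS LL%): s_cp\(s_cp/np_c); @_d[ρ]c; @_c completeness
  >B (Marcel PROVED): s_ip/np; @_d[θ]p; @_p prove ∧ @_p⟨ACT⟩m ∧ @_p⟨PAT⟩y ∧ @_m Marcel
  < (Marcel PROVED LH%): s_cp/np_y; @_d[θ]p; @_p prove ∧ @_p⟨ACT⟩m ∧ @_p⟨PAT⟩y ∧ @_m Marcel
  < (Marcel PROVED LH% COMPLETENESS LL%): s_cp; @_d[θ]p ∧ @_d[ρ]c; @_p prove ∧ @_p⟨ACT⟩m ∧ @_p⟨PAT⟩c ∧ @_m Marcel ∧ @_c completeness

Figure 4: Information structure for derivation for (67)-(68) from (Steedman, 2000a)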

on atomic categories makes a nominal (discourse referent) available. We represent information structure as a formula @_d[i]r at a dimension separate from the syntactic category. The nominal r stands for the discourse referent, which has informativity i with respect to the current point in the discourse d (Kruijff, 2003). Following Steedman, we distinguish two levels of informativity, namely θ (theme) and ρ (rheme).

We start with a minimal assignment of informativity: a theme-tune on a constituent sets the informativity of the discourse referent r realized by the constituent to θ and a rheme-tune sets it to ρ. This is a minimal assignment in the sense that we do not project informativity; instead, we only set informativity for those discourse referents whose realization shows explicit clues as to their information status. The derivation in Figure 4 illustrates this idea and shows the construction of both logical form and information structure.
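The minimal assignment can be sketched as a lookup from pitch accents to informativity, using the classification of θ- and ρ-markers given in §4.2. The function names and the string rendering of the formulas are assumptions for illustration.

```python
# Pitch accents classified as in Section 4.2 (following Steedman).
THEME_TUNES = {"L+H*", "L*+H"}
RHEME_TUNES = {"H*", "L*", "H*+L", "H+L*"}


def informativity(tune):
    """Map a pitch accent to theta or rho; unmarked words get no value."""
    if tune in THEME_TUNES:
        return "theta"
    if tune in RHEME_TUNES:
        return "rho"
    return None


def info_structure(words):
    """Collect @d[i]r statements for referents with an explicit prosodic clue.

    `words` is a list of (referent, tune) pairs; tune is None if unaccented.
    No informativity is projected onto unmarked referents.
    """
    return [f"@d[{informativity(tune)}]{ref}"
            for ref, tune in words
            if informativity(tune) is not None]


# Figure 4: Marcel is unaccented, PROVED bears L+H*, COMPLETENESS bears H*.
figure4 = [("m", None), ("p", "L+H*"), ("c", "H*")]
print(info_structure(figure4))
```

The referent m receives no statement at all, which matches the final sign in Figure 4, where only p and c carry informativity.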

Indices can also impose constraints on the informativity of arguments. For example, in the downstep example (Figure 5), the discourse referents corresponding to ANNA and SAYS are both part of the theme. We specify this with the constituent that has received the downstepped tune. The referent of the subject of SAYS (indexed x) must be in the theme along with the referent s for SAYS. This is satisfied in the derivation: a unifies with x, and we can unify the statements about a’s informativity coming from ANNA (@_d[θ]a) and SAYS (@_d[θ]x with x replaced by a in the >B step).

5 Conclusions

In this paper, we generalize the traditional Saussurian sign in CCG with an n-dimensional linguistic sign. The dimensions in the generalized linguistic sign can be related through indexation. Indexation places constraints on signs by requiring that co-indexed material is unifiable, on a per-dimension basis. Consequently, we do not need to overload the syntactic category with information from different dimensions.

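ANNA SAYS he proved COMPLETENESS
L+H* !L+H* LH%

Lexical signs (category; information structure):
  ANNA: np_ip:a; @_d[θ]a
  SAYS: (s_ip:s\np_ip:x)/s_y; @_d[θ]s ∧ @_d[θ]x
  he: s/(s\np); @_d[θ](pron)
  proved: (s_p\np)/np; @_d[i]p
Derivation:
  >T (ANNA): s_ip/(s_ip\np_ip); @_d[θ]a
  >B (ANNA SAYS): s_ip/s; @_d[θ]s ∧ @_d[θ]a
  >B (ANNA SAYS he): s_ip/(s\np); @_d[θ]s ∧ @_d[θ]a ∧ @_d[θ](pron)
  >B (ANNA SAYS he proved): s_ip/np; @_d[θ]s ∧ @_d[θ]a ∧ @_d[θ](pron) ∧ @_d[i]p

Figure 5: Information structure for derivation for (64) from (Steedman, 2000a)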

referenced. As analysis remains driven only by inference over categories, only those constraints triggered by indexation on the categories are imposed. We do not allow for re-entrancy.

It is possible to conceive of a scenario in which the various levels can contribute toward determining the well-formedness of an expression. For example, we may wish to evaluate the current information structure against a discourse model, and reject the analysis if we find it is unsatisfiable. If such a move is made, then the complexity will be bounded by the complexity of the dimension for which it is most difficult to determine satisfiability.

Acknowledgments

Thanks to Ralph Debusmann, Alexander Koller, Mark Steedman, and Mike White for discussion. Geert-Jan Kruijff’s work is supported by the DFG SFB 378 Resource-Sensitive Cognitive Processes, Project NEGRA EM 6.

References

Jason Baldridge and Geert-Jan Kruijff. 2002. Coupling CCG and Hybrid Logic Dependency Semantics. In Proc. of 40th Annual Meeting of the ACL, pages 319–326, Philadelphia, Pennsylvania.

Jason Baldridge and Geert-Jan Kruijff. 2003. Multi-Modal Combinatory Categorial Grammar. In Proc. of 10th Annual Meeting of the EACL, Budapest.

Jason Baldridge. 2002. Lexically Specified Derivational Control in Combinatory Categorial Grammar. Ph.D. thesis, University of Edinburgh.

Patrick Blackburn. 2000. Representation, reasoning, and relational structures: a hybrid logic manifesto. Journal of the Interest Group in Pure Logic, 8(3):339–365.

Beryl Hoffman. 1995. Integrating "free" word order syntax and information structure. In Proc. of 7th Annual Meeting of the EACL, Dublin.

Ronald M. Kaplan and Joan Bresnan. 1982. Lexical-functional grammar: A formal system for grammatical representation. In The Mental Representation of Grammatical Relations, pages 173–281. The MIT Press, Cambridge, MA.

Geert-Jan M. Kruijff. 2001. A Categorial-Modal Logical Architecture of Informativity: Dependency Grammar Logic & Information Structure. Ph.D. thesis, Charles University, Prague, Czech Republic.

Geert-Jan M. Kruijff. 2002. Formulating a category of informativity. In Hilde Hasselgard, Stig Johansson, Bergljot Behrens, and Cathrine Fabricius-Hansen, editors, Information Structure in a Cross-Linguistic Perspective, pages 129–146. Rodopi, Amsterdam.

Geert-Jan M. Kruijff. 2003. Binding across boundaries. In Geert-Jan M. Kruijff and Richard T. Oehrle, editors, Resource Sensitivity, Binding, and Anaphora. Kluwer Academic Publishers, Dordrecht.

John T. Maxwell III and Ronald M. Kaplan. 1993. The interface between phrasal and functional constraints. Computational Linguistics, 19(4):571–590.

Janet Pierrehumbert and Julia Hirschberg. 1990. The meaning of intonational contours in the interpretation of discourse. In P. Cohen, J. Morgan, and M. Pollack, editors, Intentions in Communication. The MIT Press, Cambridge, MA.

Carl Pollard and Ivan A. Sag. 1993. Head-Driven Phrase Structure Grammar. University of Chicago Press, Chicago IL.

Mark Steedman. 2000a. Information structure and the syntax-phonology interface. Linguistic Inquiry, 31(4):649–689.

Mark Steedman. 2000b. The Syntactic Process. The MIT Press, Cambridge, MA.

Enric Vallduví and Elisabet Engdahl. 1996. The linguistic realization of information packaging. Linguistics, 34:459–519.