Available online:

https://edupediapublications.org/journals/index.php/IJR/

P a g e | 1240 Abstract: In up to date instances, some study believes thechallenge of comfortable with efficient public data integrity auditing for unified dynamic data. On the other hand, these techniques are still no longer relaxed beside the collusion of cloud storage server as good as revoked group clients for the duration of person revocation in sensible cloud storage system. In this paper, we found out that the collusion attack within the exiting scheme. An effective public integrity auditing scheme with relaxed workforce user revocation based on vector commitment plus verifier-local revocation group signature. We designed a concrete scheme with a new constitution known as Decrypt key, which presents effectivity and reliability assurance for convergent key administration on mutually user along with cloud storage sides. The design is to use de-duplication to the convergent keys to affect secret sharing approaches.

Key Words: Key management, Insider attacks, Outsider attacks, Data confidentiality, Integrity Checking.

I. INTRODUCTION

The development of cloud computing encourages the endeavors and group to subcontract their data to third-party cloud service provider. This may increasingly growth the storage drawbacks of useful resource limit local contraptions. In up to date times, quite a lot of lucrative cloud storage services, such as the easy storage provider, data backup offerings, practical cloud based software Google drive are developed for cloud utility. Ever considering the fact that the cloud servers could return unacceptable outcome, it’s seeing that of servers’ hardware failure or software failure. Mostly human preservation may just lead to problems. And malicious attack will result in unacceptable loss or outcome of data. To restrict from this concern, we're in need of data integrity and accessibility. This data integrity and accessibility are helps to preserve data of cloud users. It additionally helps to furnish privateness to the users’ data. To triumph over the above critical

protection dispute of in these days’s cloud storage services, simple replication, data dispersion scheme are a long way from intelligent declare. To make certain the provision of data when a minimum of repositories we want later protocols. Then again, they don't present warranty about the availability of each repository. This may increasingly surely restrict the reassurance, which the protocols will also be equipped to provide relying events. To furnish integrity and availability of distant cloud storage, now we have some decision and their choices. On this rationalization, if a approach supports data modification, we are saying it's a dynamic scheme, if no longer static one. Yet another substitute approach is restrained dynamic scheme, it is just like that one, but it competently support few detailed operations, particularly append operation.

[A] Cloud Computing

Cloud computing is nothing but internet based computing which made revolution in today's world. It is the biggest innovation which uses advanced computational power and improves data sharing and data storing capabilities. Cloud is a large group of interconnected computers, which is a major change in how we store data and run application. Cloud computing is a shared pool of configurable computing resources, on demand network access and provisioned by the service provider [1].The advantage of cloud is cost savings. The prime disadvantage is security. The cloud computing security contains to a set of policies, technology & controls deployed to protect data, application & the associated infrastructure of cloud computing.

Some security and privacy issues that need to be considered. The only thing was the cloud computing lacks regarding the issues of data

Public Auditing for Group User Revocation in Cloud Data

1Mr. Rammohan Burra,2Mr. N. Vijay Kumar,3Dr. R. Vijayaprakash

1M.Tech.(S.E.) Scholar, Dept.of CSE, SR Engineering college,Warangal,Telangana, India.

2Assistant Professor, Dept.of CSE,SR Engineering college, Warangal, Telangana, India.

3HOD, Dept.of CSE,SR Engineering college, Warangal, Telangana, India.

Available online:

https://edupediapublications.org/journals/index.php/IJR/

P a g e | 1241 integrity, data privacy, and data accessed byunauthorised members.

[B] Data integrity

Integrity is nothing but consistency. It is a major factor that affects on the performance of the cloud. Data integrity contains protocols for writing of the data in a reliable manner to the persistent data storages which can be retrieved in the same format without any changes later. Maintaining integrity of shared data is quite difficult task. Numbers of mechanisms have been proposed [2]-[10] to protect integrity of data. Concept of attaching Signature to each block of data is used in these mechanisms. Data Integrity is most important of all the security issues in cloud data storages as it ensures completeness of data as well as that the data is correct, accessible, consistent and of high quality. Data model consist of three types of integrity constraints:

[C] Public Data Auditing in Cloud

On cloud we can able to store data as a group and share it or modify it within a group. In cloud data storage contains two entities as cloud user (group members) and cloud service provider/ cloud server. Cloud user is a person who stores large amount of data on cloud server which is managed by the cloud service provider. User can upload their data on cloud and share it within a group. A cloud service provider will provide services to cloud user. The major issue in cloud data storage is to obtain correctness and integrity of data stored on the cloud. Cloud Service Provider (CSP) has to provide some form of mechanism through which user will get the confirmation that cloud data is secure or is stored as it is. No data loss or modification is done by unauthenticated member. To achieve security data auditing concept is come into picture. This can be achieved in 2 ways as

d party based on who does the verification.

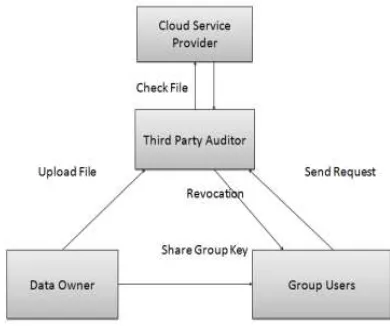

Fig.1 Architecture of Cloud Data Storage Service

In cloud computing structure data is saved centrally and managing this centralised data and supplying safety to it is rather complex mission. TPA is used on this obstacle. The reliability is extended as data is dealt with through TPA but data integrity is not done. TPA uses encryption to encrypt the contents of the file. It assessments data integrity but there may be threat of TPA itself leaks user's data.

II. RELATED WORKS

A significant amount of researchers have committed huge attention to the troubles on find out how to securely outsource nearby pile as much as remote cloud server. The main issue of remote data integrity and availability auditing attacks the attestation of many researchers. Sagarika Dev Roy, et.Al (2014) proposed a strategy for convenient outsourcing of linear Computations into the cloud environment. Outsourcing is a original system engaged in the business world when the client chooses to farm out a distinct mission to an agent for the benefit of the company in terms of time and cost. They proposed methodology to detecting a malicious server, in an effective influence verification process.

Available online:

https://edupediapublications.org/journals/index.php/IJR/

P a g e | 1242 Within the course of protection evaluation andperformance evaluation, their scheme is secure and high efficiency.

FrancescSebe, et.Al (2008) proposed a methodology to determine the efficent of remote data control or possession. For checking the data possession in a complicated expertise method such as power services, airports, data vaults, and,defence techniques is a subject of important value. Data possession checking protocols allows for us to verify a remote server is able to admission an uncorrupted file. In this kind of means that the verifier needn't to understand in regards to the entire file, that is going to be proven. Unfortunately, present protocols only allow a constrained quantity of successive verifications or just the impractical from the computational factor of view. On this grants a brand new protocol for distant data possession checking.

Giuseppe Ateniese, et.Al (2008) proposed a methodology to function on the distant storage data in a excessive secured manner. The primary hindrance is how so much ordinarily, effectively and securely the approach will confirm that a storage server is realistically storing its client’s. Key factor is the purchasers’ outsourced data are probably very colossal. The storage server is believed to be now not depended on in terms of both the safety and reliability. It would unkindly or unintentionally wipe out data being hosted. However the quandary is exacerbated through the user being a small computing device with partial assets. Previous work has care for this crisis that is use public key cryptography or outsource its data in encrypted structure. In this paper, they developed a enormously efficient and expedient system depend entirely on symmetric key cryptography. If detection of any amendment or deletion of small constituents of the file is primary then erasure codes would be used.

Jiawei Yuan, et.Al (2014) proposed a brand new procedure based on some modern methods reminiscent of depend on authentication polynomial tags and linear authenticators. Data integrity auditing is finished at the same time on this process. The proposed suggestion is to signify the consistent real time verbal exchange and in addition the computational rate on the clients’

aspect. It supports both public auditing together with batch auditing process. The safety of our proposed scheme is entirely established on the Computational Diffie-Hellman hitch. Many data loss and corruption routine are stated towards the good known cloud service vendors, data house owners, to unravel these problems they have to periodically audit the integrity of their outsourced data. And likewise every cloud service vendors have got to fortify their efficiency of cloud storage. To scale down the useless redundant copies, the cloud storage servers would deduplicate the data. Through having just one or few copies for each file and making a link to the file for each person who asks the identical file saved in the disk.

III. PROPOSED METHOD

The traditional encryptions have want of distinctive users to encrypt their data, with the possess keys of the person. Accordingly, the identical data copies of unique users will lead to assorted cipher texts. It creates integrity checking procedure is an inconceivable venture. Data outsourcing hoist security and privateness fear. We have to believe one third-occasion vendors for proper implementation of confidentiality, integrity checking, and access control mechanisms. The reward approach use average encryption scheme for deciding on reproduction blocks, the blocks are stored in cloud. In Cloud Storage, general encryption of identical files generates equal key and same cipher textual content. Accordingly data de duplication is inconceivable in encrypted data. When user misplaced the key, there used to be impossible to revive the original content material of the file. Message digest algorithm supplies a doable option to put in force data confidentiality at the same time realizing duplication.

Available online:

https://edupediapublications.org/journals/index.php/IJR/

P a g e | 1243 the cipher texts. Cipher texts are capable to decryptvia the corresponding clients’ with their convergent keys. Convergent encryption sensible is to efficiently and reliably control a huge number of convergent keys.

Fig. 1. Architecture of a proposed system

The certain data block will probably be chosen and those blocks are placed within the cloud provider provider’s area. We're utilising crypto graphic algorithm for integrity checking. Message authentication code is the scheme of manufacturing Message digest for input file. The integrity checking should be executed by way of 0.33 social gathering auditor by way of checking this message digest code.Earlier than importing file; data proprietor ought to send the hash key to the third party auditor. Third get together Auditor receives the key and affirm with cloud provider supplier to determine whether this file is already uploaded or not. In this module, user revokes the content via getting secret key of data proprietor. Data proprietor have to share the secret key for crew clients. User downloads the file from the cloud service provider using hash key.

IV. CONCLUSION:

Data auditing is the method of conducting an data evaluation to measure how manufacturer's data is fit for agreed operate. This engages profiling of data and assesses the collision of pitiable best data on the group's efficiency and profits. This paper proposed approach to comprehend efficient and relaxed data integrity auditing for dynamic data. The proposed model consists of the general public data auditing. This technique will provide higher

data confidentiality examine to other methodologies.

REFERENCES

[1] P. Mell and T. Grance, “Draft NIST working definition of cloud computing”.

[2] C. Wang, Q. Wang, K. Ren, and W. Lou, “Towards Secure and Dependable Storage Services in Cloud Computing,” IEEE Transactions on Services Computing, vol. 5, no. 2, pp. 220–232, 2011.

[3] Y. Zhu, G.-J. Ahn, H. Hu, S. S. Yau, H. G. An, and S. Chen, “Dynamic Audit Services for Outsourced Storage in Clouds,” IEEE Transactions on Services Computing, accepted.

[4] S. Marium, Q. Nazir, A. Ahmed, S. Ahthasham and Aamir M. Mirza, “Implementation of EAP with RSA for Enhancing The Security of Cloud Computig”, International Journal of Basic and Applied Science, vol 1, no. 3, pp. 177-183, 2012

[5] Balkrishnan. S, Saranya. G, Shobana. S and Karthikeyan.S, “Introducing Effective Third Party Auditing (TPA) for Data Storage Security in Cloud”, International Journal of computer science and Technology, vol. 2, no. 2, ISSN 2229-4333 (Print) | ISSN: 0976- 8491(Online), June 2012

[6] K. Kiran Kumar, K. Padmaja, P. Radha Krishna, “Automatic Protocol Blocker for Privacy-Preserving Public Auditing in Cloud Computing”, International Journal of Computer science and Technology, vol. 3 pp, ISSN. 0976-8491(Online), pp. 936-940, ISSN: 2229-4333 (Print), March 2012

[7] Jachak K. B., Korde S. K., Ghorpade P. P. and Gagare G. J.,“Homomorphic Authentication with Random Masking Technique Ensuring Privacy & Security in Cloud Computing”, Bioinfo Security Informatics, vol. 2, no. 2, pp. 49-52, ISSN. 2249-9423, 12 April 2012

[8] J. Yuan and S. Yu, “Proofs of Retrievability with Public Verifiability and Constant Communication Cost in Cloud,” in Proceedings of ACM ASIACCS-SCC’13, 2013 [9] H. Shacham and B. Waters, “Compact Proofs of Retrievability,”in the Proceedings of ASIACRYPT 2008. SpringerVerlag,2008,pp.90–107.

Available online:

https://edupediapublications.org/journals/index.php/IJR/

P a g e | 1244 [11] S. Halevi, D. Harnik, B. Pinkas, and A.Shulman-Peleg,‘‘Proofs of Ownership in Remote Storage Systems,’’ in Proc.ACM Conf. Comput. Commun. Security,Y.Chen,G.Danezis,and V. Shmatikov, Eds., 2011, pp. 491-500.

[12] D.Harnik, B.Pinkas, A.Shulman-Peleg, ‘‘Side Channels in Cloud Services: Deduplication in Cloud Storage,’’IEEE SecurityPrivacy, vol. 8, no. 6, pp. 40-47, Nov./Dec. 2010.

[13] S. Kamara, K. Lauter, ‘‘Cryptographic Cloud Storage,’’ in Proc. Financial Cryptography: Workshop Real-Life Cryptograph. Protocols Standardization, 2010, pp. 136-149.