Efficient probabilistic top-down and left-corner parsingt

B r i a n R o a r k a n d M a r k J o h n s o n C o g n i t i v e a n d Linguistic Sciences

Box 1978, B r o w n University P r o v i d e n c e , RI 02912, U S A

brian-roark@brown, edu mj @cs. brown, edu

A b s t r a c t

This paper examines efficient predictive broad- coverage parsing without dynamic program- ming. In contrast to b o t t o m - u p methods, depth-first top-down parsing produces partial parses that are fully connected trees spanning the entire left context, from which any kind of non-local dependency or partial semantic inter- pretation can in principle be read. We con- trast two predictive parsing approaches, top- down and left-corner parsing, and find b o t h to be viable. In addition, we find that enhance- ment with non-local information not only im- proves parser accuracy, but also substantially improves the search efficiency.

1 I n t r o d u c t i o n

Strong empirical evidence has been presented over the past 15 years indicating that the hu- m a n sentence processing mechanism makes o n - line use of contextual information in the preced- ing discourse (Crain and Steedman, 1985; Alt- m a n n and Steedman, 1988; Britt, 1994) and in the visual environment (Tanenhaus et al., 1995). These results lend support to Mark Steedman's (1989) "intuition" that sentence interpretation takes place incrementally, and that partial in- terpretations are being built while the sentence is being perceived. This is a very commonly held view among psycholinguists today.

Many possible models of h u m a n sentence pro- cessing can be made consistent with the above view, b u t the general assumption that must un- derlie t h e m all is that explicit relationships be- tween lexical items in the sentence must be spec- ified incrementally. Such a processing mecha-

tThis material is based on work supported by the

National Science Foundation under Grant No. SBR-

9720368.

nism stands in marked contrast to dynamic pro- gramming parsers, which delay construction of a constituent until all of its sub-constituents have been completed, and whose partial parses thus consist of disconnected tree fragments. For ex- ample, such parsers do not integrate a main verb into the same tree structure as i t s subject NP until the VP has been completely parsed, and in many cases this is the final step of the entire parsing process. W i t h o u t explicit on-line inte- gration, it would be difficult (though not impos- sible) to produce partial interpretations on-line. Similarly, it may be difficult to use non-local statistical dependencies (e.g. between subject and main verb) to actively guide such parsers.

Our predictive parser does not use dynamic programming, but rather maintains fully con- nected trees spanning the entire left context, which make explicit the relationships between constituents required for partial interpretation. The parser uses probabilistic best-first pars- ing methods to pursue the most likely analy- ses first, and a beam-search to avoid the non- termination problems typical of non-statistical top-down predictive parsers.

There are two main results. First, this ap- proach works and, with appropriate attention to specific algorithmic details, is surprisingly efficient. Second, not just accuracy b u t also efficiency improves as the language model is made more accurate. This bodes well for fu- ture research into the use of other non-local (e.g. lexical and semantic) information to guide the parser.

2 P a r s e r a r c h i t e c t u r e

T h e parser proceeds incrementally from left to right, with one item of look-ahead. Nodes are e x p a n d e d in a s t a n d a r d top-down, left-to-right fashion. T h e parser utilizes: (i) a probabilis- tic context-free g r a m m a r (PCFG), induced via s t a n d a r d relative frequency estimation from a corpus of parse trees; a n d (ii) look-ahead prob- abilities as described below. Multiple compet- ing partial parses (or analyses) are held on a priority queue, which we will call the p e n d i n g

heap. T h e y are ranked by a figure of merit (FOM), which will be discussed below. Each analysis has its own stack of nodes to be ex- panded, as well as a history, probability, and FOM. T h e highest ranked analysis is p o p p e d from t h e p e n d i n g heap, and the category at the top of its stack is expanded. A category is ex- p a n d e d using every rule which could eventually reach t h e look-ahead terminal. For every such rule expansion, a new analysis is created 1 and pushed back onto the p e n d i n g heap.

T h e FOM for an analysis is the p r o d u c t of the probabilities of all PCFG rules used in its deriva- tion and w h a t we call its look-ahead probabil- ity (LAP). T h e LAP approximates the p r o d u c t of the probabilities of t h e rules t h a t will be re- quired to link t h e analysis in its current state with the look-ahead t e r m i n a l 2. T h a t is, for a g r a m m a r G, a stack state [C1 ... C,] and a look- ahead t e r m i n a l item w:

(1) L A P --- PG([C1. . . Cn] -~ w a )

We recursively e s t i m a t e this with two empir- ically observed conditional probabilities for ev- ery n o n - t e r m i n a l Ci on the stack: /~(Ci 2+ w) a n d / ~ ( C i -~ e). T h e LAP approximation for a given stack state and look-ahead t e r m i n a l is:

(2)

PG([Ci . .. Ca] wot) P ( C i w) +W h e n the t o p m o s t stack category of an analy- sis m a t c h e s t h e look-ahead terminal, the termi- nal is p o p p e d from t h e stack and the analysis

1We count each of these as a parser state (or rule expansion) considered, which can be used as a measure of efficiency.

2Since this is a non-lexicalized grammar, we are tak- ing pre-terminal POS markers as our terminal items.

is pushed onto a second priority queue, which we will call the success heap. Once there are "enough" analyses on the success heap, all those remaining on the p e n d i n g heap are discarded. T h e success heap t h e n becomes t h e p e n d i n g heap, and the look-ahead is moved forward to the next item in the input string. W h e n the end of the input string is reached, the analysis with the highest probability and an e m p t y stack is r e t u r n e d as the parse. If no such parse is found, an error is returned.

T h e specifics of the beam-search d i c t a t e how m a n y analyses on the success heap constitute "enough". One approach is to set a constant b e a m width, e.g. 10,000 analyses on the suc- cess heap, at which point the parser moves to the next item in the input. A problem with this approach is t h a t parses towards t h e b o t t o m of the success heap m a y be so unlikely relative to those at the top t h a t t h e y have little or no chance of becoming the most likely parse at the end of the day, causing wasted effort. A n al- ternative approach is to d y n a m i c a l l y vary the b e a m w i d t h by stipulating a factor, say 10 -5, and proceed until t h e best analysis on t h e pend- ing heap has an FOM less t h a n 10 -5 times t h e probability of the best analysis on t h e success heap. Sometimes, however, the n u m b e r of anal- yses t h a t fall within such a range can be enor- mous, creating nearly as large of a processing b u r d e n as the first approach. As a compromise between these two approaches, we stipulated a base b e a m factor a (usually 10-4), a n d the ac- tual b e a m factor used was a •/~, w h e r e / 3 is the n u m b e r of analyses on the success heap. Thus, when f~ is small, the b e a m stays relatively wide, to include as m a n y analyses as possible; b u t as /3 grows, the b e a m narrows. We found this to be a simple a n d successful compromise.

Of course, with a left recursive g r a m m a r , such a top-down parser m a y never t e r m i n a t e . If

(a) (b) (c) (d)

NP NP

DT+JJ+JJ NN DT NP-DT

DT+JJ JJ cat the JJ NP-DT-JJ

DT JJ h a p p y fat JJ NN

I

I

I

I

the fat h a p p y cat

NP NP

DT NP-DT DT NP-DT

l

the JJ NP-DT-JJ

tLe JJ NP-DT-JJ

_J

fat JJ NP-DT-JJ-JJ

fiat JJ NP-DT-JJ-JJ

happy NN h a p p y NN NP-DT-JJ-JJ-NN

I

I

I

c a t c a t e

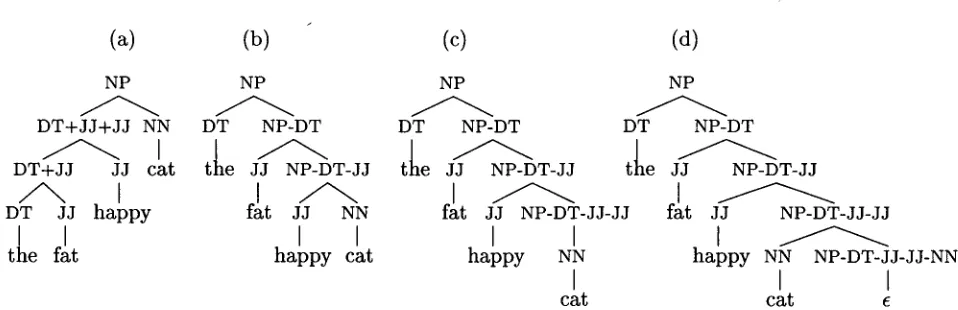

Figure 1: Binaxized trees: (a) left binaxized (LB); (b) right binaxized to b i n a r y (RB2); (c) right binaxized to u n a r y (RB1); (d) right binarized to nullaxy (RB0)

3 G r a m m a r t r a n s f o r m s

Nijholt (1980) characterized parsing strategies in terms of announce points: the point at which a parent category is a n n o u n c e d (identified) rel- ative to its children, and the point at which the rule expanding the parent is identified. In pure top-down parsing, a parent category and the rule expanding it are a n n o u n c e d before any of its children. In pure b o t t o m - u p parsing, t h e y are identified after all of the children. Gram- m a r transforms are one m e t h o d for changing the a n n o u n c e points. In top-down parsing with an appropriately binaxized g r a m m a r , the pax- ent is identified before, but the rule expanding the parent after, all of the children. Left-corner parsers a n n o u n c e a parent category and its ex- p a n d i n g rule after its leftmost child has been completed, but before any of the other children.

3.1 D e l a y i n g r u l e i d e n t i f i c a t i o n t h r o u g h b i n a r i z a t i o n

Suppose t h a t the category on the top of the stack is an N P and there is a determiner (DT)

in the look-ahead. In such a situation, there is no information to distinguish between the rules

N P ~ D T J J N N a n d N P - - + D T J J N N S .

If the decision can be delayed, however, until such a time as the relevant pre-terminal is in the look-ahead, the parser can make a more in- formed decision. G r a m m a r binaxization is one way to do this, by allowing the parser to use a rule like N P --+ D T N P - D T , where the new non-terminal N P - D T can expand into anything t h a t follows a D T in an N P . T h e expansion of

N P - D T occurs only after the next pre-terminal is in the look-ahead. Such a delay is essential

for an efficient i m p l e m e n t a t i o n of the kind of incremental parser t h a t we are proposing.

There axe actually several ways to make a g r a m m a r binary, some of which are b e t t e r t h a n others for our parser. T h e first distinction t h a t can be d r a w n is between w h a t we will call left

binaxization (LB) versus right binaxization (RB, see figure 1). In the former, the leftmost items on the righthand-side of each rule are g r o u p e d together; in the latter, the rightmost items on the righthand-side of the rule are g r o u p e d to- gether. Notice that, for a top-down, left-to-right parser, RB is the appropriate transform, be- cause it underspecifies the right siblings. W i t h LB, a top-down parser must identify all of the siblings before reaching the leftmost item, which does not aid our purposes.

W i t h i n RB transforms, however, there is some variation, with respect to how long rule under- specification is maintained. One m e t h o d is to have the final underspecified category rewrite as a binary rule (hereafter RB2, see figure lb). An- other is to have the final underspecified category rewrite as a u n a r y rule (RB1, figure lc). T h e last is to have the final underspecified category rewrite as a nullaxy rule (RB0, figure ld). No- tice t h a t the original motivation for RB, to delay specification until the relevant items are present in the look-ahead, is not served by RB2, because the second child must be specified w i t h o u t being present in the look-ahead. RB0 pushes t h e look- ahead out to the first item in the string after the constituent being expanded, which can be use- ful in deciding between rules of unequal length, e.g. NP---+ D T N N and N P ~ D T N N N N .

[image:3.612.63.542.63.218.2]Binarization Rules in Percent of Avg. States Avg. Labelled Avg. MLP Ratio of Avg.

Grammar Sentences Considered Precision and Labelled Prob to Avg.

Parsed* Recall t Prec/Rec t MLP Prob t

None 14962 34.16 19270 .65521 .76427 .001721

LB 37955 33.99 96813 .65539 .76095 .001440

I~B1 29851 91.27 10140 .71616 .72712 .340858

RB0 41084 97.37 13868 .73207 .72327 .443705

Beam Factor = 10 -4 *Length ~ 40 (2245 sentences in F23 Avg. length -- 21.68) t o f those sentences parsed

Table 1: The effect of different approaches to binarization

ing the effect of different binarization ap- proaches on parser performance. The gram- mars were induced from sections 2-21 of the P e n n Wall St. J o u r n a l Treebank (Marcus et al., 1993), and tested on section 23. For each transform tested, every tree in the training cor- pus was t r a n s f o r m e d before g r a m m a r induc- tion, resulting in a t r a n s f o r m e d PCFG and look- ahead probabilities e s t i m a t e d in the s t a n d a r d way. Each parse r e t u r n e d by the parser was de- t r a n s f o r m e d for evaluation 3. T h e parser used in each trial was identical, with a base b e a m factor c~ = 10 -4. T h e performance is evaluated using these measures: (i) the percentage of can- didate sentences for which a parse was found (coverage); (ii) the average n u m b e r of states (i.e. rule expansions) considered per candidate sentence (efficiency); and (iii) the average la- belled precision and recall of those sentences for which a parse was found (accuracy). We also used the same g r a m m a r s with an exhaustive, b o t t o m - u p CKY parser, to ascertain b o t h the accuracy and p r o b a b i l i t y of the m a x i m u m like- lihood parse (MLP). We can then additionally compare the parser's performance to the MLP's on those same sentences.

As expected,

left

binarization conferred no benefit to our parser.Right binarization, in con-

trast, improved performance across the board. RB0 provided a substantial improvement in cov- erage and accuracy over RB1, with something of a decrease in efficiency. This efficiency hit is p a r t l y a t t r i b u t a b l e to the fact that the same tree has more nodes with RB0. Indeed, the effi- ciency improvement with right binarization over the s t a n d a r d g r a m m a r is even more interesting in light of the great increase in the size of the grammars.3See Johnson (1998) for details of the transform/de- transform paradigm.

It is worth noting at this point that, w i t h the RB0 grammar, this parser is now a viable broad- coverage statistical parser, with good coverage, accuracy, and efficiency 4. Next we considered the left-corner parsing strategy.

3 . 2 L e f t - c o r n e r p a r s i n g

Left-corner (LC) parsing (Rosenkrantz and Lewis II, 1970) is a well-known s t r a t e g y t h a t uses b o t h b o t t o m - u p evidence (from the left corner of a rule) and t o p - d o w n prediction (of the rest of the rule). Rosenkrantz and Lewis showed how to transform a context-free gram- mar into a g r a m m a r that, when used b y a top- down parser, follows the same search p a t h as an LC parser. These LC g r a m m a r s allow us to use exactly the same predictive parser to evaluate top-down versus LC parsing. Naturally, an LC g r a m m a r performs best with our parser when right binarized, for the same reasons outlined above. We use transform composition to apply first one transform, then another to the o u t p u t of the first. We denote this A o B where (A o B) (t) = B (A (t)). After applying the left-corner transform, we then binarize the resulting gram- mar 5, i.e. LC o RB.

Another probabilistic LC parser investigated (Manning and Carpenter, 1997), which uti- lized an LC parsing architecture (not a trans- formed grammar), also got a p e r f o r m a n c e b o o s t

4The very efficient b o t t o m - u p statistical parser de- tailed in Charniak et al. (1998) measured efficiency in terms of total edges popped. A n edge (or, in our case, a

parser state) is considered when a probability is calcu-

lated for it, and we felt t h a t this was a better efficiency measure t h a n simply those popped. As a baseline, their

parser considered an average of 2216 edges per sentence

in section 22 of the WSJ corpus (p.c.).

[image:4.612.82.553.75.177.2]Transform Rules in Pct. of Avg. States Avg Labelled Avg. MLP Ratio of Avg.

Grammar Sentences Considered Precision and Labelled Prob to Avg.

Parsed* Recall t Prec/Rec t MLP Prob t

Left Corner (LC) 21797 91.75 9000 .76399 .78156 .175928

LB o LC 53026 96.75 7865 .77815 .78056 .359828

LC o RB 53494 96.7 8125 .77830 .78066 .359439

LC o RB o ANN 55094 96.21 7945 .77854 .78094 .346778

RB o LC 86007 93.38 4675 .76120 .80529

* L e n g t h _ 40 (2245 s e n t e n c e s in F23 - Avg. l e n g t h ---- 21.68 B e a m F a c t o r ---- 1 0 - 4

.267330 tOf those sentences parsed

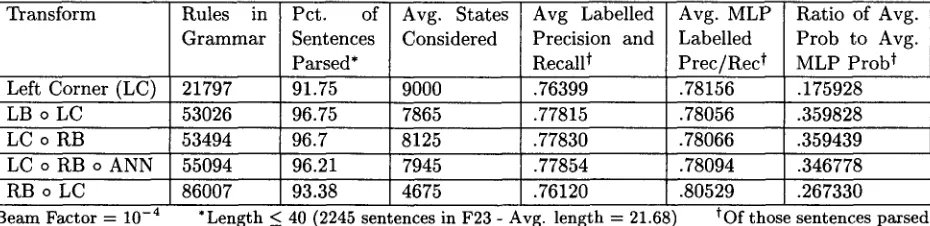

Table 2: Left Corner Results

through right binarization. This, however, is equivalent to RB o LC, which is a very differ- ent g r a m m a r from LC o RB. Given our two bi- narization orientations (LB and RB), there are four possible compositions of binarization and LC transforms:

(a) LB o LC (b) RB o LC (c) LC o LB (d) LC o RB

Table 2 shows left-corner results over various conditions 6. Interestingly, options (a) and (d) encode the same information, leading to nearly identical performance 7. As s t a t e d before, right binarization moves the rule announce point from before to after all of the children. The LC transform is such that LC o RB also delays

parent identification until after all of the chil-

dren. The transform LC o RB o ANN moves the parent announce point back to the left corner by introducing u n a r y rules at the left corner t h a t simply identify the parent of the binarized rule. This allows us to test the effect of the position of the parent announce point on the performance of the parser. As we can see, however, the ef- fect is slight, with similar performance on all measures.

RB o LC performs with higher accuracy than the others when used with an exhaustive parser, b u t seems to require a massive b e a m in order to even approach performance at the MLP level. Manning and C a r p e n t e r (1997) used a b e a m width of 40,000 parses on the success heap at each input item, which must have resulted in an order of m a g n i t u d e more rule expansions t h a n w h a t we have b e e n considering up to now, and

6 O p t i o n (c) is n o t t h e a p p r o p r i a t e k i n d of b i n a r i z a t i o n for o u r p a r s e r , as a r g u e d in t h e p r e v i o u s section, a n d so is o m i t t e d .

7 T h e difference is d u e t o t h e i n t r o d u c t i o n of v a c u o u s u n a r y r u l e s w i t h R B .

yet their average labelled precision and recall (.7875) still fell well below what we found to be the MLP accuracy (.7987) for the grammar. We are still investigating why this g r a m m a r func- tions so poorly when used by an incremental parser.

3.3 N o n - l o c a l a n n o t a t i o n

Johnson (1998) discusses the improvement of PCFG models via the a n n o t a t i o n of non-local in- formation onto non-terminal nodes in the trees of the training corpus. One simple example is to copy the parent node onto every non- terminal, e.g. the rule S ~ N P V P becomes

S ~ N P ~ S V P ~ S . The idea here is that the

distribution of rules of expansion of a particular non-terminal may differ depending on the non- terminal's parent. Indeed, it was shown that this additional information improves the MLP accuracy dramatically.

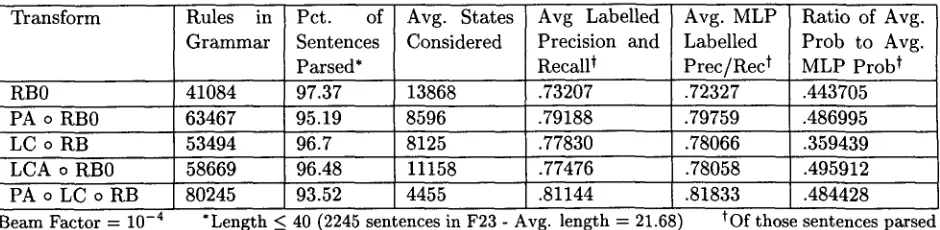

We looked at two kinds of non-local infor- mation annotation: parent (PA) and left-corner (LCA). Left-corner parsing gives improved accu- racy over top-down or b o t t o m - u p parsing with the same grammar. W h y ? One reason m a y be that the ancestor category exerts the same kind of non-local influence u p o n the parser t h a t the parent category does in parent annotation. To test this, we a n n o t a t e d the left-corner ancestor category onto every leftmost non-terminal cat- egory. The results of our a n n o t a t i o n trials are shown in table 3.

[image:5.612.67.532.77.190.2]Transform Rules in Pct. of Avg. States Avg Labelled Avg. MLP Ratio of Avg.

Grammar Sentences Considered Precision and Labelled Prob to Avg.

Parsed* Recall t Prec/Rec t MLP Prob t

RB0 41084 97.37 13868 .73207 .72327 .443705

PA o RB0 63467 95.19 8596 .79188 .79759 .486995

LC o RB 53494 96.7 8125 .77830 .78066 .359439

LCA o RB0 58669 96.48 11158 .77476 .78058 .495912

PA o LC o RB 80245 93.52 4455 .81144 .81833 .484428

Beam Factor -- 10 -4 *Length ~ 40 (2245 sentences in F23 - Avg. length -= 21.68) tOf those sentences parsed

Table 3: Non-local a n n o t a t i o n results

to the final p r o d u c t . T h e a n n o t a t e d g r a m m a r has 1.5 times as m a n y rules, and would slow a b o t t o m - u p CKY parser proportionally. Yet our parser actually considers far fewer states en route to the more accurate parse.

Second, LC-annotation gives nearly all of the accuracy gain of left-corner parsing s, in s u p p o r t of the hypothesis t h a t the ancestor information was responsible for the observed accuracy im- provement. This result suggests that if we can d e t e r m i n e the information t h a t is being anno- t a t e d b y the t r o u b l e s o m e RB o LC transform, we m a y b e able to get the accuracy improve- ment with a relatively narrow beam. Parent- a n n o t a t i o n before the LC transform gave us the best p e r f o r m a n c e of all, with very few states considered on average, and excellent accuracy for a non-lexicalized grammar.

4 A c c u r a c y / E f f i c i e n c y t r a d e o f f

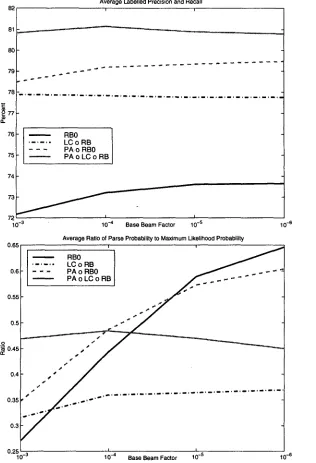

One point t h a t deserves to b e made is t h a t there is something of an accuracy/efficiency tradeoff with regards to the base b e a m factor. T h e re- sults given so far were at 10 -4 , which func- tions p r e t t y well for the transforms we have investigated. Figures 2 and 3 show four per- formance measures for four of our transforms at base b e a m factors of 10 -3 , 10 -4 , 10 -5 , and

10 -6. There is a dramatically increasing effi-

ciency b u r d e n as the b e a m widens, with vary- ing degrees of payoff. W i t h the t o p - d o w n trans- forms (RB0 and PA o RB0), the ratio of the av- erage p r o b a b i l i t y to the MLP probability does improve substantially as the b e a m grows, yet with only marginal improvements in coverage and accuracy. Increasing the b e a m seems to do less with the left-corner transforms.

SThe rest could very well be within noise.

5 C o n c l u s i o n s a n d F u t u r e R e s e a r c h We have examined several probabilistic predic- tive parser variations, and have shown the ap- proach in general to b e a viable one, b o t h in terms of the quality of the parses, and the ef- ficiency with which t h e y are found. We have shown t h a t the improvement of the g r a m m a r s with non-local information not only results in b e t t e r parses, b u t guides the parser to t h e m much more efficiently, in contrast to d y n a m i c p r o g r a m m i n g methods. Finally, we have shown t h a t the accuracy improvement t h a t has b e e n d e m o n s t r a t e d with left-corner approaches can be a t t r i b u t e d to the non-local information uti- lized by the m e t h o d .

This is relevant to the s t u d y of the h u m a n sentence processing mechanism insofar as it d e m o n s t r a t e s t h a t it is possible to have a model which makes explicit the syntactic relationships b e t w e e n items in the input incrementally, while still scaling up to broad-coverage.

F u t u r e research will include: • lexicalization of the parser

• utilization of fully connected trees for ad- ditional syntactic and semantic processing • the use of syntactic predictions in the b e a m

for language modeling

• an examination of predictive parsing with a left-branching language (e.g. G e r m a n ) In addition, it m a y be of interest to the psy- cholinguistic c o m m u n i t y if we introduce a time variable into our model, and use it to compare such competing sentence processing models as race-based and c o m p e t i t i o n - b a s e d parsing.

R e f e r e n c e s

[image:6.612.82.552.74.189.2]x l O 4 Average States Considered per Sentence

98

9 6

9 4

14 i i

R B 0 . . . L C 0 R B 12 - - - P A 0 R B 0

. . . P A 0 L C 0 R B

10

8

6

4 q " -

0 r -

10 - 3 10 .-4 Base Beam Factor 10 - s 1 0 - 6

Percentage of Sentences Parsed

100

R B 0 . . . L C o R B

[image:7.612.128.445.75.549.2]- - - P A o R B 0 ~ ~ .,,,, =

... P A o L C o R B ~,~"~,...,.,"'~, . . . . . ~ ~ .

92 ~ ,4"~

9 0

880_ 3 I =

10 ..4 Base Beam Factor 10 - 5 10 -6

Figure 2: Changes in performance with beam factor variation

M. Britt. 1994. The interaction of referential ambiguity and argument structure.

Journal

o/ Memory and Language,

33:251-283.E. Charniak, S. Goldwater, and M. Johnson. 1998. Edge-based best-first chart parsing. In

Proceedings of the Sixth Workshop on Very

Large Corpora,

pages 127-133.S. Crain and M. Steedman. 1985. On not be- ing led up the garden path: The use of con- text by the psychological parser. In D. Dowty,

L. Karttunen, and A. Zwicky, editors,

Natu-

ral Language Parsing.

Cambridge UniversityPress, Cambridge, UK.

M. Johnson. 1998. P C F G models of linguistic tree representations.

Computational Linguis-

tics,

24:617-636.A v e r a g e Labelled Precision and R e c a l l

82 , ,

81

80

79

78

o~ 7~

(1.

76

75

74

73

72 10"-3

0.65

0.6

0.55

0.5

.o 0,45

rr

0 . 4

0.35

0.3

0.25 10 -3

R B 0

. . . L C o R B

- - - P A o R B 0

P A O L C o R B

i

10 -4

i

B a s e B e a m F a c t o r 1 0 - 6 10 -s

A v e r a g e R a t i o of P a r s e Probability to Maximum Likelihood Probability

, R B 0 -' '

. . . L C o R B

- - - P A o R B 0 / ~ ~ . - "

I I

10 -4 Base Beam Factor 10 -s 10 -6

Figure 3: Changes in performance with beam factor variation

M.P. Marcus, B. Santorini, and M.A.

Marcinkiewicz. 1 9 9 3 . Building a large

annotated corpus of English: The Penn

Treebank. Computational Linguistics,

19(2):313-330.

A. Nijholt. 1980. Context-/tee Grammars: Cov-

ers, Normal Forms, and Parsing. Springer Verlag, Berlin.

S.J. Rosenkrantz and P.M. Lewis II. 1970. De-

terministic left corner parsing. In IEEE Con-

ference Record of the 11th Annual Symposium

on Switching and Automata, pages 139-152. M. Steedman. 1989. Grammar, interpreta-

tion, and processing from the lexicon. In

W. Marslen-Wilson, editor, Lexical represen-

tation and process. MIT Press, Cambridge, MA.

M. Tanenhaus, M. Spivey-Knowlton, K. Eber- hard, and J. Sedivy. 1995. Integration of vi- sual and linguistic information during spoken

language comprehension. Science, 268:1632-

[image:8.612.165.481.80.543.2]