VIS-PROCLUS: A NOVEL PROFILING SYSTEM FOR INSTIGATING USER PROFILES FROM SEARCH ENGINE LOGS BASED ON QUERY SENSE


Dr. S. K. JAYANTHI

Associate Professor and Head, Computer Science Department, Vellalar College for Women, Erode, India

+0-91-9442350901

Ms. S. PREMA

Assistant Professor, Computer Science Department, KSR College of Arts and Science, Tiruchengode, India

+0-91-9842979939, prema_shanmuga@yahoo.com

Abstract:

Most commercial search engines return roughly the same results for the same query, regardless of the user’s real interest. This paper focuses on a user profiling strategy that lets browsers obtain web search results based on their profiles, displayed in visual mode. Concept-based user profiles can be mined to perform mutual filtering, so that browsers with the same interests and domain can share their knowledge. The paper concentrates on search engine personalization, using the existing user profiles to obtain the interests and domain of each user. Finally, the concept-based user profiles can be incorporated into the Vis (Visual) ranking algorithm of a search engine, so that search results are ranked according to individual users’ interests and displayed in visual mode.

Keywords: User Profile; Bookshelf Data Structure; Visualization; Clustering; Vis-Ranking; Search Engine.

1. Introduction

Most commercial search engines return the same results for the same query, regardless of the user’s interest. Queries submitted to search engines tend to be short and ambiguous, so they are unlikely to express the user’s precise needs. For example, an interior designer may use the query “windows” to find information about models of windows. A software engineer may use the same query to find information about the Windows operating system. Personalized search is an important research area that aims to resolve this ambiguity of query terms. To increase the relevance of search results, personalized search engines create user profiles that capture the users’ personal preferences and thereby identify the actual goal of the input query. A good user profiling strategy is therefore an essential and fundamental component of search engine personalization.
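The Java sketch below makes the “windows” example concrete by re-ranking one result list under two different concept-based profiles. It is an illustration under stated assumptions, not the Vis-Ranking algorithm described later. The following are all hypothetical:

- the `ProfileReranker` class;
- the additive scoring rule (engine score plus the user's interest weights for matching concepts);
- the sample concepts.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch: personalise the order of results for an ambiguous query
// by adding the user's interest weights for the concepts each result mentions.
final class ProfileReranker {

    // A result with the engine's (non-personalised) score and its concepts.
    record Result(String title, double baseScore, Set<String> concepts) {}

    // ASSUMPTION: personalised score = base score + sum of profile weights of matching concepts.
    static double personalScore(Result r, Map<String, Double> profile) {
        double bonus = 0.0;
        for (String concept : r.concepts()) {
            bonus += profile.getOrDefault(concept, 0.0);
        }
        return r.baseScore() + bonus;
    }

    // Returns a new list ordered by descending personalised score.
    static List<Result> rerank(List<Result> results, Map<String, Double> profile) {
        List<Result> sorted = new ArrayList<>(results);
        sorted.sort(Comparator.comparingDouble((Result r) -> personalScore(r, profile)).reversed());
        return sorted;
    }

    public static void main(String[] args) {
        List<Result> results = List.of(
                new Result("Windows operating system overview", 1.0, Set.of("operating system", "software")),
                new Result("Choosing bay windows for your home", 0.9, Set.of("interior design", "home")));
        Map<String, Double> designer = Map.of("interior design", 1.0);
        Map<String, Double> engineer = Map.of("operating system", 1.0, "software", 0.5);
        System.out.println("designer: " + rerank(results, designer).get(0).title());
        System.out.println("engineer: " + rerank(results, engineer).get(0).title());
    }
}
```

With the designer profile the bay-window page moves to the top. With the engineer profile the operating-system page stays first. An additive bonus is the simplest possible choice; a real system would normally normalize profile weights against the engine score.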

2. Search engine personalization

2.1. Information Retrieval

Information Retrieval (IR) is the science of searching for documents, for information within documents, and for metadata about documents. It also covers searching relational databases [2] and the World Wide Web. Information is better utilized when it is processed to be easier to find, better organized, or summarized for easier digestion. Text and web mining problems in particular use methodologies that often span these areas.

2.2. User profile extraction from the query evaluation engine

the existing logs and page ranking, as in Fig. 1. No user is attached to a particular outcome, since users are free to move from one locality to another. However, with carefully designed user profile extraction, the recommendation covers the majority of the user session. The pages are ranked using the Vis algorithm of Eq. (1) [1]:

Fig. 1. User Profile System

$$\mathrm{Rank} = \sum_{i=1}^{n} \mathrm{Gradevalue}(i)\, p^{\mathrm{Marks}(i)} \tag{1}$$

In the formula:

- $\mathrm{Marks}(i)$ represents the total marks obtained by the student.
- $\mathrm{Gradevalue}(i)$ represents the number of hits for a page during $\mathrm{Marks}(i)$.
- $p$ is a predefined probability value between 0 and 1.

Because $0 < p < 1$, the weight $p^{\mathrm{Marks}(i)}$ shrinks geometrically as $\mathrm{Marks}(i)$ grows, so only the nearest past plays a crucial role in ranking.
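Eq. (1) can be evaluated directly. The Java sketch below assumes that Gradevalue(i) and Marks(i) are supplied as two parallel arrays. The `DecayRank` class and its method name are hypothetical.

```java
// Sketch of Eq. (1): Rank = sum_{i=1..n} Gradevalue(i) * p^Marks(i).
// ASSUMPTION: the n terms are passed as two parallel, 0-indexed arrays.
final class DecayRank {

    static double rank(double[] gradeValue, double[] marks, double p) {
        if (gradeValue.length != marks.length) {
            throw new IllegalArgumentException("gradeValue and marks must have the same length");
        }
        if (p <= 0.0 || p >= 1.0) {
            throw new IllegalArgumentException("p must lie strictly between 0 and 1");
        }
        double rank = 0.0;
        for (int i = 0; i < gradeValue.length; i++) {
            // Hits of term i, discounted geometrically by p^Marks(i).
            rank += gradeValue[i] * Math.pow(p, marks[i]);
        }
        return rank;
    }

    public static void main(String[] args) {
        // 10 * 0.5^1 + 5 * 0.5^2 = 5 + 1.25 = 6.25
        System.out.println(rank(new double[] {10, 5}, new double[] {1, 2}, 0.5));
    }
}
```

Consider 10 hits with Marks = 1 and 5 hits with Marks = 2, at p = 0.5. These give 10 · 0.5 + 5 · 0.25 = 6.25, so each hit in the second term counts only half as much as a hit in the first.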

2.3. Web Structure Mining

The goal of Web structure mining is to generate a structural summary of the Web site and Web page, as shown in Fig. 2. Technically, Web content mining mainly focuses on the intra-document structure. Web structure mining instead tries to discover the link structure of the hyperlinks at the inter-document level. Web structure mining describes the connectivity in a Web subset, based on a given collection of interconnected Web documents. The structural information generated by Web structure mining includes the following:

(1) The information measuring the frequency of the local links in the Web tuples in a Web table [3].

(2) The information measuring the frequency of Web tuples in a Web table that contain interior links, i.e., links within the same document.

(3) The information measuring the frequency of Web tuples in a Web table that contain global links, i.e., links that span different Web sites.

(4) The information measuring the frequency of identical Web tuples that appear in a Web table or among Web tables.
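The Java sketch below illustrates the interior/local/global distinction in items (1) to (3) at the level of a single page. It is a simplification under assumptions, not the Web-table model of [3]. It resolves each `href` against the page URL with `java.net.URI` and classifies each link as follows:

- interior, if it stays in the same document;
- local, if it stays on the same host;
- global, otherwise.

`LinkStats` and its methods are hypothetical names.

```java
import java.net.URI;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Hypothetical sketch: classify a page's hyperlinks as interior (same document),
// local (same host) or global (different host) and count each kind.
final class LinkStats {

    enum Kind { INTERIOR, LOCAL, GLOBAL }

    static Kind classify(URI page, String href) {
        URI target = page.resolve(href);   // relative links are resolved against the page
        boolean sameHost = Objects.equals(page.getHost(), target.getHost());
        if (sameHost && Objects.equals(page.getPath(), target.getPath())) {
            return Kind.INTERIOR;          // e.g. "#top" points into the same document
        }
        return sameHost ? Kind.LOCAL : Kind.GLOBAL;
    }

    static Map<Kind, Integer> frequencies(URI page, List<String> hrefs) {
        Map<Kind, Integer> counts = new EnumMap<>(Kind.class);
        for (Kind k : Kind.values()) counts.put(k, 0);
        for (String href : hrefs) counts.merge(classify(page, href), 1, Integer::sum);
        return counts;
    }

    public static void main(String[] args) {
        URI page = URI.create("http://example.com/docs/index.html");
        List<String> hrefs = List.of("#top", "guide.html", "/about.html", "http://example.org/");
        System.out.println(frequencies(page, hrefs));   // {INTERIOR=1, LOCAL=2, GLOBAL=1}
    }
}
```

Host names are compared literally, so `www.example.com` and `example.com` count as different sites. A real crawler would normalize host names first.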

[Figure: Query Evaluation Engine, Web resources, Refined Topology, Web Repository, Data Mining, URL Resolve, Ranked Pages Extracted, User Profile, Tactical and Strategic Adoption, Web Site Ontology, Web Ontology]

3. Related Work

Data clustering is an established field. Jain et al. [4, 5] cover the topic thoroughly from the point of view of cluster analysis theory, and they divide the methodologies mainly into partitional and hierarchical clustering methods. Kosala and Blockeel [7] touch upon many aspects of web mining and show the differences between web content mining, web structure mining, and web usage mining. Text mining research in general relies on the vector space model, first proposed by Salton et al. in 1975 [10, 9, 8], which models text documents as vectors in a feature space. Posing the problem as a distributed data mining problem, as opposed to the traditional centralized approach, is largely due to Kargupta et al. [6]. In 2011, Prema et al. [11] proposed keyword search over a large collection of tables using a Referenced Attribute Functional Dependency Database (RFDDb). They showed that it can achieve substantially higher relevance than solutions based on a traditional search engine.

4. Mining unstructured data

Web users can perform knowledge discovery on unstructured data with the help of tools such as Intelligent Miner (IM) for Text and, for continuous speech audio, ViaVoice. Other technologies, such as handwriting recognition, content-based querying for images and time series, image classification, and video indexing, are in various stages of readiness for widespread use. There are three fundamental text mining operations: clustering, categorization, and information retrieval.

4.1. Clustering

Clustering turns unstructured document collections into thematically organized groups, as shown in Fig. 3. These groups provide a summary view of the documents in each group and facilitate effective and efficient navigation.
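The Java sketch below illustrates clustering in the vector space model [8, 9, 10]. It performs single-pass clustering of term-frequency vectors with cosine similarity. The threshold value, the pre-tokenized input, and the `SinglePassClustering` class are assumptions for the example, not part of the described system.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Single-pass clustering of term-frequency vectors with cosine similarity.
// ASSUMPTIONS: documents are already tokenised; the threshold is chosen by the caller.
final class SinglePassClustering {

    // Term-frequency vector of one document.
    static Map<String, Integer> tf(List<String> tokens) {
        Map<String, Integer> v = new HashMap<>();
        for (String t : tokens) v.merge(t, 1, Integer::sum);
        return v;
    }

    static double cosine(Map<String, Integer> a, Map<String, Integer> b) {
        double dot = 0, normA = 0, normB = 0;
        for (Map.Entry<String, Integer> e : a.entrySet()) {
            dot += e.getValue() * b.getOrDefault(e.getKey(), 0);
            normA += e.getValue() * e.getValue();
        }
        for (int x : b.values()) normB += x * x;
        return (normA == 0 || normB == 0) ? 0 : dot / Math.sqrt(normA * normB);
    }

    // Each document joins the most similar cluster (compared with the cluster's summed TF
    // vector) if the similarity reaches the threshold; otherwise it starts a new cluster.
    static List<List<Integer>> cluster(List<List<String>> docs, double threshold) {
        List<List<Integer>> members = new ArrayList<>();
        List<Map<String, Integer>> centroids = new ArrayList<>();
        for (int d = 0; d < docs.size(); d++) {
            Map<String, Integer> v = tf(docs.get(d));
            int best = -1;
            double bestSim = -1;
            for (int c = 0; c < centroids.size(); c++) {
                double sim = cosine(v, centroids.get(c));
                if (sim >= threshold && sim > bestSim) {
                    best = c;
                    bestSim = sim;
                }
            }
            if (best < 0) {
                members.add(new ArrayList<>(List.of(d)));
                centroids.add(new HashMap<>(v));
            } else {
                members.get(best).add(d);
                Map<String, Integer> centroid = centroids.get(best);
                v.forEach((term, count) -> centroid.merge(term, count, Integer::sum));
            }
        }
        return members;
    }

    public static void main(String[] args) {
        List<List<String>> docs = List.of(
                List.of("windows", "glass", "frame"),
                List.of("windows", "kernel", "driver"),
                List.of("glass", "frame", "curtain"),
                List.of("kernel", "driver", "linux"));
        System.out.println(cluster(docs, 0.5));
    }
}
```

With a threshold of 0.5, the two documents about window glass and frames end up together, and so do the two about kernels and drivers. Single-pass clustering depends on document order. This order sensitivity is one reason to compare it with hierarchical and other methods.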

4.2. Categorization

Categorization is the process in which ideas and objects are recognized, differentiated and understood. Categorization, as shown in Fig. 4, implies that objects are grouped into categories, usually for some specific purpose. Ideally, a category illuminates a relationship between the subjects and objects of knowledge. Categorization is fundamental in language, prediction, inference, decision making and in all kinds of environmental interaction. There are many categorization theories and techniques. In a broader historical view, however, three general approaches to categorization may be identified: classical categorization, conceptual clustering, and prototype theory.
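Prototype theory, the third approach listed above, can be sketched as nearest-prototype assignment. Each category is represented by a prototype term set, and an object goes to the category whose prototype it overlaps most. In the Java sketch below, the Jaccard similarity, the category names, and the `PrototypeCategorizer` class are illustrative assumptions.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Sketch of prototype-based categorization: each category is a prototype term set and an
// object is assigned to the most similar prototype. ASSUMPTION: Jaccard similarity.
final class PrototypeCategorizer {

    static double jaccard(Set<String> a, Set<String> b) {
        Set<String> union = new HashSet<>(a);
        union.addAll(b);
        if (union.isEmpty()) return 0.0;
        Set<String> common = new HashSet<>(a);
        common.retainAll(b);
        return (double) common.size() / union.size();
    }

    // Returns the category whose prototype best matches the object's terms.
    static String categorize(Set<String> terms, Map<String, Set<String>> prototypes) {
        String best = null;
        double bestSim = -1.0;
        for (Map.Entry<String, Set<String>> e : prototypes.entrySet()) {
            double sim = jaccard(terms, e.getValue());
            if (sim > bestSim) {
                bestSim = sim;
                best = e.getKey();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> prototypes = Map.of(
                "operating systems", Set.of("windows", "kernel", "driver", "boot"),
                "interior design", Set.of("windows", "curtain", "glass", "frame"));
        System.out.println(categorize(Set.of("windows", "glass", "frame"), prototypes));
    }
}
```

Ties are broken by map iteration order, so a production version would need an explicit tie-breaking rule.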

Fig. 3. Clustering

Fig. 4. Categorization

4.3. Information retrieval

Information retrieval (IR) is the area of study concerned with searching for documents, for information within documents, and for metadata about documents (Fig. 5). It is also concerned with searching relational databases and the World Wide Web. The usage of the terms data retrieval, document retrieval, information retrieval, and text retrieval overlaps, but each also has its own body of literature, theory, praxis, and technologies (Fig. 6). IR is interdisciplinary. It draws on computer science, mathematics, library science, information science, information architecture, cognitive psychology, linguistics, and statistics.
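Indexing and searching (Fig. 6) are commonly built on an inverted index that maps each term to the documents containing it. The minimal Java sketch below uses a hypothetical `InvertedIndex` class. It lower-cases text, splits on non-word characters, and answers Boolean AND queries by intersecting posting sets. It does no ranking, stemming, or stop-word removal.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

// Minimal inverted index: term -> sorted set of document ids, with Boolean AND search.
final class InvertedIndex {
    private final Map<String, SortedSet<Integer>> postings = new HashMap<>();

    // Indexing: lower-case the text, split on non-word characters, record postings.
    void add(int docId, String text) {
        for (String term : text.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, t -> new TreeSet<>()).add(docId);
            }
        }
    }

    // Searching: documents that contain every query term (intersection of postings).
    SortedSet<Integer> search(String query) {
        SortedSet<Integer> result = null;
        for (String term : query.toLowerCase(Locale.ROOT).split("\\W+")) {
            if (term.isEmpty()) continue;
            SortedSet<Integer> docs = postings.getOrDefault(term, new TreeSet<>());
            if (result == null) {
                result = new TreeSet<>(docs);
            } else {
                result.retainAll(docs);
            }
        }
        return result == null ? new TreeSet<>() : result;
    }

    public static void main(String[] args) {
        InvertedIndex index = new InvertedIndex();
        index.add(1, "Windows operating system kernel");
        index.add(2, "Bay windows and glass frames");
        index.add(3, "Linux operating system");
        System.out.println(index.search("operating system"));
    }
}
```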

Fig. 5. Information Retrieval

Fig. 6. Indexing and Searching

5. Document Clustering

5.1. Host Distribution Model

The host distribution model follows a peer-to-peer model. The system is represented as a connected graph $G(H, L)$, where

$H$ is the set of hosts $\{h_i\}$, $i = 1, \dots, H_n$;

$L$ is the set of links $\{l_{ij}\}$ between every pair of hosts $h_i$ and $h_j$, $i, j = 1, \dots, H_n$, $i \neq j$.

As in a peer-to-peer framework, the hosts connected in the network are equally weighted and are assumed to have identical roles. The hosts are interconnected and exchange information for effective information retrieval. The model also assumes that each host knows how to find every other host.
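The host model can be written down directly as a data structure. The Java sketch below builds G(H, L) for H_n hosts with one link for every pair i ≠ j. Two things are assumptions: treating l_ij and l_ji as separate directed links, and the `HostGraph` and `Link` names. Under that reading, |L| = H_n(H_n - 1); for example, 4 hosts give 12 links.

```java
import java.util.ArrayList;
import java.util.List;

// Host model G(H, L): hosts 1..hn and one link l_ij for every ordered pair i != j.
// ASSUMPTION: l_ij and l_ji are distinct directed links.
final class HostGraph {

    record Link(int from, int to) {}

    static List<Link> completeLinks(int hn) {
        List<Link> links = new ArrayList<>();
        for (int i = 1; i <= hn; i++) {
            for (int j = 1; j <= hn; j++) {
                if (i != j) links.add(new Link(i, j));   // no self-links
            }
        }
        return links;
    }

    public static void main(String[] args) {
        System.out.println(completeLinks(4).size());    // 4 * 3 = 12
    }
}
```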

Every host in the network has access to the document collection based on the lexicon. The network is a connected graph in which every host is interconnected with every other host. The clustering then proceeds in the following steps:

1. Every host starts by generating a local clustering of its documents based on the user profile strategy, as shown in Fig. 7. The purpose is to improve the clustering solution at each host by allowing peers to receive recommended documents, so that the local clustering quality is maximized.
2. The local, profile-based clusters are summarized by lexicon construction.
3. Each host computes the similarity between the cluster summaries it receives and its own set of documents. It then recommends to each nearest neighbor the set of documents that can benefit that peer's clustering solution.
4. The receiving peers decide which documents should be merged into their own clusters.

Eventually, each host should have an improved set of clusters over the initial solution, facilitated by access to global information from its nearest neighbors. All the relevant clusters based on the user profile are arranged in the bookshelf data structure to present the final result in visual mode.
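One round of the recommendation step can be sketched as follows. This is a hypothetical Java illustration, not the paper's lexicon construction. It works in three parts:

- A peer summarizes each cluster as its top-k terms by document frequency.
- The receiving host scores each of its documents by the fraction of summary terms the document contains.
- Documents scoring at least `minScore` are recommended back for that cluster.

The scoring rule, k, `minScore`, and all names are assumptions.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical sketch of one recommendation round between peers (scoring rule is assumed).
final class PeerRecommender {

    // Peer side: summarise a cluster as its k terms with the highest document frequency.
    static List<String> summarize(List<List<String>> clusterDocs, int k) {
        Map<String, Integer> docFreq = new HashMap<>();
        for (List<String> doc : clusterDocs) {
            for (String term : new HashSet<>(doc)) docFreq.merge(term, 1, Integer::sum);
        }
        List<String> terms = new ArrayList<>(docFreq.keySet());
        terms.sort((a, b) -> {
            int byFreq = Integer.compare(docFreq.get(b), docFreq.get(a)); // frequent first
            return byFreq != 0 ? byFreq : a.compareTo(b);                 // then alphabetical
        });
        return new ArrayList<>(terms.subList(0, Math.min(k, terms.size())));
    }

    // Fraction of summary terms that occur in a document.
    static double score(Set<String> docTerms, List<String> summary) {
        if (summary.isEmpty()) return 0.0;
        int hits = 0;
        for (String t : summary) if (docTerms.contains(t)) hits++;
        return (double) hits / summary.size();
    }

    // Receiving side: for each peer cluster, the local documents worth sending back.
    static Map<String, List<String>> recommend(Map<String, List<String>> peerSummaries,
                                               Map<String, Set<String>> localDocs,
                                               double minScore) {
        Map<String, List<String>> out = new TreeMap<>();
        for (Map.Entry<String, List<String>> s : peerSummaries.entrySet()) {
            List<String> picked = new ArrayList<>();
            for (Map.Entry<String, Set<String>> d : localDocs.entrySet()) {
                if (score(d.getValue(), s.getValue()) >= minScore) picked.add(d.getKey());
            }
            Collections.sort(picked);
            out.put(s.getKey(), picked);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> summary = summarize(List.of(
                List.of("windows", "kernel", "driver"),
                List.of("kernel", "driver", "boot")), 2);
        Map<String, Set<String>> localDocs = Map.of(
                "d1", Set.of("kernel", "driver", "linux"),
                "d2", Set.of("glass", "frame"));
        System.out.println(recommend(Map.of("os-cluster", summary), localDocs, 0.5));
    }
}
```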

[Figure: Information Retrieval, Documents, Databases, Browser 1, Browser 2, Resources, Server, Index 1, Index 2, Index documents, Indexing]

Fig. 7. Document Clustering

5.2. Cluster Model

After clustering its documents, each host holds a set of document clusters that best fit its local document collection. Thus, each host $h_i$ maintains a set of clusters $C_i = \{c_r\}$, $r = 1, \dots, H_{C_i}$. Document-to-cluster membership is represented as a relation $R(D, C)$. This relation is a binary membership matrix $M = [m_{k,r}]$, where $m_{k,r} = 1$ if document $d_k$ belongs to cluster $c_r$ and $m_{k,r} = 0$ otherwise.
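The membership matrix M supports the two queries the clustering step needs. A row of M gives the clusters a document belongs to, and a column gives the documents a cluster contains. The Java sketch below stores M as a dense `int` array, an assumption that only suits small collections; a sparse map would scale better. `MembershipMatrix` is a hypothetical name, and documents and clusters are indexed from 0.

```java
import java.util.ArrayList;
import java.util.List;

// Binary membership matrix M = [m_{k,r}] of the relation R(D, C).
// ASSUMPTION: dense storage with 0-based document and cluster indices.
final class MembershipMatrix {
    private final int[][] m;   // m[k][r] is 1 if document k belongs to cluster r, else 0

    MembershipMatrix(int documents, int clusters) {
        m = new int[documents][clusters];
    }

    void assign(int k, int r) { m[k][r] = 1; }

    boolean isMember(int k, int r) { return m[k][r] == 1; }

    // Row sum: how many clusters document d_k belongs to (0 = not yet clustered).
    int clusterCount(int k) {
        int sum = 0;
        for (int x : m[k]) sum += x;
        return sum;
    }

    // Column scan: indices of the documents that belong to cluster c_r.
    List<Integer> members(int r) {
        List<Integer> docs = new ArrayList<>();
        for (int k = 0; k < m.length; k++) {
            if (m[k][r] == 1) docs.add(k);
        }
        return docs;
    }

    public static void main(String[] args) {
        MembershipMatrix mm = new MembershipMatrix(3, 2);
        mm.assign(0, 0);
        mm.assign(1, 0);
        mm.assign(1, 1);   // d_1 belongs to two clusters
        System.out.println(mm.members(0) + " " + mm.clusterCount(1) + " " + mm.clusterCount(2));
    }
}
```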

5.3. Cluster Summarization Using Lexicon Construction

Once the initial clusters have been formed at each host, a process of cluster summarization is conducted. The summary of each cluster is represented as a set of core lexicons that accurately describe the topic of the cluster. A lexicon construction algorithm is proposed for cluster summarization because it produces a more accurate representation of clusters. The lexicons are ranked based on the scores collected from the local candidates. Each host starts by forming a local clustering solution, to be enriched later by mutual clustering. Several traditional clustering methods were investigated, and they achieved comparable results: hierarchical, single-pass, k-nearest neighbor, and similarity histogram-based clustering (SHC) [12]. Hierarchical clustering usually performed slightly better than the other three. The lexicon construction procedure for document clustering is described below:

Lexicon construction for document clustering

    C_i ← ∅
    for each document d_k ∈ D_i do
        for each cluster c_r ∈ C_i do
            LC_old ← LC(c_r)
            simulate adding d_k to c_r
            LC_new ← LC(c_r)
            if (LC_new ≥ LC_old) OR ((LC_new > LC_min) AND (LC_old - LC_new < ε)) then
                m_{k,r} ← 1                      {add d_k to c_r}
            end if
        end for
        if (M ↓ C_i)_k = 0 then                  {d_k joined no cluster}
            create new cluster c_new containing d_k
            arrange c_new in the bookshelf data structure (BKDS)
        end if
    end for
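The loop above can be made runnable once LC is defined. The text does not define LC, so the Java sketch below assumes LC(c) is the average pairwise Jaccard similarity between the term sets of the documents in c. A one-document cluster gets LC = 1.0. The parameters `lcMin` and `epsilon` play the roles of LC_min and ε. Placing the new cluster in the bookshelf data structure is represented only by appending it to the returned list. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Runnable sketch of the lexicon construction loop. ASSUMPTION: LC(c) is the average pairwise
// Jaccard similarity of the documents' term sets (1.0 for a single-document cluster).
final class LexiconClustering {

    static double jaccard(Set<String> a, Set<String> b) {
        Set<String> union = new HashSet<>(a);
        union.addAll(b);
        if (union.isEmpty()) return 0.0;
        Set<String> common = new HashSet<>(a);
        common.retainAll(b);
        return (double) common.size() / union.size();
    }

    static double lc(List<Set<String>> cluster) {
        if (cluster.size() < 2) return 1.0;
        double sum = 0;
        int pairs = 0;
        for (int i = 0; i < cluster.size(); i++) {
            for (int j = i + 1; j < cluster.size(); j++) {
                sum += jaccard(cluster.get(i), cluster.get(j));
                pairs++;
            }
        }
        return sum / pairs;
    }

    // Returns the clusters as lists of document indices (m_{k,r} = 1 iff k is in cluster r).
    static List<List<Integer>> cluster(List<Set<String>> docs, double lcMin, double epsilon) {
        List<List<Integer>> clusters = new ArrayList<>();              // C_i <- empty
        for (int k = 0; k < docs.size(); k++) {
            boolean joined = false;                                     // row k of M is all 0
            for (List<Integer> c : clusters) {
                List<Set<String>> terms = new ArrayList<>();
                for (int d : c) terms.add(docs.get(d));
                double lcOld = lc(terms);
                terms.add(docs.get(k));                                 // simulate adding d_k
                double lcNew = lc(terms);
                if (lcNew >= lcOld || (lcNew > lcMin && lcOld - lcNew < epsilon)) {
                    c.add(k);                                           // m_{k,r} <- 1
                    joined = true;
                }
            }
            if (!joined) clusters.add(new ArrayList<>(List.of(k)));     // create c_new
        }
        return clusters;
    }

    public static void main(String[] args) {
        List<Set<String>> docs = List.of(
                Set.of("windows", "glass", "frame"),
                Set.of("windows", "glass", "curtain"),
                Set.of("kernel", "driver", "boot"),
                Set.of("kernel", "driver", "linux"));
        System.out.println(cluster(docs, 0.3, 0.6));
    }
}
```

With `lcMin = 0.3` and `epsilon = 0.6`, the two window documents and the two kernel documents form separate clusters. As in the pseudocode, the test is applied to every existing cluster, so a document may join more than one cluster.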

[Figure: Host clustering, document clusters, cluster lexicon summaries, lexicon matching, Vis-Ranking, host documents, bookshelf data structure, visual result]

6. Bookshelf Data Structure

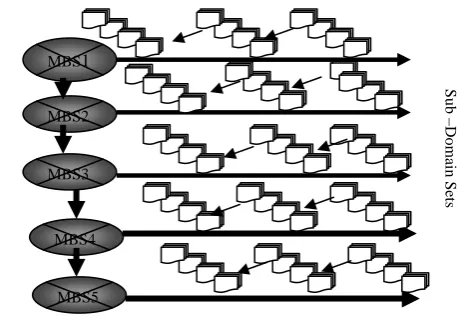

The bookshelf data structure has been introduced for community formation; it stores the inverse indices of the web pages, as shown in Fig. 8. It is formed by combining a matrix and a list with dynamically allocated memory. It extends the hash table and the bipartite core [5] and is used to store the base-domain and sub-domain indices of various communities.

The number of results to process must also be specified, because it is crucial for the organization and visualization choices. A recent study [5] shows that 81.7% of users will try a new search if they are not satisfied with the listings they find within the first 3 pages of results. However, it would be too restrictive to consider only the first 30 results (10 results per page). That study was done on search engines with linear result visualization (ordered lists), and users may want to see more results in 2D or 3D visualizations. Given the lack of studies for 2D and 3D visualizations, this number is currently fixed to the first 50 results, more than the 30 results within which users are satisfied. This small number of results is not a problem for computing the organization itself.
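A simplified version of this idea can be expressed with nested hash maps. Each base domain gets one shelf, and each shelf holds lists of result URLs per sub-domain, filled from at most the first 50 ranked results. The Java sketch below is a hypothetical illustration. It does not reproduce the matrix-and-list layout or the bipartite-core structure. It also takes the last two host labels as the base domain, a simplifying assumption that fails for suffixes such as `co.uk`.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical, simplified bookshelf: base domain -> (sub-domain -> result URLs).
// Only the first MAX_RESULTS ranked results are shelved, following the text.
final class Bookshelf {
    static final int MAX_RESULTS = 50;
    private final Map<String, Map<String, List<String>>> shelves = new TreeMap<>();

    void load(List<String> rankedUrls) {
        for (String url : rankedUrls.subList(0, Math.min(MAX_RESULTS, rankedUrls.size()))) {
            String host = URI.create(url).getHost();
            if (host == null) continue;                           // skip URLs without a host
            String[] labels = host.split("\\.");
            // ASSUMPTION: base domain = last two labels (wrong for suffixes such as co.uk).
            String base = labels.length >= 2
                    ? labels[labels.length - 2] + "." + labels[labels.length - 1]
                    : host;
            shelves.computeIfAbsent(base, b -> new TreeMap<>())
                   .computeIfAbsent(host, h -> new ArrayList<>())
                   .add(url);
        }
    }

    Set<String> baseDomains() { return shelves.keySet(); }

    // Sub-domain shelves of one base domain (empty if the domain is unknown).
    Map<String, List<String>> shelf(String baseDomain) {
        return shelves.getOrDefault(baseDomain, Map.of());
    }

    public static void main(String[] args) {
        Bookshelf shelf = new Bookshelf();
        shelf.load(List.of("http://docs.example.com/a", "http://www.example.com/b",
                "http://news.example.org/c"));
        System.out.println(shelf.baseDomains() + " " + shelf.shelf("example.com"));
    }
}
```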

Fig. 8. Bookshelf Data Structure

7. Vis-Ranking

The pseudocode of the Vis-Ranking algorithm for effective web search results is given below. It is based on the Java implementation in [13], which uses the java.util classes (HashMap, Iterator, List, Map) and the Jama Matrix class.

Vis-Ranking
    DAMPING_FACTOR ← 0.85
    params ← new ArrayList()              // ids of the pages taking part in the ranking
    begin
        ranking ← new Ranking()
        print ranking.rank("C")
    end

rank(String pageId)
begin
    generateParamList(pageId)
    Matrix a ← new Matrix(generateMatrix())
    arrB ← new double[params.size()][1]
    for i ← 0 while i < params.size() do
        arrB[i][0] ← 1 - DAMPING_FACTOR
    end for
    Matrix b ← new Matrix(arrB)
    // solve a · x = b to obtain the page ranks
    Matrix x ← a.solve(b)
    index ← 0; count ← 0
    for each page p in params do
        if p equals pageId then
            index ← count
        end if
        count ← count + 1
    end for
    return x.get(index, 0)
end
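The pseudocode above solves a linear system with Jama. The self-contained Java sketch below replaces Jama with Gaussian elimination. Because `generateMatrix()` is not shown, it assumes the matrix is A = I - d·W, where W[i][j] = 1/C(j) when page j links to page i and C(j) is the out-degree of j. With b_i = 1 - d, this yields the classic PageRank equations PR(i) = (1 - d) + d · Σ PR(j)/C(j). `VisRankSketch` and `rankAll` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.TreeSet;

// Self-contained sketch of the Vis-Ranking linear solve (Jama replaced by Gaussian elimination).
// ASSUMPTION: A = I - d * W with W[i][j] = 1 / outDegree(j) when page j links to page i,
// and b_i = 1 - d, i.e. PR(i) = (1 - d) + d * sum_j PR(j) / C(j).
final class VisRankSketch {
    static final double DAMPING_FACTOR = 0.85;

    // Solves a * x = b in place with partial pivoting.
    static double[] solve(double[][] a, double[] b) {
        int n = b.length;
        for (int col = 0; col < n; col++) {
            int pivot = col;
            for (int r = col + 1; r < n; r++) {
                if (Math.abs(a[r][col]) > Math.abs(a[pivot][col])) pivot = r;
            }
            double[] rowTmp = a[col]; a[col] = a[pivot]; a[pivot] = rowTmp;
            double bTmp = b[col]; b[col] = b[pivot]; b[pivot] = bTmp;
            for (int r = col + 1; r < n; r++) {
                double factor = a[r][col] / a[col][col];
                for (int c = col; c < n; c++) a[r][c] -= factor * a[col][c];
                b[r] -= factor * b[col];
            }
        }
        double[] x = new double[n];
        for (int r = n - 1; r >= 0; r--) {
            double sum = b[r];
            for (int c = r + 1; c < n; c++) sum -= a[r][c] * x[c];
            x[r] = sum / a[r][r];
        }
        return x;
    }

    // Ranks every page that appears as a source or a target in outLinks.
    static Map<String, Double> rankAll(Map<String, List<String>> outLinks) {
        TreeSet<String> pages = new TreeSet<>(outLinks.keySet());
        outLinks.values().forEach(pages::addAll);
        List<String> params = new ArrayList<>(pages);              // the "params" list
        Map<String, Integer> index = new HashMap<>();
        for (int i = 0; i < params.size(); i++) index.put(params.get(i), i);

        int n = params.size();
        double[][] a = new double[n][n];
        double[] b = new double[n];
        for (int i = 0; i < n; i++) {
            a[i][i] = 1.0;
            b[i] = 1.0 - DAMPING_FACTOR;                             // arrB[i][0]
        }
        for (Map.Entry<String, List<String>> e : outLinks.entrySet()) {
            int j = index.get(e.getKey());
            int outDegree = e.getValue().size();
            for (String target : e.getValue()) {
                a[index.get(target)][j] -= DAMPING_FACTOR / outDegree;
            }
        }
        double[] x = solve(a, b);
        Map<String, Double> ranks = new TreeMap<>();
        for (int i = 0; i < n; i++) ranks.put(params.get(i), x[i]);
        return ranks;
    }

    static double rank(Map<String, List<String>> outLinks, String pageId) {
        Double r = rankAll(outLinks).get(pageId);
        if (r == null) throw new IllegalArgumentException("unknown page: " + pageId);
        return r;
    }

    public static void main(String[] args) {
        Map<String, List<String>> links = Map.of(
                "A", List.of("B", "C"),
                "B", List.of("C"),
                "C", List.of("A"));
        System.out.println(rank(links, "C"));   // mirrors ranking.rank("C")
    }
}
```

Consider three pages where A links to B and C, B links to C, and C links to A. The ranks are approximately A = 1.163, B = 0.644, C = 1.192. They sum to the number of pages, as expected when every page has at least one outgoing link.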

8. Findings and Discussion

Based on the user profile, the documents are clustered as shown in Fig. 9. The lexicon is then constructed from the document clusters, as shown in Fig. 10. The semantic web search home page is shown in Fig. 11. On the home page, a user can enter an ambiguous query such as “windows”: an interior designer would use it to find models of windows, and a software engineer to find information about the Windows operating system. After analyzing the semantic web search result, the user may choose to display it as a web graph, as shown in Fig. 12. The node colors are blue for links, red for tables, green for DIV tags, violet for images, and yellow for forms. A series of applets developed according to the D2K framework will allow the analytic tools to be integrated into the Presentation, Research and Visualization interface. These applets will include visualization applets that allow users to drill down to underlying documents. They will also let users map document clusters onto geographic maps, using existing geo-referenced place names in the underlying texts.
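The node color scheme for the web graph can be kept in a single lookup table. In the Java sketch below, two things are assumptions: mapping each element kind to an HTML tag name (`a`, `table`, `div`, `img`, `form`), and the gray fallback for all other tags. `WebGraphColors` is a hypothetical name.

```java
import java.util.Locale;
import java.util.Map;

// Node colors for the web-graph view, as listed in the text.
// ASSUMPTION: each element kind corresponds to one HTML tag name.
final class WebGraphColors {
    private static final Map<String, String> COLORS = Map.of(
            "a", "blue",        // links
            "table", "red",     // tables
            "div", "green",     // DIV tags
            "img", "violet",    // images
            "form", "yellow");  // forms

    // ASSUMPTION: elements not listed in the text are drawn in gray.
    static String colorFor(String tagName) {
        return COLORS.getOrDefault(tagName.toLowerCase(Locale.ROOT), "gray");
    }

    public static void main(String[] args) {
        System.out.println(colorFor("IMG"));
    }
}
```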

Fig. 9. Web repository

Fig. 10. Lexicon construction


Fig. 11. Semantic search result for the key “Operating System”

Fig. 12. Web graph simulated from the search engine results


9. Conclusion

This paper has presented a user profiling strategy that improves a search engine’s performance by identifying the information needs of individual users. Both content-based and session-based clustering techniques are used to cluster the documents. The clustered documents are arranged in the bookshelf data structure for effective and easy information retrieval. They are then ranked using the Vis (Visual) ranking algorithm, and the final result is displayed in visual mode. To automate the identification of groups of similar pages, the approach has been implemented in a Java prototype. This paper proposes an effective method for organizing and visualizing web search results. Finally, the concept-based user profiles can be integrated into the ranking algorithms of a search engine, so that search results are ranked according to individual users’ interests.

References

[1] S. Middleton, D. De Roure, and N. Shadbolt. Capturing knowledge of user preferences: ontologies in recommender systems. In Proceedings of the 1st International Conference on Knowledge Capture, pages 100–107. ACM Press, 2001.

[2] S. Prema and S. K. Jayanthi. Facilitating efficient integrated semantic web search with visualization and data mining techniques. In International Conference on Information & Communication Technologies, pages 437–442. Springer, Sep. 2010.

[3] S. Prema and S. K. Jayanthi. Referenced attribute functional dependency database for visualizing web relational tables. In International Conference on Network and Computer Science (ICNCS 2011). Published in IEEE Xplore; indexed by Ei Compendex and Thomson ISI (ISTP), 2011.

[4] A. K. Jain and R. C. Dubes. Algorithms for Clustering Data. Prentice Hall, Englewood Cliffs, NJ, 1988.

[5] A. K. Jain, M. N. Murty, and P. J. Flynn. Data clustering: a review. ACM Computing Surveys, 31(3):264–323, 1999.

[6] H. Kargupta, I. Hamzaoglu, and B. Stafford. Distributed data mining using an agent based architecture. In Proceedings of Knowledge Discovery and Data Mining, pages 211–214. AAAI Press, 1997.

[7] R. Kosala and H. Blockeel. Web mining research: a survey. ACM SIGKDD Explorations Newsletter, 2(1):1–15, 2000.

[8] G. Salton. Automatic Text Processing: The Transformation, Analysis, and Retrieval of Information by Computer. Addison-Wesley, Reading, MA, 1989.

[9] G. Salton and M. J. McGill. Introduction to Modern Information Retrieval. McGraw-Hill Computer Science Series. McGraw-Hill, New York, 1983.

[10] G. Salton, A. Wong, and C. Yang. A vector space model for automatic indexing. Communications of the ACM, 18(11):613–620, November 1975.

[11] S. Prema and S. K. Jayanthi. Web search results visualization using enhanced branch and bound bookshelf tree incorporated with B3-Vis technique. In International Conference on Machine Learning and Computing (ICMLC 2011), Singapore, February 26–28, 2011. Sponsored by the International Association of Computer Science and Information Technology (IACSIT), the Singapore Institute of Electronics (SIE) and IEEE; listed in IEEE Xplore and indexed by INSPEC, ISI and Ei Compendex.

[12] K. Hammouda and M. Kamel. Efficient phrase-based document indexing for web document clustering. IEEE Transactions on Knowledge and Data Engineering, 16(10):1279–1296, October 2004.

[13] http://www.javadev.org/files/ranking.zip

Biographical notes

Dr. S. K. Jayanthi received the M.Sc., M.Phil., PGDCA and Ph.D. in Computer Science from Bharathiar University in 1987, 1988, 1996 and 2007, respectively. She is currently working as Associate Professor and Head of the Department of Computer Science at Vellalar College for Women. She secured District First Rank in SSLC under Backward Community. Her research interests include Image Processing, Pattern Recognition and Fuzzy Systems. She has guided 18 M.Phil. scholars, and currently 4 M.Phil. scholars and 4 Ph.D. scholars are pursuing their degrees under her supervision. She is a member of ISTE and IEEE and a Life Member of the Indian Science Congress. She has published 6 papers in international journals, one paper in a national journal, and an article in a reputed book. She has presented 15 papers at international conferences/seminars, published in IEEE Xplore, the ACEEE Search Digital Library and an online digital library. These presentations took place at various venues within India and in London (UK), Singapore and Malaysia. She has also presented 16 papers at national conferences/seminars and participated in around 40 workshops/seminars/conferences/FDPs.